Our research indicates that data platform wars have entered an entirely new phase, one where open data is no longer a marketing slogan and governance catalogs are the new control point. We see this as the first of several structural changes to the software industry. We think the next price of entry will be an emerging layer originally conceived by Geoff Moore, systems of intelligence (SoI), which we believe will become a high-value piece of real estate in the AI-optimized software stack.

In this Breaking Analysis, and just a few days removed from Snowflake Summit 2025, we unpack how Snowflake has moved from steward of its pristine proprietary data platform to orchestrator of an ecosystem in which any compute engine can operate on any open data format. Last week, we framed the company’s Rubicon moment, meaning the pivot from analytic warehouse to enabler of intelligent data apps.

Introduction

This year, Snowflake doubled down on its simplicity message and brought that ethos into AI. Importantly, it also extended its governance model further into the open data ecosystem, syncing permissions with Polaris for external Iceberg tables and adding Openflow for data pipelines outside the Snowflake walled garden. Taken together, these moves signal a deliberate strategy to ease storage lock-in and let customers own and control their data independent of any compute engine, something customers are demanding, while tightening Snowflake’s grip on the policy and metadata control plane. At the same time, Snowflake is adding powerful conversational AI and harmonizing data with a metric layer called Semantic Views to become the trusted source feeding agentic applications. Snowflake is also improving its engine, not only to serve traditional analytic workloads but also to strengthen its position with data engineering workloads and data science personas, historically challenging areas for the company.

The bottom line is that all this evolution and innovation is dragging Snowflake up into a new competitive battlefield, which we’ve often discussed in Breaking Analysis episodes. We’re not just talking about Databricks, but a spate of application vendors like Salesforce, ServiceNow, SAP, Palantir, Celonis and others. On balance, we see tremendous opportunities for Snowflake, but these TAM expanders come with risks and key decision points for Snowflake’s future.

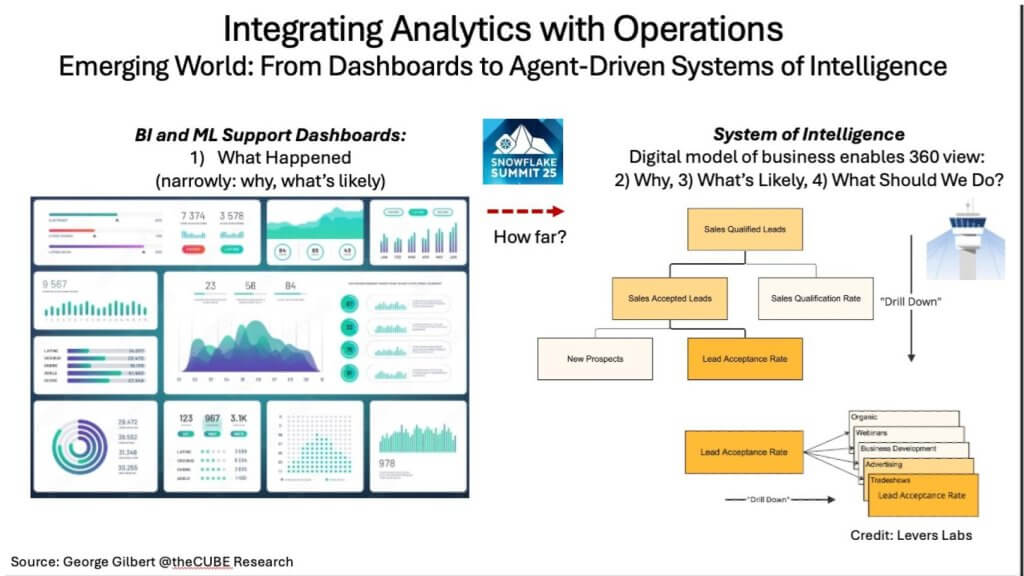

The North Star: From Dashboards to Systems of Intelligence

We believe last week’s “Crossing the Rubicon” thesis was only the prelude. Coming out of Snowflake Summit 2025, the industry is now clearly pivoting from backward-looking analytics toward fully integrated, agent-driven systems of intelligence (SoI). That shift in our view reframes every competitive debate.

In Exhibit 1 above, we show on the left side of the slide a collage of static dashboards – i.e., KPIs that show a historical view of what happened. This has been a useful tool to understand the past and help predict the future, but much more can be done in that realm. Contrast that with the right side, which shows part of a live metric tree, a digital representation of the linked KPIs that reflect how all the processes in the enterprise are linked and drive each other. This part of the metric tree traces every stage from net-new prospect to granular lead source. Here, metrics don’t merely describe performance; they codify cause, project impact, and allow action by *adjusting* the metric-driven business drivers in the same workflow. That is the outcome we believe will become increasingly possible and define value in data platforms for the next decade.
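
To make the metric-tree idea concrete, here is a minimal sketch in Python under our own assumptions. The metric names, the multiplicative funnel formulas, and every number are hypothetical illustrations; they are not taken from Exhibit 1 or from any vendor product. The sketch shows how linked KPIs can be recomputed when one driver is adjusted, which is what lets a tree project impact rather than merely report it.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Optional


@dataclass
class Metric:
    """A node in a metric tree: either a leaf driver or a derived KPI."""
    name: str
    value: float = 0.0  # only meaningful for leaf drivers
    inputs: List["Metric"] = field(default_factory=list)
    formula: Optional[Callable[..., float]] = None

    def evaluate(self) -> float:
        # Leaf drivers return their own value; derived KPIs recompute from inputs.
        if self.formula is None:
            return self.value
        return self.formula(*(m.evaluate() for m in self.inputs))


def project_impact(root: Metric, driver: Metric, new_value: float) -> float:
    """Return the change in the root KPI if one driver were adjusted."""
    baseline = root.evaluate()
    original = driver.value
    driver.value = new_value
    try:
        projected = root.evaluate()
    finally:
        driver.value = original  # a projection must not mutate the live tree
    return projected - baseline


# Hypothetical funnel: prospects -> leads -> opportunities -> new customers.
prospects = Metric("net_new_prospects", value=10_000)
lead_rate = Metric("prospect_to_lead_rate", value=0.20)
opp_rate = Metric("lead_to_opportunity_rate", value=0.25)
win_rate = Metric("win_rate", value=0.30)

leads = Metric("leads", inputs=[prospects, lead_rate], formula=lambda p, r: p * r)
opps = Metric("opportunities", inputs=[leads, opp_rate], formula=lambda l, r: l * r)
customers = Metric("new_customers", inputs=[opps, win_rate], formula=lambda o, r: o * r)

baseline_customers = customers.evaluate()              # about 150 new customers
uplift = project_impact(customers, win_rate, 0.33)     # about 15 more customers
```

The design choice worth noting is that each KPI holds a formula over its inputs instead of a stored number, so a change to any driver flows through every metric that depends on it.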

Our belief is that Snowflake’s architectural bet is to convert openness into leverage by embracing Iceberg, courting application partners, yet keeping the governance and control inside its own service boundary. In our view, that is a viable path to defend the high ground against hyperscalers’ native Iceberg services, aggressive app vendors building their own digital twins, connector incumbents such as Fivetran, and, of course, Databricks, all of whom see Snowflake’s walled garden giving way to an open ecosystem.

By way of analogy, the web’s leap from brochureware to e-commerce mirrors the data world’s leap from dashboarding to operational applications. Just as the “Buy” button fused analytics with transaction flow, SoIs fuse analytics with agentic action. KPIs become control points, not just readouts, much like gauges and dials in a power plant. That meshing of analytic and operational planes is the competitive fulcrum Snowflake must navigate before rivals do.

In our opinion, Snowflake’s post-garden strategy will be judged on whether it can turn cooperative openness into durable control of the system-of-intelligence layer. An SoI emerging as a layer from a different vendor risks diminishing feature differentiation and pricing power in the underlying data platform. The chessboard is set; Databricks’ next moves in the coming week will give us further insight as to how the game will unfold.

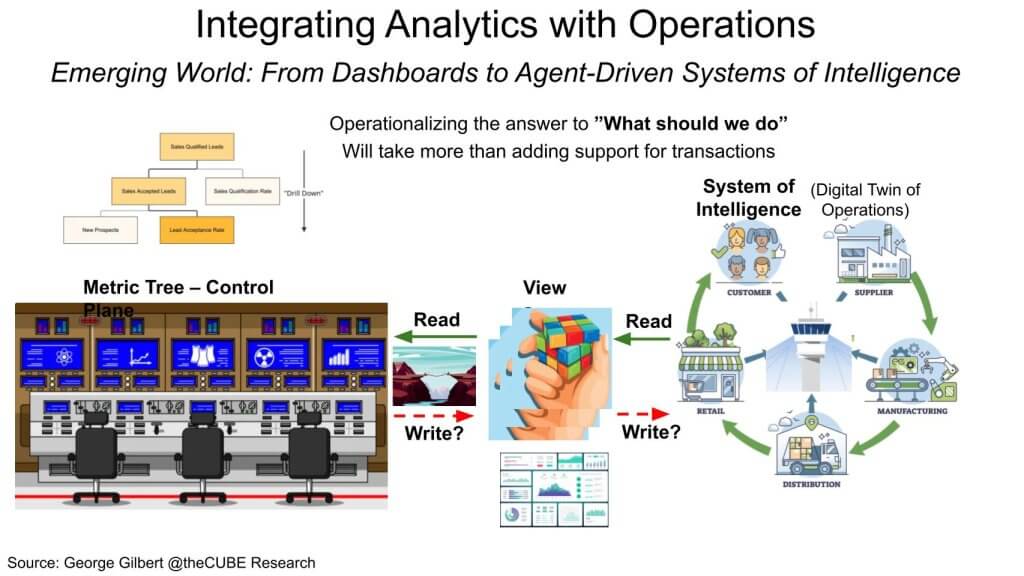

Beyond Read-Only Analytics: Closing the Loop with Operational Applications

Last week’s “Rubicon” metaphor highlighted the point of no return; Exhibit 2 above exposes the challenges in the middle of the river. Our premise is that even if Snowflake can ingest every telemetry stream from a digital twin or other sources, the job is only half done. The metric tree depicted above should act as a real-time control plane, detecting variance and, importantly, enabling operators or their agents to update the operational Systems of Record. Today, that round-trip remains clunky, sometimes impossible. Analytic and operational applications live in hundreds or thousands of silos.

As shown above, on the right-hand side, the green arrows depict how the system-of-intelligence integrates an end-to-end representation of operations that feeds the metric tree. The system detects spikes in cost, slips in conversion rates, and lags in supply chain delivery. But the arrows that will matter most are the red ones pointing back to the SoI to update the CRM, tweak the ad budget, reschedule a shipment. Without those return arrows, the tree is just another BI artifact.
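
The round trip described above can be sketched in a few lines of Python. This is an illustration under stated assumptions, not a description of any Snowflake capability: the `InMemorySystemOfRecord` class, the `PLAYBOOK` mapping, the 10% tolerance, and the operation names are all hypothetical. It shows readings flowing in, variance being detected, and approved actions being written back to an operational system.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Optional, Tuple


@dataclass
class MetricReading:
    name: str
    actual: float
    target: float


@dataclass
class Action:
    system: str     # which system of record to update, e.g. "crm"
    operation: str  # hypothetical operation name
    reason: str


class InMemorySystemOfRecord:
    """Stand-in for a CRM or ERP; a real integration would call the vendor's API."""

    def __init__(self, name: str) -> None:
        self.name = name
        self.applied: List[Action] = []

    def apply(self, action: Action) -> None:
        self.applied.append(action)


# Hypothetical playbook: which remediation to propose for which metric.
PLAYBOOK: Dict[str, Tuple[str, str]] = {
    "conversion_rate": ("crm", "reassign_stalled_leads"),
    "ad_cost_per_lead": ("ads", "reduce_budget"),
}


def detect_variance(reading: MetricReading, tolerance: float = 0.10) -> Optional[float]:
    """Return the relative variance if it exceeds the tolerance, else None."""
    variance = (reading.actual - reading.target) / reading.target
    return variance if abs(variance) > tolerance else None


def close_the_loop(
    readings: List[MetricReading],
    systems: Dict[str, InMemorySystemOfRecord],
    approve: Callable[[Action], bool],
) -> List[Action]:
    """Inbound arrows: readings. Return arrows: approved actions written back."""
    executed: List[Action] = []
    for reading in readings:
        variance = detect_variance(reading)
        if variance is None or reading.name not in PLAYBOOK:
            continue
        system, operation = PLAYBOOK[reading.name]
        action = Action(system, operation, f"{reading.name} off target by {variance:+.0%}")
        if approve(action):  # human supervisor or agent policy check
            systems[system].apply(action)
            executed.append(action)
    return executed


systems = {"crm": InMemorySystemOfRecord("crm"), "ads": InMemorySystemOfRecord("ads")}
readings = [
    MetricReading("conversion_rate", actual=0.03, target=0.04),   # 25% under target
    MetricReading("ad_cost_per_lead", actual=52.0, target=50.0),  # 4% over, within tolerance
]
executed = close_the_loop(readings, systems, approve=lambda action: True)
```

Remove the `systems[system].apply(action)` call and the sketch degrades to exactly what the text warns about: a tree that detects problems but cannot act on them.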

In our opinion, Snowflake knows this. The company telegraphed its ambition with Unistore, yet the recent Crunchy Data acquisition suggests Unistore missed the mark. Integrating analytic and operational applications requires more than adding transactions. It requires harmonizing a unified representation of operational applications, similar to but even richer than the metric layer that harmonizes analytic data.

The point we’ve made in our research over the months and years is that dashboards model the past through OLAP cubes. OLAP uplevels data from “strings” to “things.” Operations on the other hand are modeled through a digital twin or knowledge graph that captures entities, relationships, and temporal state. It uplevels data about “activities” or business processes. The leap from “What happened?” to “Take the optimal course of action” requires mapping OLAP cubes to the knowledge graph. Anything less forces customers into a reverse-ETL hairball that negates the very promise of a live, integrated platform.

We believe the competitive control point is shifting. It used to be the DBMS that owned the data. It’s now the catalog that governs the open data formats. It will eventually be the SoI that represents a high-fidelity state of the business’s end-to-end operations. If Snowflake can bind analytic insight directly to operational action, before Databricks or the hyperscalers do, it secures the green high ground of the system-of-intelligence layer. If others layer the SoI as a new platform on top of the data platform, they will be in a position to capture more of the value that the entire stack creates.

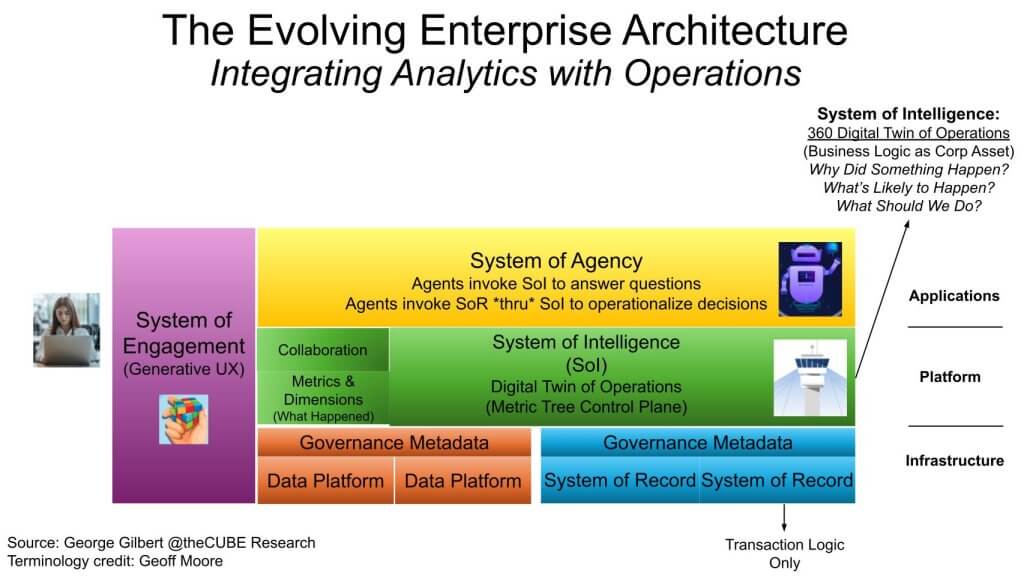

The Next Land-Grab: Engagement, Intelligence, and Agency

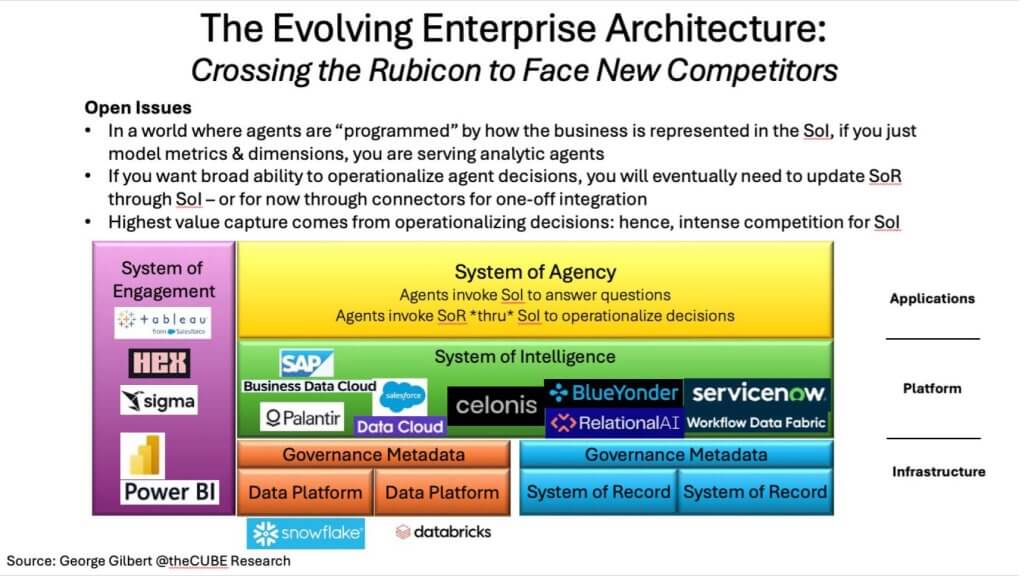

Exhibit 3 above tells the story of a harmonized data foundation supporting three interlocking tiers: Engagement, Intelligence, and Agency. The middle tier, the bright-green system-of-intelligence (SoI) control plane, is where this architectural Rubicon turns both exciting and potentially dangerous.

At the core of the diagram above sits a digital twin, which represents an always-on, 4-D map of every customer, part, order, policy, process, and exception in an enterprise. The SoI treats the business logic previously in application silos as a harmonized corporate asset just as valuable as the data enterprises have been managing for decades. Building an integrated business model has been a false Grail for decades. But we believe this time Systems of Agency will help build it – a topic to be explored in future reports.

The analytic presentation of the data in the SoI may still be summarized in cubes of metrics and dimensions. But that analytic data must still be integrated bidirectionally with the richer operational representation in the knowledge graph. Sitting on top are human-supervised agents that interrogate the SoI (“Why is pipeline velocity sagging?”) and invoke recommended remediations into CRM, ERP, or supply-chain apps through the SoI. The agents bypass the siloed business logic in the legacy Systems of Record (SoR), relying only on the SoR’s transaction logic to preserve low-level integrity.

We believe this SoI, shaded in green above, is the most valuable real estate in the modern stack. In the age of AI, how you model your business “programs” your AI-driven agents. Vendors that master it may disintermediate traditional SoRs and data platforms.

[Listen to how JP Morgan Chase built an early example of a high-value SoI layer on top of Snowflake]

The strategic stakes follow classic platform economics. Let’s call it platform envelopment. When a new layer emerges, incumbents below risk commoditization unless they move up the stack or tether upper-layer innovation tightly to their core. In the procedural era, compute was the infrastructure, the app server the platform. In today’s data-centric era, data is the infrastructure, the model is the platform, and agents are the applications. The vendor that binds those layers with a coherent, governed control plane will dictate the cadence of enterprise AI for the next decade.

This is key to why we talked about that trolling between Benioff and Nadella. Nadella was saying the systems of agency will talk directly to the CRUD databases below the systems of record. He’s trying to say SaaS will go away, because he’s not that competitive there. But we’re saying there’s going to be many layers in between to mediate all that, and that’s why we see vendors who are building that system of intelligence having a critical advantage.

Snowflake’s Post-Summit Blueprint: TAM Expansion to More Workloads or Owning the Stack?

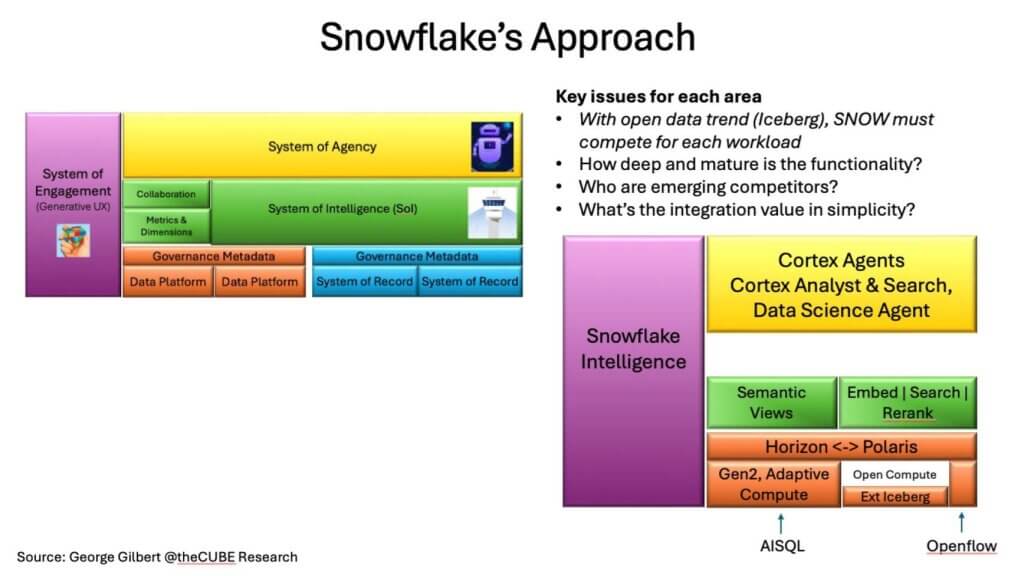

Snowflake’s 2025 Summit unleashed a mini-re:Invent torrent of features. We’ve tried to distill those announcements into the reference diagram shown above in Exhibit 4 and map them to our model of the future software stack. Generally, we see an architecture that aligns with the engagement-intelligence-agency model we laid out earlier, with some key questions.

In the picture above, we show four functional areas, including Snowflake Intelligence (purple), the company’s natural language query capability, which can tap both structured and unstructured data.

- Cortex Agents (yellow, top). Snowflake’s orchestration layer where specialized agents work with different workloads, including structured and unstructured data. This is Snowflake’s first toe in the water on the system-of-agency tier.

- Semantic Views + Embedding & Search Services. Semantic Views translate raw operational data into metrics and dimensions, giving agents a business vocabulary beyond SQL. Embedding and search and reranking services extend the Snowflake platform into unstructured data.

- Horizon + Polaris Bridge (orange). Governance extended to open-table formats—Snowflake’s answer to “policy once, query many” across native and Iceberg data while defending its performance moat.

- Adaptive Compute & Gen 2. Adaptive Compute means transparent sizing (no more T-shirt sizes) with faster, smarter scheduling and routing. Gen 2 is roughly 1.3X more expensive with the promise of up to 2X+ the performance, leading to better overall TCO. Both are key to “may the best engine win” in a world that is increasingly “Iceberg-ized.”

- Open Compute Layer. External engines (e.g., Spark, Trino, Presto) let customers work on Iceberg tables with their engine of choice.

AI SQL extends the familiar SQL programming model to LLM-based functions. Openflow manages data pipelines that were previously outside of Snowflake’s reach. This means an expanded TAM beyond the walled garden by extending governance and control.

Our take. In an open-data era the customer, not the DBMS, owns the data, so engines must fight for every workload on two axes: 1) Best-of-breed capability; and 2) Synergistic integration. Snowflake is clearly leaning into the second lever with tight coupling of governance, semantics, and AI agents to make “using it together” the path of least resistance. Whether that synergy offsets specialized engine competition will define how much of the orange data platform real estate Snowflake ultimately captures.

Notwithstanding the near-term competition from other engines that open table formats enable, the long-term battle we believe will be leveraging the 4-D map of an enterprise, comprising people, places, things, and processes. Importantly, the process data, we believe, will be the most elusive for data platform providers as the likes of Celonis, Salesforce, Oracle, SAP and other application providers will protect that turf and potentially restrict easy access to data platform providers – trying to relegate them to data infrastructure versus the brain of an enterprise. Blue Yonder, the supply chain vendor, is building a system-of-intelligence along with a system-of-agency on top of Snowflake using the RelationalAI knowledge graph to harmonize and represent end-to-end operations.

Snowflake’s AI-Application Framework: Where It Shines and Where the Gaps Remain

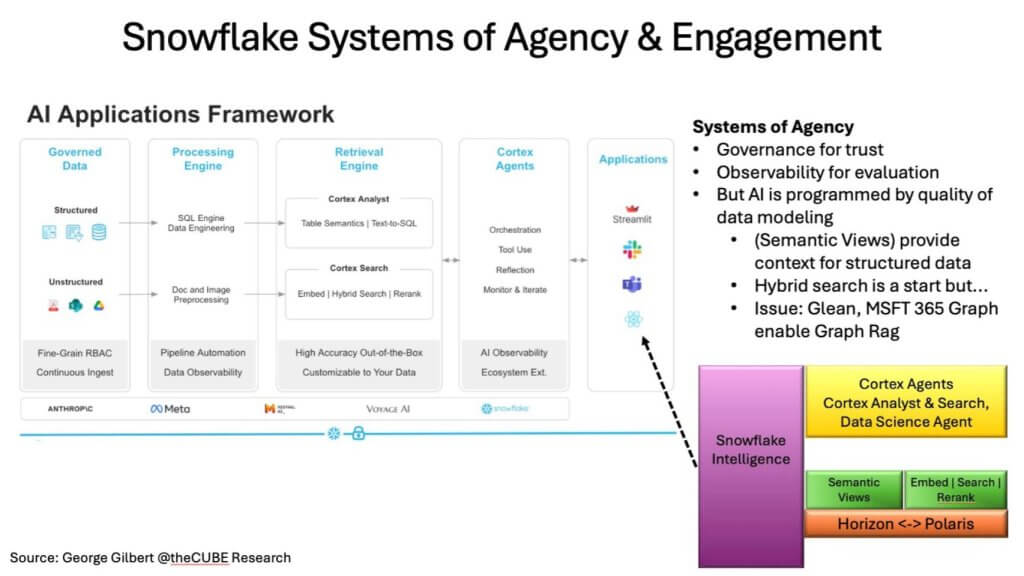

Exhibit 5 above lays out Snowflake’s bid to climb the value stack. We show a guided flow that starts with governed data, runs through multimodal processing and retrieval, and culminates in Cortex-driven agents that interact with end-user applications. In our view, the design goal is to guarantee trust and compliance at ingestion, enrich context, and let agents execute multistep playbooks, while observability, lineage, and policy metadata track every action to enable improvement and trust.

Below is a scorecard that maps the framework’s key layers to Snowflake’s current strengths and the open issues that could invite competition:

| Layer (left ➞ right) | Snowflake Capability Today | Differentiators | Open Questions / Competitive Seams |
|---|---|---|---|
| Governed Data: RBAC, ABAC, masking | Mature for structured data; native Horizon policies extend to Iceberg tables | Unified security model across native & open formats | Can governance remain seamless and migrate ever richer policies to open catalogs? |
| Processing Engine: SQL + doc/image pre-processing | Gen 2 adaptive compute boosts performance; adds unstructured ETL | Single experience for mixed workloads; highest performance engine; best TCO? | Cost/latency trade-offs for data pipelines vs. Spark & Trino on commodity Iceberg |
| Retrieval Engine: Cortex Analyst (text-to-SQL) & Cortex Search (vector + keyword) | Hybrid search combines similarity & precision; semantic views jumpstart model definition with import from BI tools | Bottom-up learning enriches metric definitions over time | Richer “graph RAG” models from Glean, MS 365 Graph provide richer context for search than Snowflake today |
| Cortex Agents: Orchestration, tool use, reflection | Natural-language interface to governed data; hooks for external tools | Policy metadata & AI observability baked in | Still early: will 3rd party Systems of Engagement (e.g. Hex, nexgen BI tools) use their own agents to navigate Semantic Views and Search directly? |
| Applications Tier: Streamlit, Slack, Teams, React apps | Rapid front-end prototyping; Snowpark functions | Tight vertical integration accelerates time-to-value | Same as above: will 3rd parties use their own data agents integrated with their generative UX? |

Why this matters. In an open-data world, the locus of control shifts from owning the database to owning data governance. Snowflake’s current edge lies in the synergy of governance + data semantics + AI orchestration, a combination that leverages Snowflake’s simplicity mantra. The risk is that if richer Graph RAG search or SoIs (knowledge graphs) for harmonizing the operational application estate emerge as best-of-breed alternatives, agents will gravitate to these external services as part of a new control plane.

A key open question is how far up the stack Snowflake is willing to go, in particular with the 4-D map we often discuss. Its relationship with RelationalAI is a potential linchpin. Today, Snowflake is an analytic data platform. RelationalAI can turn it into a System of Intelligence. If Blue Yonder lives up to its promises, it will be one of the most advanced proof points that Snowflake can host Systems of Intelligence and Agency. Success here and its ability to attract other ISVs will be critical to determining whether Snowflake is relegated to data infrastructure and will serve the SoI layer, or control it outright.

The UX Battleground: Semantic Views vs. Generative Front-Ends

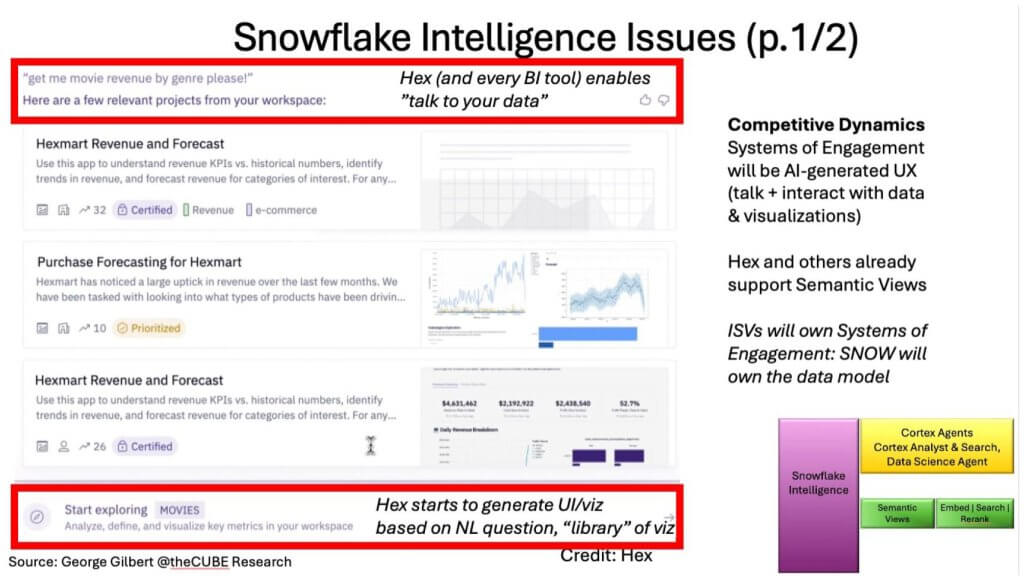

We believe one of Snowflake’s other hurdles is the “talk-to-your-data” promise. Snowflake Intelligence has been one of the company’s “tentpole” initiatives. Working with data is moving from business analysts to every knowledge worker, and that shift elevates the system-of-engagement layer, where nexgen BI vendors such as Hex, Sigma, and others are racing to wrap generative UX around trusted semantic models. We don’t believe this will remain a specialized niche. Microsoft Research has shown a generative UX for interacting with BI. And we believe Tableau will unveil a re-architected BI tool that better embraces a generative UX at Dreamforce this fall.

Exhibit 6 above is instructive. It takes a screenshot from Hex with a prompt of “Give me revenue by movie genre.” A single natural-language query first surfaces already built interactive visualizations, with no SQL and no manual chart-building. The top red box highlights the natural language query. In the middle, Hex returns three recommended, relevant visualizations. The bottom red box offers the option to generate a completely new visualization.

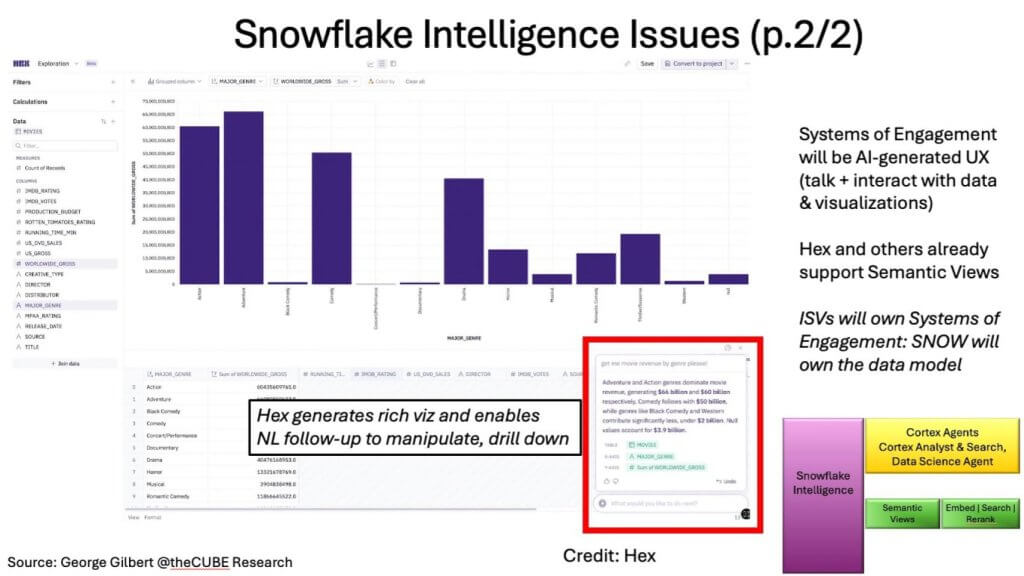

Exhibit 7 above shows the next visualization that Hex automatically generates when the user chooses a new exploration as opposed to an existing one. Not only does it generate a rich visualization, but the user can interact with it in natural language. It showcases what enterprise users will soon deem table stakes, thanks to their daily experiences with these nexgen tools.

Execution Risks on the UX Front

The critical point in the exhibits is that Hex and other specialized tools will probably be able to use their own agents and widgets behind the scenes to generate a richer UX than any data platform vendor. The data platform vendor will own the semantic model of the data. In fact, Hex already supports Snowflake Semantic Views.

Implications. Snowflake can afford to cede certain ground to best-of-breed UX vendors, provided its semantic layer remains the single source of governed truth and captures interaction data so that it can continually improve. The payoff is that every GenAI dashboard or chat interface built on top of Snowflake semantics amplifies its value and further secures its moat, nudging enterprises to centralize even more data under the platform’s policy umbrella.

The risk is equally clear: if ISVs use alternative semantic models and third-party search services, such as Glean or Microsoft 365 Copilot, Snowflake becomes just another data lakehouse. Then it will compete more on cost and performance instead of commanding more of the emerging green System of Intelligence control-plane real estate.

Execution scorecard: Users now expect a chat prompt to return a polished visualization and narrative in one shot. Snowflake’s early “Intelligence” demo showcases the concept, but BI partners have more advanced technology here. To stay relevant, Snowflake’s semantic views must learn from users interacting with 3rd party BI tools. Snowflake’s Cortex agents must also enable these richer tools.

Bottom line. The generative engagement layer is where user loyalty is forged. In our opinion, Snowflake must double down on making its semantic views the indisputable single source of governed context, then let the BI ecosystem run wild on UI innovation.
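
To illustrate why a shared semantic layer matters to third-party front ends, here is a minimal Python sketch of a semantic model compiled to SQL. It is our own simplified illustration and does not reproduce Snowflake’s Semantic Views syntax or API; the `SemanticModel` class, the `ticket_sales` table, and the column names are hypothetical. It reuses the “revenue by movie genre” prompt from the Hex example above.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class SemanticModel:
    """Hypothetical semantic model: business names mapped to SQL expressions."""
    table: str
    dimensions: Dict[str, str]  # business name -> column expression
    metrics: Dict[str, str]     # business name -> aggregate expression

    def compile(self, metrics: List[str], dimensions: List[str]) -> str:
        # Reject anything the governed model does not define.
        unknown = [m for m in metrics if m not in self.metrics]
        unknown += [d for d in dimensions if d not in self.dimensions]
        if unknown:
            raise KeyError(f"not defined in the semantic model: {unknown}")

        select = [f"{self.dimensions[d]} AS {d}" for d in dimensions]
        select += [f"{self.metrics[m]} AS {m}" for m in metrics]
        sql = f"SELECT {', '.join(select)} FROM {self.table}"
        if dimensions:
            sql += " GROUP BY " + ", ".join(self.dimensions[d] for d in dimensions)
        return sql


movies = SemanticModel(
    table="ticket_sales",
    dimensions={"genre": "movie_genre"},
    metrics={"revenue": "SUM(ticket_price * quantity)"},
)

# A front end (or its agent) asks for business terms, not tables and joins.
query = movies.compile(metrics=["revenue"], dimensions=["genre"])
```

Because every tool resolves “revenue” through the same definition, the UI can vary freely while the governed meaning of the metric stays in one place.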

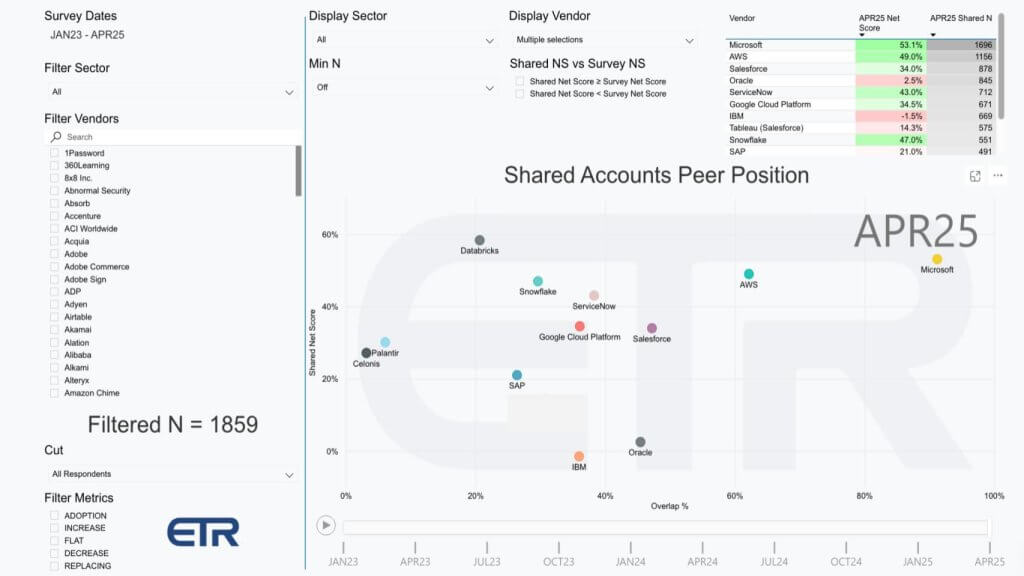

Reading the ETR Tea Leaves: Who Owns the BI Mindshare?

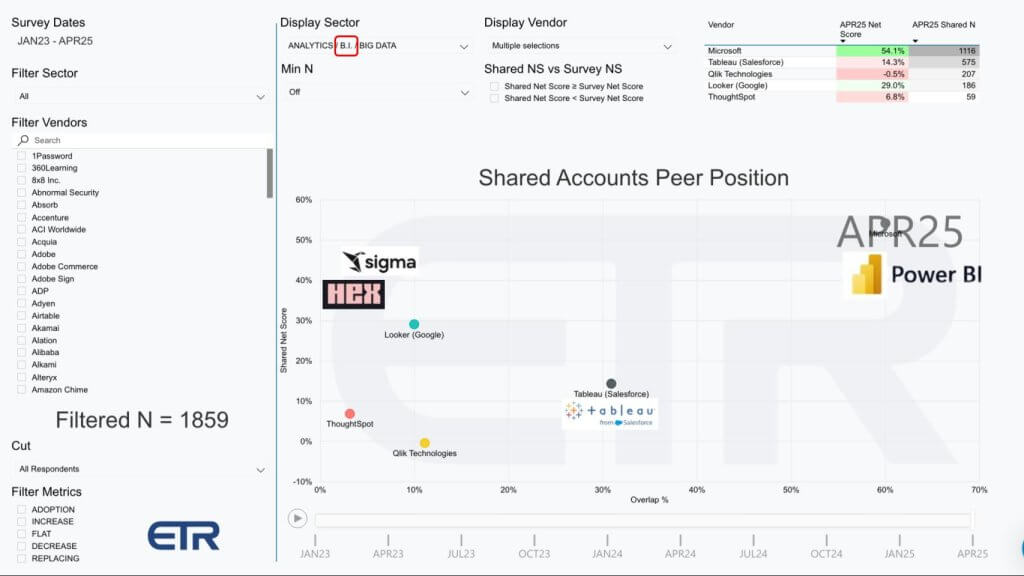

Our latest ETR snapshot, shown above in Exhibit 8, maps spending velocity (shared net score, Y-axis) against account penetration (X-axis) for a handful of BI vendors. The picture reinforces that mindshare goes to those with broad account penetration, but innovation can still upset the balance.

Data Caveat: This is meant only to be representative and mixes three ETR sectors, including Analytics, BI and Big Data. It is not precisely representative of pure play BI vendors in all cases.

- Microsoft’s gravity well. By virtue of Office 365 bundling (and an aggressive Power BI land-and-expand motion), Microsoft sits in the chart’s northeast quadrant, deeply penetrated and still showing elevated spend momentum. For enterprise buyers, adopting the built-in tool often feels like a default, not a decision.

- Looker’s altitude vs. Tableau’s breadth. Google’s Looker scores higher on the velocity axis, signaling healthy momentum even though its installed base remains narrower than Tableau’s. Tableau, for its part, enjoys broad deployment but only middling net-score lift, which provides evidence that Salesforce has yet to reignite growth in what was once the category’s innovation engine.

- ThoughtSpot’s puzzling dip. Previous surveys showed ThoughtSpot punching above its weight in momentum; its current low reading raises questions about go-to-market execution or simply sample noise. Worth watching, because its search-first UX concept dovetails with the generative wave.

- Qlik as context, not contender. Qlik now focuses on data integration and Talend-driven pipelines; its BI heritage keeps it in the mix, but it is no longer the competitive benchmark for next-gen engagement layers.

- Hex and Sigma: Data TBD, ambition clear. ETR hasn’t yet captured statistically significant reads on these up-and-comers, but the anecdotal buzz is encouraging. Both vendors wrap natural-language prompts around governed semantic models; and Sigma’s early experiments in collaborative write-back hint at the very control-loop capabilities we’ve argued are essential for a true system-of-engagement.

Strategic take-away. Today’s BI tools still function primarily as read-only presentation layers. The first crack in that facade is Sigma enabling forecast data collection and reverse-ETL write-back. Snowflake and Databricks are reacting by embedding Postgres to capture lightweight transactions on-cluster, a move that edges them closer to operational interlock but still stops short of updating core SaaS or ERP systems directly.

In our view, the vendor that marries high account penetration with seamless, governed write-back will seize the engagement layer and, by extension, add value to the wider system-of-intelligence agenda. Microsoft’s scale gives it a head start, but the field remains open for a specialist that can prove both velocity and precision without sacrificing governance.

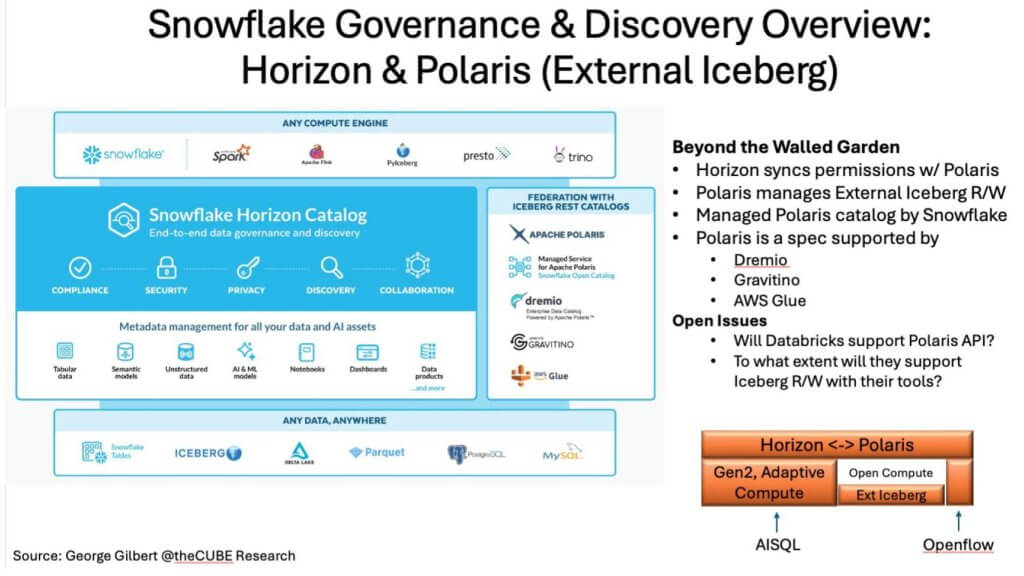

Horizon and Polaris: Snowflake’s Bid to Govern the Open Frontier

Open-table momentum has swung from curiosity to imperative. By federating its proprietary Horizon catalog with the open-source Polaris REST catalog spec for Apache Iceberg, Snowflake is attempting the most consequential move since first pledging Iceberg support in 2022. The blue-box architecture shown above in Exhibit 9 conveys that Horizon remains the nucleus for privacy, lineage, and policy management, while Polaris starts to extend those controls to any Spark, Trino, or Flink job running on external Iceberg tables. Permissions and policies flow outward to the external data. Our premise is this preserves Snowflake’s governance moat even as compute becomes a free-for-all.

This matters for three reasons:

- Control-point shift. In the open-data era the buyer owns the data. The technology vendor that defines and enforces policy owns the trusted relationship. By synchronizing catalogs, Snowflake moves that control point up the stack while expanding to data it didn’t manage previously.

- Any engine, same rules. Enterprises can mix the Snowflake data platform with best-of-breed engines yet keep a single source of truth for governance.

- Competitive test. The onus now falls on rivals – especially Databricks this coming week – to match or exceed the Polaris spec. Unity Catalog can read Iceberg today but still lags on write and bidirectional policy propagation. One would expect that to change this month. Adoption decisions in the next release cycle will reveal whether the ecosystem coalesces around a shared governance API or fractures into vendor-specific silos.

Our take: Horizon-to-Polaris is a calculated bet to embrace openness while cementing Snowflake’s role as the policy root of trust. Success hinges on both richer Horizon metadata that stays ahead of external catalogs and flawless propagation that proves governance is consistent, irrespective of where the data lives or which engine touches it. If Snowflake executes, it retains the high ground in the age of Iceberg; if it stumbles, or competitors out-market it, the control plane shifts to whoever can become the open-source data governance steward.
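
The “any engine, same rules” idea can be sketched as follows. This is a conceptual Python illustration only: it does not use the Polaris or Horizon APIs, and the `GovernanceCatalog` and `Engine` classes, the role names, and the table name are hypothetical. The point it demonstrates is that when policy is defined once in a catalog, every engine that consults the catalog receives the same answer.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Set


@dataclass
class TablePolicy:
    allowed_roles: Set[str]
    # column -> roles allowed to see it unmasked; unlisted columns are clear for all
    unmasked_for: Dict[str, Set[str]] = field(default_factory=dict)


class GovernanceCatalog:
    """Hypothetical central catalog: the single place policies are defined."""

    def __init__(self) -> None:
        self._policies: Dict[str, TablePolicy] = {}

    def set_policy(self, table: str, policy: TablePolicy) -> None:
        self._policies[table] = policy

    def authorize(self, table: str, role: str, columns: List[str]) -> Dict[str, str]:
        """Return per-column treatment ('clear' or 'masked') or raise PermissionError."""
        policy = self._policies.get(table)
        if policy is None or role not in policy.allowed_roles:
            raise PermissionError(f"{role} may not read {table}")
        treatment: Dict[str, str] = {}
        for column in columns:
            cleared_roles = policy.unmasked_for.get(column)
            masked = cleared_roles is not None and role not in cleared_roles
            treatment[column] = "masked" if masked else "clear"
        return treatment


class Engine:
    """Stand-in for any compute engine; it holds no policy logic of its own."""

    def __init__(self, name: str, catalog: GovernanceCatalog) -> None:
        self.name = name
        self.catalog = catalog

    def plan_scan(self, table: str, role: str, columns: List[str]) -> Dict[str, str]:
        return self.catalog.authorize(table, role, columns)


catalog = GovernanceCatalog()
catalog.set_policy(
    "sales.orders",
    TablePolicy(allowed_roles={"analyst", "finance"},
                unmasked_for={"customer_email": {"finance"}}),
)

engines = [Engine("engine_a", catalog), Engine("engine_b", catalog)]
plans = [e.plan_scan("sales.orders", "analyst", ["order_id", "customer_email"])
         for e in engines]
```

In this sketch the engines are interchangeable precisely because none of them stores policy; that is the property the text argues moves the control point from the engine to the catalog.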

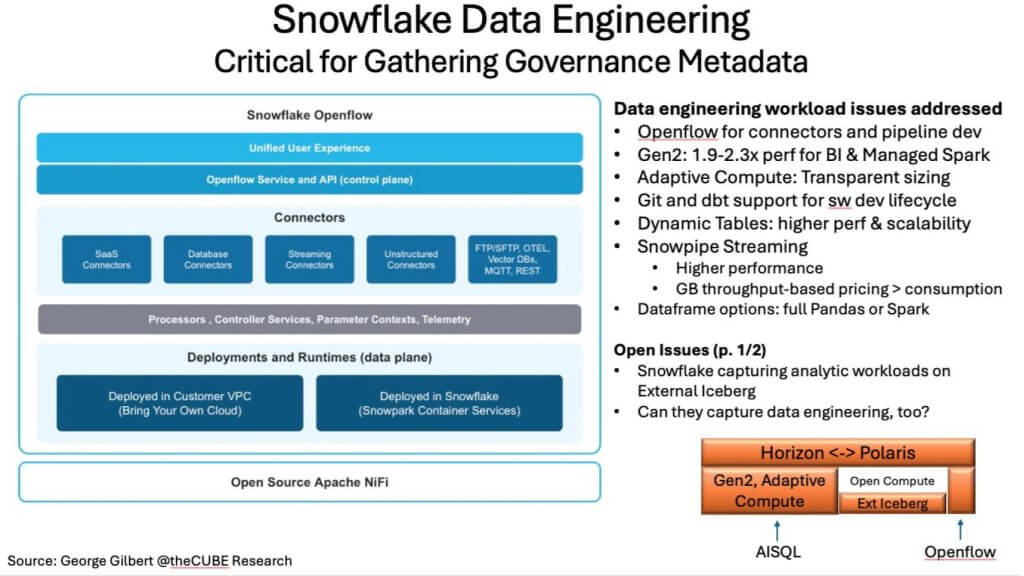

Pipeline Wars Reignited: Can Snowflake Re-Capture Data Engineering Workloads?

Our research indicates that another front in Snowflake’s battle is not simply about smarter insights or richer governance; it is about reclaiming the ingest-and-transform pipelines that have quietly (or not so quietly) bled to cheaper Spark engines over the past two years. Exhibit 10 above lays out the piece parts of its strategy as we see it. Gen2 Warehouses, Adaptive Compute, and the new Openflow control plane form the basis of Snowflake’s push to go hard after these workloads. As well, we believe there is a new pricing tier for lower-value batch workloads, but we have not confirmed this.

Why it matters. A digital twin is only as good as the freshness, lineage, and cost profile of its source data. When Instacart famously shifted high-volume ETL jobs to Databricks, its Snowflake bill reportedly fell by more than half. That move also siphoned off critical lineage metadata, weakening Snowflake’s ability to assert Horizon as the single source of governance truth. The lesson to Snowflake was: lose the upstream pipelines and the downstream control plane eventually fragments.

Snowflake’s playbook.

- Openflow (powered by NiFi) is positioned as more than an ingestion tool; it is the policy-aware conduit that ties source-system lineage directly into the Horizon | Polaris framework. If successful, every bit arriving via Openflow carries built-in permissions and provenance, preserving the governance envelope from origin to agentic action.

- Gen2 Warehouses pledge ~2.1× query throughput, to offset a ~30% higher credit price. For data engineering workloads that are throughput-sensitive but latency-tolerant, the math must net out in favor of total-cost-of-ownership, otherwise Spark will remain the rational choice.

- Adaptive Compute eliminates T-shirt-sizing guesswork, auto-scaling clusters up and down so engineers don’t overprovision for peak. It mirrors capabilities Oracle has long touted and neutralizes a frequent competitive talking point.

- Dynamic Tables & enhanced Snowpipe Streaming aim to blend incremental views with volume-based pricing. We’re still awaiting final confirmation, but this would be attractive for low-latency change data capture (CDC) pipelines.
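
The Gen2 math in the list above can be worked through with a small Python sketch. It assumes, as a simplification of ours, that a job’s runtime falls in inverse proportion to throughput and that cost is the credit price multiplied by runtime; real workloads will not scale this cleanly. The function names are hypothetical, and the 1.5x figure is a what-if of our own, not a Snowflake claim.

```python
def relative_cost_per_job(price_multiplier: float, throughput_multiplier: float) -> float:
    """Cost of a fixed batch of work on the new tier relative to the old (1.0 = parity).

    Assumes runtime shrinks in inverse proportion to throughput.
    """
    if price_multiplier <= 0 or throughput_multiplier <= 0:
        raise ValueError("multipliers must be positive")
    return price_multiplier / throughput_multiplier


def breakeven_throughput(price_multiplier: float) -> float:
    """Throughput gain needed just to match the old tier's cost per job."""
    return price_multiplier


# Figures cited in the text: ~30% higher credit price, ~2.1x query throughput.
claimed = relative_cost_per_job(1.30, 2.1)      # about 0.62, i.e. roughly 38% cheaper per job

# Hypothetical what-if: a pipeline that realizes only a 1.5x speedup.
conservative = relative_cost_per_job(1.30, 1.5)  # about 0.87, still cheaper but a thinner margin

needed = breakeven_throughput(1.30)              # any speedup below 1.3x costs more than before
```

Under these assumptions the pledge nets out favorably only if a data engineering workload actually realizes more than a 1.3x speedup, which is why the independent benchmarks matter.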

What Snowflake must still prove. Open benchmarks against Databricks, Fivetran-run ingest pipelines, and other open compute stacks will determine whether Gen2 truly wins on price-performance for data engineering. Just as importantly, the company must convince data-engineering teams that moving or retaining pipelines inside Snowflake adds net value—via simpler lineage capture, unified policy enforcement, and lower operational friction—without locking them into a premium compute tax.

In our opinion, the stakes are quite high. If Snowflake pulls more data engineering back under Horizon’s umbrella, its governance moat becomes self-reinforcing. If it fails, customers will route lineage through Unity or another external catalog, diluting Snowflake’s control plane to just one of many metadata hubs in a multi-engine world.

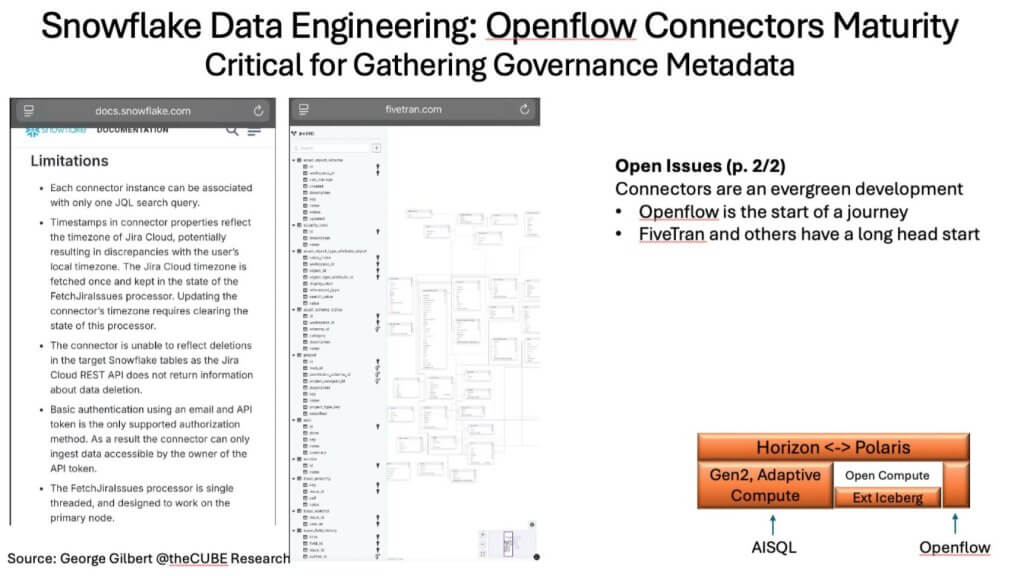

Connector Reality Check: Can Openflow Close the Ingest Gap?

A digital-twin initiative requires a rich, real-time event stream, fed by hundreds of battle-tested pipelines that keep pace via connectors to external applications and data sources. A leader in the field today is Fivetran, along with a handful of startups and, of course, legacy ETL players who have spent a decade hardening their connectors against schema drift, rate-limit problems, and authentication quirks.

Exhibit 11 above shows an example of the immaturity of Snowflake’s offering compared to Fivetran’s hardened solution. On the left you see Snowflake’s own docs spelling out the limitations of its Jira connector: single-threaded Jira pulls, basic auth only, and no delete tracking. Contrast that with the schema richness in the middle, showing Fivetran’s battle-tested map of the same source. That visual alone tells you which player has more battle scars.

(Images of Snowflake and Fivetran documentation from George Fraser, CEO of Fivetran)

Snowflake’s Openflow is derived from its Datavolo acquisition. The strategic risk is if Fivetran manages ingest for critical SaaS data to Iceberg, some of the authoritative lineage, and therefore policy control, may migrate outside Horizon’s purview.

Catching up is a two-front campaign:

- Connector breadth and durability. Building and maintaining hundreds of high-fidelity connectors is an evergreen grind. Snowflake must accelerate with pre-built kits, joint engineering programs, or even further M&A to close the gap Fivetran, Informatica, and others enjoy.

- Low-code transformation authoring. Openflow’s NiFi-based engine promises drag-and-drop pipeline design, but the market is moving toward metadata-driven, AI-assisted pipeline generation. Salesforce’s $8 billion bet on Informatica raises the stakes: low-code is no longer a feature but table stakes for harnessing legacy data at scale.

Until Snowflake proves parity on both fronts, the governance narrative remains susceptible to attack. Horizon’s policy graph is only as complete as the data it ingests; a fragmented ingest layer would hand rival catalogs – Unity or otherwise – the opportunity to assert themselves as the true system of record.

The New Battlefield: Who Commands the System-of-Intelligence High Ground?

Exhibit 12 below widens the lens beyond Snowflake’s roadmap to a subset of the cast of rivals now eyeing the green System-of-Intelligence (SoI) band. Once you declare ambitions to own this layer, you step onto a proving ground already filled with well-funded incumbents like SAP with Business Data Cloud, Salesforce with Data Cloud, ServiceNow with Workflow Data Fabric, Celonis with process mining, Microsoft and Oracle with decades of application context, and niche innovators (and Snowflake partners) such as RelationalAI and Blue Yonder, weaving graph semantics directly into decision automation.

Our research indicates that the SoI is the linchpin of modern enterprise architecture because agents are only as smart as the state of the business represented in the knowledge graph. If a platform controls that graph, it becomes the default policymaker for “why is this happening, what comes next, and what should we do?” In that world, the yellow System-of-Agency tier may gravitate toward the same vendor, cementing a feedback loop of data, context, and action. At a minimum, agents need to be able to continually train on the SoI. Emergent behavior that needs to be repeatable, performant, precise, and explainable also needs to be materialized back into the knowledge graph.

Snowflake and Databricks still dominate the orange analytics foundation, but they must now elevate into the green zone without triggering a frontal assault from application giants that view the same territory as their destiny. Cortex is Snowflake’s opening gambit; Databricks will answer at its summit this coming week. The outcome will determine who writes the rules of engagement and who settles for plumbing-level margins in an increasingly open, Iceberg-driven data economy.

| Architectural Layer | Strategic Role / What It Does | Likely Vendors | Primary Control Point & Value Capture | Competitive Intensity & Key Dynamics | Implications for Snowflake |
|---|---|---|---|---|---|
| System of Engagement (purple, far-left column) | Generative UI/UX that lets business users “talk to data,” visualize results, and interact with agents | Tableau (Salesforce), Hex, Sigma, Power BI | Owns user mind-share and workflow surface; drives demand for governed semantics | BI/UX ISVs racing to wrap GenAI around trusted models; hyperscalers bundling with suites | Must feed these tools rock-solid semantic views or risk disintermediation; partner economics matter |
| System of Agency (yellow band, top) | Orchestrates agents, executes multistep playbooks, invokes SoI for answers and SoR for actions | Cortex (Snowflake), Microsoft Copilot stack, Salesforce Agentforce, UiPath, Celonis (partial) | Becomes the automation “brain”; sets guardrails | Still fluid—hyperscalers, SaaS giants, and independents all staking claims | Early land-grab opportunity; Snowflake must harden Cortex APIs and governance hooks before rivals envelop layer |
| System of Intelligence (green band) | Digital-twin knowledge graph—captures people, processes, things, activities; feeds agents context & causality | SAP Business Data Cloud, Salesforce Data Cloud, Palantir, Celonis, Blue Yonder, ServiceNow Workflow Data Fabric, RelationalAI | Richest semantic model = highest value; programs all higher-level agents | Hottest real estate—apps, hyperscalers, and ops-platforms converging here | Snowflake’s semantic views + Horizon/Polaris must expand into full graph or risk ceding layer to application incumbents |
| Governance Metadata (orange/teal bar) | Policy, lineage, privacy, security across native & Iceberg data; handshake between engines | Horizon (Snowflake) ↔ Polaris REST spec; Unity (Databricks); Informatica, Collibra, etc. | Trust and compliance root-of-truth | Race to become neutral, bidirectional standard for open data | Horizon-Polaris sync must continue to get richer to remain authoritative catalog |
| Data Platform / System of Record (blue & orange base) | Core analytical engines, storage, and transactional systems feeding the stack | Snowflake, Databricks (Iceberg/Lakehouse); traditional SoR apps (SAP, Oracle, Microsoft, etc.) | Performance, cost, and data gravity | Commoditizing via open formats; best-engine wins fights | Gen2 warehouses, adaptive compute, Openflow pipelines must deliver TCO wins to keep workloads in-house |

In short, crossing the Rubicon is not merely about exposing Iceberg tables or adding write-back support; it is about marching an army into new territory already patrolled by SAP, Salesforce, ServiceNow, and Microsoft. Whoever maps the richest, most dynamic enterprise knowledge graph will program the next decade of AI agents, relegating everyone else to more commodity-like infrastructure beneath the green bar.

Market‐Momentum Reality Check

The latest ETR XY snapshot (spending velocity on the Y-axis, account penetration on the X-axis) is shown below in Exhibit 12. It reminds us that architectural ambition has to land inside real budgets with technology buyers.

- Microsoft sits in its normal position, the northeast corner. Its products are ubiquitous, with sustained spend momentum. Power BI rides inside M365 and benefits from its accelerating Copilot push.

- AWS isn’t far behind, buoyed by SageMaker, Bedrock and the new Q natural-language layer, signaling it will monetize AI higher up the stack.

- Salesforce enjoys broad presence but middling velocity; execution hinges on turning Data Cloud and Agentforce (along with Slack, Tableau, Mulesoft, etc.) into a cohesive SoI rather than a bundle of piece parts.

- ServiceNow owns its service-workflow domain and is quietly wiring a knowledge graph beneath it. While it has a smaller footprint in the survey, it has big leverage in its installed base.

- Google Cloud shows improving momentum post-Next and IO announcements; Vertex plus Gemini integrations are starting to resonate with customers and partners.

- Oracle and IBM rank low on account momentum but have large installed bases, offer a value play, and remain cash machines where they land. Both firms pursue differentiated data plays. Oracle is more vertically integrated, while IBM is going after a data integration opportunity across hybrid estates. Both have AI plays that escape pure XY optics in the ETR data set.

- SAP retains deep SoR entrenchment and is now layering Business Data Cloud as its bid for the SoI slot.

- Snowflake and Databricks remain twin pillars of the analytic-infrastructure layer; each must now prove it can climb into the green SoI band without alienating partners, or perhaps choose instead to participate as the world’s best data infrastructure.

- Palantir and Celonis punch above their weight in spend velocity. Palantir is focused on its domain graphs while Celonis is trying to be the Switzerland of data harmonization and agentic control.

Closing Perspective

Our research indicates that the industry is decisively moving beyond static dashboards and walled gardens toward an agent-driven future where value accrues to whoever commands the rich, governed knowledge graph at the heart of the enterprise. Snowflake’s Horizon | Polaris bridge, Gen 2 warehouse, Cortex agents, and Openflow pipelines are strong moves to capture that ground, but they also invite direct confrontation with application giants and hyperscalers who eye the same prize.

In our opinion, the winners over the next decade will be those who can: 1) Guarantee high-quality data harmonization and governance across open data; 2) Deliver price-competitive pipelines that keep metadata in-house; and 3) Build a dynamic system of intelligence that agents can trust and act upon in real time. Everyone else risks relegation to lower-value plumbing in an increasingly open, Iceberg-native world. That is still a good business, but in our view it won’t command the highest valuations.

The Rubicon is in sight. Cross it with caution and from a position of strength, then embark on a campaign to secure the high ground.