Executive Summary

If you have an all-flash laptop, could you ever accept going back to one with a hard disk? Can you imagine having to remember to save data on your mobile device or all-flash laptop? How irritating is using software designed for hard disks and losing data when you forget to save? Could you, as a consumer, go back to using the magnetic storage used in your cameras, cell phones and music players a decade ago? When new technologies are first introduced, they are initially used to replace existing technologies. The second stage is when advanced users (and very occasionally new vendors) find new ways of using these new technologies to solve new problems never even conceived by the original technologists.

Wikibon has researched in depth the early adoption of flash storage by leading IT organizations, and concluded that best-practice use of flash necessitates a radical change in the way IT systems are organized, developed and architected. These changes will directly increase productivity for IT, the application end-user and the organization. The changes will also enable new business and organizational models and drive significant increases in productivity, value-add and revenue.

The thesis of the overall Wikibon research in this area is that within 2 years, the majority of IT installations will be moving to combine workloads together to share data, using NAND flash as the only active storage media. This move together with consolidation onto a cloud-enabled converged infrastructure will reduce IT budgets and improve the productivity of IT, especially IT development. Most dramatic of all will be the improvement in applications by utilizing hugely increased amounts of data. The productivity of end-users will increase, both for business users and customer/partner users. Organizations will be significantly more productive and/or will drive higher revenues. A major contributor to this change will be the migration for all but the most moribund of data from disk to flash. A major stepping stone in that migration will be the replacement of traditional disk and hybrid arrays by all-flash arrays.

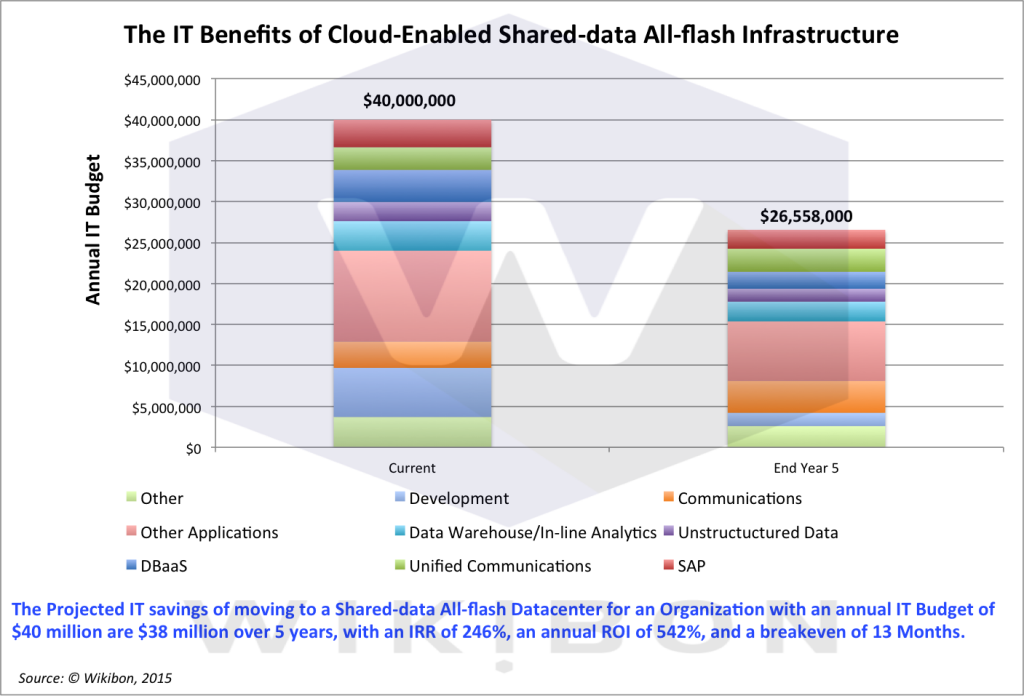

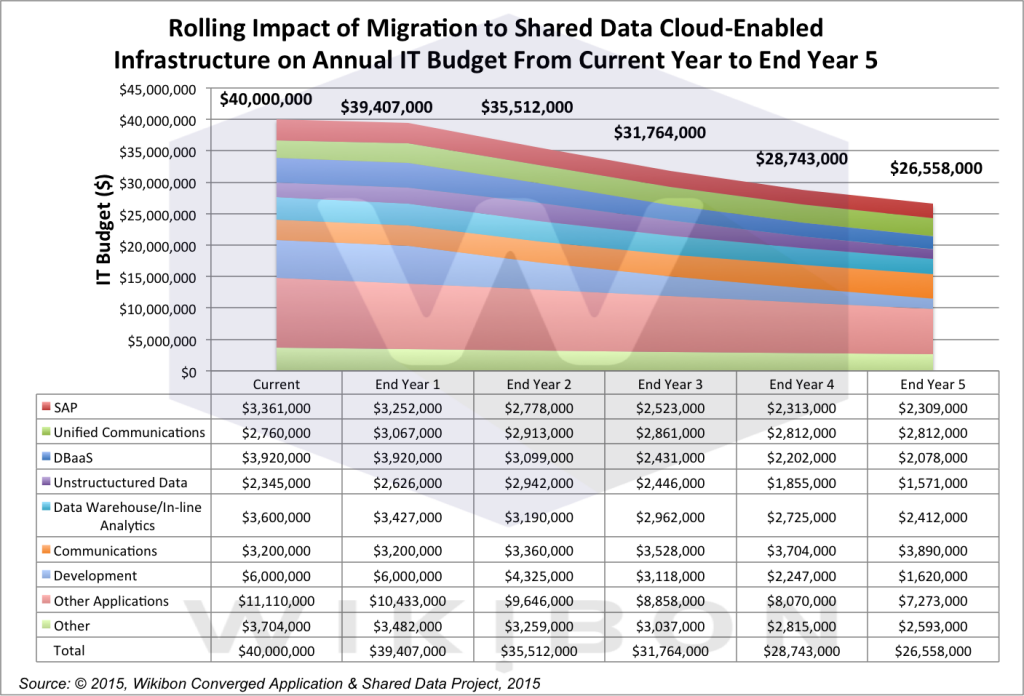

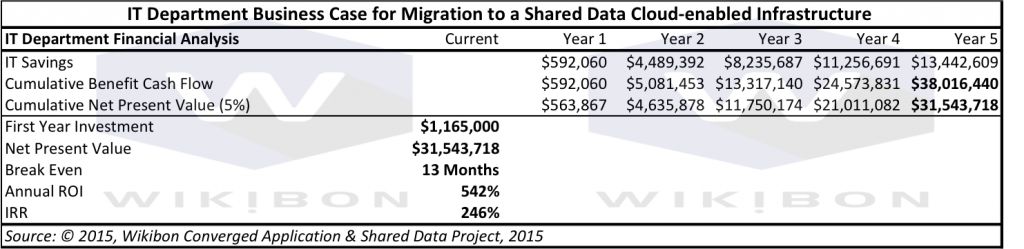

This research builds on previous Wikibon work, including “Migrating to a Shared Cloud-Enabled Infrastructure” and “Evolution of All-Flash Array Architectures”, and focuses on the changes required by IT to deliver on a shared data promise. It concludes that these changes, together with converged infrastructure, have the potential to reduce the typical IT budget by one third over a five-year period while delivering the same functionality with improved response times to the business. Figure 1 below shows that the projected IT savings of moving to a shared-data all-flash converged datacenter for a “Wikibon standard” organization with an IT budget of $40 million will be $38 million over 5 years, with an IRR of 246%, an annual ROI of 542%, and a breakeven of 13 months.

- Figure 1: The IT Benefits of Cloud Enabled All-flash Datacenter

Source: © 2015, Wikibon Converged Application & Shared Data Project, 2015

Future research will look at the potential to maximize the contribution of IT to the business by maintaining or increasing IT budgets, and will conclude that IT budgets should increase to deliver historic improvements in internal productivity and increased business potential.

The Business Benefits for Early Adopters of an All-flash Datacenter

Introduction

When technologies such as NAND flash become available, it is not the technology vendor but the technology deployer that decides the business value and tries new ways of deployment. To understand the potential of an electronic datacenter using all-flash storage, it is valuable to look at the early pioneers to see how flash is used to create business value. Below are the experiences of three early pioneers.

UK Financial Services

An all-flash array was initially deployed to solve a problem with batch processing. There was a two-hour window in the morning to complete all the batch processes, and many database and IO problems stood in the way of meeting the business start-of-day deadline. The choices were to recode the application or deploy flash storage technology. The all-flash array was by far the cheaper and quicker option, and the batch processing went from two hours to less than 30 minutes. This success motivated IT to look at how it might apply flash more effectively in other areas, and it decided to apply it to the developer community. This financial house develops most of its financial services software and has over 25 developers. Creating database copies for the developers was previously a bandwidth-intensive and time-consuming operation that took place on weekends and provided only subset copies of the database. The EMC XtremIO all-flash array allows space-efficient snapshot copies, where no data is copied initially and only metadata is updated. Changes to the snapshot are then written to a deltas file. This enables very fast publication of a new test version of any database. Because no data is copied, every developer, tester and QA person is able to have a full logical copy of the database while sharing a single physical copy. The combination of improved response time, higher bandwidth and earlier access allows developer productivity to double, and produces much higher quality code from the developers for QA. In addition, the number of shared databases has doubled, allowing simpler schema and greater end-user functionality to be developed in less time. As a result of these experiences, IT is implementing an all-flash storage strategy.
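The snapshot behaviour described above (no data copied at creation, only metadata updated, later changes written to deltas) can be illustrated with a toy in-memory model. This is a minimal sketch and not the XtremIO implementation. The `Volume` and `Snapshot` classes are hypothetical names, and the sketch assumes the base volume is not modified after snapshots are taken.

```python
class Volume:
    """Toy base volume: block number -> data (hypothetical model)."""

    def __init__(self, blocks):
        self.blocks = dict(blocks)

    def snapshot(self):
        # Creating a snapshot copies no data; it only records a
        # reference to the base volume (the "metadata update").
        return Snapshot(self)


class Snapshot:
    """Writable logical copy that stores only its own changed blocks."""

    def __init__(self, base):
        self.base = base
        self.deltas = {}  # block number -> data written after the snapshot

    def read(self, block_no):
        # Changed blocks come from the deltas; all others are shared.
        if block_no in self.deltas:
            return self.deltas[block_no]
        return self.base.blocks[block_no]

    def write(self, block_no, data):
        # Writes go to the deltas and never touch the shared physical copy.
        self.deltas[block_no] = data

    def physical_blocks(self):
        # Extra physical space consumed by this logical copy.
        return len(self.deltas)


# One physical database, 25 full logical copies for developers.
production = Volume({0: "accounts", 1: "trades", 2: "prices"})
dev_copies = [production.snapshot() for _ in range(25)]
dev_copies[0].write(1, "test trades")
extra_blocks = sum(copy.physical_blocks() for copy in dev_copies)
```

In this sketch the 25 logical copies together consume one extra block, which is why every developer can be given a full copy rather than a subset.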

US ISV

A US independent software vendor (ISV) is in the process of moving to a continuous development model. By combining the new development philosophy with a much better storage infrastructure on an all-flash array, the ISV has accelerated and enhanced its move to a continuous development environment. A key metric for success in the marketplace for this ISV is enabling new function in their core products. They measure the number of updates in a new release, where an update can be anything from fixing a bug to a new function. The ISV has improved this by a factor of three, from 600 to 1,800 updates per release. This is enabling the ISV to be far more competitive in the marketplace. This ISV has also implemented an all-flash storage strategy for its datacenter.

US Electronics Distributor

Previously this US distributor had to schedule when different modules of its core distribution ISV package could be used. For example, the priority during the day was given to the call center, with procurement only allowed to run at night. By moving to an all-flash environment, this distributor was able to allow all departments to use any module at any time. This enabled an increase in revenue of 30% over the next 18 months with no additional headcount or increase in support costs. These benefits directly improved the profitability and level of service of the distributor and improved overall profitability by 20%. The ISV developer of the distributor package now recommends an all-flash environment for its software.

Lessons Learned

All of these early pioneers started out to solve a specific performance problem. The benefits of predictable performance, lower operational costs and greater IT productivity (especially in development) led all of them to rapidly adopt an all-flash strategy.

Flash & Hard Disk Technology Costs

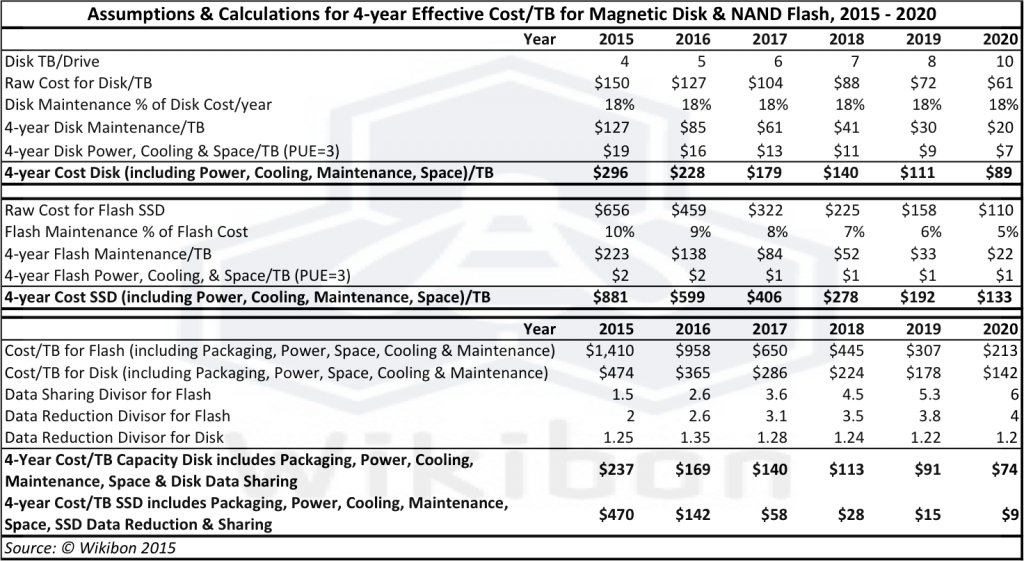

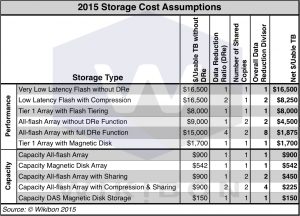

Table 1: 2015 Storage Cost Assumptions

Source: © Wikibon 2015. 4-Year Cost/TB Magnetic Disk includes Power, Maintenance, Space & Disk Data Reduction. 4-year Cost/TB SSD includes Power, Maintenance, Space, SSD Data Reduction & Data Sharing.

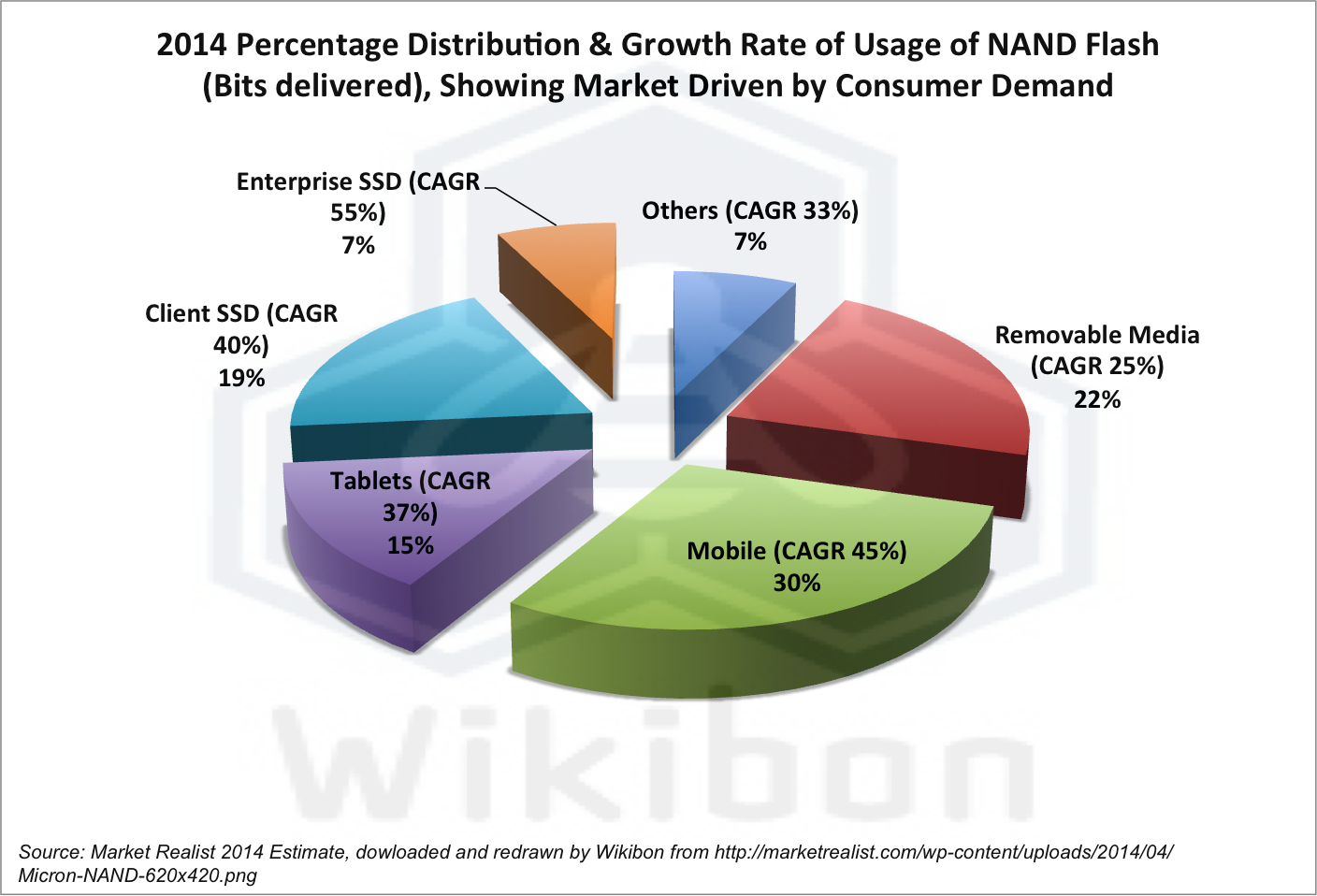

Source: Market Realist 2014 Estimate, downloaded and redrawn by Wikibon from http://marketrealist.com/wp-content/uploads/2014/04/Micron-NAND-620×420.png

Figure 2 shows that the overall growth of NAND bits delivered in 2014 is 38%. Only 7% of that is currently Enterprise SSD. Although this sector is growing the fastest at 55%/year, at this rate it will still be less than 15% by the end of the decade. The SSD form factor is changing as NAND flash manufacturers (e.g. Micron & SanDisk) are establishing increasing value by delivering more consumer-level flash and greater functionality within larger form factors. The overall growth rate of NAND bits is being driven by huge demands from consumer technology, with mobile the greatest consumer of NAND flash storage (Figure 2). The increasing demand for consumer flash and the larger form factors are key to the rapid reduction in flash prices. Samsung and others have invested in the new 3D flash foundries delivering NAND chips with 15 nanometer and lower lines. These investments in foundries and new technologies will drive increased flash density and reduced flash costs for consumers through to 2020 and beyond, with enterprise flash hanging on to the coattails of consumer flash.
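The "less than 15% by the end of the decade" statement can be checked with a short compounding calculation. This is a sketch under a stated assumption: both the 55% enterprise growth rate and the 38% overall growth rate are held constant for six years (2014 to 2020), which the text implies with "at this rate" but does not spell out. The function name is illustrative.

```python
def projected_share(share_now, segment_growth, total_growth, years):
    """Segment share after both growth rates compound for `years` years."""
    return share_now * ((1 + segment_growth) / (1 + total_growth)) ** years


# Figures from the text: 7% share, 55%/year segment growth, 38%/year overall.
# Assumption: rates stay constant from 2014 through 2020 (six years).
share_2020 = projected_share(0.07, 0.55, 0.38, years=6)
print(f"Projected enterprise SSD share in 2020: {share_2020:.1%}")
```

Under these assumptions the enterprise share roughly doubles to about 14%, consistent with the figure quoted above.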

There is little to no investment going into traditional magnetic disks for new functionality. The last throw of the die is the 10TB helium filled drives with overlapping shingled write technology being introduced by HGST, which are built for low-speed sequential processing and suitable only for WORN (write once read never) archiving applications.

Table 1 shows the cost of performance and capacity of HDD and flash technologies in 2015. The cost difference between the very lowest flash storage with no data reduction technology ($16,500/terabyte with no DRe) and the lowest direct attached magnetic disk storage (DAS at $150/terabyte) is over 100 times. Also in the table are the data reduction ratios that can be reasonably obtained in 2015, and the number of copies that can reasonably be obtained in 2015. The Wikibon research in the posting called “Evolution of All-Flash Array Architectures” examines the technology cost reductions over time, and looks at what can reasonably be achieved in data reduction and number of copies. The detailed analysis and assumptions are shown in the previous research in Figure 2, Figure Footnotes-1, and Table Footnotes-1 below in this research.

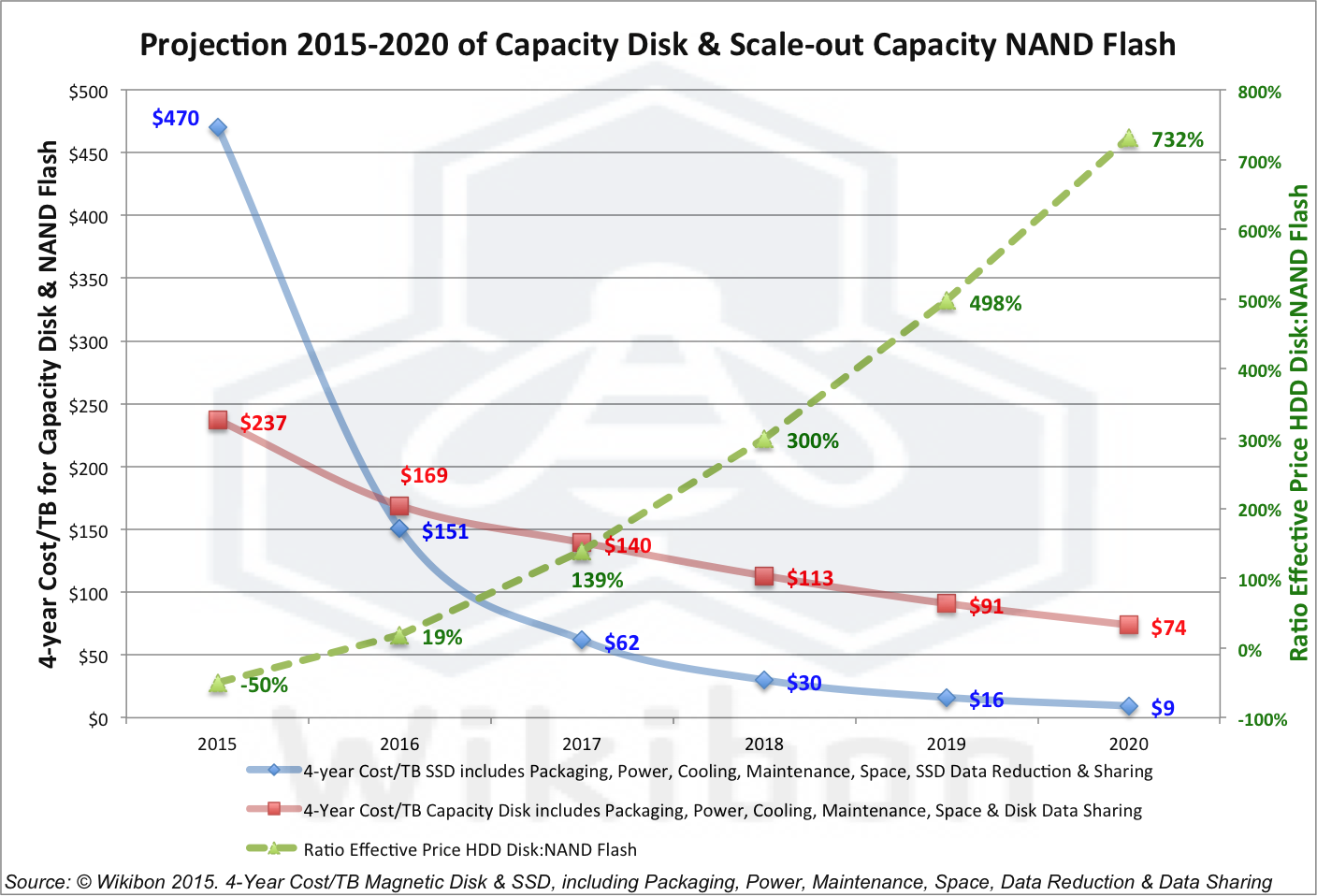

Wikibon’s analysis of the 4-year effective cost of NAND flash storage (Figure 3) shows it dropping from about $470/terabyte in 2015 to $9/terabyte in 2020. That is a fifty times (50x) reduction in cost over 5 years. In stark contrast, capacity magnetic disk will drop from $237/terabyte to $74/terabyte over the same period, only a three times (3x) reduction in cost. The green line in Figure 3 shows the ratio between magnetic disk costs and NAND flash costs, climbing from -50% in 2015 to over 700% by the end of the decade.
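The ratios quoted above follow directly from the four cost figures in the text. The sketch below reproduces them. It assumes the green line in Figure 3 plots how much more disk costs than flash, expressed as a fraction of the flash cost. That reading matches the -50% and 700%+ endpoints but is an interpretation, not something the source states.

```python
# 4-year effective cost in $/terabyte, taken from the text.
flash = {2015: 470, 2020: 9}
disk = {2015: 237, 2020: 74}

flash_reduction = flash[2015] / flash[2020]  # about 52x
disk_reduction = disk[2015] / disk[2020]     # about 3.2x


def disk_premium(year):
    """Extra cost of disk over flash, as a fraction of the flash cost.

    Assumed definition of the green line in Figure 3.
    """
    return (disk[year] - flash[year]) / flash[year]


print(f"Flash cost reduction: {flash_reduction:.0f}x")
print(f"Disk cost reduction:  {disk_reduction:.1f}x")
print(f"Disk premium 2015:    {disk_premium(2015):.0%}")
print(f"Disk premium 2020:    {disk_premium(2020):.0%}")
```

A negative premium in 2015 means disk is still the cheaper medium, and a premium above 700% in 2020 corresponds to flash being more than 7 times cheaper.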

To repeat this conclusion: if NAND flash storage is deployed correctly in the data center, it will be lower in cost in 2017, and be over 7 times cheaper than disk by the end of the decade.

This conclusion does not mean that magnetic disk drives will disappear overnight. Like all displaced technologies, the change will take place over many years, and there will be opportunities for well managed magnetic disk manufacturers to ride the cash-cow for the next decade.

However, these changes mean that datacenters will be organized very differently, IT operations will be very different, and middleware and applications will be designed differently. A starting point will be the modern all-flash datacenter, as part of a consolidated converged cloud-enabled datacenter.

Source: © Wikibon 2015. 4-Year Cost/TB Magnetic Disk includes Power, Maintenance, Space & Disk Data Reduction. 4-year Cost/TB SSD includes Power, Maintenance, Space, SSD Data Reduction & Data Sharing. The Key assumptions for these projections are laid out in detail in Table Footnotes-1 in the Footnotes below

Deploying an All-flash Data Center

The two keys for organizations to achieve these reductions in data center costs are as follows:

- Measure and minimize the number of physical copies of data, and increase the number of logical copies deployed from this data

- Plan to combine transactional, data warehouse & development data initially, and all data within five years.

This is antithetical to the way that most data centers are organized and operated today, where transactional, business intelligence and development usually have their own storage silos, and where data is copied from one silo to another. The main technical reason behind this topology is the difficulty of sharing HDD storage, which has very low access density. Any other processes sharing at the physical data level can affect performance in unpredictable ways, especially if they affect the cache or interfere with the working set size. Operations such as moving to/from row-based to column-based operations or moving data while it is being used are all challenging. The best way of reading and writing traditional disk storage is in sequential mode, which again limits the ability to share data and the ways that data can be accessed and processed.

Flash allows true virtualization of data, which in turn allows data to be aggressively reused with far fewer physical copies needed. Flash is orders of magnitude superior for random workloads, and can be striped in the same way as magnetic disk to deliver very high bandwidth when needed, at throughput rates orders of magnitude higher than disk. There are 2015 product designs that are going to allow the uploading of a terabyte of data from flash to DRAM within a minute.
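To put the "terabyte within a minute" figure in perspective, the sustained bandwidth it implies can be worked out directly. This is a rough sketch that assumes a decimal terabyte (10^12 bytes) and ignores protocol overhead. The function name is illustrative.

```python
TERABYTE_BYTES = 10**12  # assumption: decimal terabyte


def required_bandwidth_gbit_per_s(num_bytes, seconds):
    """Sustained bandwidth in Gbit/s needed to move num_bytes in seconds."""
    return num_bytes * 8 / seconds / 10**9


needed = required_bandwidth_gbit_per_s(TERABYTE_BYTES, 60)
print(f"{needed:.0f} Gbit/s (about {needed / 8:.1f} GB/s)")
```

The result, roughly 133 Gbit/s, is of the same order as the 150 Gbits/second quoted for PCIe switching topologies in the conclusions of this research.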

Applications and the operational storage systems that support them today need large caches and small working sets to operate effectively. It is essentially impossible to mix operational row-based systems with business intelligence column-based systems. The virtualization of storage with flash technologies makes this sharing possible and practical, and will be a major factor in removing the ETL bottlenecks in creating reports from operational systems.

These changes mean that datacenters will have to be organized very differently:

- The more data held in consolidated storage, the greater the savings there will be in reducing copies

- A scale out architecture is absolutely required to allow sufficient processor power to be applied to manage the storage and the metadata about the storage. The more data that is shared, the more processor power will be required.

- The cost of maintenance on NAND storage will reduce to less than 5% of initial purchase price per year. The only maintenance of importance will be to update software functionality on the processors and the controllers within the flash units.

- Flash units will move away from the traditional SSD format, which was used to take early advantage of disk ecosystems and infrastructure.

- Flash drives are more reliable than magnetic disk drives, degrade more predictably than traditional disk drives, and will improve system reliability and availability.

- Flash will have a much longer life in data centers, increasing to 10-15 years and longer.

- Dynamic addition of capacity with different technology levels will be an essential design point for storage systems.

- No tiering is required for 95%+ of data, as tiering uses bandwidth and for the most part leads to unnecessary complexity

- Simple tiering is only required for less than 5% of enterprise data with very low change rate, low historical data access and no dynamic requirement for transfer

- Full storage reduction techniques and sharing data have a multiplicative impact on the savings from flash, compared to traditional operational techniques.

- Future storage systems must be able to keep metadata about each physical copy of data, the number of logical copies deployed from this data, and the usage and performance of each of the physical and logical copies.

- Space-efficient snapshots will be the major (and usually the only) way that logical copies of data are created

- Sophisticated snapshot systems will allow much higher levels of snapshots to be made, including snapshots being taken every few seconds.

- Space-efficient snapshots of flash storage will allow finer-grained RPO & RTO recovery by application or data store by separating out the deltas that need to be taken offsite.

- Space-efficient snapshots will completely change the current backup and recovery software, and will obviate the need for most de-duplication appliances. These snapshots will enable less complex and quicker backup and recovery focused on application priority. The integration of new methods of backup with a cloud-enabled converged infrastructure will allow business continuance systems to exploit cloud services.

- Space-efficient snapshots on flash storage will replace traditional replication methods, both synchronous and asynchronous on traditional storage.

- Virtualization & sharing of data both require extremely high levels of metadata protection against:

  - Accidental loss

  - Microcode failure

  - Technology failure

  - Malicious long-term/short-term hacking.
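The multiplicative effect of data reduction and data sharing noted in the list above can be made concrete with a small calculation. This is a sketch only: the formula is an assumed simplification, and the input values are purely illustrative rather than Wikibon's figures from Table 1.

```python
def effective_cost_per_logical_tb(raw_cost_per_tb, reduction_ratio, logical_copies):
    """Cost per logical terabyte when one physical copy is both reduced
    (compression, de-duplication) and shared by several logical copies.

    Assumed simplification: the two factors multiply.
    """
    return raw_cost_per_tb / (reduction_ratio * logical_copies)


# Hypothetical inputs: $1,000/TB raw, 4:1 data reduction, 5 logical copies
# served from each physical copy via space-efficient snapshots.
reduction_only = effective_cost_per_logical_tb(1000, 4, 1)
reduction_and_sharing = effective_cost_per_logical_tb(1000, 4, 5)
print(reduction_only, reduction_and_sharing)
```

With these illustrative inputs, reduction alone gives a 4x saving, while reduction combined with sharing gives 20x, which is why minimizing physical copies matters as much as the media price.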

Managing an All-flash Data Center

The management of NAND flash data will also differ significantly from traditional storage arrays.

- Sophisticated snapshot change management systems will be needed across all flash data deployed.

- Since NAND flash storage is so much faster than traditional storage, there will be a need for sophisticated catalogs of physical (and logical) copy data creation, modification and deletion. Catalogs within the storage systems will be a pre-requisite for effective data governance, compliance and risk management.

- Catalogs will need to span multiple data sources and link to remote data sources. The metadata created will be central to greater data sharing and reuse.

- Backup & Recovery management systems will exploit snapshots and deltas, be fully automated and be designed at the application level to allow dynamic changing of RPO and RTO.

- The storage systems will enable full access to data, metadata and management data via open APIs, to allow integration into platforms of choice.

- Storage systems will need extensive quality of service management, including:

  - Minimum & maximum limits for IOPS, bandwidth and response time,

  - Management of short-term bursting,

  - Different QoSs for snaps,

  - Full application IO view.

- Storage systems will need sophisticated monitoring and reporting:

  - By application

  - By copy

  - By volume

  - By middleware.

- Storage systems will need to support automated migration of moribund data to HDD or very high capacity flash, with the option of retaining metadata on flash.

- Storage systems will enable full orchestration & workflow automation support for all platforms.
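One common way to combine a maximum IOPS limit with management of short-term bursting, as called for in the quality of service items above, is a token bucket. The sketch below is an illustration of that general technique, not a description of any vendor's QoS implementation. The `IopsLimiter` class and its parameters are hypothetical, it covers only the maximum limit (not minimum guarantees), and time is passed in explicitly so the behaviour is deterministic.

```python
class IopsLimiter:
    """Token bucket: sustained rate `max_iops`, bursts of up to `burst` IOs."""

    def __init__(self, max_iops, burst):
        self.rate = max_iops      # tokens added per second
        self.capacity = burst     # largest burst allowed at once
        self.tokens = burst       # start with a full bucket
        self.last = 0.0           # time of the previous call, in seconds

    def allow(self, now, ios=1):
        # Refill for the elapsed time, capped at the burst capacity.
        elapsed = now - self.last
        self.tokens = min(self.capacity, self.tokens + elapsed * self.rate)
        self.last = now
        # Admit the request only if enough tokens are available.
        if self.tokens >= ios:
            self.tokens -= ios
            return True
        return False


limiter = IopsLimiter(max_iops=1000, burst=200)
first_burst = limiter.allow(now=0.0, ios=200)   # uses the whole burst allowance
over_limit = limiter.allow(now=0.0, ios=1)      # bucket is empty
after_refill = limiter.allow(now=0.5, ios=200)  # refilled, capped at 200 tokens
```

A separate limiter per volume or per snapshot would be one way to express different QoS levels for snaps, again as an assumption rather than a documented design.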

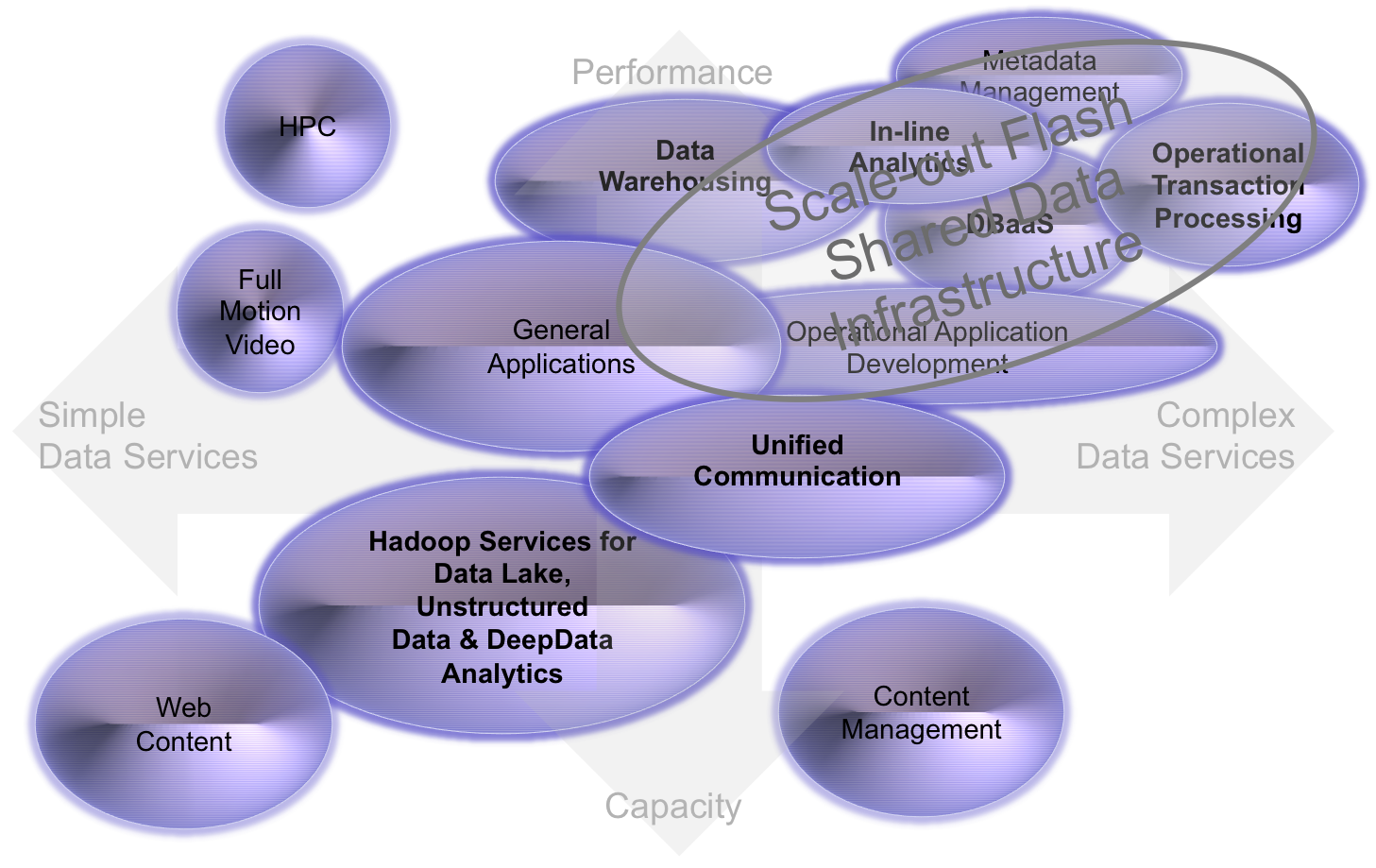

Business Case for Shared Data Cloud-enabled Infrastructure

Figure 4 shows typical datacenter workloads on two axes, a data services axis (horizontal) from simple to complex, and a performance/capacity axis (vertical). The most demanding workloads are on the top right, and include transactional processing, database-as-a-service, inline analytics, data warehousing, and application development. These workloads also have high levels of potential data sharing, including multiple copies of databases for recovery, ETL input to data warehousing and real-time input to inline analytics. These applications are the most demanding for IO response time (e.g., operational database systems with high locking levels) and for IO bandwidth (e.g., ETL for creating data warehousing). Snapshots are used as the primary and only way of copying logical data.

- Figure 4: Key Initial Workloads for Establishing a Shared-data Cloud-enabled All-flash Infrastructure

Source: © Wikibon 2015. 4-Year Cost/TB Magnetic Disk includes Power, Maintenance, Space & Disk Data Reduction. 4-year Cost/TB SSD includes Power, Maintenance, Space, SSD Data Reduction & Data Sharing.

In previous research, “Migrating to a Shared Cloud-Enabled Infrastructure”, Wikibon showed that IT costs could be reduced by 24% over 5 years. This is shown in Figure 9 of that research, which assumed that development and communication costs would remain constant.

Wikibon has adjusted this research to include different assumptions for development and communications. IT development is shown decreasing significantly over the five-year period, as new flash storage with data sharing techniques is introduced, together with improved development flows using techniques such as continuous development. Wikibon believes that organizations will not decide to cut developers, but rather will apply these resources to improving development projects.

Communication was seen in the original research as remaining flat. However, faster storage and increased cloud computing will require communication infrastructure to improve to avoid becoming a bottleneck. Wikibon believes there will be a modest increase in comparative spending on communication technology, from 8% of IT budget currently to 15% in five years' time.

These adjustments are shown in Figure 5 below, and show that an all-flash datacenter together with converged infrastructure has the potential to reduce the typical IT budget by one third over a five-year period, while delivering the same functionality with improved response times to the business. Figure 1 in the executive summary above is based on Figure 5, and shows that the projected IT savings of moving to a shared-data all-flash converged datacenter for a “Wikibon standard” organization with an IT budget of $40 million will be $38 million over 5 years, with an IRR of 246%, an annual ROI of 542%, and a breakeven of 13 months. The details of this are shown in Table 2 in the next section.

Source: © 2015, Wikibon Converged Application & Shared Data Project, 2015

Conclusions and Recommendations

The most striking conclusion of this research is the changing cost equation for flash storage systems. Early users focused initially on solving performance problems with flash, but the focus has quickly moved to sharing data instead of copying it. Early users found themselves consolidating physical data as much as possible, both for cost and performance reasons. The initial benefits were better and more consistent response times for OLTP and batch systems, with greater throughput and “very happy” end users and business managers. IT productivity for operational staff was the next obvious and important focus for improvement by these early adopters. After addressing the operational benefits, application development was the next target: in general, application development productivity was improved by 2-3 times.

Figure 3 above shows the projection of the four-year costs of NAND flash storage and traditional magnetic disk storage, including costs of technology, power, cooling, space and maintenance. Also included is the amount of data reduction and data sharing enabled by flash technology. All the assumptions are given in the Footnotes-1 Table in the Footnotes below. The projection shows the crossover in cost in 2016, with NAND flash storage becoming 7 times lower cost than traditional disk by 2020.

The overall IT business case for migration to a shared data cloud-enabled converged infrastructure is given in Table 2 below. It shows a break-even in 13 months and a rate of return (IRR) of over 200%. This is an IT business case alone, taking into account lower technology costs and lower IT staff costs in operations and development. The IT budget can be reduced by 34% over five years, with a better and more responsive IT service that matches the capabilities of cloud service providers.

Source: © 2015, Wikibon Converged Application & Shared Data Project, 2015

Wikibon believes that starting this migration and moving to this environment will earn IT the right to include the business benefits of the migration. The initial business benefits of this migration will be better and more consistent response times for the vast majority of applications. Elapsed time for IT processes such as loading data warehouses and development projects will be reduced. Wikibon believes that these will be seen by the business as tangible business benefits and build trust for future projects. This will result in IT budgets at least staying flat, but in most organizations actually rising, as the ability to apply this new infrastructure to improve the productivity, agility and revenue of the business is demonstrated.

Wikibon believes that the most important business benefits will come from exploiting the local and global data available to the business, arising from better access to all the data within an organization as well as data from the internet and the internet of things. This big data environment is just beginning, with most of the insights being delivered as reports and visualization techniques to a very few people. The insights tend to stay with those few people, rather than being applied to the business as a whole, which is one reason why big data projects are losing 50 cents on the dollar invested at the moment.

Wikibon believes that the exploitation of this data will explode with in-line analytics, enabled by the new all-flash converged infrastructure. This will allow the insights to be translated into direct real-time operational savings by means of improvements to the core business systems. Real-time ETL of time-sensitive data, merging of local and global data, improved real-time analytic and cognitive systems will all play a role in improving enterprise productivity and agility.

The specific topology of all-flash devices is not at all settled. PCIe cards were the early runner in cloud service infrastructure, and still make up the majority of very low latency solutions. SSDs in the server are also used extensively, especially in database servers. The scale-out all-flash array is the fastest growing segment at the moment, with EMC XtremIO as the leading vendor, and SolidFire in second place. New PCIe switching topologies from new startups such as DSSD (acquired by EMC) and Primary Storage (led by David Flynn, a co-founder of Fusion-io) are also players. These have the potential of reducing IO latencies from the one millisecond of all-flash arrays (average with all delays from flash hardware, flash controller, software, switching, HBAs and queueing) to lower than 100 microseconds, and bandwidth of greater than 150Gbits/second. Server SAN (hyper converged) topologies which share server resources between computing and storage are projected by Wikibon to be the long-term topology for cost and functionality reasons.

Strategically, the large potential IT and business benefits make it more important to just get started, and Wikibon strongly recommends a good scale-out architecture as a pre-requisite for all mid-size and large IT installations.

The scope and importance of these benefits and the ability to merge private and public cloud computing will be the subject of future Wikibon research.

Action Item

The starting point for all CIOs is setting a strategic objective to create a modern all-flash datacenter, as part of a consolidated converged cloud-enabled datacenter. This project is self-funding and should start immediately. For local shared data (within the division or enterprise), the starting point is to migrate the current infrastructure and reorganize IT. With better and more consistent response times and reduced development times, IT will have earned the right to propose projects enabling far reaching improvements to core business processes.

Footnotes

The Table Footnotes-1 below gives the detailed assumptions for Figure 3 above.

Source: © Wikibon 2015