Where? Navigating the Edge, the Cloud, Compliance, and Chaos

So far in our Black Hat 2025 debrief, we’ve covered the who of identity and the what of the attack surface. Now we turn to the question of Where. For nearly every “modern” enterprise (insert eyeroll), the answer used to be a simple, one-way migration to the cloud. This wasn’t always the case, though, and most people I talk to agree that the intention was (and still should be) a hybrid architecture that combines the best of cloud and on-prem.

The borderline-gamification of spend via cloud credits (tokens spend faster than quarters, you get more aggressive with chips or paper money than with dollars, etc.) made it easier to spend money in the cloud than to buy or build services at the edge, leading to the all-in cloud approach. But now, the intense demands of AI are forcing a significant return to on-prem and edge computing, making the “where” a whole lot more complicated. The modern enterprise isn’t just multi-cloud; it’s radically hybrid.

This reality presents a dual challenge. Organizations must innovate at the speed of the cloud, but they must also secure a sprawling infrastructure that now stretches from public cloud providers back into their own data centers. At this year’s conference, it was clear that security and governance must be able to operate seamlessly across this entire hybrid landscape.

The Hybrid Reality: AI’s Gravity Pull

To be clear, “the cloud” isn’t just AWS, GCP, or Azure anymore. A major undercurrent in my conversations over the last few months was how the sheer gravity of AI, with the massive datasets necessary for training combined with the need for low-latency inference, is pulling workloads back on-prem. Companies are building out powerful GPU clusters in their own data centers because, for certain AI tasks, it’s just faster and cheaper.

Most companies, though, don’t seem to be considering the level of observability, redundancy, and resiliency needed to process workloads at the edge, especially on the new wave of AI PCs. I bluntly asked one company talking about AI at the edge, “What happens if my laptop ****s the bed while it’s halfway through training a model or processing data?”

This seemed, to me, like a pretty standard question, given how often it happened when I was writing machine learning models as a data scientist. Those jobs were tiny compared to the workloads we’d be talking about now, but were often still enough to overwhelm my laptop with heat, kill the battery when I accidentally kicked the plug out, or just freeze because some app or service tried to do something while under stress.
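One common answer to that question is checkpointing: persist progress after each unit of work so a job can resume where it left off after a crash. Here is a minimal sketch of the idea, not any vendor's implementation. The function names, the JSON state layout, and the `crash_at` parameter (which only simulates a device failure) are all hypothetical.

```python
import json
import os
import tempfile


def save_checkpoint(path, state):
    """Write state to a temp file, then rename it over the old checkpoint.

    The rename is atomic on the same filesystem, so a crash mid-write
    leaves the previous checkpoint intact instead of a half-written file.
    """
    directory = os.path.dirname(os.path.abspath(path))
    fd, tmp_path = tempfile.mkstemp(dir=directory)
    with os.fdopen(fd, "w") as f:
        json.dump(state, f)
        f.flush()
        os.fsync(f.fileno())
    os.replace(tmp_path, path)


def load_checkpoint(path):
    """Return the saved state, or a fresh state if no checkpoint exists."""
    if not os.path.exists(path):
        return {"next_step": 0, "total": 0}
    with open(path) as f:
        return json.load(f)


def run_job(path, steps, crash_at=None):
    """Run `steps` units of work, resuming from the last checkpoint.

    `crash_at` is a hypothetical knob that simulates the laptop dying.
    """
    state = load_checkpoint(path)
    for step in range(state["next_step"], steps):
        if crash_at is not None and step == crash_at:
            raise RuntimeError("simulated device failure")
        state["total"] += step  # stand-in for one unit of real work
        state["next_step"] = step + 1
        save_checkpoint(path, state)
    return state["total"]
```

Calling `run_job` again with the same checkpoint path after a failure picks up at the first unfinished step rather than starting over. Real training jobs would checkpoint model weights and optimizer state, usually less often than every step, but the resume logic has the same shape.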

Device issues aside, this hybrid strategy also creates a massive visibility gap for security teams. You can’t just secure your cloud accounts and call it a day. You need a unified view that spans your public cloud, your private data centers, and your edge locations. You also need ways to monitor ALL the places data can hide at the edge and rein in the inadvertent training of models with sensitive data in those places. Any solution that can’t see across this entire hybrid landscape is leaving you dangerously blind, and while everyone is selling a platform and single pane of glass, the sheer number of booths in the Business Hall at Black Hat shows that no one has really figured it out end-to-end.

The GRC Breakdown: Innovation Outpacing Governance

This hybrid chaos is where governance completely breaks down. In my conversation with Charles Henderson, the legendary hacker who now leads Division Hex at Coalfire, he dropped a bombshell statistic: his team has successfully hacked 100% of the generative and agentic AI applications they’ve tested, with at least one critical, “game over” finding in every single engagement.

How is this possible? Henderson explained that whether in the cloud or on-prem, AI is often so cheap to deploy that it completely bypasses the traditional budget cycle, the very process that would normally trigger a security review. Development teams are just spinning things up without oversight. “We’re getting these calls from enterprise customers who are saying, ‘Hey, my team did a thing and we have deployed AI. It’s in production. We need you to come test it,’” he said. “It’s like 1997 all over again.”

Seeing the Unseeable: The Power of Context

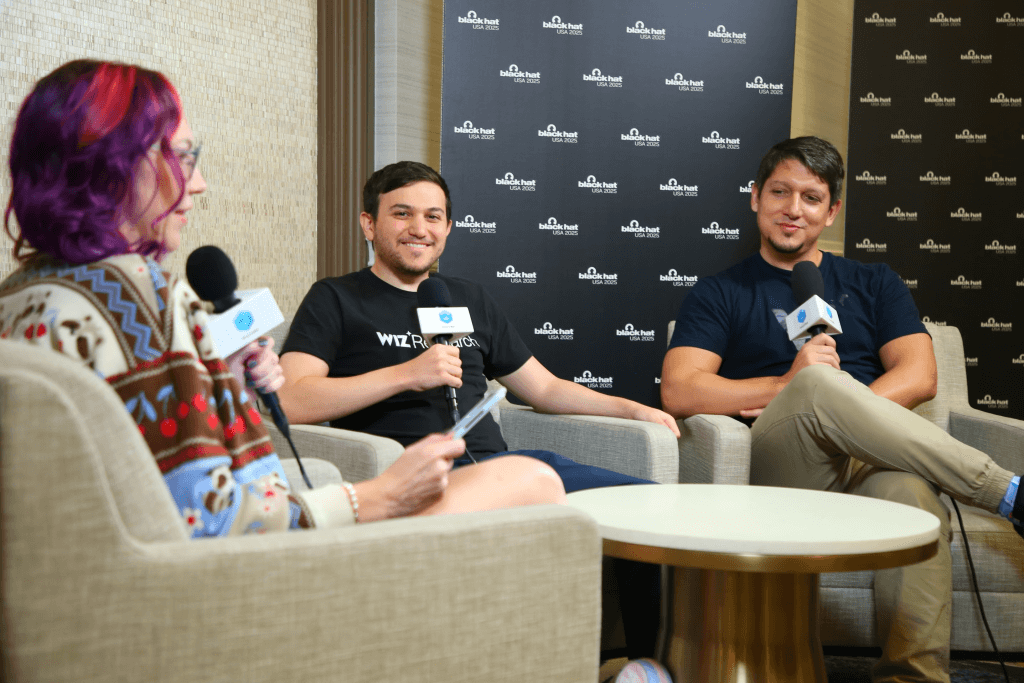

This rapid, ungoverned deployment creates hidden, systemic risks across the entire hybrid environment. The team at Wiz provided a perfect, and frankly terrifying, example. In my conversation with Hillai Ben Sasson and Andres Riancho, both Security Researchers at Wiz, they shared that they’d discovered a container escape vulnerability in a common NVIDIA open-source library. Because that library was used everywhere, both in the cloud and on-prem, this single flaw created a massive, widespread risk. A successful exploit could allow an attacker to escape their container and access other workloads running on the same host.

This is the central problem CNAPPs (Cloud Native Application Protection Platforms) were built to solve, and why they must be hybrid-aware. The power of a platform like Wiz lies in its Security Graph, which connects the dots between disparate risks across all environments. It shows you the “toxic combinations” like that vulnerable NVIDIA library running in a container with excessive permissions, whether it’s on an EC2 instance or a server in your data center, that create a true, exploitable attack path. As Wiz researcher Hillai Ben Sasson put it, the industry needs to stop chasing hype and get back to basics: “AI security is just infrastructure security,” no matter where that infrastructure lives.
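To make the “toxic combination” idea concrete, here is a toy sketch. It is not how Wiz's Security Graph works internally; it only illustrates why correlating findings matters more than listing them separately. The `Workload` fields, the placeholder library name, and the rule itself are assumptions made up for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class Workload:
    name: str
    location: str                      # e.g. "cloud" or "on-prem"
    libraries: set = field(default_factory=set)
    privileged: bool = False           # runs with excessive permissions
    shares_host: bool = False          # other workloads run on the same host


# Hypothetical placeholder, not the name of the real affected library.
VULNERABLE_LIBS = {"example-gpu-toolkit"}


def toxic_combinations(workloads):
    """Flag workloads where several risks line up into one attack path.

    Each condition alone is a finding; together they describe a
    container escape that can reach neighbouring workloads.
    """
    findings = []
    for w in workloads:
        vulnerable = w.libraries & VULNERABLE_LIBS
        if vulnerable and w.privileged and w.shares_host:
            findings.append((w.name, w.location, sorted(vulnerable)))
    return findings


inventory = [
    Workload("train-job", "on-prem", {"example-gpu-toolkit"}, True, True),
    Workload("inference-api", "cloud", {"example-gpu-toolkit"}, False, True),
    Workload("web-frontend", "cloud", {"some-web-lib"}, True, True),
]

for name, location, libs in toxic_combinations(inventory):
    print(f"{name} ({location}): exploitable path via {libs}")
```

Only `train-job` is flagged here. The other two workloads each carry a single risk, which is worth fixing but does not form a complete attack path on its own. The same rule applies regardless of the `location` value, which is the hybrid-aware part.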

Securing the Connections: The Zero Trust Exchange

Securing the infrastructure itself is only half the battle. You also have to secure the countless connections that flow between the cloud, the data center, and your users. This is the domain of the Zero Trust exchange.

Zscaler, a pioneer in this space, operates on a simple principle: connect authenticated users directly to authorized applications, never to the underlying network. In my talk with EVP Deepen Desai, he shared findings from their new ThreatLabz 2025 AI Security Report. He also laid out a clear, four-stage journey for implementing Zero Trust that applies to any user accessing any application, anywhere. By acting as an intelligent cloud switchboard, Zscaler’s platform makes lateral movement, the hallmark of nearly every major breach, all but impossible.
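The principle of connecting users to applications rather than networks can be sketched in a few lines. This is a simplified illustration of the general Zero Trust brokering idea, not Zscaler's product or API. The `Request` type, the `POLICY` table, and the session string format are all hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Request:
    user: str
    authenticated: bool
    app: str


# Hypothetical policy: which applications each user may reach.
POLICY = {
    "alice": {"payroll"},
    "bob": {"wiki"},
}


def broker(request, policy=POLICY):
    """Return a session scoped to exactly one app, or None if denied.

    The caller never receives a network address or subnet, only a
    per-application session, so there is nothing to move laterally across.
    """
    if not request.authenticated:
        return None
    if request.app not in policy.get(request.user, set()):
        return None
    return f"session:{request.user}->{request.app}"


print(broker(Request("alice", True, "payroll")))   # granted
print(broker(Request("alice", True, "wiki")))      # denied: not authorized
print(broker(Request("bob", False, "wiki")))       # denied: not authenticated
```

The design choice worth noticing is the default: anything not explicitly allowed is denied, and a granted session names a single application. A legacy VPN inverts this by placing the user on the network first and filtering afterward.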

Cloudflare provides a complementary set of solutions on a massive scale. Their Zero Trust platform offers a modern alternative to legacy VPNs, securing access to applications regardless of where they are hosted. But beyond the technology, my conversation with Cloudflare CSO Grant Bourzikas drove home a more fundamental point. He told me the story of a Thanksgiving Day security incident in 2024. Instead of burying it, they published a 12-page, radically transparent blog post detailing exactly what happened. The result? It built trust. “If I’m telling you the truth on this… then you should be able to trust us as an organization,” he said. It’s a powerful reminder that in our industry, trust is the ultimate currency.

Conclusion: A Unified Strategy for a Hybrid World

The message from Black Hat 2025 is that securing the “where” of the modern enterprise demands a comprehensive, hybrid-aware strategy. You need deep, contextual visibility across cloud and on-prem infrastructure (Wiz). You need a mature governance and testing process to keep up with the pace of innovation (Coalfire). And you need a Zero Trust architecture to secure every connection, no matter where it starts or ends (Zscaler, Cloudflare).

But even with the strongest preventative controls, incidents will still occur. In tomorrow’s post, we’ll tackle the theme of “When”: when an attack is underway, how do we win the race to respond?

Check out theCUBE for all of our Black Hat USA 2025 coverage!