We’re on the cusp of a new software-enabled business model that will determine winners and losers in the coming decades. We call this service-as-software. Specifically, we believe enterprises will begin to organize knowledge work in new ways that harmonize islands of automation into a build-to-order assembly line for knowledge work. Firms that aggressively pursue this opportunity will be on a learning curve that we think will create sustainable competitive advantage and, importantly, a winner-take-most dynamic. We’re not just talking about technology vendors here. Rather, we believe new technology, operational, and business models will emerge and apply to all enterprises across every industry. In this breaking analysis, theCUBE Research team presents a new way to think about how businesses will operate in the AI era, with implications for application developers, edge deployments, and security/governance models. We also offer a glimpse of the path we think organizations can take to get from where they are today to that future.

From On-Prem to SaaS to Service-as-Software

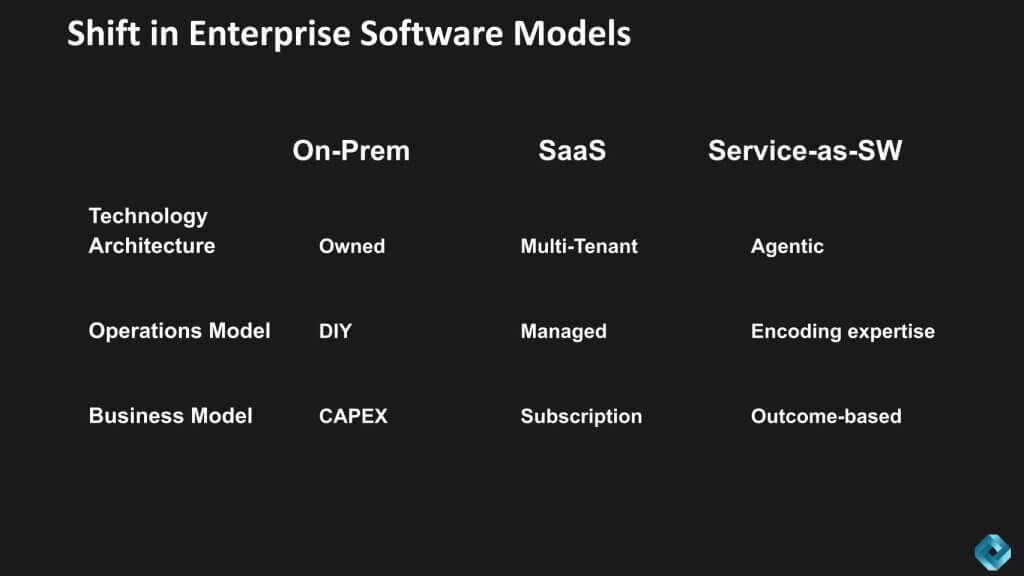

Every major shift in software brings a change in the technology architecture, operational models, and business models. For example, when we went from on-premises software to SaaS, the technology model shifted from customer-owned to, ultimately, a multi-tenant model. The operational model shifted from do-it-yourself to managed services, and the business model went from heavily capex-focused to subscription-based. We think a similar dynamic is occurring with service-as-software in the agentic era, as the accompanying graphic shows.

We believe this marks a profound shift. In prior eras—on-prem and SaaS—the changes largely sat inside IT (both enterprise teams and their vendors). What’s different now is that the technology model, the operations model, and the business model of the entire customer organization are set to change.

Historically, enterprises ran on islands of automation—ERP for the back office and, later, a proliferation of apps. CRM was the first to introduce a new operating model (cloud delivery) and a new business model (subscription versus perpetual licenses). Today, the enterprise itself must begin to operate like a software company. That requires harmonizing those islands into a single unified layer where data and application logic collapse into an integrated System of Intelligence. Agents rely on this harmonized context to make decisions and, when needed, invoke legacy applications to execute workflows.

Operating this way also demands a new operations model: a build-to-order assembly line for knowledge work that blends the customization of consulting with the efficiency of high-volume fulfillment. Humans supervise agents, and in doing so progressively encode their expertise into the system. Over time, organizations don’t manage a handful of agents; they orchestrate an army that captures decision logic across the enterprise.

This, in turn, enables a new business model built on an experience curve. As machine learning predicts process behavior and customer responses, more work is digitized, marginal costs decline, and operating leverage increases. The combination of encoded, specialized know-how (greater differentiation) and falling unit costs yields stronger pricing power and continuous efficiency gains.
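To make the experience-curve argument concrete, here is a minimal sketch of the classic formulation, in which unit cost falls by a fixed percentage every time cumulative volume doubles. The function name, the 80% progress ratio, and the starting cost of 100 are illustrative assumptions, not figures from our research.

```python
import math


def unit_cost(first_unit_cost: float, cumulative_units: float, progress_ratio: float) -> float:
    """Unit cost after `cumulative_units` under a classic experience curve.

    progress_ratio is the share of cost that remains after each doubling of
    cumulative volume (0.8 means cost falls 20% per doubling).
    All inputs here are hypothetical and for illustration only.
    """
    if cumulative_units < 1 or not 0 < progress_ratio <= 1:
        raise ValueError("need cumulative_units >= 1 and 0 < progress_ratio <= 1")
    exponent = math.log2(progress_ratio)  # negative when progress_ratio < 1
    return first_unit_cost * cumulative_units ** exponent


# Print the cost at successive doublings of cumulative volume.
for n in (1, 2, 4, 8):
    print(n, round(unit_cost(100.0, n, 0.8), 2))
```

The point of the sketch is the shape of the curve: whoever accumulates volume fastest moves down it fastest, which is the mechanism behind the winner-take-most claim.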

Our research indicates this dynamic will be winner-take-most—not just in consumer online services but across any industry with significant knowledge work, including manufacturing (within its management layer). That, in our view, is the fundamental change now underway.

Service-as-Software Brings a New Technology Model

Let’s take those three dimensions (the technology model, the operational model, and the business model) and break them down a little further, starting with the technology model implications.

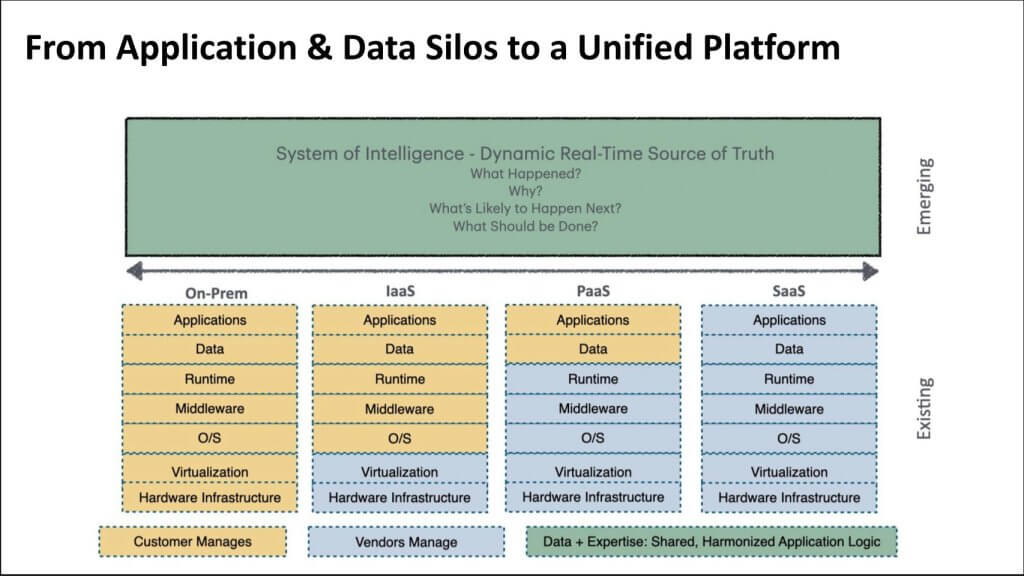

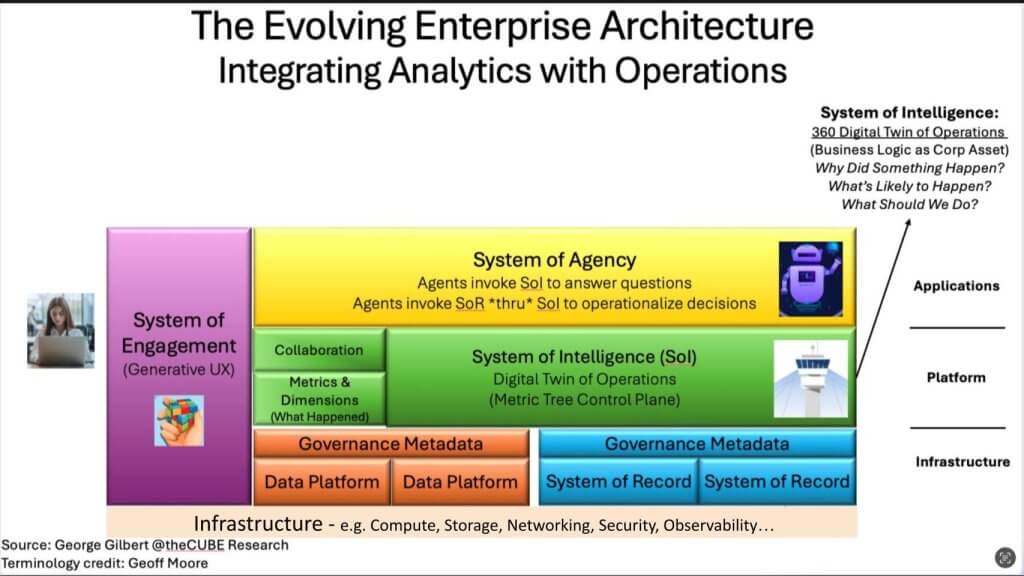

We believe the next stage is a shift from fragmented application silos to a unified platform as shown above. Even when data is consolidated in a lakehouse, it remains functionally fragmented – e.g. sales, marketing, and finance each keep their own star-schema “cubes,” which do not harmonize across the enterprise.

Our premise is this migration runs in parallel with the stack’s operating shift – from customer-managed on-prem to vendor-managed IaaS/PaaS – while remaining hybrid across on-prem and cloud. At the top of the stack sits an increasingly strategic layer we call the System of Intelligence (SoI). Its job is to synthesize context so agents can answer not only “what happened” and “why,” but also “what happens next” and “what should we do.”

The important point to remember is that islands of automation impede management’s core function – i.e. planning, resource allocation, and orchestration with full visibility across levels of detail and business domains. Data lakes do not solve this by themselves; each star schema is another island. Near-term, organizations can start small and let agents interrogate a single domain (e.g., the sales cube) and take limited actions by calling systems of record via MCP servers, for example, viewing a customer’s complaints and initiating a return authorization.

Today you can ask “what happened in sales,” but you can’t ask, “How exactly did marketing impact sales? How did fulfillment activity impact the customer experience?” without creating a whole new data engineering project and a new set of cubes. The practical way to get started with agents today is to look inside one cube and have an agent ask what happened from multiple perspectives within that domain, e.g. within sales or marketing. You might also be able to take limited action by having the agent call into the existing system of record through an MCP server.

Within customer service, for example, you might be able to see the complaints for a particular customer and take some limited action, such as managing a return authorization. Again, these are small islands. Getting to broader, full visibility across the enterprise is going to take a lot of work to harmonize the data and the business processes into the system of intelligence, a conceptual model we borrowed from Geoffrey Moore. Only then can the systems of agency have a four-dimensional, dynamic view of the entire business.
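The single-domain starting point can be sketched in a few lines. The example below is a simplified stand-in, not the MCP protocol or any vendor SDK: `CustomerServiceRecord`, `ToolServer`, and `handle_customer` are hypothetical names we introduce to show an agent reading complaints and taking one limited action through a narrow, explicitly named tool interface.

```python
from dataclasses import dataclass, field


@dataclass
class CustomerServiceRecord:
    """Hypothetical in-memory stand-in for a customer-service system of record."""
    complaints: dict = field(default_factory=dict)   # customer_id -> list of complaints
    returns: list = field(default_factory=list)      # issued return authorizations

    def list_complaints(self, customer_id: str) -> list:
        return list(self.complaints.get(customer_id, []))

    def create_return_authorization(self, customer_id: str, order_id: str) -> dict:
        rma = {
            "rma_id": f"RMA-{len(self.returns) + 1}",
            "customer_id": customer_id,
            "order_id": order_id,
        }
        self.returns.append(rma)
        return rma


class ToolServer:
    """Exposes a small, named set of tools; a simplified analogue of a tool server."""

    def __init__(self, record: CustomerServiceRecord):
        self._tools = {
            "list_complaints": record.list_complaints,
            "create_return_authorization": record.create_return_authorization,
        }

    def call(self, tool_name: str, **arguments):
        if tool_name not in self._tools:
            raise KeyError(f"unknown tool: {tool_name}")
        return self._tools[tool_name](**arguments)


def handle_customer(server: ToolServer, customer_id: str, order_id: str):
    """Toy agent step: read complaints, open a return only if any exist."""
    complaints = server.call("list_complaints", customer_id=customer_id)
    if not complaints:
        return None
    return server.call(
        "create_return_authorization", customer_id=customer_id, order_id=order_id
    )
```

The agent can only do what the tool list allows, which is why this pattern is a reasonable first step: the island stays an island, but the blast radius of agent actions is small and easy to reason about.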

To achieve enterprise-wide visibility, data and business processes must be harmonized into the SoI, upon which Systems of Agency can operate with a dynamic, “four-dimensional” view of the business. In our current thinking, that’s the target architecture. What we’re discussing today is just the starting point, and we’re trying to get to a much richer view.

Vendors are converging on this direction, for example:

- Palantir builds an ontology – i.e. a 4D map rather than isolated star schemas – positioning it well for agentic workflows.

- Salesforce provides a packaged customer-data platform and Customer 360 processes so firms need not build from scratch.

- Celonis delivers packaged process models, reducing custom encoding.

- SAP is moving this way with Business Data Cloud.

While these are broader islands than yesterday’s point apps, they still need to be stitched together. Today’s projects are the starting point; the goal is a unified layer that feeds agents with enterprise context and enables end-to-end action.

Building the Digital Twin – From Data Islands to a System of Intelligence

Let’s take a look at the software stack we see emerging, as depicted in this next diagram. There are changes, shifts, and new competitive dynamics. At the bottom layer is infrastructure: compute, storage, networking, security, observability, data movement, and the like. There is also the system of engagement, which the chart shows as the generative UX.

We believe the enterprise interface is thinning as users increasingly “speak” to systems in natural language. This shift reframes how the digital representation of the enterprise should be built. Beneath the surface sit modern data platforms – Snowflake and Databricks among them – surrounded by a rising governance layer. The database is becoming less of the control point; the center of gravity is moving toward governance catalogs, increasingly open source, with Apache Iceberg a driving force and open source catalog implementations such as Polaris and Unity contributing to that transition.

The governance model is going to be critical. As you open up access to your data more broadly, you’re going to need a new governance model that’s much more policy-oriented and dynamically enforced, and perhaps even dynamically generated. And as you let agents take more and more actions, at least initially by talking to the system of record through MCP servers, you’re going to need something completely new: governance of actions. We had governance of APIs in the past; now we need governance that manages the behavior of agents.

At the data platform level, we’ve heard a lot about semantic layers, and Snowflake led an effort to get a big swath of the industry to agree on a semantic interoperability definition. We don’t have all the details yet, but to be clear, these are the metric and dimension definitions that you see in a dashboard, like customer lifetime value or quarterly sales. You have to agree on the definition of sales, what constitutes a quarter, and so on. On the chart, that’s the green square at the lower left, just above the data platform layer.
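A rough sketch of what “agreeing on definitions” means in practice: the metric and the dimension are defined once, by name, and every consumer queries through those names. The definitions below (calendar quarters, booked orders only) and the `METRICS`, `DIMENSIONS`, and `query` names are our own illustrative assumptions, not the contents of any published interoperability specification.

```python
from dataclasses import dataclass
from datetime import date


@dataclass(frozen=True)
class Order:
    order_date: date
    amount: float
    status: str  # e.g. "booked" or "cancelled"


def calendar_quarter(d: date) -> str:
    """One possible shared definition of 'quarter': calendar quarters."""
    return f"{d.year}-Q{(d.month - 1) // 3 + 1}"


# Shared, named definitions. Both choices below are assumptions for illustration.
DIMENSIONS = {"quarter": lambda o: calendar_quarter(o.order_date)}
METRICS = {"sales": lambda o: o.amount if o.status == "booked" else 0.0}


def query(metric: str, dimension: str, orders) -> dict:
    """Aggregate a named metric by a named dimension using the shared definitions."""
    totals = {}
    for order in orders:
        key = DIMENSIONS[dimension](order)
        totals[key] = totals.get(key, 0.0) + METRICS[metric](order)
    return totals
```

If finance used fiscal quarters or counted cancelled orders, `query("sales", "quarter", ...)` would return different numbers from the same rows, which is exactly the disagreement a shared semantic definition is meant to remove.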

Our belief is the next architectural milestone is the emergence of a System of Intelligence (SoI) – i.e. a unified, contextual layer that interprets signals (including from BI) and feeds systems of agents coordinated by agent-control frameworks. Market moves like Fivetran’s potential acquisition of dbt underscore an upstream push by data platforms that now collide with process-centric players such as Celonis, Salesforce, and ServiceNow. The strategic objective is to construct a digital twin of the enterprise – i.e. a “4D map” capable of understanding what happened, why it happened, what will happen next, and what should be done.

However, end-to-end visibility remains constrained by fragmentation. As stressed earlier, consolidating data in a lakehouse does not resolve the issue when each star schema remains an island. Even standardizing on a single vendor and instance fails to unify data across domains. Cross-functional questions like those we posed earlier (e.g., “How did marketing affect sales?” “How did fulfillment impact customer experience?”) typically require new, cross-departmental cubes – a heavy data-engineering lift – when what’s needed is on-demand generation of such views.
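As a toy illustration of an on-demand cross-domain view, the sketch below joins a marketing dataset and a sales dataset on a shared customer key at query time rather than through a pre-built cube. The function name, the data shapes, and the deliberately naive attribution rule are all assumptions made for the example.

```python
from collections import defaultdict


def sales_by_campaign(campaign_touches, orders) -> dict:
    """Join two departmental datasets on a shared customer key, on demand.

    campaign_touches: iterable of (customer_id, campaign) pairs from marketing
    orders: iterable of (customer_id, amount) pairs from sales
    Attribution is deliberately naive: every campaign that touched a customer
    is credited with that customer's full order value.
    """
    campaigns_by_customer = defaultdict(set)
    for customer_id, campaign in campaign_touches:
        campaigns_by_customer[customer_id].add(campaign)

    totals = defaultdict(float)
    for customer_id, amount in orders:
        # .get avoids creating empty entries for customers marketing never touched
        for campaign in campaigns_by_customer.get(customer_id, ()):
            totals[campaign] += amount
    return dict(totals)
```

The join itself is trivial; the hard part in practice is that the two domains rarely share a clean customer key or agreed definitions, which is why harmonization has to come before this kind of question can be answered on demand.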

To unlock this, governance must evolve alongside architecture. As access broadens, policies need to be dynamically enforced and, in some cases, dynamically generated. As agents begin to take actions – initially by calling systems of record through MCP servers – governance must shift from traditional API oversight to governance of actions that manages agent behavior and intent.
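One way to picture governance of actions is a policy check that sits between the agent and the system of record and evaluates every requested action before it executes. The sketch below is a minimal, deny-by-default version; `ActionRequest`, `Policy`, and `authorize` are hypothetical names, and real policies would carry far more context than an agent ID and an amount.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ActionRequest:
    agent_id: str
    action: str    # e.g. "create_return_authorization"
    amount: float  # monetary value at stake, if any


@dataclass(frozen=True)
class Policy:
    action: str
    allowed_agents: frozenset
    max_amount: float


def authorize(request: ActionRequest, policies) -> tuple:
    """Return (allowed, reason). Deny by default when no policy covers the action."""
    for policy in policies:
        if policy.action != request.action:
            continue
        if request.agent_id not in policy.allowed_agents:
            return False, "agent not permitted for this action"
        if request.amount > policy.max_amount:
            return False, "amount exceeds policy limit; escalate to a human"
        return True, "allowed"
    return False, "no policy covers this action"
```

Because policies are plain data, they can be updated, or generated, at runtime without redeploying the agent, which is the sense in which enforcement becomes dynamic.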

Crucially, building a digital twin is not just about metrics and dimensions – 2D or 2.5D snapshots such as orders or sales. It requires explicit modeling of business processes in terms of how customers traverse touchpoints and how work truly flows. Most organizations will not model this from scratch. They will assemble vendor-supplied process building blocks, much as they adopted packaged applications in prior eras, composing them into the SoI so agents can operate with real business context – the equivalent of an “agent as consultant.”

In our view, the path forward is a new full stack that favors end-to-end integration of both data and processes over the creation of new islands. As interfaces thin and language becomes the control plane, governance catalogs become the point of control, the SoI becomes the enterprise brain, and agentic systems become the execution layer. This is how the digital twin moves from concept to operating reality.

Later on, we’ll talk about the context an agent needs, acting as a consultant, to think through and make a decision. It’s a whole new stack that has to come together, and rather than adding new islands, we now have to think about end-to-end integration, not just of data, but of processes.

Mapping “Service-as-Software” to AppDev + DevSecOps

[Watch Paul Nashawaty’s Full Analysis]

We believe the next-gen service-as-software stack aligns tightly with classic application-development phases – Day 0, Day 1, Day 2 – with DevSecOps woven through every step. This lens preserves the familiar build–release–operate cadence while shifting the center of gravity to a hosted, outcome-oriented model where the stack handles the heavy lifting and accelerates time-to-value.

Day 0 — Build (Plan & Design)

In our view, Day 0 is the planning and design stage where teams figure out how solutions will be built and how they will run in production. From an app-dev perspective this means explicitly defining architecture, APIs, and required infrastructure. A service-as-software approach encourages thinking in modular, cloud-native pieces from the start so scalability, observability, and security are baked in rather than scrambled for later. This is the moment to specify the contracts, guardrails, and platform choices that will let downstream phases move fast without rework.

Day 1 — Release (Deploy & Provision)

Day 1 is where applications come to life through deployment and provisioning. Environments are spun up automatically – typically via infrastructure as code, containers, or serverless setups – so developers can focus on writing code and shipping features instead of wrestling with infrastructure. The result is a repeatable, predictable motion that is materially faster than traditional setups. In a service-as-software model, this consistency is a first-order feature: the platform abstracts complexity so teams can push value to users sooner.

Day 2 — Operate (Run & Optimize)

Day 2 covers the operational/optimization phase where apps run in production and start evolving. Teams monitor performance metrics, scale dynamically, and roll out updates without breakage. Critically, service-as-software surfaces telemetry and feedback that inform how the application should improve over time—which is why Day 2 is where continuous improvement happens. The hosted model’s strength is converting runtime signals into safe, rapid iterations.

DevSecOps — Everywhere, End-to-End

DevSecOps is tied into every single part of the process – it’s everywhere. Security is built in from the get-go: automated testing, policy checking, and vulnerability scanning run in the background and are integrated into the CI/CD pipeline. The service-as-software stack favors secure-by-default choices so developers can move fast without introducing risk.

What the data suggests (Time-to-Value & Trade-offs)

Our research indicates the stack handles the heavy lifting and provides faster time-to-value. 34% of respondents prefer managed/SaaS-based delivery, versus 17% who want pure distribution. Even though SaaS/hosted offerings may have reduced functionality relative to full distribution, they deliver faster time-to-value. The advantage is clear: developers focus on features, user experience, and innovation, while the stack ensures reliable, secure, at-scale operations – with significant impact across the CI/CD pipeline and the overall software lifecycle.

Bottom line: Mapping Day 0/1/2 through a service-as-software lens aligns planning, release, and operations to a platform that automates undifferentiated work, institutionalizes continuous improvement, and accelerates outcomes – safely.

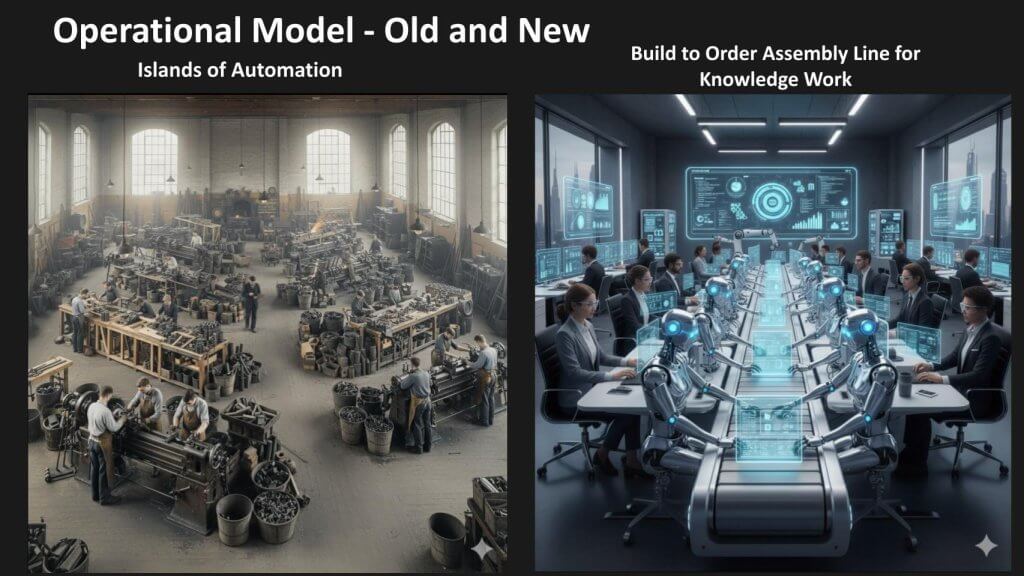

Rewiring the Operational Model for the Agentic Era

We believe the operational dimension is undergoing a step-function change. The reference diagram below juxtaposes today’s islands of automation (left) with a build-to-order assembly line for knowledge work (right). The visuals intentionally overstate for clarity, and – importantly – reflect the emerging reality that managers will supervise armies of agents rather than only human teams, echoing the Marc Benioff notion that we are the last generation of managers to manage humans alone.

Historically, knowledge work has resembled pre-assembly line manufacturing – think artisan workflows in small cells, with coordination and orchestration handled by humans – patterns that have barely changed for decades. The opportunity now is to organize work around scarce human expertise, with the understanding that, regardless of how advanced agents become, human judgment and decision-making are progressively imparted to agents over time.

The build-to-order model combines the efficiency of volume operations with the customization of advisory work (as seen in consulting or investment banking). With end-to-end visibility and digital orchestration in place, any expertise not yet encoded in agents becomes a floating, high-value resource deployed precisely when needed. Every such human intervention is captured for future use – akin to a Tesla Autopilot disengagement that yields training data – so the next occurrence of that exception no longer requires a human. In effect, the workplace is specifically designed to encode expertise in the flow of work, steadily increasing agent maturity and expanding effective capacity.
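The capture-and-reuse loop described above can be sketched very simply. In this toy version, the first time an exception type appears a human resolves it and the resolution is stored; the next occurrence is handled without a human. `ExceptionPlaybook` and its exact-match lookup are hypothetical simplifications; a real system would need to generalize across similar cases rather than match on an identical label.

```python
class ExceptionPlaybook:
    """Stores human resolutions so repeat exceptions no longer need a human."""

    def __init__(self):
        self._resolutions = {}
        self.human_interventions = 0

    def resolve(self, exception_type: str, ask_human):
        """Return (resolution, handled_by), where handled_by is 'agent' or 'human'."""
        if exception_type in self._resolutions:
            return self._resolutions[exception_type], "agent"
        # Not yet encoded: bring in the human expert and capture the answer.
        resolution = ask_human(exception_type)
        self.human_interventions += 1
        self._resolutions[exception_type] = resolution
        return resolution, "human"
```

Tracking `human_interventions` over time gives a crude measure of how much expertise has been encoded: if volume grows while interventions stay flat, effective capacity is expanding.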

We view this assembly line as an evergreen initiative – i.e. similar to a strategic capital project, yet operated as OpEx. Success metrics shift accordingly, from traditional departmental efficiency measures (e.g., deals closed, time-to-market, etc.), to expertise amplification and a true return on intellectual capital. In this configuration, as interfaces thin and language becomes the control plane, individual managers direct large swarms of agents, defining what we believe will be the operational blueprint for the agentic era.

In this build-to-order assembly line, we’re again borrowing concepts from Geoffrey Moore and combining the best of both: the efficiency of volume operations with the customization of the custom work you might find in an advisory business like consulting or investment banking. The key is that, because you have end-to-end visibility and digital orchestration of the process, human expertise that has not yet been encoded in the agents becomes a pluggable resource that the assembly line brings to bear when it’s needed. Every time there’s a human intervention, it is captured for future use.

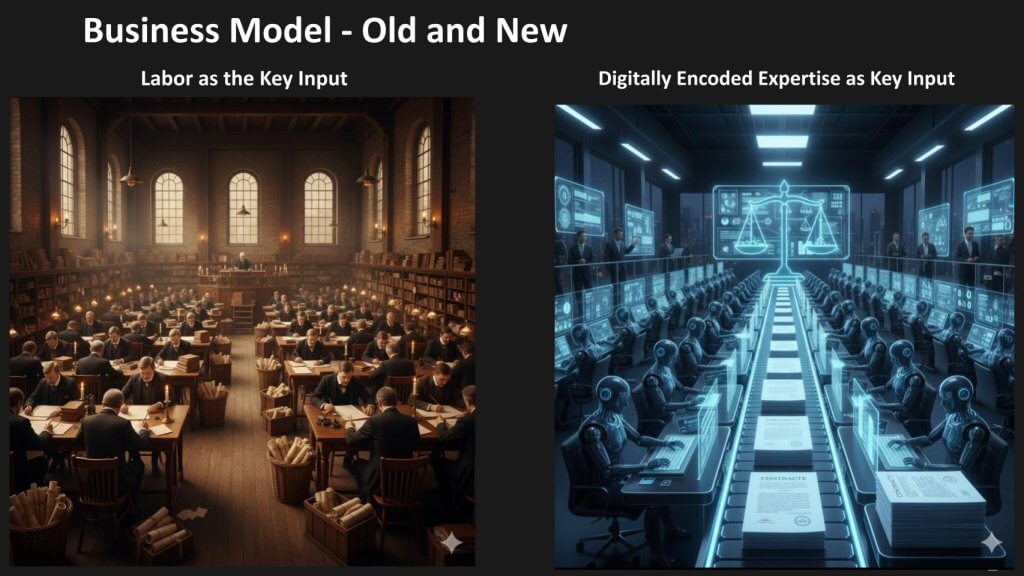

From Labor-Scaled Services to Digitally Encoded Expertise – The Business Model Shift

We believe security and governance cannot be glossed over – especially in regulated industries. Compliance, regulation, and security remain front and center in healthcare (HIPAA), financial services, and under EU regulations. With the technology and operational models outlined above, we now examine the business model transition.

Our graphic depicts two contrasting states. On the left-hand side, in the current paradigm, profits rise with labor: add capacity, add people, and – hopefully – scale. Marginal economics vary by sector. In software, non-recurring engineering dominates up front; ongoing maintenance persists, but marginal costs trend toward near-zero at volume. In hardware, COGS remain a persistent drag. In services, labor is the key input, often leading to diseconomies of scale and challenges achieving operating leverage.

On the right-hand side, we see a fundamentally different environment driven by digitally encoded expertise – what we regard as an autodidactic, self-learning system that improves over time. This becomes the key input of production, which is why we expect winner-take-most dynamics not only in technology but across all industries.

To make the implications concrete, consider a law firm – an early example of a services business being reimagined as software. Historically, little has changed since the “quill-pen at standing desk” era – i.e. to take on more business, firms hire more lawyers; costs scale with revenue; and competitive advantage rests in relationships and reputation. Pricing tends to be fee-based, often time-and-materials (or a markup from there).

In the new model, legal work is progressively encoded in agents and operated as a platform. Each time the system encounters a new situation, the captured expertise becomes part of the agent’s knowledge base; and next time, the human lawyer is not required for that specific pattern. Human professionals increasingly oversee an assembly line of agents. Work types that were priced by the hour – contracts, M&A tasks, and similar matters – can shift to fixed-fee services. As volume increases, learning improves, creating both greater differentiation and lower costs. Data network effects and encoded expertise compound, reinforcing the experience curve and propelling winner-take-most outcomes. In effect, services businesses become platform businesses, powered by software that understands human language and organizational process flows. We see this as profound change.

In our view, this is the crux of the business model transition – i.e. from labor-scaled economics with uneven marginal costs to platform economics powered by digitally encoded expertise, governed under stringent compliance and governance guardrails, and capable of compounding advantage at scale.

Agents as “Consultants,” Business-Value Metrics, and the Edge as a New Data Center

[Watch Savannah Peterson’s Full Analysis]

We believe the agentic angle on service-as-software is best understood by viewing agents as consultants inside the enterprise. Framed this way, agents tap institutional knowledge, industry best practices, and codified business processes learned from operational experience, telemetry, and edge-device data, among other sources. This mental model simplifies a complex shift: agents are not merely people or simple bots; they behave like embedded consultants drawing on shared context to deliver outcomes.

Our premise is that enterprises are adopting two distinct approaches to measuring agentic impact. One emphasizes counting agents (e.g., “500,000 agents deployed”) and listing headline ROI totals. The other – closer to how organizations actually report value – focuses on business outcomes, i.e. money saved, opportunity realized, and experience improvements (e.g., NPS and customer satisfaction). We believe the second approach will drive true adoption at both enterprise and consumer levels because it aligns agent programs with tangible, comprehensible results. A notable example is Workato, which intentionally brands agents as “genies” – an approachable, dynamic metaphor – and orients launch narratives around business impact rather than raw agent counts.

Technically, we contend that agentic leverage adds a Z-axis beyond classic automation: reasoning, decision-making, and novel information acquisition that were previously impractical now become feasible, often feeling “magical” in early prototypes. Paradoxically, many current MVPs and POCs are under-asking; teams may not yet grasp what’s actually possible because the organization has never been able to do these things before. We recommend structuring programs, marketing, and success criteria around tangible impact metrics rather than agent tallies, ensuring the bar is set high enough to capture the full promise of agentic systems.

Compliance and governance, once again, are first-class concerns – not afterthoughts. As AI is implemented at scale and agents are deployed at scale, the volume, locality, and handling of data expand by another order of magnitude. That intensifies the need to manage where data lives, how it’s accessed, how it’s secured, and how value is extracted – across jurisdictions with different rules. Our conversations and event work on theCUBE underscore the structural advantages held by providers with long-standing public-sector relationships, established data-center real estate, and access to power and water, along with the policy and legislative navigation required country by country and region by region. Equinix shared strong examples at its analyst event recently. In our view, choosing the right partners at the top – those that meet data where it is and guarantee its safety – is critical; the wrong choices create sticky, potentially existential risks as programs scale.

We further believe this era extends well beyond the SaaS playbook. SaaS accelerated task-level automation; service-as-software operates at the intelligence level. It introduces greater complexity, demands new tooling, and requires deliberate strategy. The goal is not merely to make simple things faster; it is to make very complex things possible.

Finally, the edge becomes a new data center. We expect AI at the edge with devices continuously reporting back into systems that support evolving, field-validated best practices – what works best in reality, not just what’s hypothesized. This feedback loop also reshapes managerial roles in the agentic enterprise, meaning leadership shifts from supervising only people to orchestrating agents, integrating edge-sourced signals and institutional knowledge to improve outcomes. In parallel, a durable ecosystem role emerges for consulting on the agents themselves – i.e. a services layer that helps organizations design, govern, and tune their agent workforces for safety, compliance, performance, and value realization.

In our view, reframing agents as consultants, measuring business outcomes, elevating compliance and governance, partnering for data locality and infrastructure realities, and treating the edge as a first-class compute and data plane are the practical pillars of moving from task automation to service-as-software at enterprise scale.

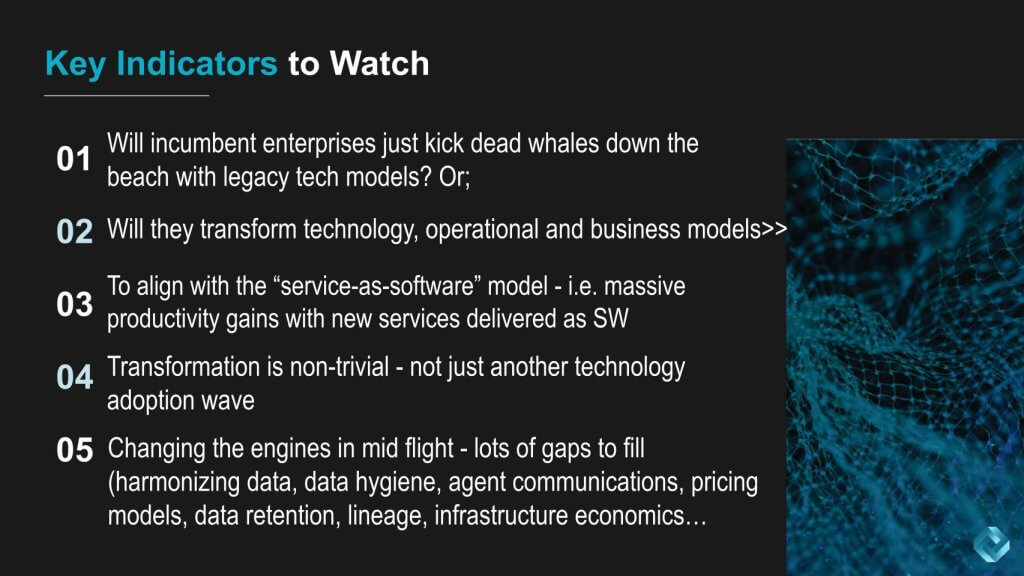

A Decade-Long Transition to Service-as-Software – What to Watch Now

We believe the technology, operational, and business-model shifts outlined here will materially change how companies make money and how they charge. This is the evaluation framework we will use going forward – and we will update it continuously. It also aligns with ongoing analytical work (including collaborations with external research partners) and is designed to surface where service-as-software creates new productivity drivers. We will assess the future in this context and track key indicators to watch as this plays out.

A central question is how incumbents handle technical debt. In our view, enterprises face a choice of “kicking dead whales down the beach” – a metaphor for perpetuating legacy burden – or transforming their technology, operations, and business models in line with the service-as-software framework. The prize is massive productivity gains; the risk of inaction is disruption by new services delivered as software with marginal software economics. We’ve seen the power of learning-curve bundling before – e.g., Microsoft’s bundling in the ’80s/’90s – but we believe today’s change is significantly more dramatic.

This transformation is not easy. It is not simply adopting cloud, swapping in a new security framework, or standing up another data platform. It is organizationally transformational and must occur while running the current business. There are many missing pieces that demand deliberate resolution:

- Data harmonization and hygiene to “get the data house in order.”

- Agent interoperability and security: how agents will talk to each other and how those interactions will be secured.

- Pricing models for agentic capabilities: the market is still experimenting; we see Salesforce testing approaches, and it remains unclear how others (e.g., UiPath) will price to value.

- Data retention and lineage: some industries require retention for years (often seven). We anticipate requirements to recreate and explain agent decisions.

- Infrastructure economics in hybrid: a continued push to bring AI to data on-prem where appropriate.

We believe this will evolve over time, which is why we consistently say this will take most of a decade to fully play out. 2025 or 2026 will not be “the year of the agent.” More likely, this will be the decade of the agent.

From a cost-structure standpoint, the business-model implications are profound. Pricing agents is hard because, unlike historical software patterns, there are now meaningful marginal costs – notably compute – on top of a new factor of production – i.e. data. Reports suggest that leading AI providers could still be spending on the order of 45% of revenue several years out on operating costs and data acquisition/curation. This underscores why cost structure remains a first-class concern and why data governance and quality will directly influence unit economics.

Observability must advance in lockstep with security. Traditional data lineage is insufficient. We will need observability for agents – capturing reasoning traces and the data used to make decisions – a new lineage that supports auditability, safety, compliance, and forensics.
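To illustrate what this new lineage might look like, the sketch below records each agent decision together with its reasoning steps and the data sources it relied on, then answers an audit-style question: which decisions used a given source? `DecisionTrace`, `TraceLog`, and the field names are hypothetical; this is not an established standard, and a production system would need durable, tamper-evident storage rather than an in-memory list.

```python
import json
from dataclasses import asdict, dataclass, field
from datetime import datetime, timezone


@dataclass
class DecisionTrace:
    agent_id: str
    decision: str
    reasoning_steps: list = field(default_factory=list)
    data_sources: list = field(default_factory=list)
    recorded_at: str = field(
        default_factory=lambda: datetime.now(timezone.utc).isoformat()
    )

    def to_json(self) -> str:
        return json.dumps(asdict(self), sort_keys=True)


class TraceLog:
    """Append-only, in-memory log of serialized decision traces."""

    def __init__(self):
        self._entries = []

    def record(self, trace: DecisionTrace) -> None:
        self._entries.append(trace.to_json())

    def decisions_using(self, source: str) -> list:
        """Return every recorded decision that relied on the given data source."""
        matches = []
        for entry in self._entries:
            parsed = json.loads(entry)
            if source in parsed["data_sources"]:
                matches.append(parsed["decision"])
        return matches
```

Storing traces as serialized records, rather than live objects, is a deliberate choice here: retention requirements measured in years mean the trace must remain readable long after the agent that produced it has changed.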

While the full enterprise transition may take a decade, we see a nearer-term wave. The biggest impact in the next 12–18 months will be applying genAI and agents to the mainstream tools enterprises use to build everyday software. The software industry’s tooling for mainstream customers must be remade first around agentic technologies – before the broader service-as-software replatforming of the mainstream enterprise fully takes shape. Put differently, we are painting a vision for enterprise reinvention, but the toolchains used to build that reinvention will change dramatically over the next 12 months.

Key factors to watch include incumbent responses to technical debt, credible pricing models for agentic services, concrete progress in data harmonization and retention/lineage, real hybrid economics (AI to data), and measurable improvements in agent observability/security. In our opinion, organizations that align to this framework early – adapting tech, ops, and business models in concert – will be best positioned to capture learning-curve advantages and service-as-software economics as the agentic decade unfolds.