Much attention has been focused in the news on the useful life of GPUs. While the pervasive narrative suggests that GPUs have a short lifespan and that operators are “cooking the books,” our research suggests that GPUs, like CPUs before them, have a significantly longer useful life than many claim. In this Breaking Analysis, we use the infographic below to explain our thinking in more detail.

Premise

In January 2020, Amazon changed the depreciation schedule for its server assets from three years to four years. Amazon made this accounting move because it found it could extend the useful life of its servers beyond three years. Moore’s Law was waning, and at Amazon’s scale the company could serve a diverse set of use cases, thereby generating revenue from its EC2 assets for a longer period of time. Other hyperscalers followed suit, and today the big three all assume six-year depreciation schedules for server assets.

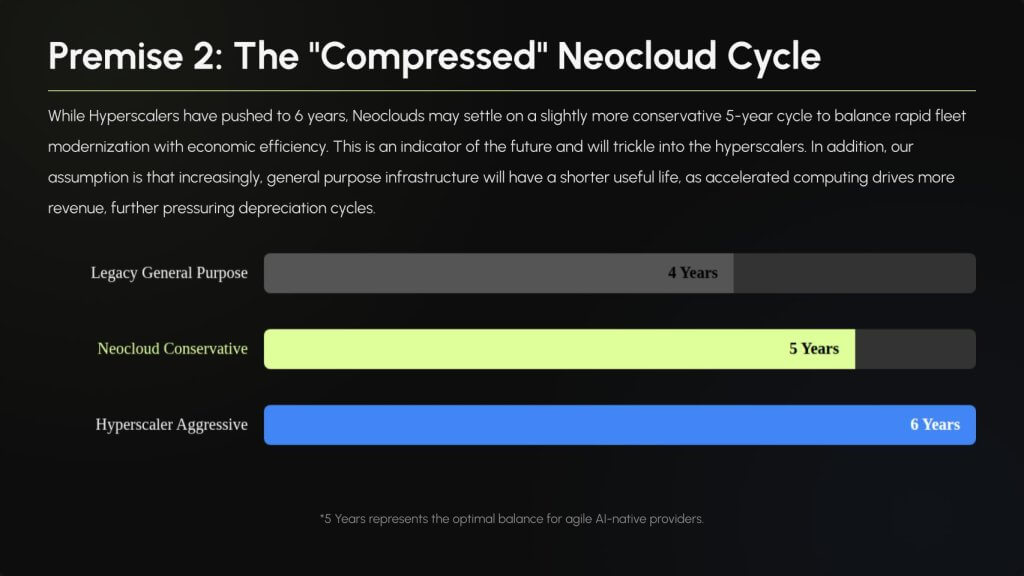

The question is: in the AI factory era, will this same dynamic hold, or will the useful life of servers compress? In our view, the dynamic will hold, because today’s most demanding training infrastructure will serve a variety of future use cases. That extends the useful life (i.e., revenue per token) of GPUs beyond their initial purpose. At the same time, we believe the more rapid innovation cycles NVIDIA is pushing will somewhat compress depreciation cycles from their current six years to a more conservative five-year timeframe.

AI, Server Depreciation, and the 6-Year CPU/GPU Lifespan

In the first half of the 2020s, a major financial shift occurred. The world’s largest cloud providers – Amazon, Alphabet, and Microsoft – all extended the official “useful life” of their servers from a 3- or 4-year standard to 6 years. This accounting change noticeably affects the income statement. The question is whether the extended lifecycle applies to the new economics of artificial intelligence.
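The following minimal Python sketch of straight-line depreciation (no salvage value) shows why a schedule change shows up on the income statement. The $12B fleet cost and the `annual_depreciation` helper are hypothetical illustrations, not reported figures. The same outlay is expensed at $4B a year over three years but only $2B a year over six. Reported operating income is therefore higher in each year of the longer schedule, even though total expense over the asset's life is identical.

```python
# Minimal sketch of straight-line depreciation (no salvage value).
# FLEET_COST is a hypothetical round number, not a reported figure.


def annual_depreciation(cost: float, useful_life_years: int) -> float:
    """Expense recognized in each year of the asset's useful life."""
    if useful_life_years <= 0:
        raise ValueError("useful life must be a positive number of years")
    return cost / useful_life_years


FLEET_COST = 12e9  # hypothetical server fleet cost, USD

for life in (3, 4, 6):
    expense = annual_depreciation(FLEET_COST, life)
    # Prints $4.0B, $3.0B and $2.0B per year respectively.
    print(f"{life}-year schedule: ${expense / 1e9:.1f}B per year")
```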

Fig. 1: Server useful life (depreciation schedule) for the “Big 3” hyperscalers. The coordinated shift from 3/4 years to 6 years is easy to see across time.

This trend isn’t limited to the “Big 3.” A new class of AI-focused “Neoclouds” has emerged. CoreWeave adopted the 6-year schedule from the start, while Nebius and Lambda Labs set their own, more conservative policies. This visual compares the 2025 landscape. It shows how 6 years has become the new standard for AI infrastructure, while the pure-play GPU clouds give us a glimpse of how the cycle may compress.

Fig. 2: 2025 server useful life comparison. The 6-year schedule dominates, while Nebius and Lambda Labs keep shorter, more conservative policies.

How can $50,000+ GPUs that go into servers that cost millions, in a field that innovates every 1-2 years, still be “useful” in year 5 or 6? The answer is the value cascade.

A GPU’s economic life extends far beyond its primary use. It “cascades” down from the most demanding tasks (training) to less demanding, but still highly profitable, tasks (inference).

- Primary economic life: The asset (e.g., NVIDIA GB200) is deployed for its most valuable, cutting-edge task.

- Secondary economic life: A new-generation GPU arrives, and the older GPU (GB200 / H100) “cascades” to high-end inference.

- Tertiary economic life: The asset (H100 / A100) is fully depreciated but still generates revenue from less-demanding tasks.

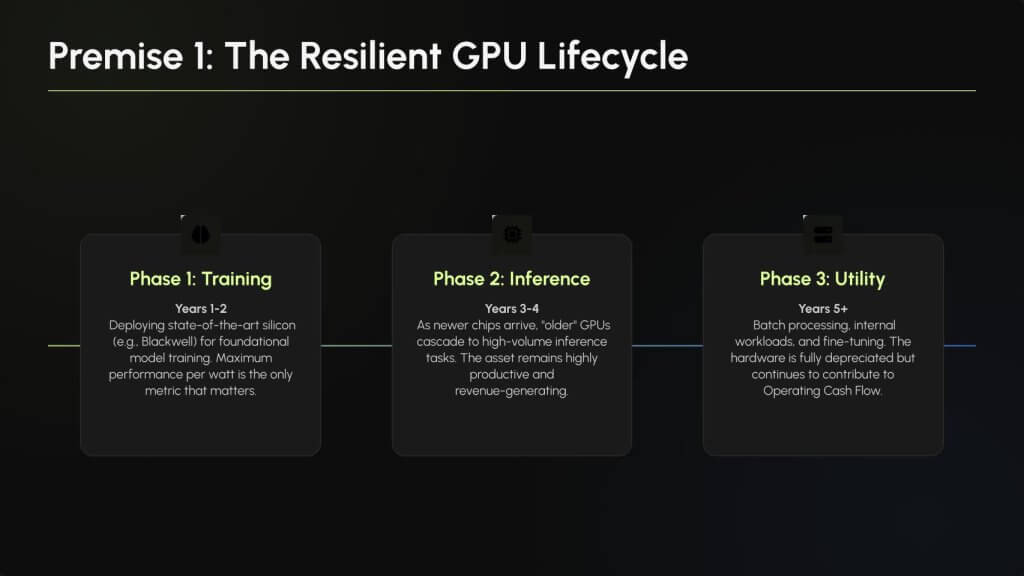

Figure 3: In our view, the idea that GPUs will have a useful life of only 2-3 years is incorrect. Today’s training infrastructure will support future workloads and applications such as inference.

The benefit of such a change to operating profit is meaningful, but perhaps not as dramatic as many investors fear.

For example, Amazon increased the estimated useful life of its servers in three stages: Jan ’20 (3 to 4), Jan ’22 (4 to 5), and most recently from 5 years to 6 years in early 2024. The most recent change added roughly $3.2 billion to its operating income in 2024. Over time this could grow, but so will the company’s operating profits. So we’re talking about perhaps a 10-12% tailwind: not game-changing, but noticeable.
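Amazon's staged changes apply to servers that are already in service. Under US GAAP, a change in useful life is a change in accounting estimate and is applied prospectively. The remaining net book value is spread over the new remaining life, and prior years are not restated. The Python sketch below assumes a single hypothetical $30B fleet that is two years into a five-year schedule, set against a hypothetical $15B of operating income. The helper names are illustrative, not taken from any filing.

```python
# Hypothetical sketch: extending useful life as a prospective change in
# accounting estimate. Remaining net book value is spread over the new
# remaining life; prior years are not restated. Figures are placeholders
# in billions of USD for a single vintage of servers.


def prospective_annual_depreciation(
    cost: float, old_life: int, years_elapsed: int, new_life: int
) -> float:
    """Straight-line expense per year after the useful-life change."""
    if not 0 <= years_elapsed < min(old_life, new_life):
        raise ValueError("change must occur before the asset is fully depreciated")
    accumulated = cost / old_life * years_elapsed
    net_book_value = cost - accumulated
    return net_book_value / (new_life - years_elapsed)


def tailwind_pct(old_expense: float, new_expense: float, operating_income: float) -> float:
    """Depreciation savings as a share of operating income."""
    return (old_expense - new_expense) / operating_income


COST, ELAPSED = 30.0, 2  # hypothetical fleet, two years into a five-year schedule
old = prospective_annual_depreciation(COST, 5, ELAPSED, 5)  # schedule unchanged
new = prospective_annual_depreciation(COST, 5, ELAPSED, 6)  # extended to six years
print(old, new)                               # 6.0 4.5
print(f"{tailwind_pct(old, new, 15.0):.0%}")  # 10% of a hypothetical $15B
```

A real fleet contains many vintages, each with its own remaining life, so the aggregate savings depend on the age mix of the estate.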


Figure 4: Extending server and GPU lifecycles creates measurable, multi-billion-dollar financial tailwinds. But at the scale of large cloud providers, the overall operating income impact is around 10% and not as dramatic as many believe.

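The Great Recalculation: The “6-Year Shift” (server useful life, years)

| Provider | 2017 | 2018 | 2019 | 2020 | 2021 | 2022 | 2023 | 2024 | 2025 |
|---|---|---|---|---|---|---|---|---|---|
| Amazon (AWS) | 3 | 3 | 3 | 4 | 4 | 5 | 5 | 6 | 6 |
| Alphabet (Google Cloud) | 4 | 4 | 4 | 4 | 4 | 4 | 6 | 6 | 6 |
| Microsoft (Azure) | 4 | 4 | 4 | 4 | 4 | 4 | 6 | 6 | 6 |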


Note from Amazon’s 2024 10-K: Depreciation and amortization is recorded on a straight-line basis over the estimated useful lives of the assets (generally the lesser of 40 years or the remaining life of the underlying building, four years prior to January 1, 2022 and five years subsequent to January 1, 2022 for our servers, five years prior to January 1, 2022 and six years subsequent to January 1, 2022 for our networking equipment, ten years for heavy equipment, and three to ten years for other fulfillment equipment). Depreciation and amortization expense is classified within the corresponding operating expense categories on our consolidated statements of operations.

Our infographic is misleading in one respect: AWS depreciates only its networking gear on a six-year cycle, and its servers are on a five-year schedule.

The “6-Year Shift”

What the infographic shows:

Figure 1 shows the depreciation schedules for AWS, Google Cloud, and Azure from 2017–2025. The chart highlights the coordinated progression from 3- and 4-year schedules to a uniform six-year useful life assumption beginning in 2023–2024.

Key takeaways:

- AWS was first to extend asset life, triggering a fast-follow response.

- By 2024, all three hyperscalers had normalized on six years. Note: For AWS, this holds for networking equipment, while its servers are on a five-year cycle. Microsoft’s 10-K is opaque and provides a range of 2-6 years for equipment, so we’ve landed on a six-year cycle for Microsoft.

- The extension noticeably lifts operating income by spreading depreciation over a longer horizon.

- The shift occurred before the massive wave of AI CAPEX, meaning AI infrastructure inherits but may eventually challenge this standard.

Our research suggests that this shift reflects the hyperscalers’ confidence in workload diversification. Even as hardware aged, demand for general compute, analytics, web services, and long-tail workloads sustained revenue generation across an asset’s lifecycle. The open question is whether AI GPU estates, which are more expensive, power constrained, and evolving faster, behave the same way.

The New Players Emerge

What the infographic shows:

Figure 2 shows a horizontal bar comparison of depreciation schedules across hyperscalers and AI-native neoclouds (CoreWeave, Nebius, Lambda Labs). The big clouds have settled on a five-to-six-year depreciation cycle, while Nebius and Lambda Labs take more conservative positions (five years for Lambda Labs, four years for Nebius).

Key takeaways:

- CoreWeave is the outlier, adopting an aggressive six-year posture despite an exclusively AI-intensive focus;

- Nebius and Lambda Labs use shorter cycles, reflecting faster modernization and possibly a less heterogeneous workload mix;

- Neocloud strategies indicate where AI pure-play economics may differ from general cloud economics.

In our view, Figure 2 is a harbinger of slight compression. AI-first clouds cannot afford stagnant infrastructure, because performance-per-watt gains in successive GPU generations directly determine competitiveness. As neoclouds scale, their four-to-five-year cycles will likely influence hyperscaler modeling, especially as accelerated computing consumes a larger share of CAPEX.

Will 5/6 Years Hold for AI? The GPU Value Cascade

What the infographic shows:

Figure 3 depicts a three-stage lifecycle framework:

- Years 1–2: Primary economic life to support foundational model training

- Years 3–4: Secondary life to support high-value real-time inference

- Years 5–6: Tertiary life to support batch inference & analytics workloads

Key takeaways:

- The value cascade structurally extends GPU usefulness, even as generations turn over rapidly;

- Training requires peak performance; inference can run profitably on less-than-peak silicon; batch/analytics workloads operate at the long tail;

- This is analogous to the server repurposing that justified past depreciation extensions.

We believe this framework supports longer useful-life assumptions than the 2-3 years many have stated. Silicon doesn’t die at the end of its training usefulness. Instead, it transitions to less demanding, but still revenue-generating, tasks. This is the essence of token revenue maximization in the AI factory era.
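The stage boundaries in Figure 3 can be expressed as a small lookup. This Python sketch is hypothetical. The stage names and year ranges follow the framework above, but the per-year revenue index (100 / 60 / 25) is a placeholder, not measured data, and the helper names are illustrative. The sketch also shows the arithmetic behind the “2–3x” claim: a two-year primary window within a five- or six-year economic life gives a 2.5x to 3x multiple.

```python
# Hypothetical sketch of the three-stage "value cascade" in Figure 3.
# Stage year ranges follow the framework; revenue values are placeholders.
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class Stage:
    name: str
    first_year: int
    last_year: int
    workload: str


CASCADE = (
    Stage("primary", 1, 2, "foundation model training"),
    Stage("secondary", 3, 4, "real-time inference"),
    Stage("tertiary", 5, 6, "batch inference & analytics"),
)


def stage_for_year(year: int) -> Stage:
    """Return the cascade stage a GPU occupies in a given year of service."""
    for stage in CASCADE:
        if stage.first_year <= year <= stage.last_year:
            return stage
    raise ValueError(f"year {year} is outside the modeled six-year cascade")


def lifetime_revenue(revenue_index: dict[str, float], years: int) -> float:
    """Sum a per-year revenue index over `years` of service, stage by stage."""
    return sum(revenue_index[stage_for_year(y).name] for y in range(1, years + 1))


def life_multiple(economic_life_years: int) -> float:
    """Ratio of total economic life to the primary (training) window."""
    primary = CASCADE[0]
    primary_years = primary.last_year - primary.first_year + 1
    return economic_life_years / primary_years


# Hypothetical revenue index per GPU-year in each stage (primary = 100).
REVENUE_INDEX = {"primary": 100.0, "secondary": 60.0, "tertiary": 25.0}

print(stage_for_year(4).workload)          # real-time inference
print(lifetime_revenue(REVENUE_INDEX, 6))  # 370.0
print(life_multiple(5), life_multiple(6))  # 2.5 3.0
```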

The Financial Impact

What the infographic shows:

Figure 4 shows three tiles summarizing impact:

- 5 or 6 years as the emerging standard

- 2–3x extended life from cascading

- $7B annual impact to operating income (an example using approximate figures from a hyperscaler).

Key takeaways:

- Extending useful life does lift GAAP profitability for hyperscalers;

- GPUs, however, may have longer economic tails than today’s CPUs because demand for inference and internal workloads is insatiable, though perhaps not as long as pre-GenAI CPU cycles;

- The financial leverage from longer depreciation schedules will become even more pronounced as GPU CAPEX scales into the trillions.

Our analysis suggests that AI factories magnify the issue, but not to the extent many in the media have projected. A one-year change in useful-life assumptions for trillion-dollar GPU estates can swing operating income by tens of billions of dollars. In the grand scheme of hyperscaler operating profits, however, it’s not game-changing. This dynamic will become a recurring narrative in earnings calls and investor guidance. As such, investors should look at operating and free cash flows to get a better sense of business performance.
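The “tens of billions” arithmetic can be made explicit with a worked equation. It assumes straight-line depreciation with no salvage value and an estate of total cost C fully in service. That is a simplification, since real estates mix vintages. Moving from a five-year to a six-year life changes annual depreciation by:

$$
\Delta D = \frac{C}{5} - \frac{C}{6} = \frac{C}{30}, \qquad C = \$1\,\text{trillion} \;\Rightarrow\; \Delta D \approx \$33\,\text{billion per year}
$$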

The following section summarizes our thinking on this issue:

Premise 1: GPUs Have Resilient Lifecycles

Key takeaways:

- Training is a short window (1–2 years) where only the newest silicon is competitive;

- Inference demand is exploding and absorbs “last-gen” GPUs for years 3–4;

- Utility workloads (internal processing, fine-tuning, retrieval augmentation) extend asset life to year 5 and potentially beyond.

We believe the proliferation of agentic applications, retrieval workflows, automation assistants, and fine-tuning pipelines will expand the useful life of GPUs beyond the training phase. This reinforces the durability of extended economic cycles.

Premise 2: Neoclouds Hint at Slightly Shorter Lifespans for Hyperscalers

Key takeaways:

- AI-native clouds optimize for modernization speed over accounting benefits;

- A 5-year midpoint appears to be the emerging equilibrium for AI-centric providers;

- Accelerated computing is changing the shape of budgets – shorter depreciation aligns better with faster GPU innovation cycles.

Our research indicates that this five-year midpoint is where hyperscalers will ultimately converge as accelerated infrastructure dominates CAPEX. While general compute has historically survived at six years, AI factories operate under different competitive pressures. Moreover, the useful life of legacy CPU technologies may compress as they become less relevant. But on balance, we don’t see a dramatic alteration of the income statement as a result of AI.

Premise 3: Cash Flow Becomes the More Meaningful KPI

Key takeaways:

- High depreciation masks the true economic performance of AI factories;

- Operating cash flow (OCF) adds back depreciation and reveals that GPU estates generate strong cash even when earnings appear compressed;

- As CAPEX rises, the divergence between earnings and cash flow will widen.

In our opinion, investors must shift their valuation frameworks. Operating cash flow will become an increasingly important KPI relative to non-GAAP and GAAP income. OCF will be a primary indicator of AI factory health, sustainability, and ROI timelines. This becomes crucial as depreciation cycles compress.
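The simplified Python sketch below shows why OCF is insensitive to the depreciation schedule. Depreciation is a non-cash charge, so adding it back to operating income yields the same cash figure under a five- or six-year life. The sketch ignores taxes, working capital, and capex timing. The `Period` dataclass, the helper names, and all figures are hypothetical.

```python
# Simplified sketch: the depreciation schedule moves reported operating
# income but not the cash the business generates. Ignores taxes, working
# capital and capex timing; all figures are hypothetical (billions of USD).
from dataclasses import dataclass


@dataclass(frozen=True)
class Period:
    revenue: float
    cash_opex: float
    gpu_capex: float  # cost of the GPU estate being depreciated


def operating_income(p: Period, useful_life: int) -> float:
    """Revenue minus cash operating costs minus straight-line depreciation."""
    return p.revenue - p.cash_opex - p.gpu_capex / useful_life


def operating_cash_flow(p: Period, useful_life: int) -> float:
    """Simplified indirect method: add non-cash depreciation back."""
    return operating_income(p, useful_life) + p.gpu_capex / useful_life


period = Period(revenue=100.0, cash_opex=30.0, gpu_capex=300.0)
for life in (5, 6):
    print(life, operating_income(period, life), operating_cash_flow(period, life))
# 5 10.0 70.0
# 6 20.0 70.0
```

Operating income doubles from 10 to 20 when the schedule moves from five to six years, while OCF stays at 70 in both cases. That gap is what a cash-flow lens sees through.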

Synthesis of the Premises

Key takeaways:

- GPUs are durable economic assets, not short-lived commodities;

- A five-year cycle is emerging as the balance point between modernization and economic efficiency;

- Investors must evaluate AI factories through a cash-flow lens rather than earnings alone.

The above graphic frames the strategic direction of the industry, which will likely combine extended economic utility with slightly shorter accounting lives. That combination reflects both technological realities and revenue-per-token expectations.

Conclusion

Our research indicates that the useful life of GPUs will continue to benefit from the “value cascade,” allowing assets to generate revenue well beyond their initial training window. At the same time, the arrival of new architectures every 12–18 months will put pressure on formal depreciation schedules. We believe hyperscalers will ultimately converge on a five-year cycle – shorter than today’s six-year model but still supported by extended economic usefulness.

In the AI factory era, depreciation becomes a lever. The winners will be operators who maximize the utility curve of GPUs across training, inference, and internal workloads while maintaining access to compute, land, water, power, and the skills to build AI factories. As CAPEX scales into the trillions, cash flow will increasingly be the metric investors watch.