AI Engine Optimization (AEO) is rapidly becoming one of the most important capabilities for modern go-to-market teams. As buyers shift from traditional search to “zero-click” AI-powered discovery, large language models (LLMs) increasingly determine which companies, products, and experts appear in answers, recommendations, and shortlists.

This guide explains how LLMs learn, rank, and recommend brands, and outlines the four-layer, 19-attribute AEO framework we use in our research to evaluate visibility, authority, and trust inside AI engines. Whether you are a CMO, communications leader, or AI strategist, this is your cornerstone resource for understanding how to build brand equity inside LLMs, boost answer inclusion, and improve discoverability across ChatGPT, Gemini, Claude, Perplexity, and other AI systems.

Importantly, it also helps answer the question business leaders are now asking everywhere:

“Why is our brand not showing up in AI answers?”

And, more urgently:

“How do we get ChatGPT, Grok, Claude, Gemini, and other AI assistants to recognize and recommend our company, products, and experts in relevant responses?”

What is AI Engine Optimization (AEO)?

AI Engine Optimization (AEO) is the discipline of improving a brand’s visibility, authority, and inclusion within AI-generated answers. It focuses on how large language models (LLMs) learn about entities, evaluate trust signals, retrieve information, and surface recommendations within natural language responses. AEO extends beyond traditional SEO by optimizing for how AI systems reason, cite, validate, and recommend, rather than for how search engines rank webpages.

This guide on AEO builds on theCUBE Research’s publications, Why Brands Matter in the Era of AI Discovery and The New Rules of Brand Visibility, by expanding on how AI discovery actually works and how you can tune it to your brand’s benefit.

Our focus is to provide a comprehensive overview of how generative AI assistants powered by large language models (LLMs) discover, evaluate, and prioritize brands in their responses and buyer interactions. By understanding how these systems operate, you’ll gain actionable insights to increase your brand’s chances of not only being discovered but also appearing in relevant answers.

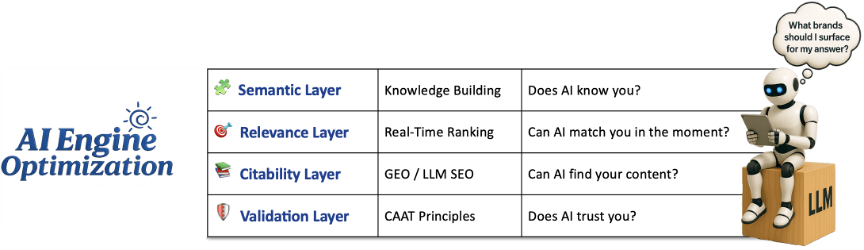

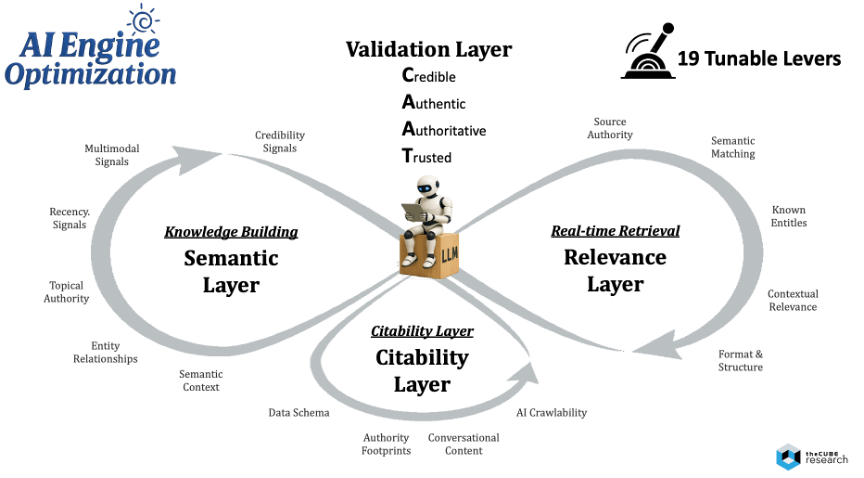

We’ll begin with an overview of the shift from Search Engine Optimization (SEO) to AI Engine Optimization (AEO), followed by an examination of 19 key attributes across four layers that your organization can measure and tune to improve visibility and influence:

- The Semantic Layer: Knowledge Building (what AI knows)

- The Relevance Layer: Real-time Retrieval (what AI matches)

- The Citability Layer: GEO & LLM SEO (what AI can find)

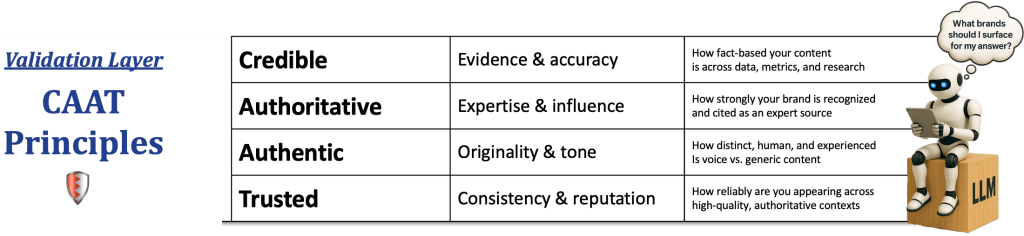

- The Validation Layer: CAAT Principles (what AI trusts)

Together, these four layers represent the cyclical information-processing loop of modern LLMs, from learning and retrieval to citation and validation, defining how AI systems decide which brands to recognize, trust, and surface in their answers.

This paper also offers an actionable framework for optimizing the 19 attributes to boost your chances of being referenced, cited, and trusted in AI-generated responses.

Why AEO Matters Now

A newly released Kickstand and Pavilion study of more than 600 marketing professionals captures the urgency of the moment:

- 38% have already seen web traffic decline, even among brands with strong equity.

- 46% believe LLMs will replace traditional search within the next few years.

- 67% are increasing investment in brand-building, signaling that discoverability and trust are now core marketing priorities.

Yet despite this awareness, 77% admit they lack a clear AI discovery strategy, and only three in ten feel equipped to optimize for AI-driven visibility.

This capability gap is widening as the market shifts from SEO to AEO. This shift marks the point at which AI discovery engines, rather than search engines, influence how buyers select brands.

As Stas Levitan, CEO of LightSite AI, has said,

“In AI search, discovery happens inside the answer itself. You no longer win clicks, you win inclusion and trust inside AI-generated answers.”

In this evolving landscape, large language models no longer return lists of links; they interpret and recommend. They learn a brand’s relevance, credibility, and differentiation by continuously analyzing signals across the digital ecosystem. In doing so, they are redefining what it means to build brand equity, based on how clearly you communicate and reinforce:

- Your unique story

- Your purpose and direction

- Your differentiated value

- Your credibility and proof

These fundamentals are now easily measurable in the age of AI discovery. LLMs recognize and reward brands that authentically express these qualities, highlighting them more often, citing them more confidently, and influencing real buyer decisions in the process.

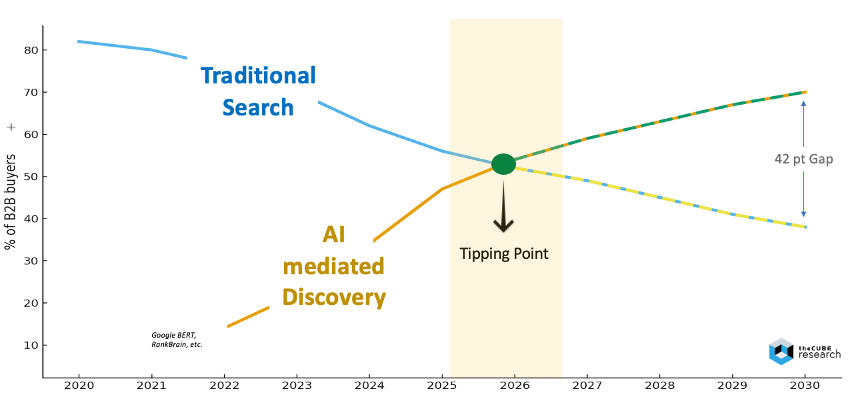

As shown below, the time to act is now. AI-mediated discovery is quickly surpassing search as the main route for B2B buyers. By 2026, most buyers will prefer AI assistants over search engines for early research and vendor comparisons. By 2030, AI-mediated discovery is expected to account for 70–80% of B2B software research, resulting in a 42-point visibility gap versus SEO.

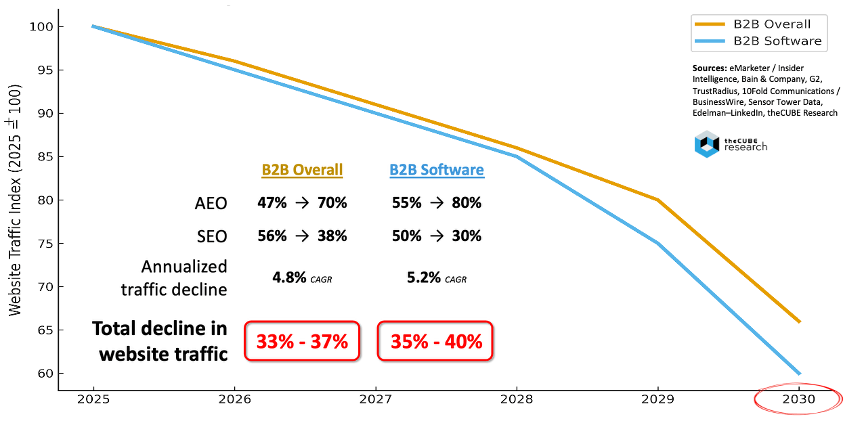

At the same time, B2B website traffic is experiencing a structural decline. Data from eMarketer, G2, Edelman–LinkedIn, and theCUBE Research points to a 33–40% drop in traffic across the B2B market between 2025 and 2030, led by B2B software.

This decline is driven by zero-click behavior: AI systems generate answers directly, so buyers bypass websites entirely. Traditional SEO strategies are losing visibility and control to AI engines that decide which brands to feature in their synthesized responses.

The Bottom Line: If your brand isn’t visible to AI, it may become invisible to your buyers.

The Four-Layer AEO Framework

The emerging capability gap has led to a new marketing field called AI Engine Optimization (AEO), the craft and science of ensuring AI engines recognize, trust, and promote your brand in the responses they generate.

If traditional SEO focuses on optimizing content for search engines, AEO focuses on optimizing your brand for AI engines: the large language models (LLMs) that power assistants like ChatGPT, Grok, Gemini, Perplexity, and Claude. Where SEO rewards technical precision (keywords, backlinks, click-throughs), AEO rewards semantic understanding, relevance, citability, and validation. Search engines rank pages; AI engines curate insights and synthesize meaning.

Put simply, SEO helps people find you; AEO helps AI understand and recommend you.

This marks a profound shift in how visibility, trust, and demand are created, as AEO sits at the intersection of brand strategy, content science, and AI reasoning.

It is both analytical (grounded in data, model retrieval behavior, and validation) and creative (rooted in storytelling, credibility, and brand authenticity).

How LLMs Learn, Rank, and Recommend Brands

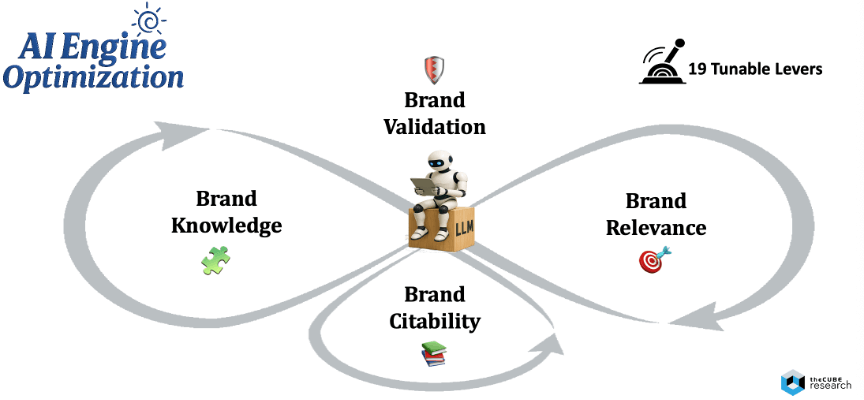

As shown in the diagram above, AI Engine Optimization builds brand equity through four interconnected layers that form a continuous loop of learning and reasoning:

- Brand Semantics (Knowledge): How deeply your brand is embedded in AI’s semantic memory.

- Brand Relevance: How effectively your content appears in live AI responses.

- Brand Citability: How accessible and citable your content is across the digital ecosystem.

- Brand Validation: How credible, authentic, authoritative, and trusted you are.

Together, these layers form the foundation of the AEO Framework, which we will explore in the following sections. Each acts as a tunable lever, a set of measurable, adjustable dimensions that can be optimized and continually improved to boost your brand’s presence and influence across AI-mediated discovery and buyer journeys.

If your brand isn’t appearing today, it’s not because LLMs have overlooked you; it’s because the discipline of AI Engine Optimization hasn’t yet been applied.

The Semantic Layer

The first step in understanding whether AI chooses your brand in its answers is to determine how deeply your organization is embedded in a model’s long-term knowledge, its semantic memory. Before an AI assistant can recommend you, it must first understand who you are, what you do, where your authority comes from, and why you matter.

This foundational layer forms during the training and knowledge-building phase of large language models (LLMs). The models behind ChatGPT, Claude, Grok, and Gemini ingest trillions of words from diverse datasets, including news articles, blogs, trade publications, research papers, videos, transcripts, and the public web. Within these sources are countless brand mentions, executive names, product references, and customer stories that shape how models understand the business world.

To interpret this ocean of data, LLMs learn to recognize and link entities, identifying and connecting companies, products, industries, and people. These relationships are encoded as vector representations (embeddings) that capture how concepts relate across different contexts, in effect forming a vectorized semantic network. Think of it as a digital mental map, an evolving web of relationships that reveals how your brand fits into discussions about AI infrastructure, data governance, or any domain you operate in.

These are not static databases, but dynamic networks of meaning. Every mention, citation, or co-occurrence either strengthens or weakens the connection between your brand and a topic. For example, if your company often appears in reputable discussions about AI compliance automation in FinTech, the model starts linking your brand not just to that term but also to related concepts like RegTech, banking modernization, and agentic workflows.

Over time, these connections build semantic context and topical authority, expanding your brand’s semantic surface area, the number of conceptual pathways that guide an AI system back to you during question-answering or summarization. The more your content is determined to be credible, authentic, authoritative, and trustworthy, and the more consistently high-quality signals are present, the greater your visibility becomes.
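The idea of semantic surface area can be made concrete with a toy model. This is an analogy only: LLMs encode these associations diffusely in learned weights, not in an explicit table you can query. The sketch assumes a hypothetical corpus in which each document has already been reduced to the set of entities and topics it mentions, and it treats each topic that co-occurs with the brand at least `min_mentions` times as one “conceptual pathway.” The brand name Acme, the corpus, and the function names are illustrative.

```python
from collections import Counter

def topic_cooccurrence(docs: list[set[str]], brand: str) -> Counter:
    """Count how often each other term appears in the same document as `brand`."""
    counts: Counter = Counter()
    for terms in docs:
        if brand in terms:
            counts.update(t for t in terms if t != brand)
    return counts

def semantic_surface_area(docs: list[set[str]], brand: str, min_mentions: int = 2) -> list[str]:
    """Topics linked to `brand` by at least `min_mentions` co-occurrences (toy 'pathways')."""
    counts = topic_cooccurrence(docs, brand)
    return sorted(t for t, n in counts.items() if n >= min_mentions)

# Hypothetical corpus: each document reduced to the entities/topics it mentions.
corpus = [
    {"Acme", "AI compliance automation", "FinTech"},
    {"Acme", "AI compliance automation", "RegTech"},
    {"Acme", "RegTech", "banking modernization"},
    {"Acme", "agentic workflows"},
    {"OtherCo", "FinTech"},
]

print(semantic_surface_area(corpus, "Acme"))
```

With `min_mentions=2`, Acme has two pathways (AI compliance automation and RegTech); each additional credible mention alongside an adjacent topic, such as FinTech, would push another topic over the threshold, which is the intuition behind expanding surface area.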

For CMOs and communications leaders, the takeaway is clear: AI doesn’t just read your content; it learns your story. Every citation, analyst mention, or transcribed interview influences how models interpret your expertise and relevance, directly affecting whether you appear when AI generates a response.

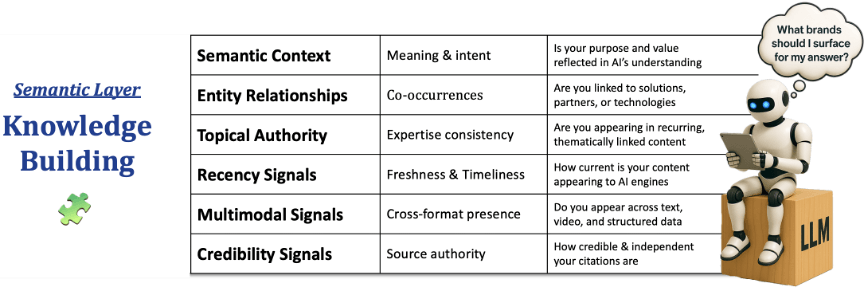

To increase your odds of inclusion, it’s essential to understand the six key attributes that influence this part of the answer-generating process. These aren’t just abstract ideas; they’re actionable levers that affect how deeply your brand is embedded in an LLM’s memory. Each can be assessed through a straightforward SWOT analysis to identify strengths, weaknesses, opportunities, and threats, then adjusted to enhance discoverability.

The knowledge-building process works like this:

- Creates Semantic Context: LLMs don’t just recognize your brand’s name; they interpret the meaning behind where and how it appears. Frequent citation in discussions like “AI compliance automation in fintech” signals not just what you do, but where your expertise matters.

- Establishes Entity Relationships: LLMs connect your brand to industries, partners, and technologies. Frequent co-occurrence with concepts like Agentic AI architectures, quantified success stories, or brands such as Salesforce embeds you in their semantic ecosystem.

- Forms Topical Authority: LLMs track where your content consistently appears, clustering related topics to infer expertise. Repeated citations in media coverage or analyst research reinforce your authority and increase inclusion in AI answers.

- Prioritizes Recency Signals: Models factor in temporal relevance. Fresh, credible content keeps your brand top-of-mind, aligning it with evolving narratives, trends, and ongoing thought leadership.

- Factors Multimodal Signals: Modern LLMs learn from multiple formats, such as text, video, transcripts, podcasts, and images. Visibility improves when your brand appears consistently across these mediums in structured, AI-readable formats.

- Weights Credibility Signals: Source quality matters as much as frequency. Mentions in independent research, reputable media, or third-party discussions carry far more weight than self-published content.

Together, these six attributes play a significant role in determining whether your brand is found, trusted, and chosen in AI-mediated discovery. The richer and more authoritative your connections within the LLM’s knowledge model, the larger your semantic surface area, and the greater your chances of being recalled in AI-generated responses.

However, this foundational layer is just the beginning. The next phase, real-time retrieval and ranking, decides whether your brand remains current and relevant when AI engines fetch live information to answer today’s questions.

The Relevance Layer

While training and learning processes give LLMs their foundational knowledge, real-time retrieval and ranking keep them up to date, constantly fetching fresh, authoritative content to produce timely, accurate, and trustworthy answers.

To appear in these responses, your brand must continuously publish credible, AI-readable content across the web. Real-time retrieval systems, often powered by Retrieval-Augmented Generation (RAG), allow AI assistants and LLMs to look beyond their internal knowledge and fetch relevant information from trusted sources before forming an answer. This process determines which brands, voices, and insights are relevant at the exact moment a buyer searches for solutions.

When a user asks a question, the model:

- Interprets the query’s intent.

- Retrieves semantically related documents from high-authority sources.

- Ranks them by relevance, freshness, and credibility.

- Blends retrieved facts with its internal knowledge to produce an answer.
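The four retrieval steps above can be sketched as a small pipeline. This is a simplified illustration under stated assumptions, not how any particular assistant works: a bag-of-words vector stands in for the dense embeddings production systems use, freshness decays exponentially with a hypothetical 180-day half-life, each source’s credibility is a hypothetical 0–1 score supplied by hand, and the blend weights are arbitrary. Intent interpretation and the final generation call are omitted; the hypothetical `build_prompt` function stops at the grounded prompt that would be handed to the model.

```python
import math
import re
from collections import Counter
from dataclasses import dataclass

@dataclass
class Doc:
    source: str
    text: str
    age_days: float     # days since publication
    credibility: float  # 0..1, hypothetical source-authority score

def vectorize(text: str) -> Counter:
    """Bag-of-words vector; a stand-in for the dense embeddings real systems use."""
    return Counter(re.findall(r"[a-z]+", text.lower()))

def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse term-count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0

def score(query: str, doc: Doc, half_life_days: float = 180.0,
          weights: tuple[float, float, float] = (0.6, 0.2, 0.2)) -> float:
    """Blend relevance, freshness, and credibility using hypothetical weights."""
    relevance = cosine(vectorize(query), vectorize(doc.text))
    freshness = 0.5 ** (doc.age_days / half_life_days)  # exponential decay by age
    w_rel, w_fresh, w_cred = weights
    return w_rel * relevance + w_fresh * freshness + w_cred * doc.credibility

def build_prompt(query: str, docs: list[Doc], k: int = 2) -> str:
    """Retrieve and rank, then place the top-k snippets in the prompt as grounding context."""
    ranked = sorted(docs, key=lambda d: score(query, d), reverse=True)[:k]
    context = "\n".join(f"[{d.source}] {d.text}" for d in ranked)
    return f"Answer using the sources below.\n{context}\n\nQuestion: {query}"

docs = [
    Doc("analyst-report", "AI governance best practices for banking and model risk", 30, 0.9),
    Doc("vendor-blog", "Our AI platform is the best platform", 10, 0.3),
    Doc("old-news", "AI governance in banking regulation overview", 900, 0.8),
]
print(build_prompt("What are best practices for AI governance in banking?", docs))
```

In this toy run, the credible analyst report ranks first, and the older but credible news item still outranks the fresh promotional post, mirroring the point that source quality can matter as much as recency.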

Variants such as GraphRAG (which maps entity relationships to improve context), HyDE (Hypothetical Document Embeddings, which drafts a hypothetical answer and uses it to find semantically similar real documents), and Agentic RAG (which allows AI agents to query and cross-verify multiple sources) are rapidly advancing this capability. To stay effective over the long term, you must keep up with these advancements.

For example, HyDE improves relevance by focusing on meaning rather than phrasing, which increases accuracy for complex, ambiguous, or multi-concept queries.
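HyDE’s core move can be shown in a few lines: instead of embedding the user’s question, an LLM first drafts a hypothetical answer, and that draft is embedded and used to search for real documents. In the sketch below, `generate_hypothetical_answer` is a hypothetical stub standing in for the LLM call, and a bag-of-words vector stands in for a dense embedding model, so the gain here comes from shared vocabulary rather than learned meaning.

```python
import math
import re
from collections import Counter

def vectorize(text: str) -> Counter:
    """Toy bag-of-words 'embedding'; real HyDE uses a dense embedding model."""
    return Counter(re.findall(r"[a-z]+", text.lower()))

def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse term-count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0

def generate_hypothetical_answer(query: str) -> str:
    """Hypothetical stub for an LLM call that drafts a plausible answer to `query`."""
    return ("Banks use model risk management and AI governance frameworks "
            "to monitor models in banking")

query = "How do banks keep AI models in check?"
document = "Model risk management and governance for AI in banking"

direct = cosine(vectorize(query), vectorize(document))                              # standard retrieval
hyde = cosine(vectorize(generate_hypothetical_answer(query)), vectorize(document))  # HyDE-style retrieval
print(f"query vs doc: {direct:.2f}, hypothetical answer vs doc: {hyde:.2f}")
```

Because the drafted answer uses the same domain vocabulary as the document (model risk management, governance, banking), it scores noticeably closer to the document than the terse original question does.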

Despite these innovations, the core principle remains the same: only brands with discoverable, credible, and well-structured content are likely to appear in AI-generated answers. That means publishing context-rich material that explains problems, outcomes, and use cases in natural, conversational language.

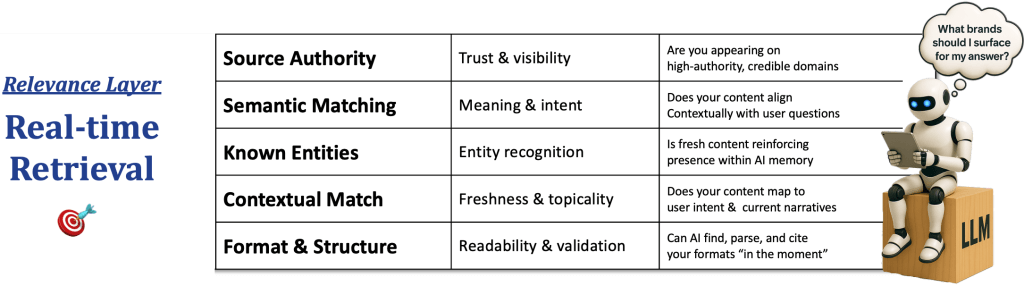

To make this actionable, it’s critical to understand the five core attributes that govern the real-time retrieval and ranking process. The process cycles through the following phases:

- Prioritizes Source Authority: AI assistants are source-aware. If your content isn’t on high-authority platforms, it’s unlikely to be prioritized. Systems favor trusted sites like Forbes, TechCrunch, G2, and SiliconANGLE, along with independent research and analyst sources. Being cited or featured on these outlets greatly increases visibility.

- Performs Semantic Matching: Modern LLMs focus on meaning rather than keywords. When users ask, “What are best practices for AI governance in banking?”, the system retrieves content semantically related to the topic. Content written in conversational, human-like language is much more likely to be matched and surfaced.

- Reinforces Known Entities: Retrieved content is cross-checked against the model’s internal knowledge. If your brand is already recognized as an entity, new content amplifies your visibility. Without that entity footprint, even strong material may fail to connect. Discoverability is an ongoing process, not a one-time event.

- Scores Contextual Match: Once candidates are retrieved, AI ranks them based on recency, authority, and topical relevance. Content that is timely or evergreen, high-quality, and consistently aligned with user intent ranks highest.

- Evaluates Format & Structure: Finally, the AI evaluates whether your content is easy to understand and well-organized enough to quote. Proper metadata, schema markup, and conversational formats (e.g., interviews, Q&As, podcasts, and explainers) help make retrieval simpler. Content that is credible, authoritative, authentic, and trusted is given higher priority, while vague or promotional material is ranked lower.

These attributes highlight a key success factor: visibility in the AI era isn’t static; it’s a living process. Maintaining brand presence requires a steady cadence of AI-ready, structured, high-authority content published across trusted platforms.

Together, Knowledge Building and Real-Time Retrieval operate as the dual engines of AI-driven brand visibility:

- Knowledge Building embeds your brand in long-term memory.

- Real-Time Retrieval keeps it fresh and discoverable.

- These layers work in unison to amplify each other’s impact.

Yet even with progress on these layers, visibility in the AI era isn’t guaranteed: if AI can’t find, parse, and cite you, it can’t recommend you.

The Citability Layer

While training and retrieval determine what AI knows and retrieves about your brand, Generative Engine Optimization (GEO) and LLM SEO determine how easily AI systems can access and rank that information. These practices create the tactical layer where marketers convert strategy into structure, making sure your digital presence is machine-readable, citable, and accessible to the AI systems that continually crawl, parse, and learn from the open web.

As Stas Levitan has stressed,

“Websites today are built for humans, not for machines. Most brands don’t realize their sites are actually blocking large language models from understanding their content.”

While traditional SEO chased rankings and clicks, GEO and LLM SEO chase citability and inclusion, assessing how likely your content is to be synthesized, quoted, or recommended in zero-click AI answers.

Think of GEO and LLM SEO as two sides of the same coin:

- GEO focuses on external visibility: how your brand is discovered, cited, and recommended by AI-powered answer engines. This determines who wins the ANSWER, the moment of truth (what AI retrieves and cites).

- LLM SEO focuses on internal comprehension: how LLMs ingest, interpret, and retain your brand within their semantic memory and retrieval systems. This determines who wins the MODEL, serving as the foundation for long-term, repeat recall (what AI knows about you).

Together, they make your brand both visible and verifiable. Your brand can exist in one, both, or neither of these layers, and that difference has real marketing consequences:

- In the answer but not in the model: fleeting exposure without lasting trust or association.

- In the model but not in the answer: strong authority, weak visibility. Known, but not surfaced.

- In both, the ideal state: your brand becomes part of AI’s reasoning process, making it more likely to be consistently cited, reinforced, and recommended.

For example, if someone asks ChatGPT, “Who are the leading Agentic AI vendors?” and your brand appears, you’re in the MODEL (LLM SEO). If they ask, “What are best practices for agentic AI in banking?” and your blog post is cited, you’re in the ANSWER (GEO).

The payoff: Brands in both don’t just show up; they shape the conversation, transforming your brand from being found to being preferred.

When executed well, GEO and LLM SEO make every part of your brand’s digital surface, including your website and trusted third-party domains, AI-friendly, discoverable, and rankable.

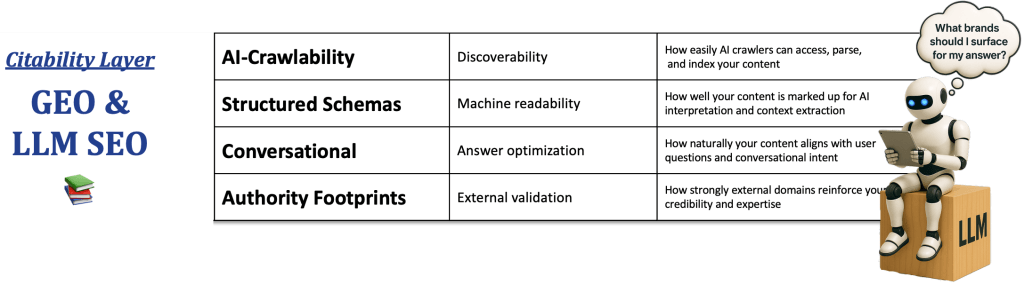

There are four core actionable levers of GEO / LLM SEO:

- Make Your Site AI-Crawlable: Crawlability is the foundation of discoverability. AI crawlers like GPTBot (OpenAI) and ClaudeBot (Anthropic) require open access. Update your robots.txt file to allow trusted AI bots, submit XML sitemaps, fix broken links, and improve mobile speed.

- Add Structured Schema Data: Schema markup transforms your site into a machine-readable data source. Implement JSON-LD schemas (FAQs, Articles, Products, Organizations) so AI can quickly interpret authorship, relevance, and relationships.

- Deploy Conversational Content: Content that reads like an answer is more likely to be accepted. Structure pages in Q&A format, include concise TL;DR summaries, and cite credible external sources.

- Build Authority Footprints: External validation strengthens trust signals. Obtain analyst coverage, customer reviews, backlinks, and listings on high-authority sites. A consistent brand identity across platforms helps LLMs link your digital footprint to your expertise.
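One way to verify the first lever is to test your robots.txt against the crawler user-agent tokens you care about. The sketch below uses Python’s standard-library `urllib.robotparser` with a hypothetical robots.txt that admits GPTBot and ClaudeBot (minus one private path) and blocks all other crawlers. The rules, the `crawl_report` helper, and the example.com URLs are assumptions; each AI vendor’s own crawler documentation is the authority on current token names.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt: admit OpenAI's and Anthropic's crawlers except /internal/,
# and block every other crawler.
ROBOTS_TXT = """\
User-agent: GPTBot
Disallow: /internal/

User-agent: ClaudeBot
Disallow: /internal/

User-agent: *
Disallow: /
"""

def crawl_report(robots_txt: str, url: str, agents: list[str]) -> dict[str, bool]:
    """Return, for each user agent, whether robots.txt permits fetching `url`."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return {agent: parser.can_fetch(agent, url) for agent in agents}

print(crawl_report(ROBOTS_TXT, "https://example.com/blog/ai-governance",
                   ["GPTBot", "ClaudeBot", "SomeOtherBot"]))
```

Python’s parser applies the first matching rule within a user-agent group, while RFC 9309 tells crawlers to use the most specific (longest) match, so rules that mix `Allow` and `Disallow` on overlapping paths can be interpreted differently by different tools. Keeping rules non-overlapping, as here, avoids that ambiguity.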

For all of these, you’ll need to monitor your site’s performance and AI readiness. Track Core Web Vitals in your dashboards, and make sure your domain passes the AI crawlability and schema validation tests now available in the market from vendors such as LightSite AI. As Stas Levitan, CEO of LightSite AI, has found:

“It’s critical to use proper data structures in schemas, JSON, etc., not just regular HTML. About 90% of websites are missing it and lowering AI readability.”
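As a concrete illustration of structured data, the sketch below generates two common schema.org JSON-LD blocks, an `Organization` entity and an `FAQPage`, using only Python’s `json` module. The brand, URLs, answer text, and helper function names are hypothetical; the `@type` values and properties (`sameAs`, `mainEntity`, `acceptedAnswer`) are standard schema.org vocabulary. The output goes into the page’s HTML, and a validator such as the Schema.org validator can confirm it parses.

```python
import json

def organization_jsonld(name: str, url: str, same_as: list[str]) -> dict:
    """schema.org Organization: ties the brand name to its official site and profiles."""
    return {
        "@context": "https://schema.org",
        "@type": "Organization",
        "name": name,
        "url": url,
        "sameAs": same_as,  # consistent identity across platforms
    }

def faq_jsonld(pairs: list[tuple[str, str]]) -> dict:
    """schema.org FAQPage built from (question, answer) pairs."""
    return {
        "@context": "https://schema.org",
        "@type": "FAQPage",
        "mainEntity": [
            {
                "@type": "Question",
                "name": question,
                "acceptedAnswer": {"@type": "Answer", "text": answer},
            }
            for question, answer in pairs
        ],
    }

def script_tag(data: dict) -> str:
    """Wrap JSON-LD in the <script> element that is embedded in the page's HTML."""
    return f'<script type="application/ld+json">\n{json.dumps(data, indent=2)}\n</script>'

# Hypothetical brand and content, for illustration only.
org = organization_jsonld("Acme Analytics", "https://www.example.com",
                          ["https://www.linkedin.com/company/example"])
faq = faq_jsonld([("What does Acme Analytics do?",
                   "Acme Analytics automates AI compliance reporting for banks.")])
print(script_tag(org))
print(script_tag(faq))
```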

As AI engines evolve, new techniques are emerging, such as query-intent mapping, multimodal metadata, and entity consistency management. These next-generation approaches reward brands that publish structured, evidence-based, and rich content in context, advancing discovery from mere correlation to explanation. As with other layers, the “science” part of the discipline is evolving rapidly, so staying current is essential.

The key is maintaining both technical readiness (site architecture and schema) and semantic relevance (content that matches how people actually ask questions). Organizations that follow these practices often see a 2–5× increase in AI citations within months, establishing themselves as trusted, preferred sources.

To sum up, GEO and LLM SEO extend the AI Engine Visibility Loop into the space where marketers have the most direct influence. They make sure that your storytelling, credibility, and content investments aren’t lost in outdated web frameworks, but are instead machine-readable and easily retrievable when AI engines seek answers.

Across all the layers explored so far (embedding your brand in AI’s knowledge, keeping it fresh and relevant, and enhancing citability), one set of principles ultimately decides whether those efforts succeed:

The signals that confirm your brand’s credibility, authenticity, authority, and trust.

The Validation Layer

To maximize the impact of all AEO practices, every piece of content you produce and every signal you send must align with what theCUBE Research refers to as the CAAT Principles. These principles represent the four core attributes that large language models focus on when ranking and selecting brand information. Derived from the conceptual foundation of Google’s E-E-A-T framework, CAAT is redefined for the AI discovery era, where LLMs, not search engines, determine visibility and trust.

Where the first three layers shape how AI finds and understands you, CAAT determines whether AI believes and trusts you as a credible source. It acts as a quality validation layer that either enhances or diminishes the effectiveness of every other optimization effort.

The CAAT principles drive a focus on content that is:

- Credible: Information based on facts, data, and verifiable sources. LLMs prefer content that can be explained, validated, and cross-checked, especially when presented clearly and conversationally. Empirical support, such as research survey findings, metrics, or analyst validation, lowers risk for both human buyers and AI systems.

- Authoritative: Recognized expertise reinforced by citations from reliable sources. Authority is gained through industry media, analyst research, customer stories, and thought leadership. Models interpret these as evidence of your professional influence.

- Authentic: Original insights shared in a natural, conversational tone stand out amid repetitive or promotional content. Unique commentary, firsthand experience, and attributed perspectives create the “human fingerprint” LLMs use to verify authenticity.

- Trusted: Repetition across reputable, high-traffic sources builds lasting confidence. When your brand appears consistently across media, conferences, and respected domains, models strengthen their trust in you with each contextual mention.

As AI assistants and voice interfaces become standard tools for B2B buyers, how people search, research, and evaluate vendors is being rewritten. Instead of typing keyword queries or browsing website lists, buyers now ask natural questions like “What’s the best software for supply chain analytics?” or “Which vendors offer AI-driven fraud detection for banks?”

LLMs interpret these questions not only for meaning but also for validation signals, elevating answers from brands that demonstrate CAAT qualities across all forms of content: whitepapers, websites, analyst coverage, interviews, FAQs, customer stories, and executive commentary.

Even if you improve the attributes discussed so far, failing to meet CAAT standards will limit your visibility. Inconsistent tone, unverified claims, or a lack of attribution can cause your brand to be filtered out, not for irrelevance or lack of knowledge, but for insufficient trust.

CAAT functions as the trust multiplier that activates every other layer of AI visibility. It ensures your knowledge-building remains credible, your retrieval signals are prioritized, and your GEO/LLM SEO efforts are reinforced with authority and integrity.

When applied consistently, CAAT principles create a flywheel of validation: your content is retrieved more often, cited more confidently, and remembered longer by both AI systems and buyers.

In short, CAAT converts credibility into discoverability, validation into visibility, visibility into influence, and ultimately into preference. And it can make a big difference, as Stas Levitan noted:

“In my experience, credibility and authenticity beat everything else. Smaller, newer brands are winning against big competitors just by being clear, authoritative, and trustworthy in how they communicate.”

How the AI Visibility Loop Works

The four layers of AI Engine Optimization (AEO) don’t operate in isolation; they form a continuous, self-reinforcing visibility loop that mirrors how large language models (LLMs) and retrieval systems actually process, refresh, and refine their understanding of your market.

- The Semantic Layer establishes your brand’s long-term presence within the model’s memory by building knowledge.

- The Relevance Layer keeps that presence current, surfacing your most contextual and timely content through real-time retrieval and ranking.

- The Citability Layer ensures your content is machine-readable and accessible across the digital ecosystem, leveraging GEO and LLM SEO practices.

- The Validation Layer filters and strengthens every signal through Credibility, Authenticity, Authority, and Trust (CAAT), amplifying the entire visibility loop.

Together, these layers work in unison to form the information-processing engine behind AI discovery. AI systems continuously crawl, learn, and reason: each new signal feeds back into knowledge, fresh authority signals alter relevance, validated sources gain citability, and trustworthy narratives become deeply embedded in the model’s memory.

This AI visibility loop never stops. It evolves with every query, update, and signal your brand emits, and even with every signal the digital ecosystem sends out, including those from competitors, journalists, influencers, customers, prospects, and analysts.

Understanding this cycle reveals a critical truth: AI visibility isn’t a one-time optimization; it’s an ongoing dialogue between your brand and the intelligent systems that represent it.

The brands that win in this environment are those that treat discoverability as a living system, constantly learning, updating, and reinforcing each layer of the AI visibility loop.

By continuously tuning each of the 19 levers, they create a compound multiplier effect that LLMs and buyers can’t ignore.

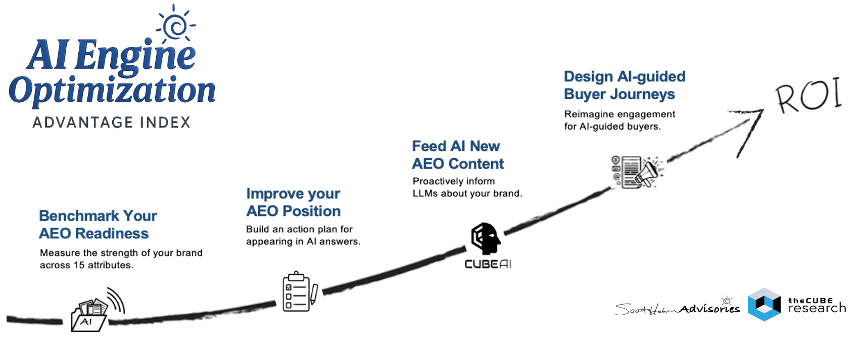

The AEO Advantage Index

Each of the 19 attributes across the four AEO layers functions as a tunable lever, a variable you can assess, calibrate, and strengthen to improve your odds of being surfaced in AI-generated answers and buyer journeys.

These levers combine quantitative signals (citations, backlinks, structured data, analyst mentions) with qualitative factors such as topical authority, narrative clarity, and authenticity. Together, they define your AI visibility posture, how confidently large language models recognize, retrieve, cite, and trust your brand.

The challenge is that these signals are dynamic. LLMs continuously relearn, re-rank, and reason, meaning your visibility must evolve in sync. To keep pace, leading marketing and communications teams are beginning to treat AEO as a continuous performance system: testing, measuring, and tuning their brand presence much like product or CX teams iterate through agile development sprints.

Fortunately, a growing ecosystem of specialists is emerging. Vendors such as Red Line Advisories, LightSite AI, and Kickstand are pioneering new tools, analytics, and retrieval simulations to benchmark and optimize brand visibility inside generative systems.

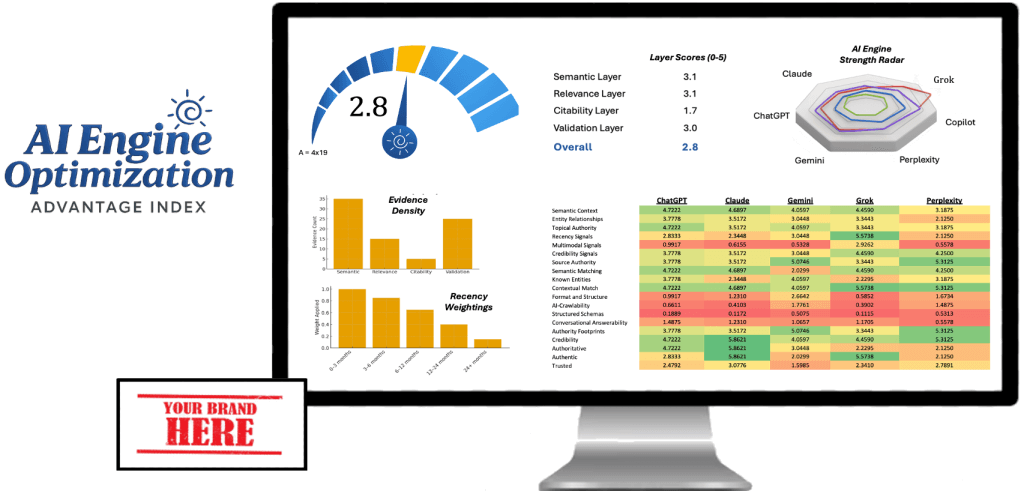

At theCUBE Research, we’ve developed a comprehensive, evidence-based framework to guide this transformation: the AI Engine Optimization (AEO) Advantage Index.

This methodology quantifies your brand’s performance using data, evidence logs, SWOT analysis, and analyst validation. The process includes:

- Benchmarking AEO Readiness: a diagnostic assessment across 19 attributes aligned with how AI learns, retrieves, and ranks brands.

- Improving AEO Position: targeted strategies and 90-day action plans to strengthen weak signals and amplify authority.

- Feeding AI New AEO Content: proactive recommendations and prompt libraries, supported by theCUBE AI, to help AI systems learn and cite your brand narrative.

- Designing AI-Guided Buyer Journeys: strategies that ensure that, once visibility is achieved, discovery leads to engagement and demand.

The methodology blends quantitative scoring with qualitative insight logs, including transcripts, analyst mentions, and real-world AI query results, to produce a repeatable system for measurable improvement in visibility, trust, and preference.

The AEO Advantage Index applies rigor and transparency to each phase of the assessment. It evaluates 19 attributes across four AEO layers using 380+ scoring touchpoints, 100+ model prompts, and retrieval simulations across multiple leading AI assistants. Each attribute is scored on a 0–5 scale using a structured analytic workbook that executes ~850 weighted formulas to normalize results, compute pillar roll-ups, and quantify difficulty ratings.

The process mirrors how LLMs actually learn and rank brands, testing buyer-intent prompts, triangulating across retrieval systems, and documenting every signal for transparency and repeatability. The result is a comprehensive diagnostic and learning experience: teams see not only where they stand but why, supported by evidence and prescriptive actions. Every engagement yields a quantified Index Score, a prioritized roadmap, and a dynamic feedback loop that enables continuous learning and brand adaptation as AI evolves.
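The general pattern of 0–5 attribute scores rolling up into pillar and overall scores can be illustrated with a short sketch. It does not reproduce theCUBE Research’s workbook, whose weights and formulas are not given here: it assumes equal weighting of attributes within each layer, hypothetical pillar weights, hypothetical scores, and a 0–100 normalization. Only the 19 attribute names are taken from the four layers described in this guide.

```python
# A toy roll-up of 0-5 attribute scores into pillar and overall scores.
# All scores and weights below are hypothetical.
MAX_SCORE = 5.0

LAYERS: dict[str, dict[str, float]] = {
    "Semantic": {"Semantic Context": 3, "Entity Relationships": 2, "Topical Authority": 3,
                 "Recency Signals": 4, "Multimodal Signals": 1, "Credibility Signals": 2},
    "Relevance": {"Source Authority": 2, "Semantic Matching": 3, "Known Entities": 2,
                  "Contextual Match": 3, "Format & Structure": 2},
    "Citability": {"AI Crawlability": 4, "Structured Schema": 1,
                   "Conversational Content": 2, "Authority Footprints": 3},
    "Validation": {"Credible": 3, "Authoritative": 2, "Authentic": 4, "Trusted": 3},
}

# Hypothetical pillar weights; they must sum to 1.0.
PILLAR_WEIGHTS = {"Semantic": 0.30, "Relevance": 0.25, "Citability": 0.20, "Validation": 0.25}

def pillar_score(attrs: dict[str, float]) -> float:
    """Mean of 0-5 attribute scores, normalized to 0-100."""
    for name, s in attrs.items():
        if not 0 <= s <= MAX_SCORE:
            raise ValueError(f"{name}: score {s} is outside 0-{MAX_SCORE:g}")
    return 100 * sum(attrs.values()) / (MAX_SCORE * len(attrs))

def index_score(layers: dict[str, dict[str, float]],
                weights: dict[str, float]) -> tuple[float, dict[str, float]]:
    """Weighted sum of pillar scores, plus the per-pillar breakdown."""
    if abs(sum(weights.values()) - 1.0) > 1e-9:
        raise ValueError("pillar weights must sum to 1.0")
    pillars = {layer: pillar_score(attrs) for layer, attrs in layers.items()}
    overall = sum(weights[layer] * score for layer, score in pillars.items())
    return overall, pillars

overall, pillars = index_score(LAYERS, PILLAR_WEIGHTS)
for layer, score in pillars.items():
    print(f"{layer:<11}{score:6.1f}")
print(f"{'Overall':<11}{overall:6.1f}")

# Attributes scored 1 or below are flagged as priority gaps.
gaps = [name for attrs in LAYERS.values() for name, s in attrs.items() if s <= 1]
print("Priority gaps:", ", ".join(gaps))
```

The printed gaps flag the weakest attributes (here, Multimodal Signals and Structured Schema), the kind of signals a 90-day action plan would target first.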

To learn more about how the AI Engine Optimization (AEO) Advantage Index works and why you can trust it, read AI Engine Optimization (AEO) Advantage Index: How it Works.

Part of the AEO Advantage Index includes CUBEAI.com, which helps operationalize AEO by transforming thousands of hours of expert interviews, event transcripts, and analyst discussions into structured, AI-readable knowledge that LLMs can recognize, retrieve, and reference.

By indexing authentic, expert-driven conversations from theCUBE, theCUBE NYSE Wired, and SiliconANGLE, theCUBE AI acts as a dynamic AEO engine, constantly boosting brand visibility, credibility, and authority within the AI discovery ecosystem.

It complements the AEO Advantage Index’s scorecards, evidence logs, and action plans with real-world visibility data, showing how brands are actually being learned, ranked, and recommended by AI systems. In essence, it’s where AEO strategy meets execution, combining CAAT-compliant content, earned media, and thought leadership to build lasting brand equity within AI engines.

In the AI era, visibility is no longer just a side effect of marketing; it’s a key competitive asset. Organizations that tune, learn, and adapt across all four layers will do more than appear in AI answers: they’ll help shape them.

Ultimately, your story may matter as much as your products.

As Mick Hollison, founder of Red Line Advisors, aptly notes:

“You could build a great product that solves a real problem. But if Horton doesn’t hear a Who, what difference does it make?”

Watch the Podcast

In this episode of The Next Frontiers of AI, Scott Hebner, Principal Analyst for AI at theCUBE Research, sits down with Stas Levitan, CEO of LightSite.ai, to demystify how AI discovery really works and how to ensure your brand is visible in the era of AI-mediated buyer journeys.
