Why banks and regulated industries are building on‑prem/hybrid AI stacks — In conversation with Starburst CEO Justin Borgman

TL;DR

- We believe hybrid AI is the enterprise base case — i.e., keep data in place and move intelligence to it.

- Today, most high‑visibility AI runs in public clouds and neo‑clouds (e.g., CoreWeave); hybrid enables inference, fine‑tuning, and RAG/agent proximity to governed data for latency, cost, and compliance.

- Starburst is an example of a firm that provides governed, federated access to data where it lives (Trino), avoiding mass ETL and re‑platforming.

- Dell Lakehouse (powered by Starburst) plus Dell AI Factory with NVIDIA forms a validated on‑prem pattern, with burst lanes to cloud/neo‑cloud for training spikes.

- NVIDIA leads on ecosystem/software; AMD — and eventually Intel — offer credible alternatives as buyers tune TCO and diversify supply chains; specialists (Groq, Cerebras, etc.) fit specific SLA niches.

- Consistent with our Jamie Dimon vs. Sam Altman thesis, proprietary enterprise context is the real moat; hybrid AI operationalizes that advantage.

We believe the next phase of enterprise AI won’t be decided in a hyperscale region. It will be decided inside regulated data estates — banks first, then other regulated industries and eventually mainstream enterprises — where intelligence moves to the data rather than the other way around. Today, most headline AI activity still occurs in public clouds and in neo‑clouds such as CoreWeave (backed by NVIDIA, with Dell as a major supplier). That won’t change overnight. But our research indicates that inference, fine-tuning, retrieval‑augmented generation (RAG), and agentic workflows will land on‑prem and hybrid for reasons that are practical, not ideological – i.e., governance, latency, and unit economics.

Starburst aims to fit squarely into this shift. The company’s foundation, commercializing Trino (ex‑Presto) and leaning into the Iceberg open table format (OTF) to query data where it lives, is purpose‑built for hybrid AI, in our view. Borgman reminded us that Starburst took a hybrid stance from day one, while many peers (e.g., Snowflake) went cloud‑only, and that posture has aged well as large enterprises faced the reality of data in “multiple form factors.” Demand right now is strongest in financial services. Starburst claims to serve 8 of the 10 largest banks and sees renewed on‑prem enthusiasm driven by AI. When workloads meet regulated data, customers want optionality and control, often up to air‑gapped architectures running local LLMs (e.g., Llama‑based models, or even a locally hosted DeepSeek) with zero external calls.
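To make "query data where it lives" concrete, here is a toy sketch in Python. It is not Starburst or Trino code: two separate in-memory SQLite databases stand in for two independently managed sources, and all table and column names are hypothetical. The point it illustrates is that one SQL statement can join across both sources without first copying either table into the other.

```python
import sqlite3

# Assumption: SQLite stands in for a federated engine. "main" and "ops" are two
# separate databases, playing the role of, say, a lake table and an operational store.
conn = sqlite3.connect(":memory:")                 # source 1: "main"
conn.execute("ATTACH DATABASE ':memory:' AS ops")  # source 2: "ops"

# Hypothetical tables and rows, for illustration only.
conn.execute("CREATE TABLE main.accounts (account_id INTEGER, segment TEXT)")
conn.execute("CREATE TABLE ops.payments (account_id INTEGER, amount REAL)")
conn.executemany("INSERT INTO main.accounts VALUES (?, ?)",
                 [(1, "retail"), (2, "commercial")])
conn.executemany("INSERT INTO ops.payments VALUES (?, ?)",
                 [(1, 120.0), (1, 30.0), (2, 900.0)])


def total_by_segment(connection):
    """Join across both sources in a single statement; nothing is copied first."""
    rows = connection.execute(
        """
        SELECT a.segment, SUM(p.amount) AS total
        FROM main.accounts AS a
        JOIN ops.payments AS p ON p.account_id = a.account_id
        GROUP BY a.segment
        ORDER BY a.segment
        """
    ).fetchall()
    return dict(rows)


totals = total_by_segment(conn)
```

In a real deployment the two sources would be separate catalogs behind the federated engine, and governance policies would be applied by that engine rather than by the caller.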

The blueprint Starburst follows has three parts: a federated data plane that provides fast, governed access across lakes, warehouses, and operational systems; open table formats that enable portable storage; and a validated on‑prem AI stack for secure inference and selective fine‑tuning, augmented by burst lanes to cloud/neo‑cloud for training spikes. Starburst covers the data plane. Dell covers the on‑prem packaging with two complementary offerings.

First, Dell Lakehouse (powered by Starburst). Borgman shared that this is an OEM integration — Starburst under the covers — aimed at buyers who want a turnkey, open data plane in their own data center. It marries Iceberg‑based tables with S3‑compatible object storage (think PowerScale/ObjectScale/ECS) and aims to give customers a “looks‑like‑cloud, runs‑on‑prem” operating model. The strategic benefit is not only convenience, but also governance consistency across environments without the penalty of data movement.

Second, Dell AI Factory with NVIDIA provides the compute plane, with PowerEdge systems, NVIDIA accelerators, NVIDIA AI Enterprise (including NeMo and NIM microservices), plus validated networking (Ethernet/RoCE or InfiniBand), and lifecycle tooling. If paired together, customers get an appliance‑like experience with federated, policy‑aware data access on one side and an accelerated, supportable AI runtime on the other. This may be exactly what a big bank needs to run air‑gapped inference and fine‑tuning inside the walls and still burst externally for large training jobs when it makes sense. Nonetheless, we continue to hear from financial institutions that it’s early days and they’re still mixing and matching components to try to squeeze every ounce of efficiency out of their infrastructure.

Open formats are a key piece of the puzzle. Borgman’s read is that the format war is over. He said, “Pretty much every new project we see is Iceberg today.” With storage standardized, the strategic questions are shifting to where catalog metadata and access policies live and how to preserve optionality at that layer. We agree. Access control and catalogs increasingly look like features of the data platform, not perpetual standalone products, which is why buyers must decide how much to integrate versus disaggregate for flexibility.

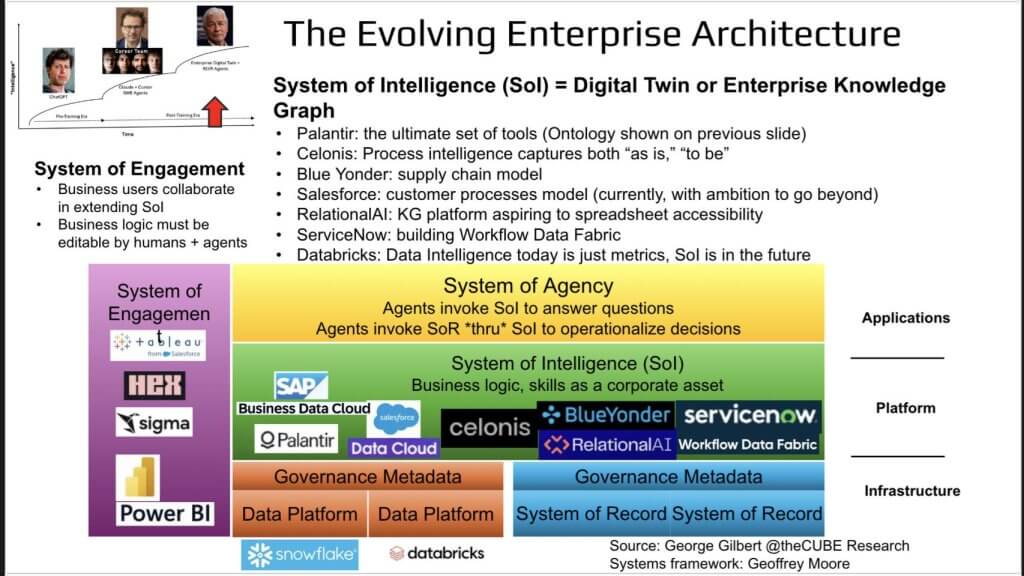

The agent conversation pushes the value up the stack in our view. As Borgman noted, application vendors (Salesforce, ServiceNow, and SAP) and data platforms (Snowflake, Databricks, and Starburst) are entering overlapping territory. Borgman’s take is that the SaaS players are entering the domain of data platforms. Our view is somewhat different. As we’ve described in our “Crossing the Rubicon” post, we believe that operational applications hold the crown jewels, namely the business logic, associated metadata and process knowledge. In fact, we believe that these elements can enable organizations to build a digital representation of the enterprise and a digital twin where data programs workflows.

To enable this vision, someone must own the system of intelligence that interprets metrics, understands entities and processes, and feeds agents. We believe, as does Borgman, that many app vendors are hedging, partnering heavily with data platforms to avoid a frontal fight; meanwhile, those data platforms are climbing the stack. Starburst’s approach is pragmatic, meaning it offers a conversational NL‑to‑SQL agent backed by curated data products to reduce hallucination and, more importantly, enable customers to build their own task‑specific agents that source governed context across silos. This aligns with our “Jamie Dimon vs. Sam Altman” framing — i.e., proprietary enterprise context, organized, governed, and instantly retrievable: Therein lies the moat. Hybrid AI operationalizes that moat.

Economics are pushing in the same direction. As Borgman points out, a decade ago, the cloud was the default answer, especially as the Hadoop wave crested and cloud elasticity outclassed brittle and expensive on‑prem infrastructures. The gap has narrowed. Workload dynamics are the determinant today: spiky experimentation and large‑scale training favor cloud/neo‑cloud, while steady‑state, latency‑sensitive inference and selective fine‑tuning increasingly favor on‑prem, where CAPEX can be amortized and data egress eliminated. Banks are the penguins off the berg; they dive first. Others follow when the pattern is proven.
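The amortization argument can be sketched as simple break-even arithmetic. The model below is our own simplification, not a figure from Borgman or any vendor: on‑prem cost is treated as amortized CAPEX plus a flat monthly operating cost, cloud cost as GPU‑hours times an hourly rate plus a flat monthly egress charge. All function names and the input values are hypothetical placeholders, not real pricing.

```python
def on_prem_monthly_cost(capex: float, amortization_months: int,
                         monthly_opex: float) -> float:
    """Amortized hardware cost plus a flat monthly operating cost (assumed model)."""
    return capex / amortization_months + monthly_opex


def cloud_monthly_cost(gpu_hours: float, hourly_rate: float,
                       monthly_egress: float = 0.0) -> float:
    """Usage-based cost plus a flat monthly egress charge (assumed model)."""
    return gpu_hours * hourly_rate + monthly_egress


def break_even_gpu_hours(capex: float, amortization_months: int,
                         monthly_opex: float, hourly_rate: float,
                         monthly_egress: float = 0.0) -> float:
    """Monthly GPU-hours above which on-prem is cheaper than cloud in this model."""
    fixed = on_prem_monthly_cost(capex, amortization_months, monthly_opex)
    return max(0.0, (fixed - monthly_egress) / hourly_rate)


# Placeholder inputs chosen only to make the arithmetic easy to follow.
hours = break_even_gpu_hours(capex=360_000, amortization_months=36,
                             monthly_opex=2_000, hourly_rate=4.0)
```

With these placeholder inputs the fixed on‑prem cost is 12,000 per month, so the break-even point is 3,000 GPU‑hours per month. Steady‑state inference that runs above that level favors on‑prem in this model, while spiky usage below it favors cloud.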

To ground the “what runs where” decision, we advise keeping the placement simple:

| Phase | Where it runs best today | Why it belongs there |
|---|---|---|
| Foundation model training | Cloud / neo‑cloud | Elastic GPUs and time‑to‑capacity win |
| Enterprise fine‑tuning (sensitive data) | Hybrid / On‑prem | Governance, auditability, and predictable cost |
| RAG and agentic inference (production) | On‑prem / Hybrid | Low latency, data‑access control, and reliability |
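The placement guidance (training bursts to cloud or neo‑cloud, while sensitive fine‑tuning and production inference stay hybrid or on‑prem) can be written as a small lookup. This is a minimal sketch; the phase keys and the `place` function are hypothetical names we introduce for illustration, and a real policy would weigh more inputs than the phase alone.

```python
# Hypothetical phase keys mapped to the placement described in the text.
PLACEMENT = {
    "foundation_model_training": "cloud / neo-cloud",
    "enterprise_fine_tuning_sensitive": "hybrid / on-prem",
    "rag_agentic_inference_production": "on-prem / hybrid",
}


def place(phase: str) -> str:
    """Return the suggested placement, or fail loudly for phases not covered."""
    try:
        return PLACEMENT[phase]
    except KeyError:
        raise ValueError(f"no placement guidance for phase: {phase!r}") from None


training_target = place("foundation_model_training")
inference_target = place("rag_agentic_inference_production")
```

Raising an error for unlisted phases is a deliberate choice here: the guidance covers only three cases, so anything else should trigger a decision rather than a silent default.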

NVIDIA’s position in this architecture remains central because of software completeness and ecosystem breadth. CUDA, Triton, TensorRT, NeMo, and NIMs reduce integration risk for regulated buyers. That said, we believe a more inclusive silicon market will form as stacks normalize and buyers diversify supply:

- AMD: MI300‑class components are increasingly attractive on perf‑per‑dollar and supply; ROCm continues to mature.

- Intel Gaudi: still playing catch‑up, but Ethernet‑first scale‑out and TCO‑sensitive deployments may gravitate to it as supplies ramp, especially if the market remains constrained.

- Specialists: Groq for ultra‑low‑latency text inference; Cerebras for very large models and niche fits that win certain battles.

We see all this as a clear market requirement. If the enterprise wants trustworthy agents, it must assemble a digital representation of the business — people, places, things, and processes — grounded in governed data products and continuously updated. That representation cannot live solely in one vendor’s proprietary cloud or in a siloed application. It must be accessible, with fine‑grained access enforced at query time, and it must keep model access management in scope so sensitive fields don’t leak into prompts or embeddings. Starburst’s “facile, governed access” mantra is relevant precisely because it doesn’t force a re‑platform; it federates what’s already there and feeds it into validated AI runtime patterns.
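One way to picture "fine‑grained access enforced at query time" is a column filter applied before any row reaches a prompt or an embedding job. The sketch below is illustrative only: the roles, column names, and policy table are hypothetical, and in practice the data platform's access controls would enforce this rather than application code.

```python
from typing import Dict, List, Set

# Hypothetical policy: the columns each role is allowed to read.
POLICY: Dict[str, Set[str]] = {
    "kyc_analyst": {"customer_id", "country", "risk_score"},
    "auditor": {"customer_id", "country", "risk_score", "ssn"},
}


def governed_rows(rows: List[dict], role: str) -> List[dict]:
    """Drop every column the role may not read; unknown roles see nothing."""
    allowed = POLICY.get(role, set())
    return [{k: v for k, v in row.items() if k in allowed} for row in rows]


def build_context(rows: List[dict], role: str) -> str:
    """Build prompt context only from rows that already passed the policy filter."""
    lines = []
    for row in governed_rows(rows, role):
        lines.append(", ".join(f"{key}={row[key]}" for key in sorted(row)))
    return "\n".join(lines)


# Dummy record for illustration; the ssn value is a placeholder.
rows = [{"customer_id": 7, "country": "DE", "risk_score": 0.42, "ssn": "000-00-0000"}]
context = build_context(rows, "kyc_analyst")
```

Because the filter runs before the context string is assembled, a sensitive field that the role cannot read never appears in the prompt, and the same filtered rows could feed an embedding refresh.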

What follows is a potential near‑term execution playbook:

- Stand up Starburst as the federated plane; standardize on Iceberg and an S3‑compatible object store; rationalize catalogs and access policies.

- Deploy a modest AI Factory footprint (Dell + NVIDIA) for secure inference; set low-latency SLOs and monitor results.

- Launch one regulated RAG use case (e.g., KYC investigations) with local vector embeds refreshed via governed queries.

- Consider adding domain adapters where business demands justify it; implement model access management and clear milestones.

- Establish burst lanes to a neo‑cloud (e.g., CoreWeave) for episodic training with portable artifacts (containers, open runtimes).

We note the following key factors as important markers:

- Convergence on NIM‑like microservices across vendors; standardized inference runtimes.

- InfiniBand versus Ethernet/RoCE choices as operators optimize cost and operational maturity.

- Regulatory clarity on model risk management and audit for agentic systems.

- Data platform vendor moves to compete up the stack.

- How competitors approach the system‑of‑intelligence layer (see diagram below):

The bottom line: In our opinion, hybrid AI is the enterprise base case. Keep sensitive, high‑value data where it belongs and move intelligence to it. Starburst plus Dell is one practical example of the path, i.e., an open, federated data plane (e.g., Dell Lakehouse with Starburst) paired with an on‑prem AI Factory runtime based on NVIDIA, with cloud/neo‑cloud remaining essential for elasticity and speed. NVIDIA leads today, but AMD, Intel, and specialists have growing roles as buyers optimize risk, cost, and supply. The banks are showing the way. The rest of the market will follow.

Appendix — Vendor Fit Map

| Layer | Primary Option(s) | Alternatives / Where They Fit |
|---|---|---|
| Federated Access | Starburst (Trino) | Dremio; Databricks SQL; Presto variants |
| Table Format | Iceberg | Delta (if committed); Hudi (niche) |
| Storage | Object/file | Dell; MinIO; NetApp; Pure; VAST, etc. |
| Inference Runtime | NVIDIA AI Enterprise / Triton / NIMs | vLLM; ONNX Runtime; open‑source stacks |
| Accelerators | NVIDIA | AMD for perf/$; Intel Gaudi for Ethernet scale; Groq/Cerebras for niche |
| Networking | NVLink, InfiniBand, and Spectrum‑X | Ethernet/RoCE with careful tuning |
| Agentic Layer | Starburst agent plus customer‑built agents | App‑suite agents (Salesforce, ServiceNow, and SAP) with governed access back to data |