As my colleagues from theCUBE Research and I left IBM’s TechXchange this week, we agreed that this year’s event focused on a singular theme: industrializing agentic AI within enterprise environments. It emphasized evolution, not revolution. It was practical and ROI-driven. Trust was the common denominator. The rallying cry for the 10,000 attendees was to create a “new speed of business,” transforming experimentation into governed, trusted, and ROI-enhancing agentic workflows that actually know how to think, learn, and act.

Dinesh Nirmal, IBM’s SVP for Software, set the stage during his keynote with a reality check: most enterprises have invested heavily in agentic AI pilots, but only 5% see any meaningful ROI.

As we dug into the details behind IBM’s theme, they resonated with us because they echo the core tenets of success we have learned from our own engagements:

- Your future starts with your past

- Trust is the currency of ROI

- Go open, or go broke

- No miracles required, just progress

IBM’s strategic intent at TechXchange 2025 was to demonstrate its focus on building trust within open ecosystems by taking concrete steps to enable governance-embedded innovation and a path to ROI by operationalizing agentic AI lifecycles. The goal? To generate an ROI multiplier effect throughout the entire agentic AI development process and ecosystem, accelerating digital labor transformation.

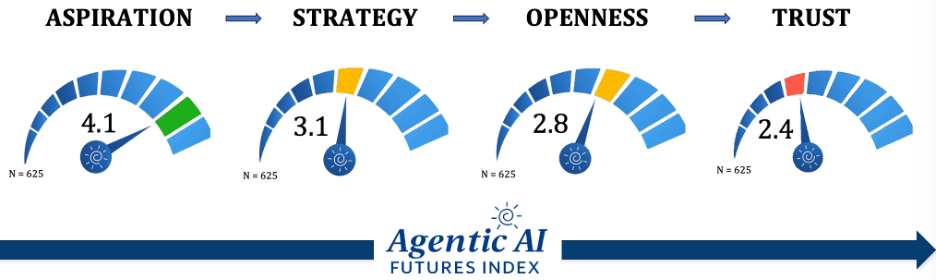

Our September 2025 Agentic AI Futures Index survey, conducted with 625 pre-qualified AI business and tech professionals across 13 industries, highlights the strategic nature of IBM’s PoV. It found a sharp decline in maturity as organizations shift from aspiration to trusted execution, exactly what IBM is investing to address:

- Aspirations are high (4.1 score): 90% of leaders are enthusiastic about the transformative nature of agentic AI-enabled digital labor.

- Strategy is maturing (3.1 score): 61% are planning to deploy AI agents over the next 18 months, with 62% focused on enabling knowledge work.

- Openness is core (2.8 score): 57% are investing in achieving open interoperability across their agentic enterprises.

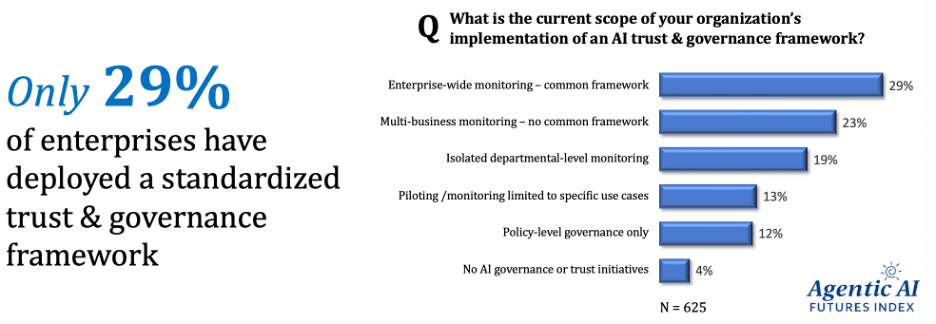

- Trust is elusive (2.4 score): Today, only 52% have a high level of trust in agentic AI outcomes due to technology gaps (73%) and weak governance frameworks (71%).

These findings tell the story: enterprises envision high-ROI use cases beyond task automation; agentic AI investment is accelerating; openness is key; and trust is the overriding barrier to ROI. Yet, while few have deployed trust and governance frameworks, the vast majority have established them as a core investment priority.

The good news for IBM is that TechXchange attracted more than 10,000 participants, including developers, data scientists, architects, and business leaders, to hear this message and learn firsthand that IBM has emerged as a safe, business-oriented, and hands-on AI company.

At the conference, IBM didn’t just talk strategy, as it did at IBM Think 2025 in May; it talked execution. It also strengthened its enterprise stance by adding a trio of new, potentially game-changing advancements to its watsonx platform to address these challenges head-on:

- Anthropic: a new strategic partnership, integrating Claude with watsonx

- Project Bob: a new AI-first integrated development environment (IDE)

- Project Infragraph: a new agentic control plane for hybrid infrastructures

In this research brief, we will dive deeper into the value and strategic intent of these key announcements and assess IBM’s ability to execute ROI growth for its clients.

Also, make sure you check out coverage from my colleagues who were also in attendance: Savannah Peterson, Rob Strechay, Jackie McGuire, and Bob Laliberte on theCUBE Research portal.

Anthropic: An Agentic Trust Multiplier

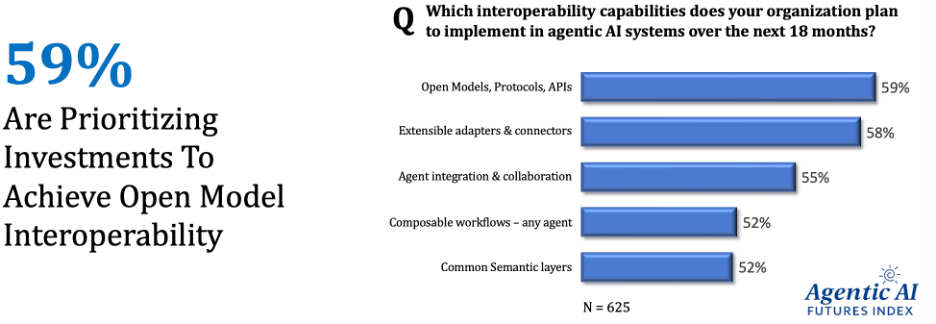

IBM’s strategic partnership with Anthropic may represent one of the most consequential moves in IBM’s AI roadmap since the launch of watsonx. It gives more teeth to IBM’s commitment to an open, multi-model AI ecosystem, which is the foundational differentiator of IBM’s AI strategy. The Agentic AI Futures Index survey highlights why this is consequential:

The collaboration goes beyond just providing model access; it focuses on embedding trustworthy intelligence directly into core enterprise workflows. By enabling enterprise access to Anthropic’s Claude family of large language models, known for safety, reasoning, and reliability, IBM is not only fulfilling its open AI promise but also its trusted AI commitment. Initially, through watsonx.ai and watsonx Orchestrate, IBM clients will be able to harness Claude’s advanced reasoning and natural language understanding within controlled, compliant environments.

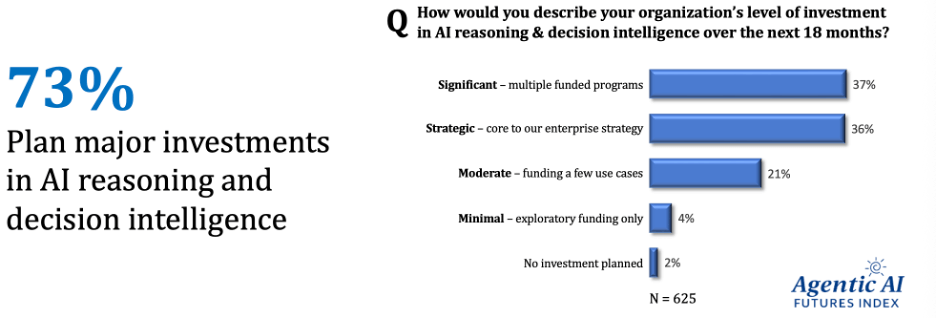

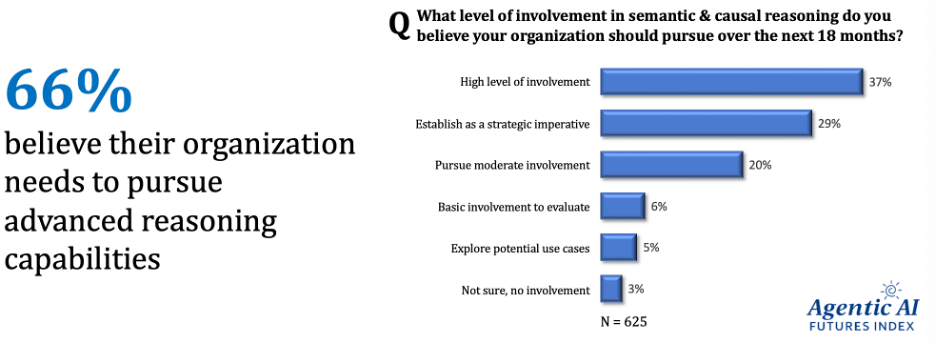

From a capability standpoint, Claude brings a distinctive strength in structured reasoning and constitutional AI, a design philosophy rooted in aligning model behavior with explicit ethical principles. For enterprises operating under strict compliance and audit requirements, this design maps naturally to IBM’s longstanding focus on responsible AI. This enables clients to adopt powerful AI agents that don’t just generate code or content, but also reason, explain, and justify their outputs, a critical differentiator in regulated industries such as finance, healthcare, and government. This represents a tangible step on the ladder to AI decision intelligence, which is emerging as the clear priority in the next frontier of enterprise AI. The findings from the recent Agentic AI Futures Index survey support this PoV:

Under the agreement, IBM will integrate Claude models into its software development, orchestration, and decision intelligence layers within its watsonx architecture. This multi-model orchestration allows Claude to handle creative reasoning or ambiguous goals, while IBM Granite enforces domain-specific constraints. The result is an AI fabric optimized for hybrid enterprise workflows that balance innovation and governance.

A key part of the collaboration is the co-created guide “Architecting Secure Enterprise AI Agents with MCP,” which formalizes what IBM refers to as the Agent Development Lifecycle (ADLC). This framework outlines a clear, repeatable process for designing, deploying, and monitoring AI agents within enterprise systems using the Model Context Protocol (MCP), an open standard designed to enable safe, consistent, and contextual communication between AI models and business tools. The ADLC codifies practices such as:

- Context injection: policies, permissions, compliance data, etc.

- Auditability: agent decision chains, accountability, regulatory alignment, etc.

- Optimization loops: data, outcome, human feedback learning
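The three practices above can be pictured as a thin wrapper around any model call. The sketch below is purely illustrative: `AuditedAgent`, `echo_model`, and every field name are hypothetical and are not taken from the IBM and Anthropic guide, from MCP, or from any watsonx API. It only shows the general shape of injecting policy context, recording an audit trail, and capturing human feedback.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class AuditedAgent:
    """Hypothetical wrapper; not an IBM, Anthropic, or MCP interface."""
    model: Callable[[str], str]   # any text-in, text-out callable
    policies: list[str]           # context injected into every call
    audit_log: list[dict] = field(default_factory=list)
    feedback: list[dict] = field(default_factory=list)

    def run(self, task: str) -> str:
        # Context injection: the model sees the policies alongside the task.
        header = "\n".join(f"POLICY: {p}" for p in self.policies)
        output = self.model(f"{header}\nTASK: {task}")
        # Auditability: record the task, the policies in force, and the result.
        self.audit_log.append(
            {"task": task, "policies": list(self.policies), "output": output}
        )
        return output

    def record_feedback(self, task: str, approved: bool) -> None:
        # Optimization loop: keep human feedback for later tuning.
        self.feedback.append({"task": task, "approved": approved})

    def approval_rate(self) -> float:
        if not self.feedback:
            return 0.0
        return sum(f["approved"] for f in self.feedback) / len(self.feedback)


def echo_model(prompt: str) -> str:
    """Stand-in for a real model: reports how many policies it was given."""
    return f"handled {prompt.count('POLICY:')} policies"
```

In a real deployment the audit log would go to durable, tamper-evident storage and the feedback would feed evaluation or tuning pipelines; the in-memory lists here only keep the example self-contained.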

For mutual clients, the companies reported that simulation pilots led to a 70% reduction in deployment risk and approximately 40% faster prototyping cycles. This promises to deliver enterprises enhanced:

- Choice and Control: Enterprises can select and integrate the right models for the right job, for example, Claude for creative reasoning, IBM Granite for domain expertise, and Llama for lightweight inferencing.

- Governed Innovation: Their jointly designed framework provides a proven pathway to safely operationalize AI agents across complex IT estates, addressing top enterprise concerns around trust, governance, and compliance.

- Accelerated ROI: By integrating Claude’s reasoning into watsonx’s orchestration and lifecycle tools, enterprises can shorten the path to more intelligent AI agents and decision-driven workflows.
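To make the “right model for the right job” idea concrete, here is a minimal routing sketch. It assumes a simple static mapping from task type to model family, mirroring the Claude, Granite, and Llama example in the list above. The names `TaskKind`, `ROUTES`, and `route` are hypothetical; how watsonx Orchestrate actually selects models is not described in this brief.

```python
from enum import Enum


class TaskKind(Enum):
    CREATIVE_REASONING = "creative_reasoning"
    DOMAIN_EXPERTISE = "domain_expertise"
    LIGHTWEIGHT_INFERENCE = "lightweight_inference"


# Hypothetical routing table; model names are labels, not API identifiers.
ROUTES = {
    TaskKind.CREATIVE_REASONING: "claude",
    TaskKind.DOMAIN_EXPERTISE: "granite",
    TaskKind.LIGHTWEIGHT_INFERENCE: "llama",
}


def route(kind: TaskKind, allowed: set[str]) -> str:
    """Pick a model for the task, refusing any model governance has not approved."""
    model = ROUTES[kind]
    if model not in allowed:
        raise PermissionError(f"{model} is not approved for this workload")
    return model
```

The `allowed` set stands in for the governance side of the story: choice stays with the enterprise, and the router will not silently fall back to an unapproved model.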

From our perspective here at theCUBE Research, the IBM–Anthropic alliance marks a strategic inflection point in enterprise AI maturity. While other hyperscalers (cloud and AI) pursue generative AI and LLM supremacy, IBM is pursuing trust supremacy, which is critical to the concept of digital labor transformation, a notion 90% of leaders believe is inevitable. For IBM, this partnership enhances its open-ecosystem play versus proprietary AI competitors, following the same strategy that led to its success in open standards (WebSphere), hybrid cloud (Red Hat), and, now, agentic AI. For Anthropic, it delivers enterprise distribution at scale, expanding Claude’s reach into some of the most data-sensitive and compliance-heavy sectors.

This is a win-win-win-win proposition for IBM, Anthropic, their customers, and their partners. Collectively, it’s our view that this will spark yet another multiplier of ROI value, especially in complex enterprise settings.

Meet Bob, Your New Digital Developer

The introduction of Project Bob is a tangible advancement in IBM’s agentic AI developer strategy. While still in private technical preview, Bob promises to partner with human developers to reimagine the software development lifecycle (SDLC) as a collaborative human-AI workflow.

IBM is not positioning Bob as just another coding co-pilot, but rather as a broader AI-first development lifecycle environment that relies on multi-model orchestration to handle everything from code modernization to security compliance.

It was impressive to see the speed-to-value in a live on-stage demo, where Bob and his human co-worker migrated a legacy Java application into a new microservices architecture on Red Hat OpenShift, auto-generating Kubernetes manifests, scanning for vulnerabilities, and modernizing the user interface, all in under 30 minutes.

Dinesh Nirmal, during his keynote, stated that IBM has over 6,000 internal developers using Bob on real-world projects, where 95% of the interactions extend beyond code generation to include refactoring, documentation, and even DevSecOps automation. Collectively, these developers are achieving a 45% productivity improvement and 60% higher quality.

What makes Bob so interesting is its context awareness. It can understand the full scope of a project, elevating developers to manage the process by issuing high-level, goal-based prompts (e.g., “make this responsive for mobile devices on iOS 26”). Even more interesting, Bob integrates with Claude for greater trust and reasoning, watsonx Orchestrate for agentic workflows, and IBM’s AgentOps framework to provide an AI co-worker that helps developers not only code, debug, and test but also audit, explain, and improve.

For IBM clients, Bob is more than just a productivity booster; he acts as a new team member that organizations can “hire” to give their developers “superpowers” to accelerate their path to ROI. By integrating multi-model orchestration, contextual understanding, and enterprise-grade governance, Bob transforms developers from simply writing code into orchestrators of agentic workflow solutions, nearly doubling the speed while halving the risk and cost. This is crucial for most enterprises, as 70% of their development capacity remains tied up in maintenance and modernization, limiting their ability to innovate at scale with AI. Bob directly tackles this challenge by offering a way for clients to modernize legacy systems and embed trusted AI development, all without the need to overhaul existing DevSecOps pipelines.

For IBM, it’s a potential major success because it builds on its open agentic AI architecture to address one of the biggest challenges all companies face: the idea that your future depends on your past. Unlike lightweight co-pilots tied to a single model, Bob runs on IBM’s watsonx Orchestrate and Anthropic Claude integrations, allowing it to access multiple reasoning engines within a unified governance framework. This perfectly aligns with IBM’s broader goal to become the neutral orchestrator of the hybrid enterprise, rather than just another model provider.

If IBM can build upon its initial capabilities, Bob could mark the start of a new era in enterprise development, where digital coworkers work alongside human engineers to reduce time-to-value, institutionalize new best practices, and re-imagine what “software built by AI” truly entails.

In short, Bob seems like a cool guy (or gal). He/she could serve as the blueprint for how agentic AI transforms not only business processes but also the fundamental nature of innovation economics itself.

Infragraph: An Agentic Control Plane

If our new co-worker, Bob, empowers developers, Project Infragraph empowers the operators of the underlying AI infrastructure itself. Infragraph, built by IBM’s HashiCorp, creates a real-time infrastructure graph that merges semantic reasoning with operational observability to form the connective intelligence across hybrid clouds.

At its core, Infragraph creates knowledge graphs that understand the connections between infrastructure assets, applications, services, metadata, policies, and data flows, integrating them into a single semantic model. This greatly surpasses the linear dashboards and static configuration databases that dominate IT management today. By modeling entities, relationships, meaning, and context, Infragraph allows AI agents to reason about how infrastructure components relate, interact, and influence each other. Importantly, it transforms the cloud estate into a dynamic web of knowledge that AI can understand, analyze, and act upon.

We view this as a high-potential, high-value innovation that represents a meaningful step forward in making agentic AI systems practical and reliable in enterprise settings. Why? In traditional operations, infrastructure visibility has been limited to metrics, monitoring, and alert systems that can tell you what is happening, but not why. Yet identifying the root cause is always the first step in remediation.

Infragraph promises to change that equation, giving agentic systems the ability to:

- Reason about system states: dependencies, vulnerabilities, relationships.

- Diagnose issues in context: trace them to their upstream cause or policy violation.

- Plan and act: execute remediation runbooks via Ansible and Turbonomic.

- Learn from outcomes: capture consequences to continuously improve.
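A toy example helps show what “reason about system states” and “diagnose issues in context” can mean on a graph. The sketch below is an assumption-laden illustration, not Infragraph’s data model or API: the component names, the `DEPENDS_ON` map, and `upstream_root_causes` are all hypothetical. It walks a dependency graph from a failing service and reports the unhealthy components that have no unhealthy dependency of their own, which are the likeliest upstream causes.

```python
from collections import deque

# Hypothetical estate: each component maps to the components it depends on.
DEPENDS_ON = {
    "checkout-api": ["payments-svc", "session-cache"],
    "payments-svc": ["payments-db"],
    "session-cache": [],
    "payments-db": [],
}


def upstream_root_causes(graph, symptom, unhealthy):
    """Return unhealthy components reachable from `symptom` whose own
    dependencies are all healthy (candidate root causes)."""
    seen = {symptom}
    queue = deque([symptom])
    roots = []
    while queue:
        node = queue.popleft()
        deps = graph.get(node, [])
        # A root cause is unhealthy itself but has no unhealthy dependency.
        if node in unhealthy and not any(d in unhealthy for d in deps):
            roots.append(node)
        for dep in deps:
            if dep not in seen:
                seen.add(dep)
                queue.append(dep)
    return roots
```

With `checkout-api`, `payments-svc`, and `payments-db` all flagged unhealthy, the traversal points at `payments-db` rather than the service where the alert first fired. A production graph would also carry policies, ownership, and data flows, which this sketch omits.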

For enterprise clients, Infragraph addresses a long-standing problem: fragmented infrastructure knowledge spread across isolated monitoring tools, digital documents, and teams. By consolidating everything into a single, semantically consistent model, it enables AI agents to access shared context for safe collaboration across DevOps, SecOps, and FinOps. IBM claimed that with Infragraph, what once took days or weeks of cross-team coordination could now be done in minutes with full auditability.

The underlying innovation is the semantic layer enabled by AI knowledge graphs, which can effectively transform infrastructure services into a thinking system. That is, an AI infrastructure capable of self-assessment, explanation, and self-remediation in service of agentic systems. Knowledge graphs are emerging as the next rung on the ladder of Agentic Reasoning and Decision Intelligence, bridging the gap between generative AI and accurate contextual understanding. If value is created within the complexity of infrastructure services, its application to AI agents and agentic workflows will likely be much more profound. Furthermore, this sets the stage for what is likely to come next: causal knowledge graphs, systems that don’t just map relationships, but actually understand why those relationships exist and how changes in one element affect another. This can become a game-changing technology advancement in the enterprise.

Infragraph may ultimately prove to be one of IBM’s most important innovations of this decade, not because it manages infrastructure better, but because it teaches AI how to understand the infrastructure it manages. As organizations move from static automation to adaptive intelligence, knowledge graphs like Infragraph will serve as the substrate for agents that can perceive, reason, and decide, while enabling AI agents to understand consequences, anticipate risk, and make informed judgments, unlocking the full promise of agentic AI and digital labor transformation.

Infragraph, in short, is not just about observability; it’s about operational cognition. It’s a blueprint for how the next generation of AI will learn, reason, and act with accountability. For IBM and its clients alike, it signals the arrival of a new layer in enterprise AI, the foundation for decision-centric automation.

Analysis & Insights

IBM’s trajectory is clear, combining insights from both IBM Think earlier this year and IBM TechXchange this week. It is designing an open platform for digital labor, positioning itself not as a provider of isolated tools or models but as the trusted orchestrator of the hybrid, agentic enterprise. TechXchange 2025 clarified that IBM’s goal is no longer to chase generative AI, just as it stopped chasing public clouds, but rather to embrace, innovate around, and build upon them with transformative ROI as the focus.

At the foundation of this strategy sits watsonx, IBM’s modular platform designed to unify model development, data management, governance, and orchestration into a cohesive, interoperable framework, easily extended by what clients already have and the innovations to come. Each layer has a defined purpose:

- watsonx.ai — for model building, training, and multi-model optimization

- watsonx.data — for contextual, governed data pipelines that feed AI reasoning

- watsonx.governance — for risk management, compliance, and policy enforcement

- watsonx Orchestrate — for agentic workflow design, deployment, and supervision

- watsonx Connect — for open, cross-platform connectivity of any agent, anywhere

Collectively, they create a semantic, development, and operational control plane for AI agents (also known as digital labor) that helps organizations safely and efficiently shift from today’s fragmented automation silos to integrated, decision-focused ecosystems. IBM clearly recognizes that your future depends on your past, that trust is essential for ROI, that the choice is to adopt openness or face failure, and that no miracles are needed, just steady progress.

One underappreciated aspect of these initiatives (Bob + Infragraph + watsonx + Claude) is their potential to directly address one of the most difficult problems enterprises face with agentic AI adoption: building a practical trust and governance framework that can be consistently applied at scale.

For many organizations, AI governance exists only in policy documents, disconnected from daily workflows where models are trained, tested, and deployed. These efforts form the foundation to operationalize governance by integrating AI oversight, explainability, and compliance checks directly into the software development lifecycle (SDLC). Every action—whether generating code, refactoring a module, or running a security scan—is traceable, explainable, and aligned with enterprise policies and regulatory mandates. This approach could shift governance from a retrospective audit to a real-time, co-piloted discipline where AI and human developers share accountability for outcomes. Given the immaturity of AI trust and governance across most enterprises, as found by the Agentic AI Futures Index, this represents a significant opportunity for IBM leadership.

More important still, in our view, is IBM’s open ecosystem strategy, demonstrated not only by the Anthropic partnership but also by hundreds of new participants in its watsonx Connect program, which is based on open protocols and MCP. IBM is embracing third-party innovation while maintaining an enterprise-grade architecture. This approach builds on a playbook that has served IBM well through three decades of technological reinvention:

- WebSphere (1990s): open standards for e-business.

- Red Hat (2010s): open hybrid cloud interoperability.

- watsonx (2020s): open agentic orchestration.

Each era saw IBM succeed by leaning into openness, integration, and governance rather than control. Its current strategy follows the same arc, not to own the entire stack, but to orchestrate across it. This pragmatic stance is proving resonant with enterprises fatigued by vendor lock-in and siloed innovation.

By combining its heritage of enterprise trust, hybrid deployment, and compliance-grade governance, IBM is building the framework for what it calls the Trusted Agentic Enterprise, where organizations seamlessly integrate humans and digital coworkers to collaborate on real-world business tasks, guided by policies, explainability, and choices.

Looking ahead, we believe this creates a unique opportunity for both IBM and Anthropic to establish a beachhead in what we consider to be the next frontier of enterprise AI. Time will tell if IBM and Anthropic expand this partnership into more advanced AI reasoning and decision-making technologies, such as semantic and causal reasoning, a market space seeking a leader as we approach 2026. As the recent Agentic AI Futures Index found, this space is definitely on the minds of the strategic thinkers of leading enterprises.

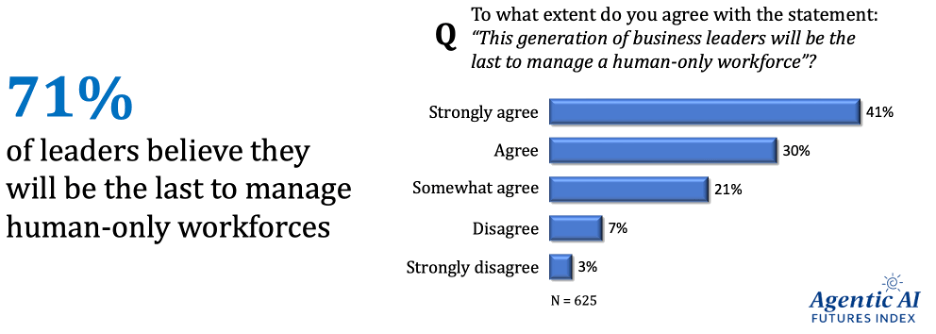

Finally, as reported in our May 2025 “IBM Aims to Unify Digital Labor Across the Agentic Enterprises” research brief, IBM is playing long ball with its eyes on helping its clients succeed in their digital labor transformation strategies. The Digital Labor Transformation Index, published in September 2025, underscores IBM’s strategy: 71% of leaders agree they will be the last to manage human-only workforces.

IBM’s response to these market dynamics was clearly evident at TechXchange 2025. If IBM Think in May 2025 focused on strategy, IBM TechXchange in October 2025 centered on execution. As we always say, a strategy without execution is a hallucination. IBM deserves praise for blending strategy and execution not only in how it manages its business but also in how it interacts with its clients through this conference platform.

The next frontier of enterprise AI won’t be driven by AI that just talks or automates tasks; it will be driven by AI that thinks, makes decisions, acts, and earns trust. IBM’s TechXchange 2025 made it clear that this is no longer a vision; it’s an architecture in motion.

- Read my colleague’s analysis on IBM TechXchange here

- Read or watch our analysis of IBM’s strategy unveiled at IBM Think here

- Read or watch the Digital Labor Transformation Index podcast here

Finally, as always, contact us if we can help you on this journey by booking a briefing here or messaging me on LinkedIn.

Thanks for reading. Feedback is always appreciated.