Attending the recently held HPE Investor Relations’ AI Day was a bit like old home week for me, at least in the sense of revisiting the 80s and the start of my computing career. It all started at the relatively unassuming Chippewa Falls Museum of Industry and Technology (CFMIT). The museum was originally called the Cray Museum, as it was largely dedicated to honoring the legacy of Cray Research, a supercomputer company founded by Seymour Cray and headquartered in Wisconsin, but it has since expanded to showcase a broader look at the innovations and accomplishments of the tech industry.

HPE Investor Relations’ AI Day: Taking Place in the Perfect Setting

While it may not be immediately obvious, there was every reason for the HPE Investor Relations’ AI Day event to be hosted at the CFMIT. Seymour Cray’s legacy is a storied one, especially as it relates to the development of supercomputing technology, and the museum was a visual delight, offering insights into the innovations that shaped the field.

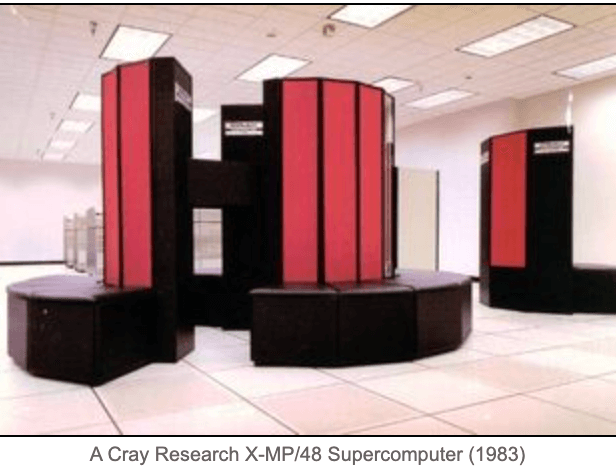

While there, I saw the first love of my life, the Cray XMP, among the other Cray supercomputers that were on display. Back in the day, a 20-year-old David Linthicum was an AI developer using Lisp on that machine. While I never saw one in person, I had a picture in my cube — and looked at it wistfully on a regular basis. Just take a look at that beauty, will you?

The Cray XMP was known for its capabilities in high-performance computing, including support for AI and Lisp. In the 1980s, it was utilized for various scientific and engineering applications, and its architecture was well-suited for the demands of AI development.

Even today, that computer remains the most fantastic computer I have ever seen — it is truly a work of modern art. That said, I also know that today I have that same computing power tucked inside my pocket.

What Does That Have to Do With Us in 2025?

What does this have to do with us today? In a word: everything. Just as we are doing today, back in the 80s we were also trying to figure out how to get value from AI and how to deal with the heavier computing demands it brought. And just as we argue about the value of GPUs today, we had much the same discussion when AI first appeared as a viable technology for business.

So, why did HPE take me through a computer museum before touring their manufacturing facility, which was just down the street? It was actually quite brilliant. It gave IT silverbacks such as myself not only a glimpse back into the past, but also a way to see the links between the challenges we faced then and the challenges we face today. And it allowed those attendees with slightly fewer gray hairs to see the path that came before as we discussed what’s ahead.

HPE’s HPCs Do Not Disappoint

HPE has an impressive line of high-performance computers (HPCs). Having an opportunity to see the manufacturing process in person was exceptional, and something I’m rarely allowed to see up close. This included manufacturing and assembly of Liquid Cooling Systems (LCS)-based computers.

These are interesting because LCS was a massive pain in the neck in the 80s, to the point that there were numerous instances where data center managers would not allow liquid-cooled machines in their centers. The reality, however, was that the cost of LCS-based computers was prohibitive for many of the businesses that could have leveraged them for significant use cases, such as my customers back in the 80s. As such, although AI was considered interesting and unique, it was simply too expensive to purchase and maintain the supercomputers that AI needed to work effectively.

Modern HPCs Using Liquid Cooling Systems (LCS)

I’ve always found data centers somewhat annoying. My annoyance has nothing to do with all the blinking lights. It stems from the fact that most servers in data centers, even today, are air-cooled. This creates a terrific windstorm that flows down the aisles, and the noise one must endure limits my time there. Indeed, many who work in data centers wear earplugs, and for good reason.

What I found most impactful during my visit to the HPE manufacturing facility was that the liquid-cooled systems made little, if any, noise. When standing next to them, you could have a conversation, which is not possible in data centers where the servers are air-cooled.

HPE offers several lines of supercomputers that utilize liquid cooling systems. Some of their prominent LCS-equipped supercomputer lines include:

HPE Cray EX. This is HPE’s flagship exascale-class supercomputer line, designed for the most demanding high-performance computing (HPC) and AI workloads. It uses direct liquid cooling for maximum efficiency and performance.

HPE Apollo Systems. Many models in the Apollo line, particularly those designed for HPC and AI, use advanced liquid cooling technologies.

HPE Superdome Flex. While not all models use liquid cooling, some configurations of this high-end server line incorporate liquid cooling for enhanced performance and efficiency.

HPE SGI 8600. This scalable, liquid-cooled system is designed for HPC and deep learning applications.

So, Do Modern LCSs Stack Up with LCSs from Years Past?

Do modern LCSs stack up against their predecessors? That question elicits a resounding “Yes!” The performance difference between an HPE Cray EX and a Cray XMP is enormous, showcasing the dramatic advances in supercomputing technology over several decades.

The Cray XMP, the exact one yours truly used to create AI systems back in the day, could achieve up to 800 million floating-point operations per second (800 megaflops) in its most advanced configurations. The XMP utilized a vector processor-based architecture, typically featuring 2 to 4 processors. It was primarily used for scientific computing, simulations, and early AI research of that era.

In contrast, the modern HPE Cray EX can scale up to exaflop performance, which means on the order of a quintillion floating-point operations per second (FLOPS), a mind-boggling improvement over its predecessor. The Cray EX employs a massively parallel processing architecture incorporating thousands of nodes and GPUs.

Of course, it is liquid-cooled in order to efficiently manage the immense heat its powerful components generate. This supercomputer is designed to tackle the most demanding computational challenges in climate modeling, astrophysics, and AI research.

Let’s geek out a little, shall we? To calculate the percentage of performance improvement from the Cray XMP to the HPE Cray EX, we can use the following formula:

$$\text{Percentage Improvement} = \left(\frac{\text{New Performance} - \text{Old Performance}}{\text{Old Performance}}\right) \times 100$$

Using the example values:

- Cray XMP performance: 800 megaflops (or 0.8 gigaflops)

- HPE Cray EX performance: Up to 1 exaflop (or $10^9$ gigaflops)

Substituting in the values:

$$\text{Percentage Improvement} = \left(\frac{10^9 - 0.8}{0.8}\right) \times 100$$

This results in:

$$\text{Percentage Improvement} \approx \left(\frac{10^9}{0.8}\right) \times 100 \approx 1.25 \times 10^{11}\,\%$$

By my calculations, this indicates an astronomical performance improvement of approximately 125 billion percent from the Cray XMP to the HPE Cray EX — but feel free to check my math.
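For anyone who does want to check the math, here is a minimal Python sketch of the same calculation. The constant and function names are illustrative choices of mine, not anything from HPE or Cray, and the 1 exaflop figure is the "up to" peak number quoted above rather than a measured benchmark result.

```python
# Peak figures quoted in the article, expressed in floating-point
# operations per second. These are headline numbers, not benchmarks.
CRAY_XMP_FLOPS = 800e6   # 800 megaflops
CRAY_EX_FLOPS = 1e18     # 1 exaflop (assumed peak, "up to")


def percentage_improvement(old: float, new: float) -> float:
    """Return the improvement of `new` over `old` as a percentage."""
    if old <= 0:
        raise ValueError("old performance must be positive")
    return (new - old) / old * 100


improvement_pct = percentage_improvement(CRAY_XMP_FLOPS, CRAY_EX_FLOPS)
speedup = CRAY_EX_FLOPS / CRAY_XMP_FLOPS  # plain ratio, easier to picture

print(f"Percentage improvement: {improvement_pct:.2e} %")
print(f"Speedup factor: {speedup:.2e}x")
```

The plain speedup ratio is arguably the easier number to picture: under these assumptions the Cray EX is a bit over a billion times faster, which works out to the same roughly 125 billion percent.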

The evolution from the Cray XMP to the HPE Cray EX exemplifies the exponential growth in computing power and efficiency over time, driven by significant advances in processor technology, parallel computing architectures, and cooling solutions. What was impossible when I was 20 is, now that I’m 62, not only possible but also economical.

Wrapping Up: HPE Investor Relations’ AI Day

In sum, it’s probably quite clear that I very much enjoyed my time at HPE Investor Relations’ AI Day. Not only did the team at HPE share a wealth of interesting information, but I also left inspired and compelled to dig in and figure out how businesses can leverage this technology as a true force multiplier.

HPE has built some pretty fine technology designed to make AI useful for everyone, and today, that’s the name of the game. I encourage you to check out the HPE Cray Portfolio, purpose-built for HPC and AI workloads, and 100% direct liquid-cooled. And if you’d like a deeper look, here’s a video showcasing the HPE Cray Supercomputing XD665 model.

Last bit of advice: if you ever get a chance to visit HPE, definitely make time for a visit to the Museum of Industry and Tech — I can promise you won’t be disappointed.
