ABSTRACT: Enterprise leaders at Databricks, Ataccama, Anomalo, and IBM are advancing unstructured data integration, observability, and governance to support AI, LLMs, and agentic workflows across platforms like Snowflake and Unity Catalog. These innovations empower data engineers, business analysts, AI product teams, and data stewards to operationalize PDFs, contracts, emails, and other content trapped in silos using scalable, secure pipelines. With unified data quality, lineage, and orchestration, these companies are helping organizations unlock the full value of unstructured data for generative and explainable AI.

The enterprise data landscape is undergoing a fundamental shift as the importance of unstructured data grows in parallel with the rise of generative AI and agentic workflows. Data platforms are evolving, with new capabilities that bring the unstructured, semi-structured, and structured data worlds together. Recent announcements from Databricks, Ataccama, Anomalo, and IBM underscore how leading vendors are tackling the trust and governance challenges associated with this class of data, once considered too unwieldy to operationalize at scale.

Databricks goes with the Lakeflow

During the Databricks Data + AI Summit, Databricks announced the general availability (GA) of Lakeflow Connect, which now provides robust support for unstructured data ingestion, bringing PDFs, Excel files, and other documents from sources such as SharePoint, object storage (S3/ADLS/GCS), and local uploads directly into the lakehouse. Through its managed ingestion pipelines, these raw file-based assets are ingested into Delta tables, with built-in support for schema evolution and auto-detection of new files via incremental processing. This streamlines the handling of unstructured content, whether text-heavy or semi-structured, by automatically integrating it into downstream ETL workflows, governance frameworks, and AI/ML models, all within the serverless, Unity Catalog-governed environment that is Databricks' gateway to its built-in data quality and governance features.
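
For readers who want a concrete picture of what "auto-detection of new files via incremental processing" means, here is a minimal, generic Python sketch. It is not Lakeflow Connect's API or implementation; the `IncrementalFileTracker` class and its method are hypothetical names we made up for illustration, and the sketch simply assumes that a file counts as "new" when its size or modification time differs from what was recorded on the previous run.

```python
from dataclasses import dataclass, field
from pathlib import Path


@dataclass
class IncrementalFileTracker:
    """Hypothetical helper: remembers which files a pipeline has already seen."""

    # Maps file path -> (size in bytes, modification time in ns) at last pickup.
    seen: dict = field(default_factory=dict)

    def discover_new(self, root: Path) -> list:
        """Return files under `root` that are new or changed since the last call."""
        new_files = []
        for path in sorted(root.rglob("*")):
            if not path.is_file():
                continue
            stat = path.stat()
            fingerprint = (stat.st_size, stat.st_mtime_ns)
            # Only files with an unseen fingerprint are handed downstream.
            if self.seen.get(str(path)) != fingerprint:
                self.seen[str(path)] = fingerprint
                new_files.append(path)
        return new_files
```

Calling `discover_new` repeatedly on the same folder returns each file once, then returns it again only if it changes. A managed service would persist this state durably and handle far more edge cases; the sketch only illustrates the idea of processing the delta rather than the whole folder on every run.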

Databricks also unveiled its new Lakeflow Designer, built to empower business analysts with a no-code, drag-and-drop, natural-language interface for building production-grade ETL pipelines that maintain the same scalability, governance, and maintainability as those created by data engineers. Built atop Lakeflow, Unity Catalog, and Databricks Assistant, Designer bridges the gap between code and no-code tools, enabling non-technical users to answer business questions without writing code.

Our ANGLE on Databricks Lakeflow

Databricks continues to build better data pathways, with a keen eye on simplification, to expand the personas it serves. We see the GA of Lakeflow Connect as another step toward making first-party integrations in the unstructured and semi-structured world a regular part of its metadata purview. This will be critical to Databricks and to customers focused on Unity Catalog as the single source of truth. We do not see this affecting many of the vendors in its partner ecosystem, as Databricks focuses on the compute layer and above for data utilization and transformation, meaning the benefit applies only to those building on Databricks. This will help organizations that intend to leverage other Databricks announcements, such as Agent Bricks, because the interactions and functions agents are designed for depend on the data products available.

Ataccama makes it easy for Snowflake customers

Earlier this month at Snowflake Summit 2025, Ataccama debuted Ataccama ONE on Snowflake Marketplace, a significant step toward mainstreaming unstructured data processing in cloud-native data ecosystems. Integrating with Snowflake's Document AI and Arctic-TILT LLM, Ataccama ONE allows enterprises to extract structured data directly from documents such as contracts and invoices using natural language prompts. The structured outputs are immediately written to Snowflake tables, where Ataccama layers on data profiling, quality checks, and governance policies. This integration eliminates data movement and ensures that unstructured-to-structured workflows remain secure, auditable, and scalable, all within the Snowflake environment.

The significance of this move lies in its unification of data quality, observability, lineage, and governance, which have historically been applied only to structured data, with AI-ready unstructured sources. As organizations build agentic applications powered by LLMs and retrieval-augmented generation (RAG) systems, this capability enables them to construct governed pipelines from document ingestion to AI consumption. Ataccama's ability to track data lineage, apply validation rules, and enrich metadata can provide operational efficiencies and a much-needed foundation of trust in AI workflows.

Our ANGLE on Ataccama

We see what Ataccama is doing for Snowflake, unifying unstructured and semi-structured data with the vast structured data held by thousands of Snowflake customers, as critical to those customers getting more from their Snowflake investment. This end-to-end pipeline, spanning ingestion, validation, and governance, enables trusted, scalable AI workflows using LLMs and RAG systems without moving data outside Snowflake. But they do this for many other platforms as well, with support for Databricks and the major hyperscaler and open source platforms. The news here is the addition to, and simplification within, the Snowflake Marketplace. Our view is that Snowflake is ahead of other data platform vendors in simplifying its marketplace, allowing partners to more readily help existing Snowflake customers and generate revenue.

Anomalo aims to make unstructured data “quality”

Meanwhile, Anomalo is taking aim at unstructured data quality through its Unstructured Data Monitoring solution, which is now fully integrated with Databricks and Snowflake. As AI adoption accelerates, especially on platforms like Databricks, which support LLMs, retrieval-augmented generation (RAG), and data pipelines, data quality has emerged as a gating factor and a source of much toil. Unstructured data, which makes up 80% or more of enterprise information, is inconsistent and not well governed by traditional structured data tooling. That blind spot can introduce significant risks into AI initiatives, such as hallucinations or leakage of sensitive data, and undermine the trust enterprises seek to build into their GenAI outputs.

Anomalo addresses this with an automated, scalable quality layer designed for the complexity of unstructured text, including contracts, PDFs, emails, customer feedback, and support tickets. Directly within Databricks, the platform evaluates documents for 15 out-of-the-box issues, ranging from PII exposure and abusive language to redundant content and contradictions. It also supports custom quality definitions with adjustable severity levels. This lets enterprises curate clean, compliant datasets faster, dramatically reducing the overhead of manual document review.
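
To illustrate the general pattern of document-level checks with adjustable severity, here is a minimal Python sketch. It is our own illustration and not Anomalo's product, API, or detection logic: the `Check` class, the two toy checks, and the `evaluate` function are all hypothetical, and the regex-based email detection is a deliberately naive stand-in for real PII detection.

```python
import re
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class Check:
    """Hypothetical quality check: a name, a severity, and a test on the text."""
    name: str
    severity: str  # e.g. "low", "medium", "high"; adjustable per check
    predicate: Callable[[str], bool]


# Naive email pattern, used here only as a toy stand-in for PII detection.
EMAIL_RE = re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+")


def has_email(text: str) -> bool:
    return bool(EMAIL_RE.search(text))


def has_repeated_sentence(text: str) -> bool:
    # Crude redundancy signal: the same sentence appears more than once.
    sentences = [s.strip().lower() for s in re.split(r"[.!?]+", text) if s.strip()]
    return len(sentences) != len(set(sentences))


DEFAULT_CHECKS = [
    Check("pii_email", "high", has_email),
    Check("redundant_content", "low", has_repeated_sentence),
]


def evaluate(text: str, checks=DEFAULT_CHECKS) -> list:
    """Return (check name, severity) for every check the document trips."""
    return [(c.name, c.severity) for c in checks if c.predicate(text)]
```

A custom definition is just another `Check` with its own severity, for example `Check("mentions_draft", "medium", lambda t: "draft" in t.lower())`, passed to `evaluate` alongside or instead of the defaults. Production-grade checks would typically rely on models rather than regular expressions, but the shape (named checks, severities, a per-document report) is the part worth noting.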

But Anomalo’s approach goes well beyond simple redaction or filtering. Its Workflows module turns monitoring into a strategic data intelligence layer: surfacing patterns, tracking sentiment and tone, clustering topics, and enabling unstructured content to be transformed into structured datasets suitable for analytics, AI, and operational reporting. With this capability, customers build AI-ready pipelines that preserve context while enforcing governance. This can help to build trust in many of the inwardly facing use cases organizations have chosen for their first GenAI deployments.

The integration with Databricks is compelling. Documents analyzed by Anomalo can be processed using Databricks-hosted LLMs, like Llama or Claude via Mosaic AI Gateway, or external models, enabling teams to classify content, redact sensitive fields, and detect anomalies without moving data out of governed environments. Cleaned and enriched outputs are written directly into Unity Catalog volumes, ensuring lineage, access control, and reusability for downstream AI workflows.

Our ANGLE on Anomalo

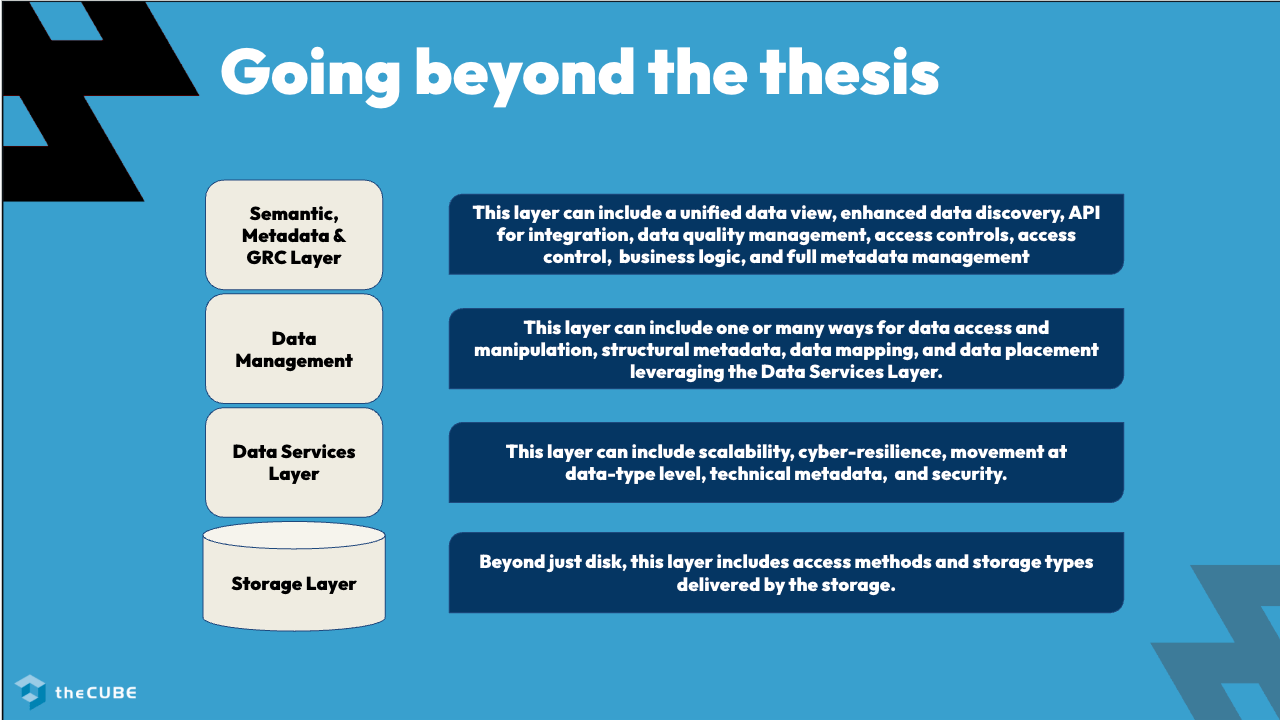

We see data quality across data silos as a significant requirement for organizations moving into more advanced usage of GenAI, especially as those GenAI applications become pieces of agentic AI workflows. This will be increasingly important for many personas, such as ML practitioners, data stewards, and AI product teams, who require a path to trusted, actionable, and explainable AI built on high-quality content, rather than a hope that the data is “good enough.” This speaks to reporting, explainability, and lineage. We see this topic growing in importance every day, which is why others also aim to bring it into the semantic layer.

IBM brings together the AI tool chest

IBM has been transforming and consolidating its AI tool chest to better fit how practitioners want to consume the tooling to build their AI and agents. An example on display at the Databricks Data + AI Summit was IBM's new watsonx.data integration product, which represents an advancement in streamlining enterprise data operations for the AI era. Designed as a unified control plane, it enables seamless integration across structured and unstructured data sources using multiple paradigms: batch ETL/ELT, real-time streaming, data replication, and observability. This flexibility allows data teams to work across low-code, code-first, and agent-driven tools, minimizing the need for point solutions and simplifying orchestration across hybrid environments. These hybrid environments are key because they are where organizations are creating, intentionally and unintentionally, many data silos. With direct support for unstructured data formats and built-in observability, watsonx.data integration removes the friction of legacy pipelines, enabling enterprises to efficiently operationalize their proprietary data for generative and agentic AI workloads.

By embedding integration capabilities into watsonx.data, IBM reinforces its vision of a unified, open, and hybrid data lakehouse that supports the full data-for-AI lifecycle. This approach empowers organizations to overcome fragmentation, again intentional or unintentional, in their data silos and capitalize on potentially underutilized or poorly coordinated content, such as emails, PDFs, and multimedia files. The platform's openness allows enterprises to integrate with third-party systems, enabling broader ecosystem innovation while maintaining control and governance. A big piece of this is the ability to tie into watsonx.data intelligence to map technical metadata to business terms and data classes, and to bring that mapping into the catalog for governance.

Our ANGLE on IBM watsonx.data integration and intelligence

We see IBM and its watsonx.data portfolio taking leaps and bounds to bring together several essential tools, from pipeline integration, to intelligence across unstructured and structured data, to the day 1 and day 2 activities of observing the data and the AI systems. We expect this will continue to evolve, with IBM simplifying the user interface and workflows to bring the best pieces further into focus. The personas for whom it is helping to reduce toil include data engineers, data scientists, and platform engineering teams. We believe that IBM has many more pieces of the AI pie that will enable this solution to go even further as the full portfolio is brought to bear.

Conclusion

From our perspective, the latest moves by Databricks, Ataccama, Anomalo, and IBM reflect a broader industry pivot toward operationalizing unstructured data as a first-class citizen in the AI lifecycle. Each company is addressing key gaps, whether it’s simplifying ingestion, ensuring data quality, unifying structured and unstructured workflows, or enabling governed access at scale. Together, these efforts are reducing complexity and fragmentation while expanding access to trustworthy, AI-ready data across a growing range of personas, from business analysts and data scientists to data engineers and platform engineering teams. As generative and agentic AI evolve, the ability to transform unstructured content into governed, actionable insight will be a defining capability for enterprise data platforms.

Feel free to reach out and stay connected through robs@siliconangle.com, read @realstrech on x.com, and comment on our LinkedIn posts.