As we approach SuperComputing 2024 (SC24), we’ve been talking on theCUBE about the rise of a new supercomputing infrastructure concept that I’ve been calling Clustered Systems. Over the past few years, we’ve been tracking how this concept has evolved and the game-changing role it plays in AI, especially generative AI. At its core, the clustered systems approach represents a fundamental shift in how we think about computing infrastructure, and it is setting the stage for the next wave of innovation in AI and enterprise technology.

Why Clustered Systems Matter

Clustered systems aggregate multiple computing units, such as servers, GPUs, and networking components, into a single, unified computing environment. This lets them handle the computational workloads that generative AI models demand, whether that means training massive language models or running inference across diverse applications. While clustering itself isn’t new, its application to generative AI is groundbreaking. Today’s AI models are far more complex and require immense scalability and performance. Clustered systems deliver both by distributing workloads intelligently across the cluster, enabling enterprises to innovate faster and at scale.

Take Nvidia, for example. They’ve been at the forefront of this shift, not just with their GPUs but with their entire ecosystem: NVLink, CUDA, and their DGX systems. But it’s not just Nvidia driving this forward. Companies like Dell Technologies are advancing the infrastructure landscape with their PowerEdge XE9680 servers, which integrate Intel Gaudi 3 AI accelerators, and next-generation PowerEdge systems featuring 5th Gen AMD EPYC processors. These systems are built to scale AI workloads and provide the flexibility needed for both training and inference. Dell’s approach shows how hardware tailored for AI can meet the growing demands of clustered systems while helping enterprises optimize both performance and cost.

The GenAI Law: A New Paradigm for Infrastructure

One of the critical things I’ve been talking about is what I call the “GenAI Law” (Dave Vellante, my cofounder and cohost of theCUBE, calls it “Jensen’s Law”). We’ve seen this before with Moore’s Law during the PC revolution, when hardware advancements pushed software innovation forward. Today, generative AI is creating a similar dynamic. The question now is whether the hardware you invest in today, such as infrastructure optimized for training AI models, can also serve your inference needs tomorrow. Interoperability and scalability have become the name of the game.

Dell is addressing this challenge with solutions like the Dell AI Factory, which integrates advanced infrastructure with Nvidia technology to optimize the entire AI lifecycle. From training large-scale models to deploying small, task-specific ones, the infrastructure is built for versatility. That flexibility is critical in a world where infrastructure must evolve to support an increasingly diverse mix of AI workloads.

Multi-Scale AI and Developer Empowerment

Generative AI is also shifting how enterprises think about AI models. It’s no longer just about building massive models; it’s about creating ecosystems of models, both large and small. Specialized, task-specific models are emerging alongside general-purpose ones, and clustered systems are well suited to this multi-scale AI future. They allow enterprises to handle everything from tiny models for edge use cases to sprawling, multi-billion-parameter models for advanced applications.

Developers, too, stand to benefit immensely. With clustered systems, they can build scalable software that runs consistently across diverse environments, whether in an enterprise data center or in the cloud. For instance, Dell’s rack-scale management solutions and HPC offerings are designed to give developers the tools they need to deploy and manage scalable software across hybrid environments. Advancements like these make it easier to test, iterate on, and deploy AI applications efficiently.

The New Unit of Computing: The Data Center Connected to Cloud

Jensen Huang, Nvidia’s CEO, made a profound statement recently: “The new unit of computing is the data center.” I couldn’t agree more. Enterprises are no longer looking at individual servers or nodes; they’re thinking holistically about their infrastructure. If you’re serious about competing in generative AI, you’re going to build your own supercomputer, treat the entire data center as the computer, and connect it to the cloud to take advantage of its scalability and services. Supercomputing is powering Superclouds.

Dell and many other top AI server vendors are rolling out sustainable data center solutions that are not only eco-friendly but also built for scale. The focus is on direct liquid cooling (DLC), which helps enterprises achieve higher performance without the energy overhead typically associated with large-scale computing.

What to Watch at SC24: Setting the Agenda for the Future of AI Infrastructure

SuperComputing 2024 (SC24) is where all of these ideas will come together, as AI is fused into supercomputing for the masses with generative AI software as the core innovation. This year, I’ll be watching several key areas:

- AI and Advanced Computing Hardware: Innovations in GPUs, CPUs, and accelerators are driving the clustered systems revolution. Dell’s PowerEdge servers and AI Factory are standout examples of how hardware and software can work in unison to meet enterprise needs. Look for advances in capacity sharing and compute allocation that support AI resilience, recovery, and self-healing.

- Data Center Technologies: From liquid cooling to on-chip cooling to sustainable operations, these innovations are what will make AI infrastructure viable. Look for chips optimized for specific applications at cost and power-efficiency levels good enough for broad deployment.

- AI Platforms and Frameworks: The software that powers clustered systems is as important as the hardware. Partnerships between generative AI model providers and infrastructure vendors are worth watching as the ecosystem becomes more connected and scales with data.

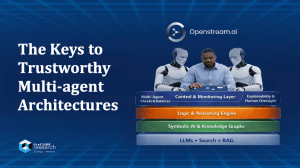

- Networking and Connectivity: Low-latency, high-bandwidth connections are critical for scaling AI and enabling robust AI fabrics. Look for multi-agent integrations with intelligent prompt routing.

- Supercomputing Solutions: Enterprises are embracing supercomputing to unlock the full potential of AI.

- Applications and Customization: AI isn’t one-size-fits-all, and clustered systems provide the flexibility businesses need. Look for infrastructure innovation that meets the compute demands developers face as they select and deploy models.

- Sustainability and Education: From reducing environmental impact to fostering the next generation of AI talent, these areas are vital for long-term success. Look for new cost-control tools that support sustainability and transparency.

The Future of Clustered Systems

To me, the rise of clustered systems is nothing short of an industrial revolution powered by AI. Companies like Nvidia, Dell, AWS, and Broadcom are pioneering advancements in hardware, software, and connectivity that will redefine what is possible in AI. From generative AI applications to sustainable data center, cloud, and edge technologies, the full potential of clustered systems is only beginning to be realized.

At SC24, we will see the new era of Clustered Systems become part of the conversation for any enterprise looking to compete in the age of AI.