As we advance into the next frontier of AI, the global economy is approaching a structural shift that will redefine how intelligence is generated, priced, traced, and trusted. And at the center of this shift lies a dual transformation: enterprises demanding a far more trustworthy AI foundation, and the world’s creators demanding fair economic participation in the intelligence they help produce.

We’ve seen this movie before.

Just as the early internet transformed digital content into a new real-world asset class, the next era of AI will elevate model-learned knowledge and algorithms into yet another new asset class. Today’s AI systems are built on top of a massive, uncompensated global contributor base: the creator economy alone now exceeds $250 billion, and open-source developers generate trillions of dollars in downstream economic value, yet almost none of that value flows back to the people whose work is fueling the models.

Furthermore, during the rise of e-business over 20 years ago, companies quickly realized that what mattered most was verified identities, transaction attribution, and transparent auditability. Today, AI stands at a similar inflection point. Enterprises are embedding AI into decision-making, workflows, and customer experiences, yet 69% (according to our recent Agentic AI Futures Index survey) cannot trace the source of model-derived knowledge, validate its integrity, or demonstrate compliance. As a result, half lack trust in AI.

This imbalance is becoming untenable. Foundation models and AI agents ingest enormous amounts of public, private, and community-generated data, but the origins of that data are largely unverified, uncredited, and unrewarded. As AI becomes more powerful and more deeply embedded in enterprise workflows, this “extraction model”, where AI takes without giving back, is now both a business liability and a trust crisis.

Ram Kumar (@Ramkumartweet on X), founder and CEO of OpenLedger, captured the moment succinctly in a recent interview:

“We’re living in a world where AI learns from everyone, but rewards no one. That is not sustainable for creators, for enterprises, or for the future of AI.”

This imbalance has catalyzed a new category of innovation centered around payable AI and trusted AI — systems that compensate creators, data owners, developers, enterprises, and communities when their contributions are used, and deliver attributable trust. But the significance extends beyond economics. Payable AI also lays the foundation for trusted AI in the enterprise, where attribution, provenance, and explainability are not optional, but essential.

Despite broad acknowledgment that this shift is necessary, few have proposed, let alone built, a credible, global infrastructure capable of supporting it. But OpenLedger has.

OpenLedger has positioned itself at the center of what may become a highly consequential transformation within the AI ecosystem. Its thesis is simple but profound: to fix AI’s trust problem, the world must first fix AI’s attribution problem — and the only viable path is on-chain.

As Ram explained:

“You can’t have trust without attribution. And you can’t have real attribution without blockchain. It’s the only global, tamper-proof system capable of tracking how contributions flow into intelligence.”

In our view at theCUBE Research, the implications are enormous. If OpenLedger and its ecosystem succeed, they will help establish the next real-world asset class: verifiable, tokenized intelligence — enabling creators, developers, enterprises, and communities worldwide to earn recurring, transparent value for the insights and data that power the AI economy.

In this research brief, we examine what OpenLedger is building, why it matters, how it works, and what enterprises must do now to prepare.


The Next Economic Asset Class

The concept that intelligence itself could become an asset class may sound futuristic, but it follows directly from macroeconomic logic and from the last three decades of technology-driven precedent. In the industrial era, physical labor was the primary source of economic value. In the digital era, data became the most valuable resource, driving the rise of cloud platforms and the attention economy. The blockchain era then showed that digital scarcity and digital ownership could be created and traded at scale, with cryptocurrencies and tokenized assets proving that entirely new asset classes can emerge when technology makes them verifiable and exchangeable.

The AI era marks the next stage of this progression: intelligence, not labor or raw data, becomes the dominant driver of value creation, and, with verifiable attribution, it becomes an asset that can be priced, owned, and rewarded.

Yet the supply chain for intelligence, the inputs that models learn from, is opaque, unpriced, unaccounted for, and not always trusted. Scott Hebner, principal analyst for AI at theCUBE Research, framed the emerging paradox in a conversation with Ram Kumar:

“How can AI become the ‘intelligence’ operating system of the enterprise if we cannot trace where its knowledge comes from, validate its origins, and compensate the people whose work made that intelligence possible?”

This is the conceptual market gap that OpenLedger is addressing: the need for a new asset class. Four forces are converging to drive this emerging need, especially among enterprises:

- Massive AI scaling across consumer and enterprise markets: Models are growing hungrier for data and more dependent on real-world contributions — code, content, sensor data, community interactions, and decision traces.

- The legal and regulatory wave: Copyright lawsuits, regulatory scrutiny, and data-sovereignty frameworks (EU AI Act, emerging U.S. legislation, India’s AI governance draft) increasingly require models to prove provenance, licensing, and consent.

- The creator and developer backlash: Millions of creators have begun asking, “Why is my data feeding corporate AI systems without attribution or compensation?”

- Enterprise trust and compliance pressures: Enterprises are demanding auditability, explainability, chain-of-custody evidence, and verifiable lineage.

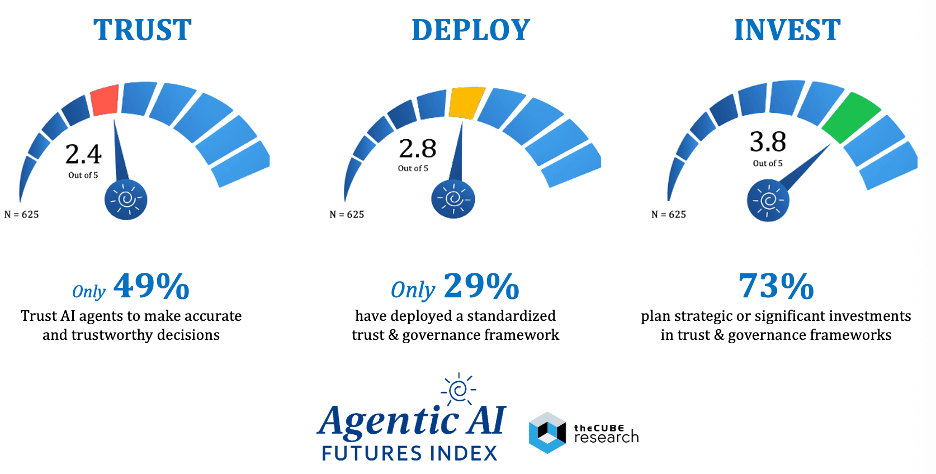

Trust within enterprises is especially crucial, as our Agentic AI Futures Index clearly shows. Despite quick experimentation, most organizations remain cautious about letting AI make or influence real decisions. Only 49% of leaders trust AI agents to deliver accurate and reliable results, and adoption is limited because just 29% have put in place a standardized trust and governance framework. However, the demand is clear: 73% plan to make significant investments to bridge this gap.

These figures reveal a harsh reality: without transparent attribution, verifiable provenance, and explainable decision processes, AI cannot earn the institutional trust necessary to grow. That’s why on-chain attribution matters; it tackles the core reason for enterprise hesitation by offering a foundation for provable, compliant, and trustworthy intelligence.

OpenLedger sits at the intersection of these forces, proposing a model in which the inputs to intelligence, whether human or algorithmic, become traceable, ownable, and rewardable assets, and in which the economic flows generated by AI can be redistributed transparently.

This is very likely to create a new real-world asset class: attribution-backed intelligence units, a new category of AI-native tokens that represent verifiable contributions to model learning, decision-making, or agent behavior, and that carry lasting economic value as those contributions are used, reused, and compounded throughout the AI ecosystem.

The OpenLedger Vision

OpenLedger’s thesis is as straightforward as it is ambitious:

- AI should pay for what it learns.

- People and enterprises should be rewarded for what they contribute.

- Attribution should travel with the intelligence forever.

- And enterprises require attribution-backed intelligence assets.

To achieve this, OpenLedger is developing an on-chain attribution protocol that serves as the economic and verification layer for the AI ecosystem. Unlike today’s AI supply chains, which are fragmented, closed, and unverifiable, OpenLedger’s architecture uses blockchain to cryptographically ensure integrity and transparency when answering questions such as:

- Who contributed what?

- When was it used?

- How did it influence a model?

- Can it be trusted?

- What value was created as a result?

- And should rewards be distributed back to contributors?

This vision aligns with the broader shift toward “trusted and rewarded AI.”

“We want to create the rails for decentralized intelligence, where every data point, every code commit, every decision trace can be attributed, valued, and rewarded. That’s the foundation of a fair AI economy.” — Ram Kumar

These ideas are not yet widely considered across the industry, but we believe they will become a much-needed and welcomed development over time.

How OpenLedger Works

The OpenLedger architecture is designed to effectively address this trust gap. Rather than treating attribution as an afterthought or just a compliance feature, it incorporates provenance, transparency, and economic alignment directly into the core of how intelligence is created and used. Its design changes the AI supply chain from a black box into a verifiable, auditable, and rewardable network of contributions.

To understand how this works, it helps to break the system down into its three main architectural layers, each supporting a different aspect of trust, traceability, and value creation:

- Attribution Recording: Every contribution (content, code, model weights, decision or agent traces, etc.) is assigned a unique fingerprint and recorded on-chain. This establishes immutable provenance, ensuring that the origins of intelligence are never lost or disputed.

- Attribution Mapping: Contributions are linked to specific model behaviors, model updates, agent actions, or downstream outputs. The result is a dynamic attribution knowledge graph that tracks how data and decisions move through models, agents, and workflows, connecting contributions to their actual influence across AI systems.

- Reward and Settlement: When an AI model or agent uses a contributed element, a reward stream is triggered and distributed automatically to contributors in tokens, stablecoins, or fiat equivalents. This closes the loop by converting attributions into programmable economic value.
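To make the three layers concrete, here is a minimal Python sketch of how recording, mapping, and settlement could fit together. It is an illustration under stated assumptions, not OpenLedger’s actual protocol or API: the `AttributionLedger` class, its method names, the use of SHA-256 as the fingerprint, and the proportional payout rule are all hypothetical, and an in-memory dictionary stands in for the on-chain record.

```python
import hashlib
from collections import defaultdict


def fingerprint(content: bytes) -> str:
    """Return a SHA-256 hex digest used here as the contribution fingerprint."""
    return hashlib.sha256(content).hexdigest()


class AttributionLedger:
    """Toy in-memory stand-in for an on-chain attribution record (hypothetical)."""

    def __init__(self):
        self.owners = {}                    # fingerprint -> contributor
        self.influence = defaultdict(dict)  # output id -> {fingerprint: weight}

    def record(self, contributor: str, content: bytes) -> str:
        """Layer 1: fingerprint a contribution and record who submitted it."""
        fp = fingerprint(content)
        # The first submitter of a given fingerprint keeps the attribution.
        self.owners.setdefault(fp, contributor)
        return fp

    def map_influence(self, output_id: str, fp: str, weight: float) -> None:
        """Layer 2: link a recorded contribution to a model or agent output."""
        if fp not in self.owners:
            raise KeyError("contribution was never recorded")
        if weight <= 0:
            raise ValueError("weight must be positive")
        self.influence[output_id][fp] = weight

    def settle(self, output_id: str, payment_cents: int) -> dict:
        """Layer 3: split a payment across contributors in proportion to weight."""
        weights = self.influence.get(output_id, {})
        total = sum(weights.values())
        payouts = defaultdict(int)
        for fp, weight in weights.items():
            # Truncate to whole cents; any remainder stays undistributed here.
            payouts[self.owners[fp]] += int(payment_cents * weight / total)
        return dict(payouts)


ledger = AttributionLedger()
code_fp = ledger.record("alice", b"def parse(x): return int(x)")
text_fp = ledger.record("bob", b"A short guide to regional dialects.")
ledger.map_influence("answer-42", code_fp, 3.0)
ledger.map_influence("answer-42", text_fp, 1.0)
print(ledger.settle("answer-42", 1000))  # {'alice': 750, 'bob': 250}
```

The hard part in practice is the mapping layer: the weights are simply supplied by the caller here, whereas a real system would need some method for estimating how much each contribution influenced an output.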

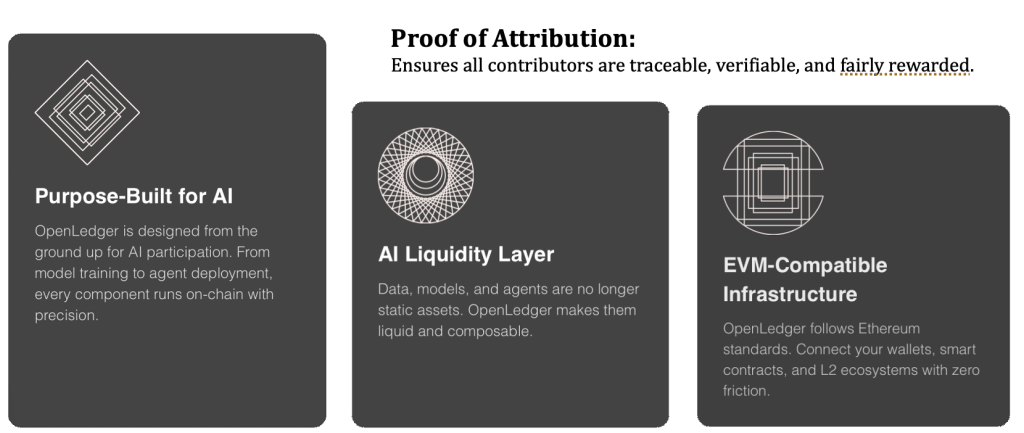

Together, these capabilities culminate in a purpose-built, on-chain infrastructure where AI can finally operate with the same clarity, composability, and economic alignment as modern financial systems. These capabilities deliver a platform that makes every component of the AI lifecycle (models, data, agents, and contributions) traceable, liquid, interoperable, and fairly rewarded.

Its AI-first architecture ensures precision and verifiability; its liquidity layer makes intelligence assets composable and exchangeable; its EVM-compatible foundation guarantees seamless integration across wallets, smart contracts, and Layer 2 ecosystems; and its proof-of-attribution engine embeds fairness and transparency directly into AI workflows.

The end result is a unified system where intelligence becomes a trusted, value-bearing asset class, unlocking enterprise confidence, ecosystem interoperability, and a sustainable rewards model for the creators and contributors who power the AI economy.

On top of its attribution and reward architecture, OpenLedger delivers a product suite designed to make decentralized, verifiable intelligence practical for builders, enterprises, and community contributors:

- OpenLedger AI Studio serves as the gateway for developers and enterprises to build, fine-tune, and deploy AI models natively on-chain, ensuring that every training signal, dataset, and model update is automatically attributed and rewardable.

- OpenLedger Open LoRA extends this capability by enabling lightweight, domain-specific fine-tuning (LoRA adaptations) that inherit verifiable provenance and can be composed, shared, or monetized across the ecosystem.

- OpenLedger Data Net transforms data contributions into liquid, traceable intelligence assets—allowing creators, enterprises, and communities to contribute datasets with guaranteed attribution, transparent licensing, and programmable economic participation.

Together, these products operationalize OpenLedger’s core vision: a unified environment where trusted AI can be built, enriched, deployed, and economically aligned with the people and organizations who make it possible.

Ram Kumar describes this system as “the economic layer the AI ecosystem has been missing,” adding:

“We’re creating an attribution graph for the entire AI supply chain. It’s the connective tissue that ties intelligence back to its origins. Without it, AI becomes unaccountable. With it, AI becomes rewardable, transparent, and fair.”

This approach matters because attribution is not just metadata; it becomes the basis for a new economic model. One of the clearest insights from the architecture is that traditional databases cannot solve the attribution and trust problem. Enterprises often ask why blockchain is necessary. Ram’s answer is candid:

“You can fake logs. You can modify databases. You can overwrite audit trails. But you cannot rewrite a decentralized ledger. Trust requires immutability.”

At its core, blockchain is a distributed ledger technology that records information across a network of independent nodes, ensuring that no single party can alter history, manipulate data, or rewrite transactions. Unlike traditional databases controlled by one organization, blockchains create a shared, tamper-resistant record of events that all participants can verify.

This makes it uniquely suited for environments where provenance, integrity, and multi-party trust are essential — exactly the conditions that define the modern AI supply chain. When AI models learn from countless contributors, datasets, and systems across institutions, a centralized ledger cannot provide the transparency or protection required. Blockchain can. It creates a neutral, cryptographically secured substrate that preserves the lineage of every contribution and prevents retroactive manipulation, essentially providing:

- Tamper-proof provenance

- Decentralized verification across multiple nodes

- Global transparency of reward flows

- Long-term durability of attribution records

- Programmability for recurring payments and value flows

In other words, blockchain is probably the only infrastructure able to support the attribution and trust demands of the AI era.
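The tamper-resistance property described above can be illustrated with a hash chain, the basic data structure underneath a blockchain. The Python sketch below is a simplified illustration, not OpenLedger’s implementation: the function names and the record layout are assumptions, and it shows only tamper evidence on a single machine, leaving out the decentralized consensus across independent nodes that a real blockchain adds.

```python
import hashlib
import json


def block_hash(index: int, payload: dict, prev_hash: str) -> str:
    """Hash a block's contents together with the previous block's hash."""
    body = json.dumps(
        {"index": index, "payload": payload, "prev": prev_hash}, sort_keys=True
    )
    return hashlib.sha256(body.encode("utf-8")).hexdigest()


def append(chain: list, payload: dict) -> None:
    """Append a new attribution record linked to the current chain tip."""
    prev_hash = chain[-1]["hash"] if chain else "0" * 64
    index = len(chain)
    chain.append({
        "index": index,
        "payload": payload,
        "prev": prev_hash,
        "hash": block_hash(index, payload, prev_hash),
    })


def verify(chain: list) -> bool:
    """Recompute every hash and link; any edit to history breaks the chain."""
    prev_hash = "0" * 64
    for i, block in enumerate(chain):
        if block["index"] != i or block["prev"] != prev_hash:
            return False
        if block["hash"] != block_hash(i, block["payload"], prev_hash):
            return False
        prev_hash = block["hash"]
    return True


chain = []
append(chain, {"contributor": "alice", "item": "dataset-v1"})
append(chain, {"contributor": "bob", "item": "code-commit"})
print(verify(chain))  # True

# Rewriting an earlier record invalidates its stored hash.
chain[0]["payload"]["contributor"] = "mallory"
print(verify(chain))  # False
```

Because each block’s hash covers the previous block’s hash, changing one historical record requires recomputing every later block; in a distributed ledger, the other nodes would hold copies that expose the rewrite.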

Creating Enterprise Trust

In the near term, as enterprises grapple with the “trust problem,” OpenLedger offers plenty of solutions for real-world enterprise use cases—right now.

As enterprises confront the harsh reality of the current AI model lifecycle, they are realizing that today’s AI systems cannot reliably answer:

- Where did a model learn a particular insight?

- Which data sources influenced a specific output?

- Who owns the underlying intellectual property?

- Has the model unintentionally absorbed proprietary or copyrighted material?

- Can an enterprise audit the lineage of knowledge used in regulated decisions?

- What value did each contributor create, and how should it be compensated?

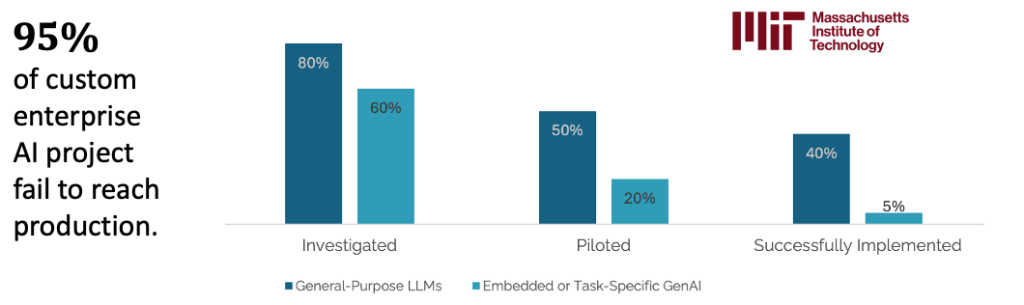

If these questions are not answered, they become strategic blockers, as reported not only by theCUBE Research but also recently by MIT and the Boston Consulting Group. The MIT study, The GenAI Divide: State of AI in Business 2025, for example, found that trust issues are one of the primary reasons why 95% of enterprise AI pilots fail to yield a measurable business impact.

In a world where AI is integrated into financial decisions, healthcare workflows, marketing automation, government policy, and critical infrastructure, enterprises require provable trust. They need lineage. They need verifiable provenance, not only of the data but of the AI itself.

Yet most enterprises today run their AI supply chains like black boxes, which we believe will become intolerable as AI agents proliferate. By 2027, enterprises will employ hundreds of agents interconnected across departments, each making decisions, generating knowledge, and consuming internal and external data. But without attribution, enterprises cannot trust the outputs. Without trust, they cannot scale digital labor. Without scale, they cannot capture ROI.

Trust has become the currency of ROI. No trust, no ROI.

In addition, enterprises are no longer just adopting AI; they are increasingly regulated and compelled to practice AI discipline. The shift is no longer optional or experimental; it is fundamental. With the EU AI Act, emerging U.S. federal guidance, sector-specific fiduciary rules, global data-sovereignty mandates, and rapidly evolving copyright laws, organizations now encounter a complex web of regulatory, policy, and fiduciary protections designed to ensure AI systems are transparent, compliant, and aligned with responsible business practices.

Beyond regulation, boards and executives are demanding stronger assurances that AI-driven decisions are reliable, justifiable, and free from unlicensed or opaque data sources. This now becomes a matter of institutional trust: if enterprises cannot verify how an AI system reached a particular outcome, they cannot responsibly implement it in finance, healthcare, supply chain, legal operations, customer-facing workflows, or policy-sensitive environments. Therefore, enterprises urgently need full traceability, auditability, and explainability across their AI systems, along with verifiable model lineage and clear licensing and consent records that demonstrate exactly where an AI’s knowledge comes from and whether its use is compliant.

These requirements serve as the essential controls for any organization aiming to trust AI outcomes, fulfill its fiduciary responsibilities, and operate safely in an increasingly scrutinized AI landscape.

This is where the OpenLedger solutions become strategically important within the enterprise. They allow leaders to document exactly where a model’s knowledge comes from, a critical requirement across finance, healthcare, the public sector, and any domain where AI influences human decisions.

As Ram Kumar put it simply:

“Without attribution, you cannot do compliance. Without compliance, you cannot deploy trusted AI.”

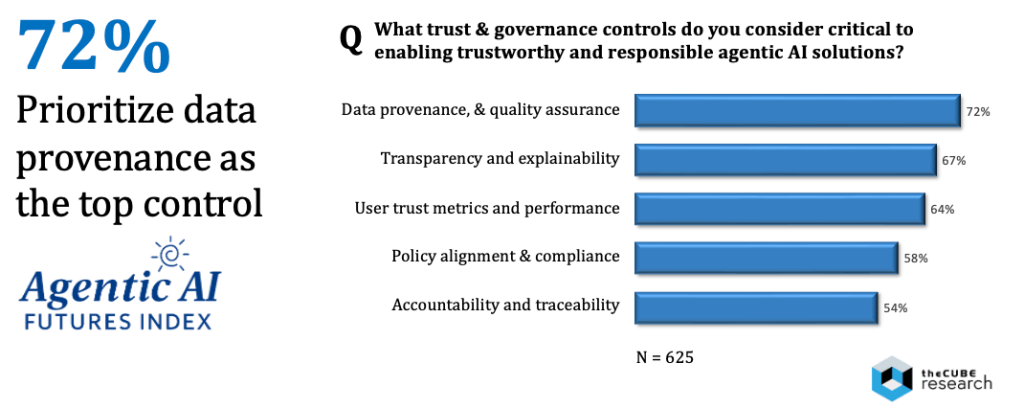

Enterprises increasingly agree. According to theCUBE Research’s Agentic AI Futures Index, 72% of leaders now prioritize data provenance as the single most critical control for enabling trustworthy and responsible AI. Close behind, 67% cite transparency and explainability. Policy alignment, compliance readiness, accountability, and traceability all follow, each cited as an imperative by a majority of enterprises surveyed.

These findings make one point unmistakably clear: without verifiable data lineage and transparent attribution, enterprises cannot trust AI to operate responsibly or at scale.

Creating a New Asset Class

As enterprises strive to meet the trust challenge, a deeper structural imbalance is emerging in the global economy. The early AI era has been driven largely by the unpaid contributions of the wider community, billions of people whose creativity, labor, insights, and culture feed AI models without any financial return. Analysts estimate that the vast majority of the data used to train today’s cutting-edge models comes from public, creator-generated, or open-source content, while fewer than 1% of contributors receive any form of attribution, licensing, or payment.

Meanwhile, the global creator economy has grown beyond $250 billion, and open-source developers collectively generate trillions of dollars in downstream enterprise value, yet both groups remain largely excluded from AI’s monetization stream. This is the core economic challenge of the early AI era: benefits are concentrated, while contributions are widespread. Whether it’s communities creating linguistic context, developers writing code, researchers sharing knowledge, or everyday users posting and interacting in ways that influence model behavior, the value generated by the world’s contributions tends to flow to just a few AI providers.

This imbalance is not only unfair, it’s economically unsustainable. It calls for rethinking the economic structure of intelligence so that value flows back to the people, communities, and organizations whose efforts make AI possible.

As Hebner stated,

“AI’s greatest economic paradox is that the world creates the intelligence, but only a few capture the monetary value.”

OpenLedger’s most revolutionary idea is that contributions to AI intelligence are assets, with tokenized attribution as a new, emerging asset class. Such contributions arise:

- When a developer contributes code to a shared repository.

- When a creator produces content that trains a model.

- When a community provides feedback that improves an AI agent.

- When an enterprise curates datasets that power a domain-specific workflow.

Those contributions have recurring value, not one-time value. Just as intellectual property generates royalties, OpenLedger proposes that contributions to AI should generate ongoing rewards, redefining how value flows through the intelligence ecosystem. In this model, each contribution becomes part of a new asset class—attribution units that represent verifiable inputs to model learning and agent behavior.

These units power a new incentive system, where reward flows automatically back to contributors based on actual usage. As this scales, it forms a new economic network in which intelligence itself becomes payable, tradable, and composable. And ultimately, it establishes a new social contract for AI—one where creators, developers, enterprises, and communities share in the value generated by the intelligence they help produce.

This is not just a philosophical shift; it is an economic one. Tokenized attribution has the potential to unlock entirely new value streams across the ecosystem: new revenue for creators, new monetization pathways for developers, new business models for enterprises, stronger incentives for data quality, and even entirely new markets for intelligence itself. The closest analogy is how renewable energy credits created a market for carbon reduction. Attribution units may similarly create a market for intelligence creation. If a market can exist for that, why not for contributions to AI’s almost magical capabilities?

Ram Kumar frames it as a generational shift:

“This will be the first time in history where intelligence is compensated. Not labor. Not compute. Not data. Intelligence.”

Analyst Angle

The next era of AI will be defined not by larger models alone but by the architecture that connects them. As intelligence becomes spread across thousands of models, agents, data sources, and jurisdictions, the AI ecosystem shifts from centralized platforms to interconnected, multi-stakeholder networks of intelligence. In this environment, no single model or vendor can control the entire value chain; instead, value arises from collaboration—models learning from creators, agents learning from enterprises, and communities sharing linguistic, cultural, and contextual knowledge. However, this interconnected system can only work if it is built on a foundation of trust, provenance, and shared economic goals.

This is where OpenLedger has an opportunity to play a defining role. Its strategy is to position itself not as just another model provider, but as the trust fabric of the decentralized AI economy. It aims to do so by delivering a coordinated layer that connects multi-model, multi-agent, multi-cloud, multi-enterprise, and multi-creator environments, and by anchoring intelligence to its origins so that every contribution is attributable and rewardable.

OpenLedger is enabling distributed AI systems to operate with the same confidence, integrity, and fairness that enterprises expect from regulated, mission-critical infrastructure. It is the connective tissue that allows decentralized intelligence to function as a trustworthy whole.

A few case studies illustrate the value of this strategy:

- Co-owned, Co-monetized Intelligence Assets: One of the most compelling demonstrations of their value is in community-driven AI, where local populations contribute linguistic nuances, cultural contexts, and domain-specific knowledge that no centralized model provider can match. With OpenLedger, every piece of community insight, whether a dialect example, a cultural interpretation, or a contextual correction, is recorded with verifiable attribution. The system tracks how these contributions influence downstream model behavior and ensures that communities receive transparent, recurring rewards for their contributions. This transforms community participation from a free, uncredited data source into a co-owned, co-monetized asset.

- Open-source Intelligence Royalty Systems: A second major application is in software development. Developers and open-source maintainers create enormous value, likely trillions of dollars in downstream economic impact, yet receive no compensation when their work is incorporated into commercial AI systems. OpenLedger tackles this by fingerprinting code snippets, tracking their use through model training pipelines, and mapping how those snippets influence downstream agent actions or model outputs. When that influence is detected, developers receive ongoing compensation tied to actual usage, not one-time licensing. At the same time, enterprises benefit from clear licensing records and provable compliance with open-source governance. It is, in effect, a royalty system for intelligence derived from code, something the open-source world has long needed but never had the infrastructure to enable.

- Enterprise AI Governance & Data Lineage: Enterprises also likely recognize the value of tracing data lineage across internal knowledge bases, decision systems, domain-specific models, AI agents, and multi-agent workflows. In traditional AI pipelines, data flows are often fragmented and unclear, making it difficult to answer fundamental governance questions such as: Where did this model learn this insight? Which datasets influenced this decision? Who owns the underlying data? Were proper licenses and consents secured? What regulatory risks are associated with this output? OpenLedger gives enterprises a cryptographically verifiable chain of custody for all model inputs and agent actions. In an era where every AI-assisted decision may require regulatory justification, this becomes not just a competitive advantage but a foundational trust requirement for enterprise-grade AI adoption.

Our view at theCUBE Research is that OpenLedger’s vision reflects a broader paradigm shift in which trust is the currency of AI ROI. AI is moving:

- From extraction to attribution.

- From opacity to transparency.

- From centralized control to decentralized value sharing.

- Toward fair economic drivers and proper monetization for all.

The company is still in its early stages, but its design aligns closely with the larger forces shaping the future of AI. Enterprises urgently need explainability to trust AI-driven decisions. Regulators require verifiable provenance and transparent model lineage. Developers seek compensation as their code and contributions support commercial systems. Communities desire inclusion and shared economic value, as their cultural and linguistic insights influence global models. To accomplish all this, models themselves must rely on credible, rights-compliant data sources, while AI agents increasingly depend on verifiable knowledge to operate safely and reliably. In this context, OpenLedger is not competing with LLMs, agent platforms, or orchestration frameworks; it is building the trust layer that all of them will inevitably need.

This mirrors the historical pattern of the internet, which we have referenced often: Protocol layers win when the market becomes too complex for centralized control. Attribution is the missing protocol for AI.

As AI permeates every industry, attribution acts as a long-term economic catalyst whose impact extends across multiple interconnected markets:

- Labor markets shift toward human-AI hybrid decision workflows.

- Data markets become more regulated, valued, and priced.

- Model markets require verified training sources.

- Agent markets demand trustable decision chains.

- Creator markets push for rights and compensation.

- Regulated markets enforce provenance and explainability.

- Consumer markets demand ethical AI.

In our view, on-chain attribution may very well become the binding fabric across all these domains. However, in the end, the challenge for OpenLedger will not be technological, but rather one of achieving the market visibility, influence, and leadership needed to make a transformative impact.

As we have said often, in a world of over 70K global AI startups, “your story may be more important than your software.” Our analysis, grounded in the AI-engine Optimization (AEO) Advantage Index, is that OpenLedger still has some way to go to gain the recognition it deserves.

Recommendations

Enterprises should begin preparing for the attribution era now. The next three years will separate organizations that can scale AI with trust from those that stall due to regulatory, ethical, or operational risk. We recommend executives take the following steps:

- Treat AI provenance and attribution as a long-term foundation for AI trust: Drive a focus on sustainable AI governance. Do not wait for regulators to force the issue. Build the capability now.

- Evaluate attribution-capable platforms: Start with OpenLedger, sooner rather than later. The cost of retrofitting attribution into deployed AI systems is far higher than the cost of designing it in.

- Create an internal AI supply chain map: Identify internal and external sources of data, models, and knowledge flows. This starts the strategic longer-term journey.

- Establish a tokenized or rewardable contribution framework: Prepare for compensation models tied to content, code, employee knowledge, and community contributions, especially within your enterprise ecosystem.

- Engage legal, HR, risk, and data teams: Attribution affects every part of the enterprise and shouldn’t be owned by engineering alone. Think of governance as economic and workplace value, not just technical.

- Start experimenting now: Pilot small attribution-enabled use cases. Begin with internal knowledge workflows, regulated decisions, or domain-specific models.

Most importantly, understand that the AI economy will probably shift from a system that extracts value to one that distributes it, from a system that hides provenance to one that reveals it, and from a system that consolidates power to one that shares it.

OpenLedger stands at the forefront of this shift, building the infrastructure that will allow intelligence to become a verifiable, rewardable, real-world asset. They are helping create the rails for that future, one attribution record at a time.

Kudos to them!
