Breaking Analysis: Arm Lays Down the Gauntlet at Intel's Feet

Contributing Author: David Floyer

Exactly one week after Pat Gelsinger unveiled plans to reinvent Intel, Arm announced version 9 of its architecture and put forth its vision for the next decade. We believe Arm’s direction is strong and compelling as it combines an end-to-end capability, from edge to cloud to the datacenter to the home and […]

Breaking Analysis: Why Intel is Too Strategic to Fail

Contributing Author: David Floyer

Intel’s big announcement this week underscores the threat that the United States faces from China. The U.S. needs to lead in semiconductor design and manufacturing, and that lead is slipping because Intel has been fumbling the ball over the past several years. A mere two months into the job, new CEO […]

Breaking Analysis: Tech Spending Powers the Roaring 2020s as Cloud Leads the Charge

In 2020, it was good to be in tech. It was even better to be in the cloud, as organizations had to rely on remote cloud services to keep things running. We believe tech spending will increase 7-8% in 2021, but we don’t expect investments in cloud computing to attenuate as workers head […]

Breaking Analysis: NFTs, Crypto Madness & Enterprise Blockchain

When a piece of digital art sells for $69.3M, more than has ever been paid for works by Paul Gauguin or Salvador Dali, making its creator the third most expensive living artist in the world, one can’t help but take notice and ask: “What is going on?” The latest craze around NFTs may feel a […]

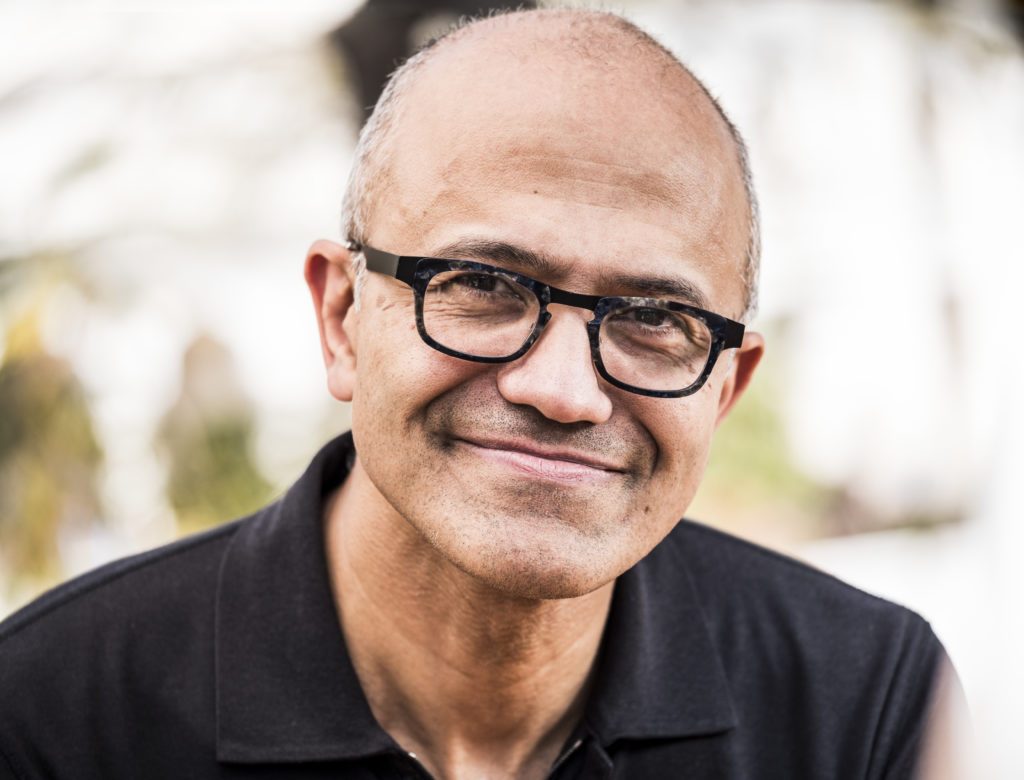

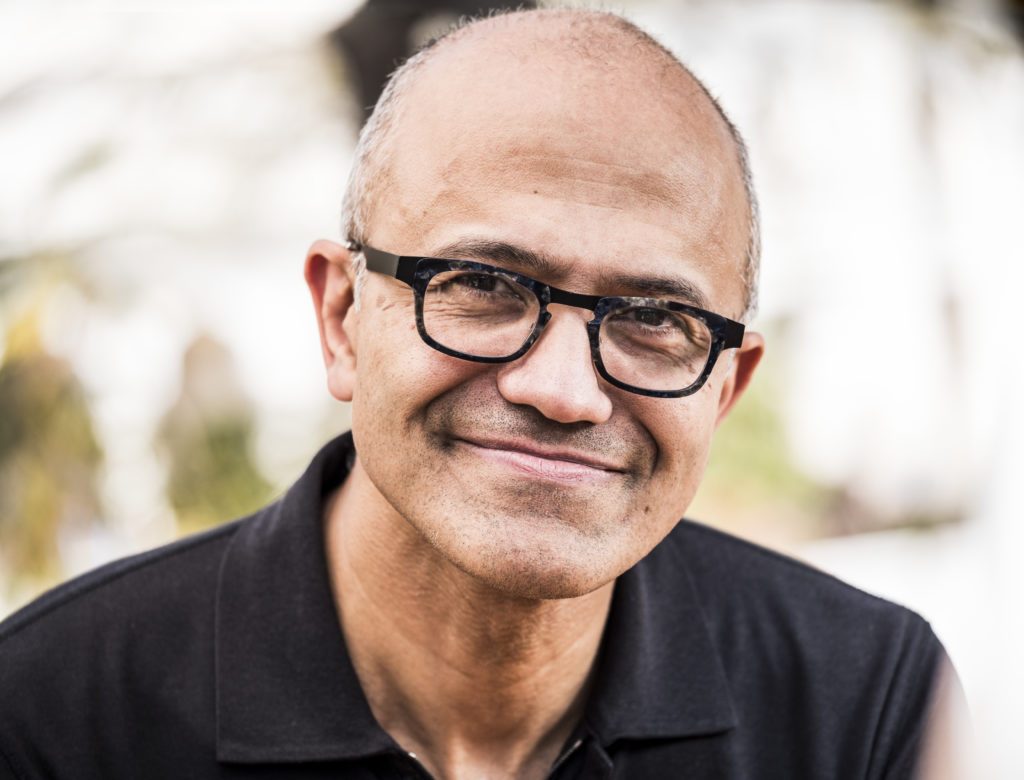

Breaking Analysis: Satya Nadella Lays out a Vision for Microsoft at Ignite 2021…What it Means for the Company & the Cloud

Microsoft CEO Satya Nadella sees a different future for cloud computing over the coming decade. In his Microsoft Ignite keynote, he laid out the five attributes that will define the cloud in the next ten years. His vision is a cloud platform that is decentralized, ubiquitous, intelligent, sensing and trusted. One that actually titillates the […]

Breaking Analysis: SaaS Attack, On-prem Survival & Re-defining Cloud

SaaS companies have been some of the strongest performers during this COVID era. They finally took a bit of a breather this month but remain generally well-positioned for the next several years with their predictable revenue models and cloud-based offerings. Meanwhile, the demise of on-prem legacy players from COVID shock seems to have been overstated, […]
