Sentiment at the Flash Summit

The mood of the 2011 Flash Memory Summit in Santa Clara was triumphant, with victory declared over high-performance disk. It was a time to look back at history and the near misses, and to smile at the mea culpa of Intel alumnus Stefan Lai, who had declared to the Intel board that “flash is too error-prone and has too short a life to make it in the marketplace”.

Most of the flash conference was about the flash ecosystem, about programming and testing flash components, about chips and sub-assemblies. The problems of wear leveling and throttling have been solved. It is time to look over the horizon at new technologies such as IBM’s phase change memory or HP’s memristor and speculate on whether they can replace NAND or NOR memory (don’t hold your breath). With 64 Gbits on a single chip, it is time to worry about how many generations of flash are left and how to beat the usual pessimistic expert prognostications that insurmountable hurdles lie ahead.

Flash is about one thing: improving the end-user experience. It enables today’s applications to run better and sometimes run much more efficiently. It will dramatically affect the user experience and productivity of tomorrow’s applications, enabling an order of magnitude more data to be processed by a fingertip.

The great user experience of flash is evident when you look at the consumer space. These words are being written on a flash-only iPad connected to a cloud drop-box. The previous hard-disk PC workhorse, celebrating its 30th birthday on August 12th, is slow and clunky in comparison and now sits shorn of Outlook and used only for spreadsheets. Wall Street’s criticism of Steve Jobs for decimating sales of his own hard-disk Classic iPods when he introduced the flash-only Mini iPod in 2004 is now forgotten. Vendors that don’t make similar bold moves will soon be as forgotten by history as the myriad teams trying to bring iPod killers to market.

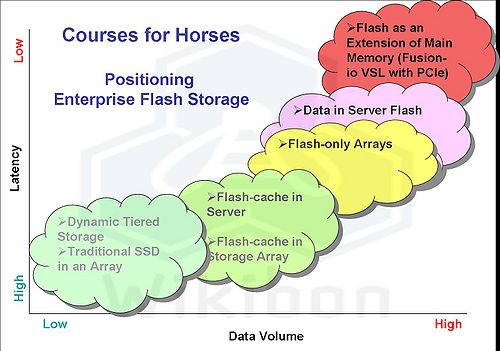

Courses for Horses

The conference displayed five distinct approaches to bringing flash to enterprise data centers. The workloads, the horses and riders in the race, have very different characteristics and pedigrees and are suitable for different courses. Figure 1 below shows the five courses and the different uses of flash to help the horses and riders finish faster. The sections below will describe each course architecture, and the applications that will run best in each.

Source: Wikibon 2011

Traditional SSD in an Array

This was the approach that EMC used when it introduced the first Tier 0 storage in January 2009. This was a great way to introduce flash technology because it retained the same form factor and software infrastructure as classic 3½-inch hard disks, minimizing adoption friction. EMC partner STEC helped solve the technical issues and make flash enterprise grade.

As flash prices have tumbled and performance has improved, more flash storage can be justified in storage arrays. Dynamic tiered storage software has helped improve the performance of some workloads by moving the most active data in volumes or parts of volumes to faster drives. The selection of data to be moved can be:

- Data centric: The data accessed most often is moved to a higher performance drive or tier;

- Drive centric: Data on the drives with the highest latency is moved to drives with lower latency.
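The two selection policies above can be sketched in a few lines. This is an illustrative sketch only, not any vendor's tiering algorithm; the function names, the notion of an "extent", and the sample data are all assumptions made for the example.

```python
from collections import Counter


def pick_data_centric(access_log, top_n):
    """Data centric: return the top_n most frequently accessed extents."""
    counts = Counter(access_log)
    return [extent for extent, _ in counts.most_common(top_n)]


def pick_drive_centric(drive_latency_ms, extents_by_drive, worst_n):
    """Drive centric: return the extents stored on the worst_n slowest drives."""
    slowest = sorted(drive_latency_ms, key=drive_latency_ms.get, reverse=True)[:worst_n]
    return [extent for drive in slowest for extent in extents_by_drive[drive]]


# Hypothetical sample data: one entry per IO in the log, and measured
# average latency per drive.
access_log = ["a", "b", "a", "c", "a", "b"]
drive_latency_ms = {"hdd1": 12.0, "hdd2": 25.0, "ssd1": 0.3}
extents_by_drive = {"hdd1": ["x"], "hdd2": ["y", "z"], "ssd1": ["w"]}

hot_extents = pick_data_centric(access_log, top_n=2)
slow_drive_extents = pick_drive_centric(drive_latency_ms, extents_by_drive, worst_n=1)
print(hot_extents, slow_drive_extents)
```

The data-centric policy needs per-extent access counts, while the drive-centric policy needs only per-drive latency measurements, which is one reason the two can pick different candidates for promotion.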

However, storage arrays are designed mainly around traditional hard disk drives, and they become saturated when SSDs make up more than 10%-20% of the drives.

There is another reason, more technical but more fundamental, that this approach limits the potential of flash. The slowest part of the total storage system is the disk itself. Access time is measured in milliseconds, timing access to the rotation of the disks is a major challenge, and bandwidth and IOPS to a drive are severely limited and not improving. As a result, there is a high degree of variability in storage access time in practical environments. To overcome these problems, arrays have had read caches and battery-protected write caches. These reduce average latency but, conversely, severely increase variability. The higher the cache hit-rate, the worse the variability becomes. The greater the variation in workload mix, the worse the cache hit-rate becomes. As a result, the only way to achieve consistent response times is to over-provision the storage array. The virtualization storage tax makes the over-provisioning more acute.
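The effect of cache hit-rate on variability can be illustrated with a simple two-point latency model. This is a sketch under stated assumptions: every IO is either a cache hit or a disk read, and the 0.1 ms hit and 10 ms miss latencies are illustrative values, not measurements from the article.

```python
import math


def latency_stats(hit_rate, hit_ms, miss_ms):
    """Return (mean, std, std/mean, miss/mean) for a two-point latency model."""
    mean = hit_rate * hit_ms + (1 - hit_rate) * miss_ms
    # Variance of a distribution that takes two values with
    # probabilities hit_rate and (1 - hit_rate).
    variance = hit_rate * (1 - hit_rate) * (miss_ms - hit_ms) ** 2
    std = math.sqrt(variance)
    return mean, std, std / mean, miss_ms / mean


HIT_MS = 0.1    # assumed cache-hit latency
MISS_MS = 10.0  # assumed disk-read latency

for hit_rate in (0.5, 0.9, 0.99):
    mean, std, rel_var, miss_penalty = latency_stats(hit_rate, HIT_MS, MISS_MS)
    print(f"hit-rate {hit_rate:.0%}: mean {mean:.2f} ms, "
          f"std/mean {rel_var:.2f}, a miss is {miss_penalty:.0f}x the mean")
```

Over this range of hit-rates the mean latency falls, but the spread relative to the mean grows, and a cache miss becomes an ever larger multiple of what the user normally experiences.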

The largest vendor display on the floor at the flash summit this year belonged to STEC, as the company contends for relevance in a much more crowded marketplace, with many more form factors and approaches to improving the user experience. This reflects an attempt to breathe life into an approach that is much less relevant than at the start of the flash revolution.

Bottom line: Wikibon believes that the approach of adding Tier 0 storage in the traditional storage array is a course that is suitable for legacy workloads where the IO intensity is low and the performance not critical. It also works well where there is a small, easily identifiable, and consistent set of data that needs a performance boost, and where “good-enough” performance is acceptable for the rest. Wikibon believes that this course architecture will become less competitive with other courses in 2012 and beyond for workloads with more stringent performance requirements.

Flash-cache

The second racing course is flash-cache, inserted either in the storage array or the server. This is a crowded marketplace, with a number of vendors at the Flash Summit. LSI illustrates the hardware approach, with the introduction of the CacheCade™ version 2 flash cache software on its server RAID cards. The interesting extension of this software is that it now supports both read and write caching. As an example, read-only flash cache improved Exchange workloads by less than 5%, as Exchange is often 50% reads and 50% writes. The LSI benchmarks claimed 160% improvements with the new software, although high availability for write flash-caches has to be carefully configured and is not yet proven in practice. Many vendors (e.g., Texas Memory, EMC) are putting the flash cache on server PCIe cards to improve performance and latency.

Another approach is to provide just the software. FlashSoft is providing software to turn either SSD or PCIe flash into high performance caches. IO Turbine takes a similar approach and has been acquired by Fusion-io to provide a high-performance cache.

Flash-caches at the storage array level are a good way of overcoming the limitations of storage array RAM cache sizes, although where there is a choice, array RAM cache is simpler and performs better. Write flash-cache is less important in the array, as it is simpler to use the non-volatile RAM array caches (with battery protection) to ensure that the data is written out to disk.

Bottom Line: Wikibon believes that flash-caches at the array level offer relief to arrays with limited cache sizes, particularly with entry level arrays. They are often more cost-effective than upgrading or replacing the array. Flash-caches at the server level can be very effective at improving the performance of a specific current workload and restoring performance in a virtualized environment. Wikibon expects significant adoption of this approach as a short-term fix for poor performance in arrays running legacy workloads. As discussed earlier, flash-caches in front of traditional disks ameliorate performance problems but only to a certain extent. The variable cache hit-rates and slow disks cause very high variability in access time to data, depending on whether that data is in the cache or has to be retrieved from the disk. This can be hidden in carefully crafted benchmarks, but there is a practical limit to the improvement in performance and functionality built into this approach. Other approaches are very likely to provide better value for modern workloads.

Flash-only Arrays

The most exciting new entrants at the 2011 Flash Summit have been the flash-only vendors. Huawei-Symantec Oceanspace Dorado, Nimbus Data Systems, Pure Storage, SolidFire, Texas Memory Systems, Violin, and WhipTail Tech have entered the race.

A closer examination of some of these contenders illustrates the different approaches and ways to market they are taking. SolidFire has just come out of stealth and is planning to deliver its solution in 2012. The architecture of the SolidFire array has been redesigned from the ground up for cloud service providers supporting IO-intensive applications. This market is mainly addressed by the Tier 1.5 vendors such as HP 3PAR, IBM XIV, and NetApp. The traditional Tier 1 products such as EMC VMAX, Hitachi VSP and IBM DS8000 are usually overkill and too expensive for this market. The problems SolidFire identified in this highly cost-sensitive market are:

- Inconsistent and highly variable performance during the day and on different arrays – service providers cannot guarantee IO response time by storage volume or user;

- Data efficiency capabilities (de-duplication/compression) are not available or are turned off because of performance impact concerns;

- Systems cannot use their total capacity (and are therefore routinely over-provisioned) because of performance constraints;

- Performance, provisioning, and reporting cannot be effectively managed by tenant or within tenant;

- Staffing to manage performance, particularly in virtualized environments, becomes a major cost.

The SolidFire approach to the design focuses on enabling:

- Consistent quality of service (QoS) by individual volume both on IOPS and bandwidth. This can be dynamically changed by the user and built into the charging structure of the service provider for the tenant and within a tenant;

- Automated management and integration into the service provider’s provisioning and reporting tools through management APIs;

- Capacity efficiency capabilities such as de-duplication, compression, virtualization, thin snapshots, and thin provisioning without any performance impact.
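One common way to enforce a per-volume IOPS ceiling of the kind listed above is a token bucket. The sketch below is a generic illustration and makes no claim about how SolidFire implements QoS; the class name, method names, and parameters are hypothetical, and it covers IOPS only, not bandwidth.

```python
class VolumeQoS:
    """Token-bucket IOPS limiter for a single volume (illustrative only)."""

    def __init__(self, iops_limit, burst):
        self.rate = float(iops_limit)  # tokens added per second
        self.capacity = float(burst)   # maximum tokens that can accumulate
        self.tokens = float(burst)     # start with a full bucket
        self.last = 0.0                # time of the previous admit() call

    def set_limit(self, iops_limit):
        """Change the IOPS ceiling on the fly, e.g. when a tenant changes plan."""
        self.rate = float(iops_limit)

    def admit(self, now):
        """Return True if an IO arriving at time `now` (seconds) may proceed."""
        elapsed = now - self.last
        self.last = now
        # Refill in proportion to elapsed time, capped at the burst size.
        self.tokens = min(self.capacity, self.tokens + elapsed * self.rate)
        if self.tokens >= 1.0:
            self.tokens -= 1.0
            return True
        return False


volume = VolumeQoS(iops_limit=100, burst=2)
decisions = [volume.admit(0.0), volume.admit(0.0), volume.admit(0.0)]
print(decisions)  # the third IO in the same instant exceeds the burst
```

Because the limit is an attribute of the volume rather than of the array, it can be tied to a tenant's price plan and changed without touching other volumes.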

SolidFire claims that this approach makes its offering much cheaper than traditional tier 1.5 storage and empowers service providers to enter new business areas with improved consistent IO performance. SolidFire is tackling the cloud service provider marketplace and emphasizing the control over user experience and storage efficiency in a highly cost-conscious market. Time will tell if SolidFire can execute and be successful in this marketplace. If it is, it has an embarrassment of exit strategies, from entering the enterprise space to being acquired.

Another entrant at the flash conference is Nimbus Data Systems. Its approach is to enter the traditional enterprise marketplace. It started with small iSCSI-only arrays that could be scaled up. Nimbus is showing well with a great classic win at eBay, with 12 arrays and over 100 TB of flash deployed to help the performance of many thousands of VMware vSphere 4.1 VMs. It claimed it won on a lower price point than the traditional arrays with SSDs and arrays with flash-cache, and in addition had far higher levels of performance. Recently Nimbus has added Fibre Channel and InfiniBand connectivity to help it compete in traditional high-performance enterprise marketplaces, and in the High Performance Computing (HPC) market.

Texas Memory is also making noise at the conference. It has a long history of improving IO times for the financial industry with RAM devices and is now introducing a flash version. Its go-to-market strategy is to focus on industry segments such as finance where latency is critical, as well as the OEM market.

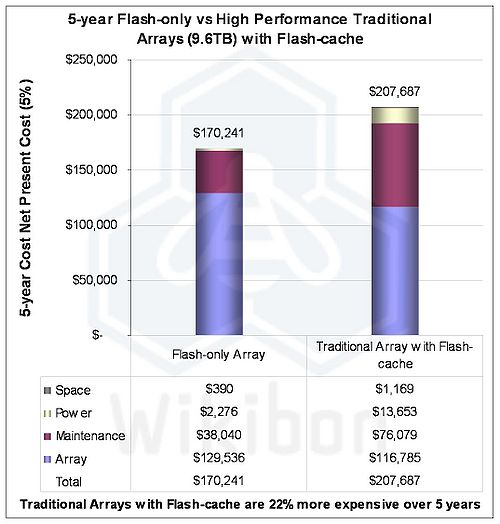

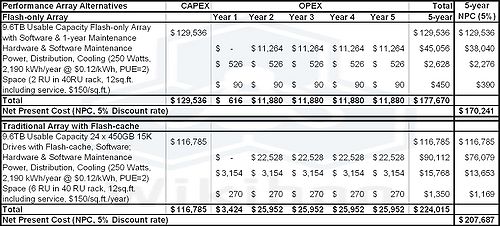

Source: Wikibon 2011. See Table 2 in the footnotes for detailed sources, assumptions and calculations

The Wikibon analysis results in Figure 2 show that the cost case for flash-only devices looks good against the traditional Tier 1.5 arrays. The CAPEX costs are similar, but the maintenance costs (flash being non-mechanical) are much lower. The reduction in costs for power and space adds further benefit. The performance, in terms of both IOPS and response time, is an order of magnitude better. The cost for a flash-only array is $17,733/TB over five years. The cost of the traditional array with flash-cache is $21,634/TB, 22% higher.
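The 22% figure follows directly from the two five-year costs quoted above. A minimal check of the arithmetic, using only those two numbers (the variable and function names are illustrative):

```python
# Five-year cost per TB as quoted in the text.
flash_only_per_tb = 17_733
flash_cache_per_tb = 21_634


def percent_higher(base, other):
    """How much higher `other` is than `base`, as a percentage of `base`."""
    return (other - base) / base * 100


premium = percent_higher(flash_only_per_tb, flash_cache_per_tb)
print(f"Traditional array with flash-cache: {premium:.0f}% higher per TB")
```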

Bottom Line: In summary, flash-only arrays offer the promise of consistent performance for active data that can be shared across SANs (or even InfiniBand networks). They can outperform every high-performance traditional array in the marketplace, with or without flash drives or cache, at a similar or lower cost. Flash-only arrays will not be competitive against traditional arrays with SATA drives with low-cost controllers and software. These arrays will be 6-10 times cheaper than flash-only arrays and will continue to dominate this market for the foreseeable future; they will represent the largest amount of storage as measured by terabytes.

The impact of flash-only arrays is highly disruptive and is the death-knell for high-performance SAS drives. The roll-out of this technology will be measured, as acquisitions from established vendors and aggressive cost-cutting of traditional solutions in accounts under threat will slow down adoption. Many users will prefer to see more adoption and more time in the field before biting the bullet. In the meantime, SolidFire and Nimbus coffee mugs will be collectors’ items and in strong demand for storage negotiations. Tier 1 storage will be the last to fall, as the need for proof will be very strong and the risk of failure very high. When it finally falls, it will fall the hardest.

However, the flash-only course is not as suitable for applications handicapped by the weight of all the additional baggage in the rest of the network and IO stack between the array and the processor; this is the area addressed by flash on the server.

Data in Server Flash Memory

Wikibon has written extensively about the constraints of IO on application functionality and user productivity. A case study on the century-old, family-owned, Chicago-based Revere Electric Supply Company showed the value of rapid response time after it had installed its entire database system on a Fusion-io ioDrive, an example of data in server flash memory.

Application architects have had to battle the constraints of very slow IO since the beginning of IT, 65 years ago. Databases in particular need to lock resources so that the data remains consistent during an update process (write lock) and users can be assured that the data has not been updated while they were processing data (read-lock). This locking introduces serialization into the process, and the slowest part of the serial component is the writing of data to non-volatile storage (disk) to ensure data integrity in the case of system failure. There is always a trade-off between ease-of-use and flexibility for the end-user of the system and response time and throughput of the system as a whole.
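The cost of this serialization can be put in rough numbers. If every update to a contended resource must hold its lock until the write to non-volatile storage completes, the lock hold time caps the update rate for that resource. The sketch below assumes the hold time is dominated by the commit write; the 5 ms disk and 0.1 ms flash latencies are illustrative assumptions, not figures from the article.

```python
def max_serialized_updates_per_sec(lock_hold_ms):
    """Upper bound on updates/sec to one resource when each update
    holds the lock for lock_hold_ms and updates cannot overlap."""
    return 1000.0 / lock_hold_ms


DISK_COMMIT_MS = 5.0   # assumed: seek plus rotation for a disk write
FLASH_COMMIT_MS = 0.1  # assumed: write to server flash

disk_ceiling = max_serialized_updates_per_sec(DISK_COMMIT_MS)
flash_ceiling = max_serialized_updates_per_sec(FLASH_COMMIT_MS)
print(f"disk:  about {disk_ceiling:.0f} serialized updates/sec")
print(f"flash: about {flash_ceiling:.0f} serialized updates/sec")
```

Adding processors or spindles does not raise this ceiling for a single hot resource; only shortening the write does.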

The Epicor Eclypse package used by Revere was designed to be very flexible and easy to use. However, as the system grew and additional functionality was implemented to improve productivity, response times went to hell in a handbasket. The culprit was slow spinning rust. The solution was to hold the complete database in server flash memory, which is about 50 times faster.

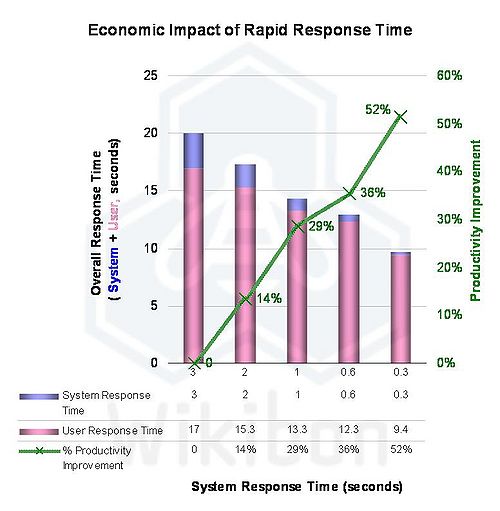

The theoretical results of improving response time are shown in Figure 3. A good rule of thumb is that halving response time will improve productivity by 20%.

Source: Wikibon, 2011
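The rule of thumb can be turned into a small calculator. The text gives only the single data point (halving response time yields a 20% gain); the assumption that the gain compounds with each successive halving is made here purely for illustration and is not taken from the Wikibon figure.

```python
import math

GAIN_PER_HALVING = 0.20  # from the rule of thumb in the text


def productivity_gain(old_response_s, new_response_s):
    """Estimated fractional productivity gain, ASSUMING the 20% gain
    compounds with every halving of response time."""
    halvings = math.log2(old_response_s / new_response_s)
    return (1 + GAIN_PER_HALVING) ** halvings - 1


# Hypothetical example: response time cut from 4 seconds to 2, then to 1.
print(f"4s -> 2s: {productivity_gain(4.0, 2.0):.0%}")
print(f"4s -> 1s: {productivity_gain(4.0, 1.0):.0%}")
```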

The practical results at Revere from putting the database onto Fusion-io ioDrives were dramatic. Mike Prepelica, director of IT for Revere, reported that the response time for some IT functions was 30 times faster. A business process that normally took three weeks was reduced to three days. The call center was able to handle a 20% increase in calls with no additional staff. Overall, Revere believes that putting the database into flash memory on the server saved it 10 staff out of a total of 200; that translates into a $360,000 saving every year, straight to the bottom line.

The modern cloud systems have all been built with end-user response times as a business priority. Google has built its search engine business based on getting the best answers back to the user in the shortest possible time. After every search it gives the number of hits and the time taken to respond, always sub-second for search. Facebook has built its business around the end-user experience. The technical challenges for Facebook were more severe than search, because users are updating their pages constantly, and those updates need to be reflected instantaneously to linked friends and any other user. As Facebook grew in numbers and functionality, the scale-out database design behind the magic reached maximum throughput, constrained by the serialization of the processes at very high locking rates. The solution was to put the critical databases into Fusion-io flash in server drives. Apple has a similar story, both in its use of flash in products (Apple was a winner of the 2010 Wikibon CTO award) and in its use of Fusion-io flash in its iCloud infrastructure.

Bottom Line: Rapid response time is becoming a business imperative for businesses and the people they hire and support. Becoming a rapid response-time company, or RARC, is emerging as a CEO and CIO imperative. As was said at the beginning, flash is about one thing: improving the end-user experience; that improvement will drive productivity and profitability for organizations that embrace it.

Flash as an Extension of Main Memory

After data is in server flash, the IO stack becomes the major bottleneck. The SCSI protocol was developed for very slow hard disk drives and is cumbersome and “chatty”. The ideal application environment would be one in which flash, as a non-volatile medium, could be implemented as an extension of volatile main memory in the server, and atomic writes could be made to server flash. Atomic writes allow guaranteed protection in non-volatile storage in a single pass and would greatly simplify the file-system architecture, and significantly improve the reliability and performance of database systems.
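To see what a single-pass atomic write would simplify, it helps to look at how applications commonly emulate an all-or-nothing update on a conventional file system today: write a temporary copy, force it to stable storage, then rename it over the original. The sketch below shows that familiar multi-step pattern; it is not the Fusion-io interface, and the function name is hypothetical.

```python
import os
import tempfile


def atomic_write(path, data: bytes):
    """Replace the file at `path` with `data` so that readers see either
    the old contents or the new contents, never a partial write."""
    directory = os.path.dirname(os.path.abspath(path))
    # Step 1: write the new contents to a temporary file in the same directory.
    fd, tmp_path = tempfile.mkstemp(dir=directory)
    try:
        with os.fdopen(fd, "wb") as tmp:
            tmp.write(data)
            tmp.flush()
            # Step 2: force the data out of OS buffers to the device.
            os.fsync(tmp.fileno())
        # Step 3: rename over the original in one operation.
        os.replace(tmp_path, path)
    except BaseException:
        if os.path.exists(tmp_path):
            os.unlink(tmp_path)
        raise


with tempfile.TemporaryDirectory() as workdir:
    record = os.path.join(workdir, "record.bin")
    atomic_write(record, b"version 1")
    atomic_write(record, b"version 2")
    with open(record, "rb") as f:
        print(f.read())
```

Each update costs an extra file, a full copy of the data, and at least one forced flush. Databases pay a comparable price with write-ahead logs and double writes, which is the overhead a native atomic write to flash would aim to remove.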

Fusion-io won the 2010 Wikibon CTO award for the introduction of its VSL architecture, a significant announcement that heralds a completely new way that performance systems will be architected in the future. Flash devices such as the iPad are already showing a change in the way that mobile applications are being written, which makes much more data available to the end-user to improve functionality and ease of use. Future server systems will use flash-on-server architectures to increase the data access density and locking rates by a factor of 10 or more. This will significantly improve the functionality and ease of use of applications.

Bottom line: Wikibon expects that Linux systems will be the fastest adopters of flash as an extension of main memory. High performance file systems, volume systems, and database systems will be the main areas of adoption, as well as key areas inside the operating and hypervisor systems. Modern cloud systems will be in the forefront, and IT organisations should expect to see significant announcements from many software vendors over the next 18 months.

Conclusions

Wikibon believes that flash has now reached a price and performance point where it will disrupt the traditional storage industry. The next two to three years will see three main tiers of enterprise storage:

- Flash as an Extension of Memory

This tier has the highest IO performance, with the fastest bandwidth and IOPS, and with IO latency measured in microseconds. An early Wikibon estimate is that it will account for the top 3% of data and 20% of storage spend by 2015. Some of this spend will be on separate storage cards, and some of it on flash on the motherboard. Storage networking will be peer-to-peer across InfiniBand and 10Gb Ethernet server interconnects. This tier will see the slowest adoption rate, as software changes will be needed in operating systems and databases to gain its full benefit. The business impact of changes to this layer will be the most profound, as the ease-of-use and productivity benefits of modern applications are realized. ISVs that do not respond with the investments required to modernize their product offerings could face severe disruption. The cost of this layer will be higher than the flash-only arrays and offer higher performance but probably slightly less efficiency and management capabilities, at least at the beginning.

- Flash-only Arrays

Flash-only arrays will be the industry norm for most active data that needs fast consistent performance using the current IO and network stacks that can be shared across the data center. An early Wikibon estimate is that it will account for the top 12% of data and 35% of storage spend by 2015. Key qualities will be the ability to provide QoS control capabilities and high levels of efficiency (data de-duplication, compression, thin provisioning, coherent snapshots, etc.). This tier will extend its market downwards into the SAS arrays as flash costs decline and performance improves. The last bastion to fall will be the Tier 1 arrays, where users require specialist capabilities that have been proven for some time in the field before they will trust very-high-value, mission-critical applications to a new platform.

Service providers will be among the early adopters of this tier, and it will enable them to offer ISV cloud services with fewer IT professionals and a greater degree of business control on the allocation and monetization of storage capacity and performance.

- Traditional Storage Arrays

This tier will hold the vast majority of enterprise data, but the least active. SATA high capacity disks will dominate. An early Wikibon estimate is that it will account for 85% of data and 40% of storage spend by 2015. Traditional high-functionality array software and the array controllers will decline in cost over time. Erasure encoding will increasingly be used for improving availability and recoverability. There will be some flash functionality for metadata and caching on the array controllers. The most interesting potential niche for storage arrays is in high levels of striping to achieve very high IO rates for rapid movement of data between the flash-only arrays and SATA disk. Will disk end up as the medium of choice for high-speed sequential transfer? Will disk finally replace tape?
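The three estimates above can be compared by dividing each tier's share of spend by its share of data, which gives a rough measure of relative spend per unit of data stored. The percentages below are the ones quoted in the text; the ratio itself is an illustrative calculation added here, not a Wikibon metric. Note that the quoted spend shares sum to 95%, not 100%.

```python
# (share of data %, share of storage spend %) by 2015, as quoted in the text.
tiers = {
    "Flash as an extension of memory": (3, 20),
    "Flash-only arrays": (12, 35),
    "Traditional storage arrays": (85, 40),
}

# Spend share divided by data share: relative spend per unit of data.
spend_intensity = {
    name: spend_pct / data_pct for name, (data_pct, spend_pct) in tiers.items()
}

for name, ratio in spend_intensity.items():
    print(f"{name}: {ratio:.2f}x spend share per unit of data share")
```

On these figures, a unit of data in the memory-extension tier attracts roughly 14 times the spend of a unit of data on traditional arrays.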

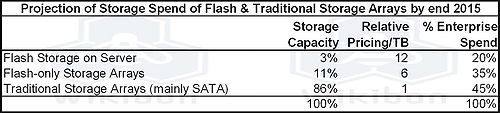

A summary of the projections is contained in Table 1 below. The market is very fluid, with plenty of room for innovation in combinations of storage topologies. But Wikibon agrees with the experts at the 2011 Flash Summit that flash will effectively replace high-performance disk for new array shipments by the end of 2015.

Source: Wikibon 2011

Action Item: CTOs and CIOs will need to align applications and data with the value they bring to the organization, and start segmenting resources. In particular, systems architects should identify the active and very active data and plan to move it to flash-only media.

Source: Wikibon 2011, based on information from Nimbus and NetApp websites, and additional data from Chris Mellor’s posting in The Register, downloaded 8/10/2011.