Introduction

The data industry has arrived at a pivotal juncture that echoes the themes we’ve charted in previous Breaking Analysis episodes, from The Sixth Data Platform through The Yellow Brick Road to Agentic AI; Why Jamie Dimon is Sam Altman’s Biggest Competitor all the way to the Faceoff between Benioff and Nadella. Those analyses have chronicled the steady convergence of analytic data platforms such as Snowflake and Databricks, not only with the hyperscalers’ estates, but increasingly, with the operational applications that run businesses. Our research indicates that this merger is not a side-effect of Gen AI hype; rather, it’s a structural shift in how enterprises will create value from data and software over the next several decades.

In this Breaking Analysis, and ahead of Snowflake Summit next week and the Databricks Data + AI Summit the week after, we frame what in our view is the data industry’s central aim: the race to evolve from data platform into a true System of Intelligence. Systems of Intelligence, as we have argued, are differentiated by their capacity to absorb the fragmented business logic scattered across legacy operational estates, harmonize it alongside trusted data, and expose it to agentic frameworks under human supervision. Only when that Rubicon is crossed can enterprises consistently answer, at scale, the four enduring questions of management: what happened, why it happened, what will likely happen next, and, most critically, what action should be taken next?

In our research, we’ve discussed the capabilities that hyperscalers possess and the infrastructure advantage they have captured. Moreover, vendors such as Salesforce, ServiceNow, Palantir, Celonis and Blue Yonder are actively traversing this chasm by embedding intelligence directly into workflows. Whether Snowflake, Databricks and the other analytical leaders choose to do so, or instead opt to solidify their position as the, let’s call it, “neutral analytics layer” feeding these emerging systems of intelligence, is a thread that we examine throughout this research. As we head into summit season, our premise is that platform strategy, not incremental feature wars, will separate tomorrow’s leaders from yesterday’s best-of-breed specialists. We welcome you to join us as we dissect four examples in this note (Snowflake, Databricks, AWS and Salesforce) in terms of their architecture, how they’re extending their franchises, buoying control points, and ultimately addressing board-level mandates to operationalize AI at scale.

From Dashboards to a Living System of Intelligence

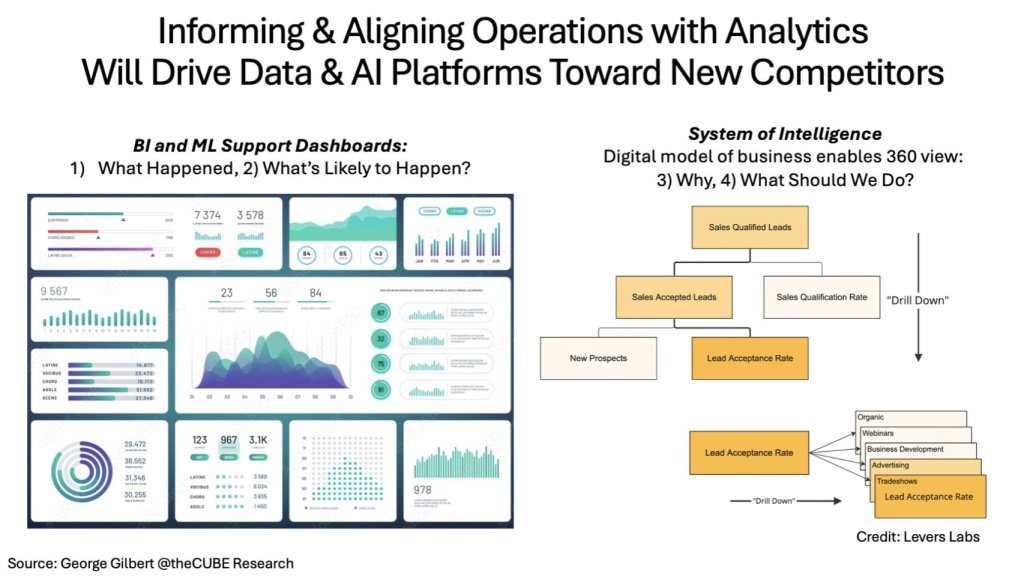

Our view is that enterprise analytics is evolving from static, historical dashboards to a dynamic, four-dimensional digital twin that continuously senses, predicts, and optimizes business performance. We believe this evolution is unavoidable if organizations expect to deploy autonomous agents with the requisite context and guardrails. Exhibit 1 below captures the evolution. On the left we show familiar BI and ML capabilities that answer what happened and what will likely happen. On the right, we show an interconnected metric tree that exposes causal pathways that explain why results occur and what should be done next. Such a system becomes the operational control plane for a digital business, closing the feedback loop between data, decision, and action.

Exhibit 1 above shows: 1) A conventional BI/ML dashboard with color-coded KPIs, trend lines, and gauges; and 2) A cascading metric tree that starts at Sales Qualified Leads, drills into acceptance and qualification rates, and ultimately traces down to channel-specific contributors such as webinars or tradeshows. The right-hand graphic illustrates how linking metrics in a dependency graph enables real-time root-cause analysis and prescriptive automation.
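
To make the metric-tree idea concrete, here is a minimal Python sketch of root-cause tracing over a dependency graph. It is an illustration under stated assumptions, not any vendor’s implementation: the `Metric` class, the `trace_root_cause` helper, and all of the figures are hypothetical, and "largest relative change" is just one simple heuristic for picking the dominant driver.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class Metric:
    """One node in a metric tree: last period's value, this period's value, and its drivers."""
    name: str
    previous: float
    current: float
    children: list[Metric] = field(default_factory=list)

    @property
    def relative_change(self) -> float:
        # Relative change lets us compare counts and rates on the same scale.
        if self.previous == 0:
            return 0.0
        return (self.current - self.previous) / self.previous


def trace_root_cause(metric: Metric) -> list[str]:
    """Walk down the tree, always following the driver that moved the most."""
    path = [metric.name]
    while metric.children:
        metric = max(metric.children, key=lambda child: abs(child.relative_change))
        path.append(metric.name)
    return path


# Hypothetical figures for illustration only.
webinars = Metric("Webinar leads", previous=400, current=250)
tradeshows = Metric("Tradeshow leads", previous=300, current=310)
lead_volume = Metric("Lead volume", previous=700, current=560,
                     children=[webinars, tradeshows])
qualification = Metric("Qualification rate", previous=0.50, current=0.49)
sqls = Metric("Sales Qualified Leads", previous=350, current=274,
              children=[lead_volume, qualification])

print(" -> ".join(trace_root_cause(sqls)))
```

In this toy data the drop in Sales Qualified Leads traces through lead volume to the webinar channel. A production system would need causal weighting and time alignment rather than a single greedy walk.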

Key implications

- Dashboards become nodes in a control loop. Traditional BI exposes lagging indicators, but a System of Intelligence (SoI) creates a live state, served up to agents, enabling micro-interventions that compound over time.

- A 4-D business map is fundamental for agentic AI. Our research premise is anchored on the assumption that without a time-series model of how activities influence one another, agents can neither learn safely nor justify their recommendations.

- Data platforms are rising “up the stack.” Snowflake, Databricks, and the hyperscalers (AWS in particular) are being pulled toward the operational domain (where Microsoft already exists with its applications suites); competing head-on with application players such as Salesforce and ServiceNow is now squarely on the roadmap.

- Feedback loops create platform lock-in. Once analytic, operational, and agentic workflows converge on a single SoI, switching costs increase dramatically, raising both the opportunity and the execution bar for vendors.

In our view, the journey from analytics to a full-fledged System of Intelligence reframes the competitive landscape. Specifically, data-native platforms must embed operational semantics, while application vendors race to harden their data layers. The next sections will examine the architectural evolution we see and specifically how each of the four contenders we highlight in this note is positioning for this new era.

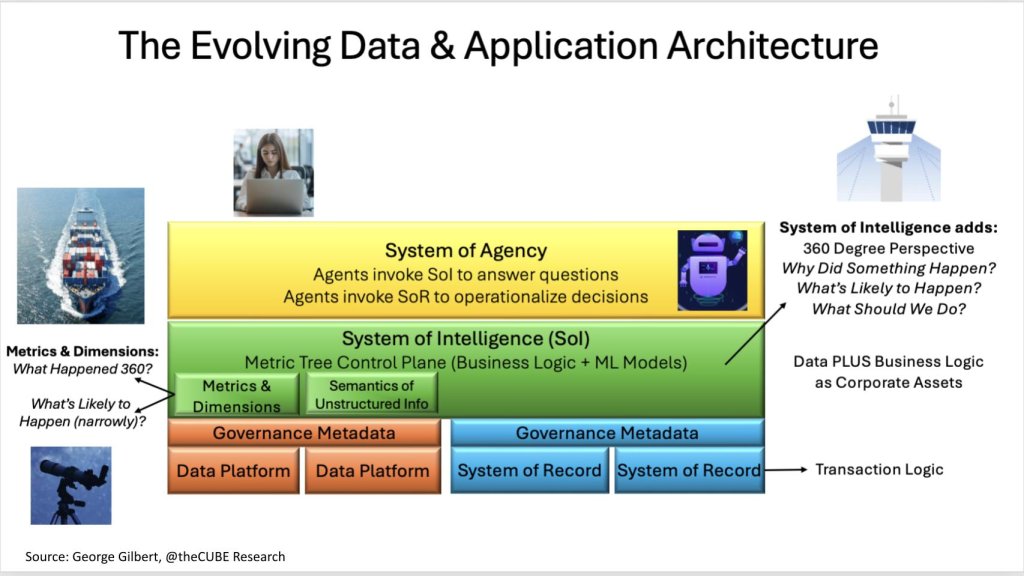

A New Three-Tier Stack and the Race to an Emerging Value Layer

The modern data stack is splitting into three distinct areas (see Exhibit 2 below). At the base sit systems of record, the transactional engines that run finance, supply chain, commerce, and customer operations. Above them emerges the system of intelligence (SoI), a metric-tree control plane that ingests and harmonizes data, metadata, and business logic, along with the machine-learning models needed to diagnose root causes and prescribe interventions. Topping off the picture is the emerging system of agency (SoA), where autonomous or human-assisted agents interact with the SoI for context, then invoke the systems of record to execute decisions at machine speed.

For added context on Exhibit 2, let’s dig into the stack diagram. It depicts three color-coded layers. The bottom tier (orange/blue) houses data platforms and transactional systems, governed by shared metadata. The middle green band, labeled “System of Intelligence,” embeds a metric-tree control plane that stores both metrics/dimensions and the semantics of unstructured information. The top yellow layer, “System of Agency,” shows agents querying the SoI to answer why and what should we do, then invoking systems of record to operationalize decisions. Call-outs note that the SoI adds a 360-degree perspective and that “data plus business logic” becomes a new type of corporate asset. The small icons depict a cargo ship, a telescope, and an air-traffic control tower to reinforce the journey from hindsight to foresight to real-time orchestration.
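
The three-tier interaction described above can be sketched in a few lines of Python. This is a toy model under our own assumptions: the class names, the threshold-based "business logic," and the approval callback are all hypothetical and stand in for far richer systems. The point is only the flow, in which an agent asks the SoI for context, a human can approve or decline, and only then is the system of record invoked.

```python
from __future__ import annotations

from typing import Callable


class SystemOfRecord:
    """Stand-in for a transactional application; it only logs executed actions."""

    def __init__(self) -> None:
        self.executed: list[str] = []

    def execute(self, action: str) -> None:
        self.executed.append(action)


class SystemOfIntelligence:
    """Holds harmonized metrics together with the business rule that interprets them."""

    def __init__(self, metrics: dict[str, float], floors: dict[str, float]) -> None:
        self.metrics = metrics
        self.floors = floors

    def context_for(self, metric: str) -> dict:
        value, floor = self.metrics[metric], self.floors[metric]
        return {"metric": metric, "value": value, "floor": floor, "breached": value < floor}


class Agent:
    """Reads context from the SoI, then asks a human before touching the system of record."""

    def __init__(self, soi: SystemOfIntelligence, sor: SystemOfRecord,
                 approve: Callable[[str, dict], bool]) -> None:
        self.soi, self.sor, self.approve = soi, sor, approve

    def handle(self, metric: str) -> bool:
        context = self.soi.context_for(metric)
        if not context["breached"]:
            return False  # metric is healthy, nothing to do
        action = f"open remediation task for {metric}"
        if not self.approve(action, context):
            return False  # human supervisor declined
        self.sor.execute(action)
        return True


# Hypothetical metrics and thresholds for illustration only.
sor = SystemOfRecord()
soi = SystemOfIntelligence(
    metrics={"qualification_rate": 0.42, "acceptance_rate": 0.80},
    floors={"qualification_rate": 0.50, "acceptance_rate": 0.75},
)
agent = Agent(soi, sor, approve=lambda action, context: True)
acted_on = [metric for metric in soi.metrics if agent.handle(metric)]
print(acted_on, sor.executed)
```

Keeping the approval step as an injected callback is a deliberate choice in this sketch: it lets the same agent run fully supervised or autonomously without changing the read-context-then-act flow.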

Why the SoI matters

- Our research indicates that unifying business logic—not just data—is now compulsory to support agentic AI. Harmonizing definitions such as customer segments or lead-qualification rules allows changes to ripple automatically across every analytic and operational workflow.

- An SoI therefore acts as a 4-D state machine of the enterprise, giving agents a deterministic map to read from, and, importantly, to write back to, without corrupting underlying records.

- Vendors that control this middle layer effectively own the enterprise feedback loop. We believe the prize is meaningful: attach rates for consumption services, higher switching costs, and a launch point for premium agentic capabilities will all be adjudicated here.

Vendor postures

| Player | Current Position | SoI Ambition & Gaps |
|---|---|---|
| Snowflake | Metric-store hints in Cortex Agents | Needs to elevate beyond analytic artifacts; no native logic engine yet |
| Databricks | Unity Catalog + Mosaic AI | Catalog could evolve into metric tree, but relational bias persists |
| Hyperscalers (AWS, Azure, GCP) | Fabric, Redshift RA, BigQuery Studio layers forming | Deep pockets but must bridge fragmented services into one control plane |
| Application Giants (Salesforce, ServiceNow, SAP, Workday) | Embedding metric trees inside SaaS domains | Advantage in domain semantics; risk of narrow scope outside core apps |
| Disruptors (Palantir, Celonis, Blue Yonder) | Already treat logic as first-class asset | Prove scalability beyond early adopter beachheads |

Bottom line

We believe the green layer is one of the most valuable and undeveloped pieces of real estate in enterprise software, ripe for appreciation. Those who control it will be in a stronger position to dictate the cadence at which data, models, and business logic evolve, and, by extension, the efficacy of every autonomous agent that sits above. Whether Snowflake, Databricks, a hyperscaler, or an application incumbent claims that ground will, we believe, define the competitive order of the next decade.

Snowflake: A “Statement” Earnings Print That Boosts Confidence

Snowflake’s May print was not only an earnings release but also a line-in-the-sand declaration that the company can accelerate product innovation while maintaining operating discipline. We highlight some of the key metrics in Exhibit 3 below.

The table above summarizes product revenue, RPO, NRR, gross margin, operating margin, and FY-26 guidance, with Y/Y deltas and commentary (e.g., bookings acceleration, AI cost absorption). The red box highlights an impressive $4.325 B revenue guide, underscoring management’s confidence in durable mid-20s growth.

Key narrative threads from management

The following points of emphasis from the earnings call were noteworthy in our view:

- Unified data, any workload. Snowflake finally has credible answers for lakehouse purists and unstructured-data skeptics. The architecture now spans warehousing, lakehouse, and data lake modalities under one governance umbrella.

- Workload expansion. By courting data engineers (Snowpark), developers (Java/Python/Scala), and business analysts (native apps), Snowflake is broadening its TAM beyond SQL analytics and addressing the data engineering workload TCO challenges that plagued the company over the past several years.

- Universal governance. Horizon’s policy layer, coupled with cloud region-agnostic Snowgrid, positions Snowflake as a compliance backbone for multi-cloud AI workloads.

- Architecture concentricity. The company positions storage/database at the hub, elastic compute as spokes, and a fast-growing Cortex AI ring that embeds vector search, model hosting, and, potentially, a metric store for agentic workflows.

What changed?

Twelve months ago Snowflake was barely mentioned in AI conversations within our community. Today, the company touts several Cortex wins, including at firms like Kraft Heinz and Luminate Data. Sridhar Ramaswamy’s mandate to “ship faster” is showing up in release velocity and, as importantly, in customer perception. Wall Street noticed, as shares popped ~14 % post-print, yet they remain ~50 % below the euphoric 2021 peak. Snowflake has taken the opportunity to buy back shares at an average price of ~$150, well below today’s value.

Snowflake’s position in the SoI land-grab

We believe Snowflake’s most consequential move is not GenAI per se; rather, it is the quiet build-out of a metric store that could mature into the green-layer System of Intelligence. If Cortex agents can read/write against a harmonized metric tree, Snowflake could morph from analytic powerhouse to an operational nerve center, bridging systems of record across clouds. The catch is that this demands a logic-aware substrate closer to a graph or semantic model than a traditional relational catalog. Conversations with management suggest that existing primitives will suffice, but we are less certain.

Risks and watch-items

- Data-engineering halo or head-fake? Competing for Spark workloads invites a knife-fight with Databricks on performance, price, and ecosystem mindshare.

- COGS creep. Gen-2 compute discounts mask rising inference costs; sustained gross-margin stability bears watching.

- SoI execution. Without a first-class logic layer, Snowflake risks ceding the green block to up-stack SaaS or down-stack hyperscalers.

Bottom line

In our opinion, Snowflake has re-entered the AI conversation with momentum and a healthier P&L, but the true test is whether it can convert Cortex and a nascent metric store into the decisive System of Intelligence layer. Own that, and the $4 billion run-rate could look small in hindsight; miss it, and Databricks, Salesforce, or the hyperscalers will try to fill the void.

ETR Data Signal: Snowflake Races to AI Leadership

The market’s initial impression was that Snowflake got caught flat-footed by the AI awakening. But good AI starts with quality, clean, governed, trusted data, and Snowflake customers have plenty of that. As such, with focus and engineering talent, Snowflake has catapulted itself to a leading position in the AI race.

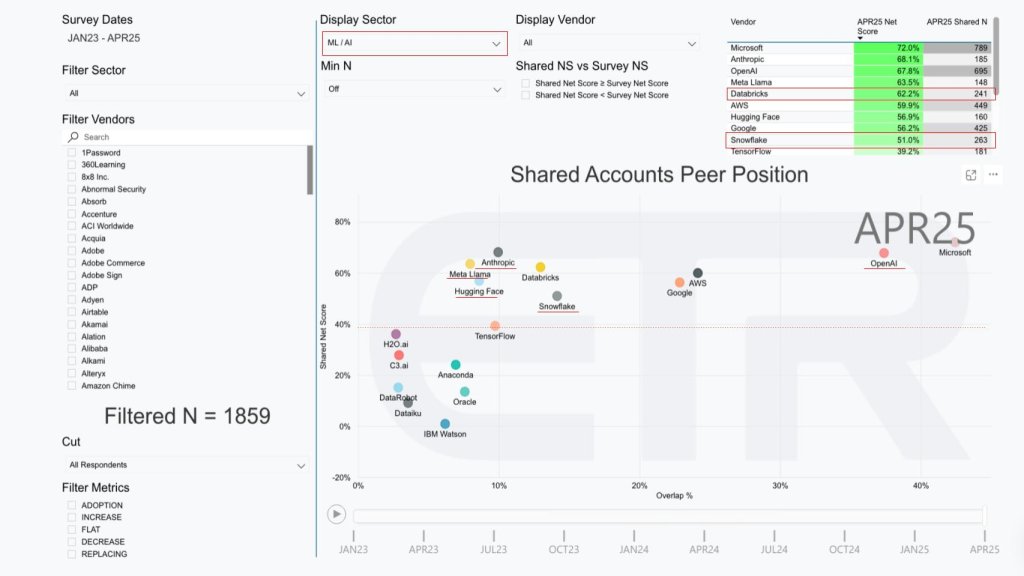

ETR’s longitudinal survey provides a quarterly pulse on CIO spending velocity (Shared Net Score, Y-axis) and vendor footprint (Overlap %, X-axis). The latest April 2025 cut for the ML/AI sector, shown in Exhibit 4 below, reveals a landscape few would have predicted just 18 months ago.

The scatter plot above shows vendors by Shared Net Score (a measure of spending velocity) versus Overlap % (account penetration). A red dotted horizontal line at 40 % indicates highly elevated spending momentum. Microsoft and OpenAI dominate the upper-right quadrant; AWS and Google hover just above the red line. Databricks sits high on velocity but mid-field on penetration. Snowflake has climbed above the 50 % velocity mark and, importantly, plots slightly further right than Databricks, signaling broader enterprise reach. Anthropic, Meta Llama, and Hugging Face cluster nearby, evidencing the rapid mainstreaming of open-weight and foundation-model providers. Notably, none of the red underlined vendors, including Snowflake, showed up in this segment in the January 2023 survey.

What the data says

- Snowflake breaks the 40 % red-line. Crossing ETR’s “highly elevated” threshold places the company in the same velocity tier as AWS and Google—a stark contrast to January 2023, when Snowflake didn’t even register in the ML/AI slice.

- Penetration exceeds Databricks. With 263 shared accounts versus Databricks’ 241, Snowflake now touches a marginally broader enterprise sample. Our research indicates this is a by-product of Cortex adoption and intensified data-engineering outreach. Notably, Databricks maintains the spending velocity advantage in the sector.

- Strong but narrow challengers emerge. Anthropic, Meta Llama, and Hugging Face enjoy elite net scores but lower overlap, reflecting concentrated—but rapidly growing—early-adopter clusters.

Strategic takeaways

- Validation of the Cortex strategy. The survey confirms that Snowflake’s late but decisive move into native AI services is resonating with budget holders. Sustaining that trajectory will require shipping production-grade inference, vector search, and a robust metric store before competitive parity closes the window.

- Databricks still owns the MLOps mindshare. While Snowflake edges ahead on footprint, Databricks maintains a ~11-point velocity premium, underscoring its stickiness in MLOps, model development and lifecycle tooling.

- Hyperscalers loom regardless. Microsoft and AWS combine ubiquitous infrastructure with vertically integrated AI stacks. Any SoI aspirant must differentiate on governance depth, cross-cloud portability, or domain semantics to avoid being subsumed.

Bottom line

The ETR data corroborates what Snowflake’s earnings hinted – i.e. customers are beginning to view the platform as a credible AI destination, not merely a cloud data warehouse. Penetration gains are tangible, but to close the velocity gap with Databricks—and keep up with fast-moving foundation-model incumbents—Snowflake must industrialize its Cortex roadmap and articulate a convincing path to the System of Intelligence green layer. Moreover, we expect Snowflake to continue to double down on its simplicity ethos and focus on taking the heavy lift out of AI deployments.

More than Product: Snowflake Tunes its Go to Market

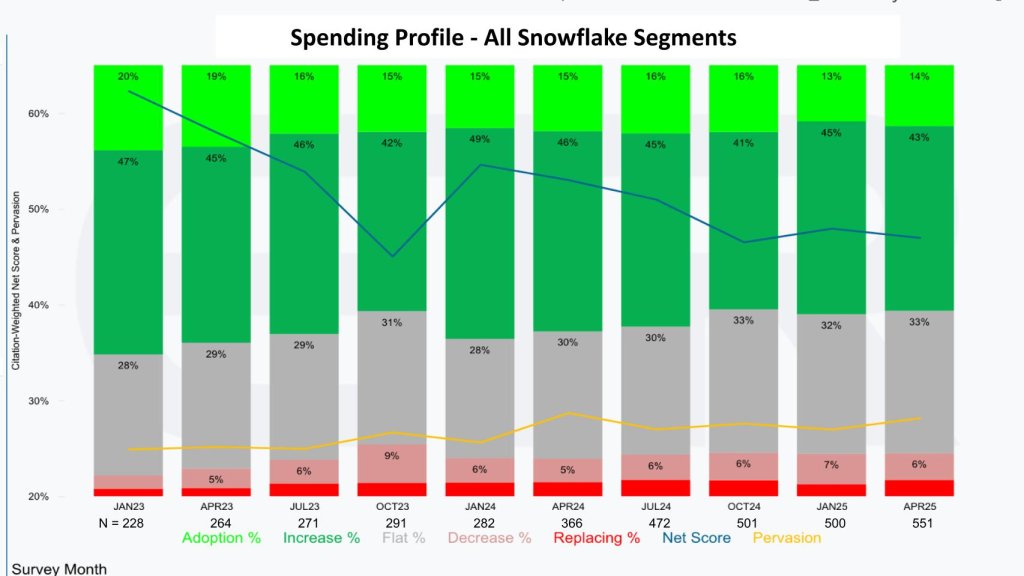

Snowflake’s engineering sprint has been supported by a material reset of its sales motions. Exhibit 5 applies ETR’s net-score-granularity lens across eleven survey snapshots (Jan ’23 to Apr ’25) and shows account spending patterns (not actual revenue but account behavior).

What the bars are telling us

- Adoption (lime green), the share of respondents entering Snowflake for the first time, slid from 20 % to 14 % as a percentage of the total sample. At face value that looks bearish, yet the absolute count of new-logo citations jumped from ~46 to ~77 because the overall respondent base more than doubled (N = 228 → 551). Perhaps coincidentally, the timing aligns with the March arrival of new CRO Mike Gannon and a new bookings quota overlay that rewards both land and consumption.

- Increase spend (forest green), meaning workloads growing ≥6 % annually, remains the dominant block, hovering in the mid-40s. Consumption still scales, but the mix is healthier when new logos are backfilling the pipeline.

- Flat / Decrease / Replace (gray-pink-red) – churn stays contained at single-digit levels, a testament to Snowflake’s stickiness even as Gen-2 compute discounts and AI inference costs rejigger bills.

The blue line represents net score and descended from the mid-60s in early ’23 to a trough in Oct ’23 (~47 %), then recovered to ~50 % and has held comfortably above ETR’s 40 % “highly elevated” watermark. The yellow penetration curve climbs steadily, mirroring the rise in N and confirming that Snowflake is touching an ever-wider slice of the enterprise universe.
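
The share-versus-count arithmetic in the Adoption bullet above is easy to check. The short Python sketch below uses only the percentages and sample sizes quoted in this note; the helper name `new_logo_citations` is ours, and rounding to the nearest whole respondent is an assumption about how the approximate counts were derived.

```python
def new_logo_citations(adoption_share: float, respondents: int) -> int:
    """Convert an adoption percentage into an approximate count of new-logo citations."""
    return round(adoption_share * respondents)


# Shares and sample sizes as quoted in the text: 20 % of N=228, then 14 % of N=551.
early = new_logo_citations(0.20, 228)
latest = new_logo_citations(0.14, 551)

print(early, latest)  # 46 77
```

A falling share applied to a much larger base still produces a higher absolute count, which is why the percentage bars and the new-logo trend can point in opposite directions.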

Why this matters

- Comp model evolution is bearing fruit. Snowflake’s original mandate—“only pay reps on consumption”—optimized expansion but thwarted new-logo velocity. By adding explicit booking quotas (and strong accelerators for >$10 M commits), management has reignited its hunters. It’s also firing on its consumption model as evidenced by two $100 M-plus deals in Q1. [Watch Frank Slootman correct Dave Vellante’s assertion regarding new logo sales targets back in 2023].

- Land + AI cross-sell. Cortex previews give account executives a reason to lead with capability rather than pure platform rent. Early proof points show AI services lifting first-year commit sizes by 20-30 %.

- Balanced pipeline de-risks macro. A thicker cohort of green-field customers cushions any cyclical softness in existing workloads and creates a larger surface for future consumption.

Note: We believe the emphasis on new logos represented a significant change in Snowflake’s compensation model that took quite some time to materialize. Perhaps the AI awakening distracted from the effort, but as shown in the following section, the ETR data confirms Snowflake’s assertions about its new logo trajectory.

We believe Snowflake’s go-to-market recalibration is more than cosmetic. A maturing product portfolio, paired with compensation that rewards new logos, bookings and consumption, positions the company to sustain mid-20s growth even if macro headwinds persist. The next test will be converting those incremental logos into Cortex-driven AI consumption dollars and funneling their operational data into the still-forming System of Intelligence. If Snowflake executes on that progression, the widening yellow line could prove to be the most important curve on the chart.

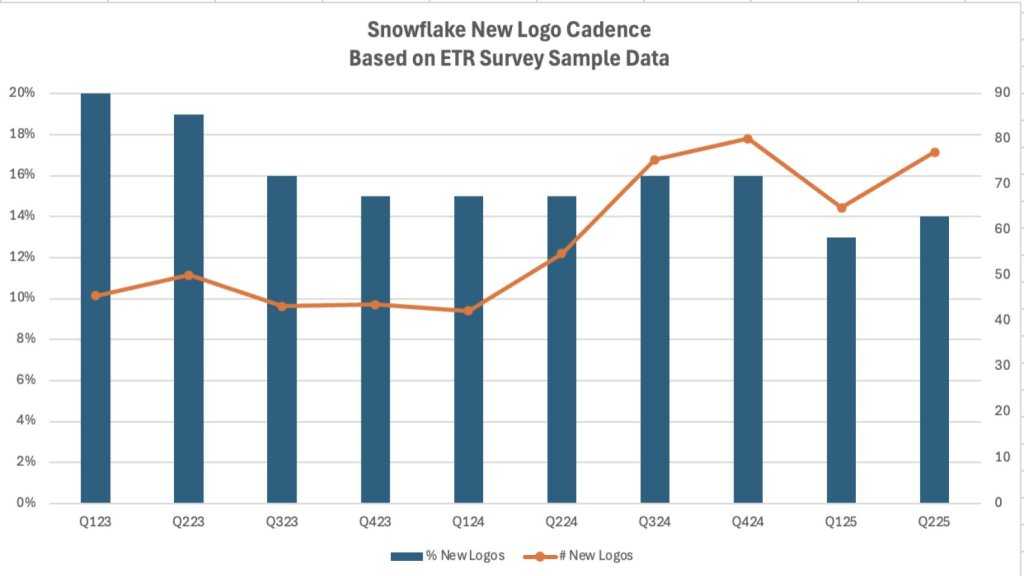

Snowflake’s New Logo Cadence: Proof Point in the ETR Time-Series

A quick but telling datapoint: Exhibit 6 below tracks Snowflake’s new-logo momentum across ten survey quarters. The blue bars represent the percentage of respondents citing Snowflake for the first time (from Exhibit 5); the figure has held in the mid-teens. The orange line, however, is calculated from absolute citation counts and shows the number of fresh logos rising sharply since Q2’24, mirroring the rough trajectory of Snowflake’s claims.

In sum, the data corroborate Snowflake’s assertion that its revamped go-to-market engine is landing meaningful net-new accounts. We see this as an important precursor to scaling Cortex and staking a claim to the System of Intelligence layer.

Snowflake’s Architectural Playbook: Unifying Relational, Vector, Structured, Unstructured and Governance Under One Roof

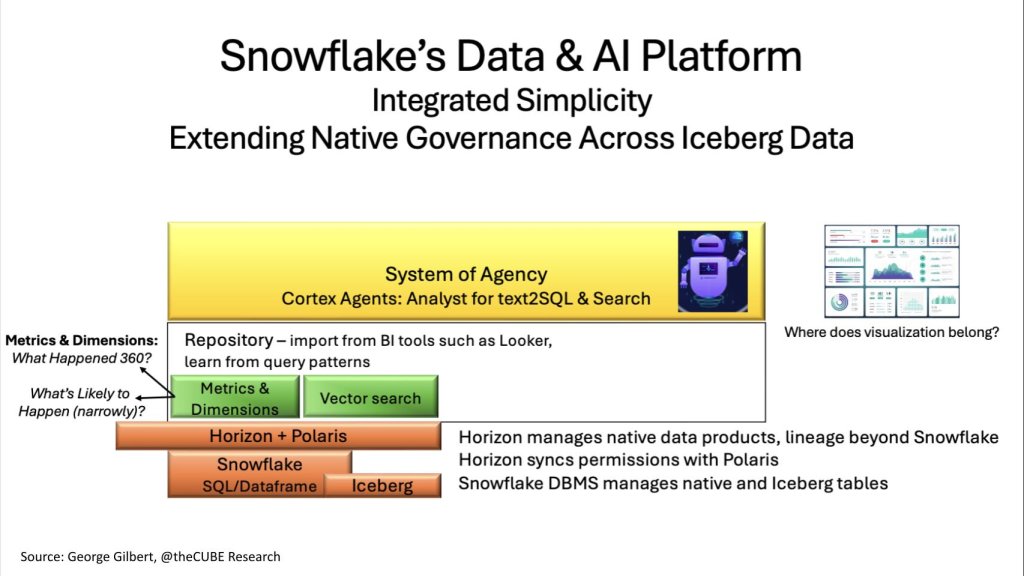

Let’s now examine Snowflake’s architectural approach in the context of the SoI “green-layer” challenge we put forth, specifically its ability to weld its original cloud-native warehouse to three new pillars (open-format data access, first-class governance, and agentic query), all while preserving the company’s hallmark of operational simplicity (see Exhibit 7 below).

Exhibit 7: Snowflake’s Data + AI Platform Architecture

The diagram above stacks Snowflake’s layers, including Snowflake SQL/Dataframe + Iceberg at the base; a Horizon + Polaris governance bar; a white Repository box housing Metrics & Dimensions alongside Vector Search; and a yellow System of Agency band where Cortex Agents reside. Our call-outs note that Horizon syncs Iceberg permissions, Cortex supports text-to-SQL & RAG, and a question mark hovers over “Where does visualization belong?”

| Architectural Element | What It Does | Why It Matters in the SoI Context |
|---|---|---|
| Horizon + Polaris Catalogs | Horizon (native) stores rich semantic, lineage, and permissions metadata; Polaris (open-source) syncs Iceberg schema & access control lists. | Source-of-truth (and control) shifts from the DBMS to the catalog. Unified governance lets Cortex agents traverse Snowflake and external Iceberg assets without security gaps or duplicate policy trees. |
| Snowflake SQL / Dataframe + Iceberg Tables | Core DBMS now manages both proprietary tables and open Iceberg partitions. | Goes beyond separating compute from storage: any compute detaches from any data. Customers gain freedom to run Spark or other transforms on Iceberg while keeping a single policy domain. |
| Metrics & Dimensions Repository | Imports Looker/BI semantic layers; enriches definitions from live query patterns. | Becomes the metric store: the structured substrate agents need to translate natural-language questions into deterministic SQL. |
| Vector Search (green block) | Stores embeddings side-by-side with rows and columns. | Enables hybrid queries that mix structured filters with semantic similarity to marry enterprise text to transactional facts. |
| Cortex Agents (System of Agency) | Cortex Analyst enables text-to-SQL on structured data. Cortex Search enables RAG on unstructured docs. | Delivers a “talk to the data” experience, but, equally important, lays the groundwork for agents that can write back actions once the SoI is in place. |
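
To illustrate what a "hybrid query" in the Vector Search row means in practice, here is a small, dependency-free Python sketch. It is not Snowflake’s API: the rows, the three-dimensional toy embeddings, and the `hybrid_search` helper are hypothetical. It only shows the pattern of applying structured filters first and then ranking the survivors by semantic similarity.

```python
import math


def cosine(a: list[float], b: list[float]) -> float:
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


# Hypothetical rows: structured columns stored side by side with an embedding.
rows = [
    {"ticket_id": 1, "region": "EMEA", "revenue": 120_000, "embedding": [0.9, 0.1, 0.0]},
    {"ticket_id": 2, "region": "EMEA", "revenue": 15_000, "embedding": [0.8, 0.2, 0.1]},
    {"ticket_id": 3, "region": "AMER", "revenue": 200_000, "embedding": [0.95, 0.05, 0.0]},
    {"ticket_id": 4, "region": "EMEA", "revenue": 90_000, "embedding": [0.0, 0.1, 0.9]},
]


def hybrid_search(rows, query_embedding, region, min_revenue, top_k=2):
    """Filter on structured columns, then rank the remaining rows by similarity."""
    candidates = [r for r in rows if r["region"] == region and r["revenue"] >= min_revenue]
    ranked = sorted(candidates, key=lambda r: cosine(r["embedding"], query_embedding),
                    reverse=True)
    return [r["ticket_id"] for r in ranked[:top_k]]


result = hybrid_search(rows, [1.0, 0.0, 0.0], region="EMEA", min_revenue=50_000)
print(result)  # [1, 4]
```

Ticket 3 is the closest semantic match but is excluded by the region filter, which is the whole point of combining the two kinds of predicate under one governed store.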

Strategic perspective

- Compute-from-DBMS to Compute-from-Catalog. Snowflake popularized the separation of compute from storage. The next round focuses on separating compute from data location & format. Betting on Iceberg, and dual catalogs, keeps Snowflake in the open-data conversation without abandoning its proprietary value add.

- Metric store is a possible beachhead in the green layer. By folding semantics, lineage, and policy into Horizon and surfacing them through Cortex, Snowflake is inching toward an SoI. Whether Horizon evolves fast enough – or remains a glorified analytics catalog – will decide if Snowflake owns or merely rents that layer.

- Visualization remains an open question. BI vendors could hijack the text-to-SQL moment by coupling natural language with rich visuals out of the box. Snowflake may need tighter OEM deals, or a lightweight native viz layer, to close the loop between query and insight.

- From answers to actions. Cortex today stops at “what happened” and “why it happened.” Bridging to “what should we do” will require either workflow hooks into SaaS systems or native write-back APIs governed by the same Horizon policies. We’ll watch next week’s Summit for clues.
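
The metric-store idea discussed in this section, where governed definitions let agents produce deterministic SQL, can be sketched as follows. This is a simplified illustration under our own assumptions: the `MetricDefinition` class, the `leads` table, its columns, and the `compile_metric` helper are hypothetical and do not represent Horizon, Cortex Analyst, or any Snowflake interface.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class MetricDefinition:
    """A governed metric: one agreed expression plus the dimensions it may be sliced by."""
    name: str
    expression: str
    table: str
    dimensions: tuple[str, ...]


# Hypothetical metric store with a single harmonized definition.
METRIC_STORE = {
    "qualified_leads": MetricDefinition(
        name="qualified_leads",
        expression="SUM(CASE WHEN status = 'qualified' THEN 1 ELSE 0 END)",
        table="leads",
        dimensions=("channel", "region"),
    ),
}


def compile_metric(metric_name: str, dimension: str) -> str:
    """Turn a (metric, dimension) request into the same SQL every time."""
    metric = METRIC_STORE[metric_name]
    if dimension not in metric.dimensions:
        raise ValueError(f"{dimension!r} is not an approved dimension for {metric_name!r}")
    return (
        f"SELECT {dimension}, {metric.expression} AS {metric.name} "
        f"FROM {metric.table} GROUP BY {dimension}"
    )


print(compile_metric("qualified_leads", "channel"))
```

In this framing, a language model only has to map a question onto a metric name and a dimension; the SQL itself comes from the governed definition, which is what makes the answer repeatable.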

Our view

Snowflake’s architecture bets on “integrated simplicity” – i.e. one policy domain, one storage fabric (proprietary + Iceberg), and one agentic interface. If the company can industrialize Horizon into a full metric tree and embed lightweight operational hooks, it will have a credible claim to the System of Intelligence, without forfeiting its performance pedigree. The alternative is ceding that green layer to Databricks, a hyperscaler, or an application vendor and watching agents treat Snowflake as just another data substrate.

Databricks: Unity Catalog as the Lakehouse Control Plane and Launchpad for Agentic AI

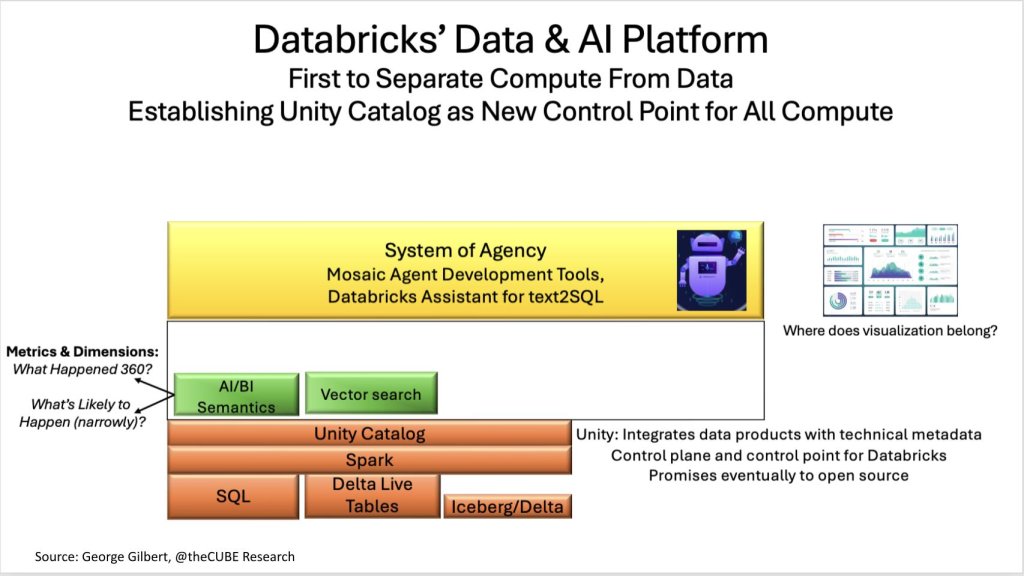

If Snowflake’s core innovation was separating compute from storage, Databricks’ contribution was to decouple any compute engine from any data format. Exhibit 8 below shows how that principle now extends further up the stack, with Unity Catalog positioned as the authoritative control point for data, models, and business semantics across Spark, SQL warehouses, Delta Live Tables, and external Iceberg/Delta stores.

The diagram above shows orange layers with Iceberg/Delta tables at the base, topped by Delta Live Tables, Spark, and SQL Warehouse, with a Unity Catalog bar spanning the full width. Unity is the integration point for data products and a key control point, with the allure of open source. Above sits a white box for AI/BI Semantics and Vector Search (both in green), capped by a yellow System of Agency containing Mosaic Agent Tools and Databricks Assistant. Our call-out again asks, “Where does visualization belong?”

| Architectural Element | What It Does | Competitive Contrast & SoI Implications |
|---|---|---|
| Unity Catalog (stand-alone) | Governs schemas, lineage, permissions, and AI/BI metric definitions; promised to be open-sourced. | Unlike Snowflake’s Horizon (nested in the DBMS), Unity is an independent service, which makes it easier to register data that never lands in a Databricks cluster. Control-plane neutrality will appeal to enterprises standardizing on Iceberg. |
| Spark + SQL Warehouse + Photon | “Any engine wins” ethos: batch, streaming, SQL, Python/Rust UDFs. | Flexibility attracts developers, but raises the bar for performance parity across engines. |
| Delta & Iceberg Tables | Databricks can now read and write Iceberg after acquiring Tabular (Ryan Blue’s firm). | Native dual-format support removes a barrier for customers standardizing on Iceberg while preserving Delta optimizations. |
| AI/BI Semantics Layer | Combines top-down metric definitions with bottom-up learning from query patterns. | Similar goal to Snowflake’s metric store, but fully embedded in Unity and automatically version-controlled. |
| Vector Search (green) | Embeddings stored and indexed alongside structured data. | Enables hybrid queries and RAG from a single catalog. |
| System of Agency (yellow) | Databricks Assistant: text-to-SQL across the lakehouse; Mosaic Agent Tools: SDK + orchestrator for custom agents. | We believe Mosaic’s low-level tooling may be the most sophisticated among platform vendors, but still needs a 4-D business model to supply outcome feedback loops. |

Key distinctions vs. Snowflake

- Stand-alone catalog vs. embedded catalog. Unity’s independence lets Databricks act as Switzerland for governance, in theory even over data that Snowflake stores. Snowflake counters with tighter integration, simpler policy inheritance, and what it claims is a truly open source Polaris catalog.

- Agent tooling depth. Mosaic brings fine-grained control over agent planning, memory, and evaluation. These are features developers who build enterprise copilots covet. Snowflake’s Cortex focuses on turnkey text-to-SQL and search experiences.

- Visualization remains unresolved. Both Snowflake and Databricks punt the “last mile” to external BI tools, for now. Whoever closes that gap first could own the analytic workflow end-to-end. The flip side is leaving that gap means more value add opportunities for ecosystem partners.

- Path to the green layer. Databricks has the catalog foundation, vector-structured unification, and agent SDK. What’s missing is the metric tree that maps causal relationships across business processes. Without it, agents can answer “what” but struggle with “why” and “what should we do.”

Our view

As a private company, Databricks is more difficult to track, but the firm continues to impress in the ETR data set and in our customer conversations. Very clearly the company has a winning posture in the market with a differentiated approach from Snowflake. It continues to press the “open compute, open data” advantage, which resonates with the market. Unity Catalog plus Tabular gives the company a credible route to becoming the enterprise’s de-facto data and model registry, irrespective of where the data lives. If Databricks can elevate Unity into a full-function metric tree and couple Mosaic agents to real-time business feedback loops, it could seize the System of Intelligence mantle. Fail to do so, and the green layer will be contested territory ripe for Salesforce, ServiceNow, or an ambitious hyperscaler to occupy.

AWS: From “Choose-Your-Own Primitive” to an Integrated SageMaker Experience

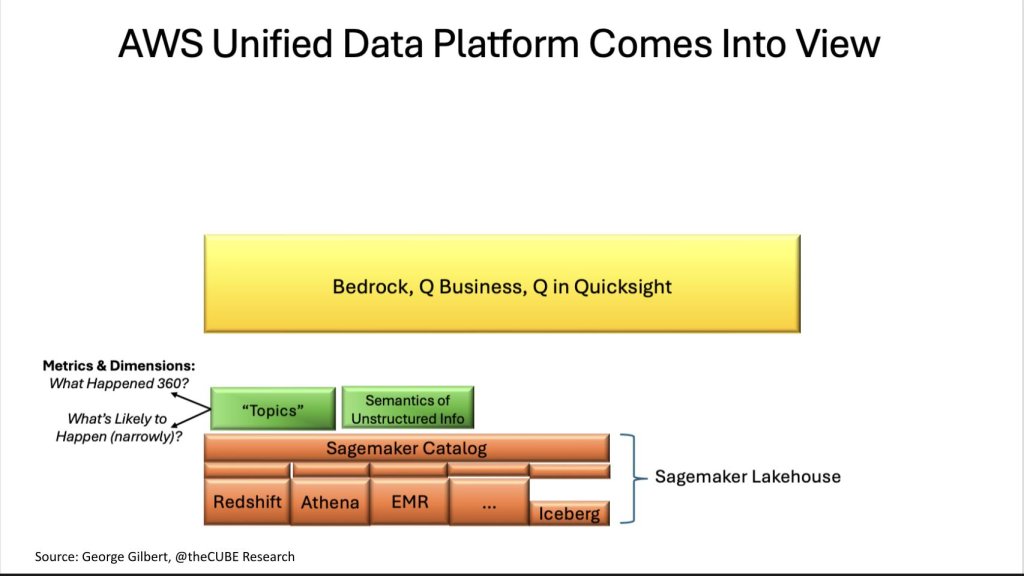

AWS re:Invent 2024 marked a philosophical portfolio extension for the company in our view. After years of telling builders to assemble their own Lego sets using primitives, the company has also combined what were relatively independent primitives into a more integrated and accessible framework. The analytic data management engines, storage engines, and governance are now all part of SageMaker. AWS integrated Redshift, Athena, EMR (Spark), and Iceberg into a cohesive framework governed by a SageMaker Catalog, based on the DataZone metadata manager, then crowned the stack with Bedrock for building agent-based applications and the Q family of LLM and agent-based services (see Exhibit 9 below).

The diagram above shows the base layers in orange with Redshift, Athena, EMR, …other tooling and Iceberg bricks under a broad SageMaker Catalog bar that serves as a unifying layer. Above that, two green blocks—Topics (metrics/dimensions) and Semantics of Unstructured Info feed a large yellow System of Agency rectangle labeled Bedrock, Q Business, Q in QuickSight. The arrows at the left annotate What happened? vs. What’s likely to happen? questions; a brace at right indicates the whole stack is an integrated “SageMaker Lakehouse,” simplifying the customer experience.

The coherence extends even beyond AWS’s first-party data management and processing engines. SageMaker has fully embraced the lakehouse architecture of open compute engines. It can join data or federate queries not just across its own analytic and storage engines, such as S3 Tables and Iceberg, but also to its operational database, DynamoDB; third-party analytic databases such as Snowflake and BigQuery; and enterprise applications such as Salesforce and SAP.

The glue that makes these disparate data sources appear coherent is SageMaker Catalog. The catalog is where data producers and consumers meet in order to enable collaboration. Generative AI enriches the metadata in the catalog to make it easier to find, understand, and consume data. Similar to Databricks’ Unity Catalog, SageMaker Catalog manages full data products such as AI models, prompts, and GenAI assets. Most importantly, the catalog learns which data products are related and recommends how to use relevant ones together to accomplish tasks such as building queries or designing models.

| Architectural Element | Role in the Lakehouse | Why It Matters for a System of Intelligence |
|---|---|---|
| SageMaker Catalog (built on DataZone) | Unifies technical metadata, data-product lineage, and business “Topics” (metrics & dimensions) across all AWS analytic engines. | Becomes the control plane that insulates users from engine/file-format sprawl while inheriting AWS IAM, KMS, and Lake Formation policies. |
| Redshift, Athena, EMR, Iceberg | Multiple compute engines and table formats under one roof; catalog orchestrates cross-engine joins transparently. | Marries the flexibility of primitives with a framework’s simplicity, letting agents query without knowing where, or in what format, the data resides. |
| “Topics” + Semantic Index | Business-language layer that Q services reference when translating natural language questions into SQL/Athena queries. | Topics are similar to a metric store; organizes data essential for deterministic answers to GenAI queries. Semantic index organizes vector embeddings for agent-based RAG. |
| Bedrock + Q Business/Developer + Q in QuickSight | Yellow “System of Agency” band. Bedrock is a managed service for models and supports agent development. Q chats with data; QuickSight renders rich visuals. Q is like an in-house ChatGPT. Q in QuickSight marries text2SQL with BI visualization. Q Developer is AWS’ AI engineer. | Tight coupling of ask-answer-visualize in a single console could blunt the “where does viz belong?” gap plaguing Snowflake & Databricks. |
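
The "Topics" row is easier to reason about with a small example. The sketch below assumes a hypothetical metric store in which each governed metric has exactly one SQL definition, so the same business question compiles to the same query every time. `METRICS`, `DIMENSIONS`, `compile_query`, and the `orders` table are illustrative names, not part of any AWS service.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Metric:
    """A governed metric with a single SQL definition."""
    name: str
    expression: str   # SQL aggregate expression
    table: str


# Hypothetical topic: the metrics and dimensions an assistant may use.
METRICS = {
    "revenue": Metric("revenue", "SUM(amount)", "orders"),
    "order_count": Metric("order_count", "COUNT(*)", "orders"),
}
DIMENSIONS = {"region", "order_month"}


def compile_query(metric_name: str, dimension: str) -> str:
    """Compile a (metric, dimension) pair into SQL from governed definitions.

    An LLM would only pick the metric and dimension; the SQL text comes
    from the store, which is what keeps the answer deterministic.
    """
    if metric_name not in METRICS:
        raise KeyError(f"unknown metric: {metric_name}")
    if dimension not in DIMENSIONS:
        raise ValueError(f"dimension not allowed in this topic: {dimension}")
    metric = METRICS[metric_name]
    return (
        f"SELECT {dimension}, {metric.expression} AS {metric.name} "
        f"FROM {metric.table} GROUP BY {dimension}"
    )


sql = compile_query("revenue", "region")
print(sql)
```

Under these assumptions, a question such as "revenue by region" can vary in wording, but the generated query cannot drift, because the aggregate is looked up rather than written by the model.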

Strategic observations

- Primitives meet framework. AWS has reconciled its “no frameworks” dogma by treating the catalog as a simplified and opinionated composition layer. And AWS now hides the joins between disparate analytic and storage engines and operational databases. Developers can still access raw engines, but many users will choose to work through a curated entry point.

- Identity & security baked in. Because SageMaker Catalog hooks directly into IAM, policies propagate end-to-end, an advantage when enterprises weigh governance overhead versus multicloud optionality.

- Early glimpse of agentic deep-research. AWS engineers describe a future where Q chains multi-hop queries, clarifies intent, and updates catalog metadata based on user interactions. We see this as a feedback pattern that could evolve into a true SoI learning loop.

- Walled garden trade-off. Interoperability beyond AWS services finally opens up with BigQuery and Snowflake support, yet customers betting on cross-cloud metric trees may still view SageMaker Lakehouse as another island. The hyperscaler’s answer is ecosystem breadth and price/performance leverage.

Our view

AWS has quietly constructed a vertically integrated data-and-AI platform that rivals anything the pure-plays offer, yet with the financial, geographic, and service breadth only a hyperscaler can muster. Whether SageMaker Catalog can someday mature to support a shared, causal metric tree, or remains an AWS-only operational and business catalog, will determine if Amazon simply hosts the next wave of Systems of Intelligence or owns them outright. Either way, the bar for Snowflake, Databricks, and SaaS incumbents just went up based on what we saw at re:Invent 2024.

Salesforce: Turning SaaS Inside-Out and Monetizing the System-of-Intelligence Itself

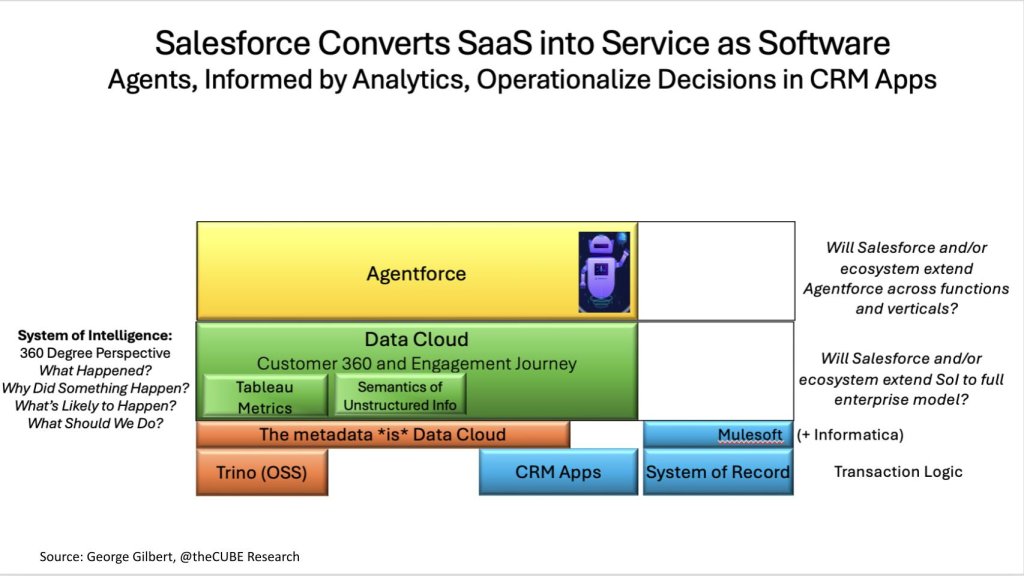

In our opinion, Marc Benioff’s end-game is no longer selling cloud seats; it is monetizing the feedback loop that turns raw customer events into autonomous actions. Exhibit 10 below shows how Salesforce has inverted the classic SaaS stack – i.e. Data Cloud sits in the green “System of Intelligence” band, abstracting away where data lives, while Agentforce occupies the yellow “System of Agency” layer, driving workflow back into CRM, MuleSoft APIs, and third-party systems.

The base layer above shows CRM Apps (blue) next to System of Record bricks, connected via MuleSoft (+ Informatica). Above, the orange areas start with Trino, the open source query engine. That is not Salesforce’s value add. Above Trino, the bar reads “The metadata is Data Cloud,” meaning schema-as-code. The green Data Cloud block hosts Customer 360 and Engagement Journey plus two smaller green tiles—Tableau Metrics and Semantics of Unstructured Info. A yellow Agentforce band crowns the diagram. Right-hand call-outs pose two questions: “Will Salesforce and/or ecosystem extend Agentforce across functions and verticals?” and “Will Salesforce and/or ecosystem extend SoI to full enterprise model?”

| Architectural Element | Function | Competitive & Monetization Angle |
|---|---|---|
| Data Cloud (Customer 360 + Engagement Journey) | Harmonized 4-D model of every customer interaction, enriched by Tableau Metrics and unstructured-data semantics. | Salesforce treats metadata, not tables, as the corporate asset. Zero-copy federation queries Snowflake, Databricks, and on-prem stores, lowering data-gravity friction and heading off “warehouse wars.” |
| Trino Fabric (open-source) | Executes distributed queries across remote sources. | Addresses lock-in criticism; keeps infra costs off Salesforce’s P&L while preserving governance control. |
| Agentforce | LLM-powered agents that read Data Cloud context and write back to Sales, Service, Marketing, etc. | Converts SaaS features into pay-per-outcome “Service-as-Software.” Raises the question: will pricing shift from seat licenses to usage- or KPI-based metrics? This is the new value-add layer for Salesforce. |
| MuleSoft + Informatica | MuleSoft exposes external APIs; Informatica excavates hidden logic and lineage from legacy apps/data. | Extends Data Cloud’s model beyond CRM, enabling agents to trigger actions in ERP, supply-chain, or vertical stacks. Important for enterprise-wide SoI. |

Why this is different

- Model > data. By anchoring on a metadata-driven customer graph, Salesforce positions itself as the interpreter of enterprise events, leaving heavyweight storage and infrastructure to partners.

- Closed loop through first-party apps. Agents don’t just suggest next best actions; they execute them inside Sales, Service, or Marketing Cloud, capturing outcome signals that continually refine the model. This lets agents learn in a continuous loop, both from outcomes and from the reasoning traces captured when humans intervene.

- Ecosystem leverage. Zero-copy federation turns potential rivals (Snowflake, Databricks) into data pipes; MuleSoft & Informatica recruit legacy estates into the loop. The prize is cross-functional metric trees without rewriting core systems.

- Pricing wild card. If Agentforce charges per automated outcome (e.g. lead conversion or case resolution), the ripple could pressure its own seat-based model and those of incumbents (e.g. SAP, Oracle).
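
The pricing wild card above comes down to simple arithmetic. As a rough sketch, the break-even point between the two models is the number of billable outcomes at which outcome revenue equals seat revenue. Every figure below (seat count, seat price, price per outcome) is a placeholder chosen for illustration and does not reflect any vendor's actual pricing.

```python
def seat_revenue(seats: int, price_per_seat: float) -> float:
    """Revenue per period under a seat-based license."""
    return seats * price_per_seat


def outcome_revenue(outcomes: int, price_per_outcome: float) -> float:
    """Revenue per period when billing per automated outcome."""
    return outcomes * price_per_outcome


def break_even_outcomes(seats: int, price_per_seat: float,
                        price_per_outcome: float) -> float:
    """Outcomes per period at which both models yield the same revenue."""
    return seat_revenue(seats, price_per_seat) / price_per_outcome


# Placeholder inputs, purely illustrative.
seats = 100
price_per_seat = 150.0      # per seat per month (assumed)
price_per_outcome = 2.0     # per resolved case (assumed)

threshold = break_even_outcomes(seats, price_per_seat, price_per_outcome)
print(f"Break-even at {threshold:.0f} outcomes per month")
```

Below the threshold the vendor earns less than it did on seats; above it, outcome billing expands the account. That asymmetry is why the shift would pressure a vendor's own seat-based revenue before it pressures anyone else's.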

Our view

We believe Salesforce is furthest along in productizing the System of Intelligence as a revenue line. By decoupling models from storage and embedding agents natively in transaction flows, the company moves from selling software to selling outcomes. We see this as a disruptive shift that could reset valuation frameworks across the SaaS sector. The open questions are scale and scope. In other words, can the ecosystem enrich Data Cloud beyond customer-facing domains, and will enterprises stomach a usage- or value-based pricing pivot? Those answers will determine whether Salesforce merely defends its CRM beachhead or commands the green layer across the full enterprise stack.

SoI Platform Wars: The Race to Own the Green-Layer Control Point

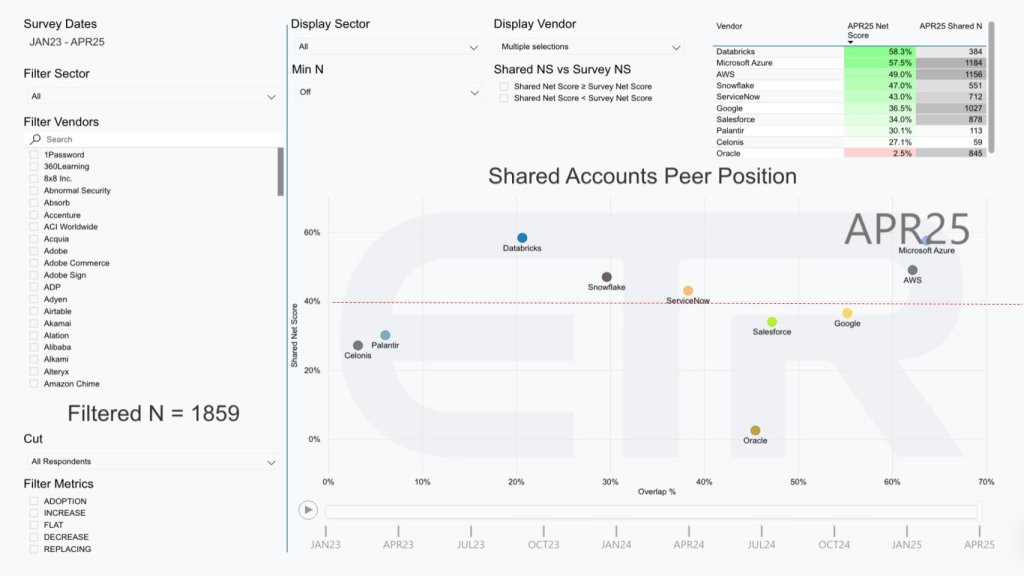

The April ’25 ETR “Shared-Accounts Peer Position” heat-map (Exhibit 11 below) lays bare the competitive board. The scatter plot below maps vendors by Shared Net Score (spending velocity Y-axis) versus Overlap % (account penetration X-axis). A red horizontal line at 40 % denotes a “highly elevated” velocity. Azure and AWS cluster top-right; Databricks posts the single highest velocity among independents; Snowflake, ServiceNow, Salesforce, and Google line up just above the red line; Palantir, Celonis, and Oracle appear lower, signaling either niche focus or maturity.

- Azure and AWS occupy the upper-right quadrant with spend velocity north of 40 % and penetration greater than 50 %. These levels make them the default substrate for enterprise AI experimentation and deployment.

- Databricks leads all independents with a 58 % net-score and the highest momentum in the survey, while Snowflake continues its steady drift rightward, penetrating accounts more deeply than any non-hyperscaler.

- ServiceNow and Salesforce sit in the 30-40 % spend velocity band with broad account penetration (Overlap), validating their push to embed metric trees inside domain apps.

- Google remains a step behind on spend velocity but claims the only home-grown foundation-model stack. This is an edge that may grow as Gemini’s agentic features converge with Google’s data platforms (AlloyDB and BigQuery).

- Palantir and Celonis show elevated but narrower plots, each banking on deep domain semantics – operational decision intelligence and process mining, respectively – to vault into the SoI conversation.

- Oracle stays below the 40 % red-line despite impressive momentum in OCI and its entire stack. The ETR data doesn’t tell the full story for Oracle. Our view is Oracle almost always wins because whatever market trend takes shape, Oracle will eventually apply its engineering muscle to capture value within its own stack. It has created a highly differentiated strategy that has thus far proven impenetrable by market changes or the competition. Indeed, the burden is on Oracle to continue to innovate as it has with Autonomous Database and OCI Generative AI to defend and expand its turf.
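
For readers who want to apply the same reading to their own survey cuts, the quadrant logic described above can be sketched as follows. The 40 % Net Score line and the 50 % penetration level come from the text; the function name, the quadrant labels, and the sample points are hypothetical and are not ETR data.

```python
NET_SCORE_LINE = 40.0   # "highly elevated" spending velocity line from the exhibit
OVERLAP_LINE = 50.0     # penetration level cited for the upper-right quadrant


def quadrant(net_score: float, overlap: float) -> str:
    """Classify a vendor by spending velocity and account penetration."""
    high_velocity = net_score > NET_SCORE_LINE
    broad = overlap > OVERLAP_LINE
    if high_velocity and broad:
        return "high velocity, broad penetration"
    if high_velocity:
        return "high velocity, narrower penetration"
    if broad:
        return "lower velocity, broad penetration"
    return "lower velocity, narrower penetration"


# Illustrative points only; these are not survey results.
samples = {
    "vendor_a": (45.0, 60.0),
    "vendor_b": (55.0, 20.0),
    "vendor_c": (35.0, 55.0),
    "vendor_d": (25.0, 10.0),
}

for vendor, (net_score, overlap) in samples.items():
    print(vendor, "->", quadrant(net_score, overlap))
```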

Mapping the data to our premise

- Green-layer gravity is real. Vendors that expose a harmonized metric tree – e.g. Databricks via Unity semantics, Snowflake via Horizon, Salesforce via Data Cloud – will continue to sustain higher spending velocity than those that merely host models.

- Penetration does not equate to inevitability. Azure’s and AWS’s reach is formidable, yet the survey shows room for AI-specific platforms (Databricks, ServiceNow, Salesforce) to win incremental budgets when they solve for end-to-end workflows.

- Outcome pricing looms. As we’ve underscored, once agents start altering P&L-level metrics, the market will shine a light on value-based outcomes, which is certain to be reflected in pricing models. Seat-based pricing is a poor proxy for value. Consumption pricing is better, but value-based pricing models will emerge that reshape contracts and negotiations. We expect seat-based incumbents to be compressed and buyers to reward platforms with clear causal ties between recommendation and result.

Closing Thoughts – Crossing the Rubicon

Our research indicates enterprise data platforms are no longer defined by how, how well, or how easily they store information, but rather by how quickly they can convert data plus business logic into automated actions:

- System-of-Record vendors (SAP, Oracle, legacy ERP) face existential pressure to expose transaction semantics, while innovating, or risk being wrapped by metric trees they don’t control.

- Data-native players must decide whether to remain Switzerland – i.e. feeding every SoI – or step up the stack and compete with application vendors on outcome delivery.

- Hyperscalers wield distribution advantage, but their challenge is unifying overlapping catalogs, engines, and governance silos fast enough to present a single deterministic map to agents.

- SaaS innovators like Salesforce and ServiceNow are first to price the control loop itself. If outcome-based billing gains traction, we could witness a TAM expansion from ~$400 B enterprise software to a multi-trillion-dollar services-as-software market.

The journey to a full 4-D business model will take years and multiple architectural rewrites. Yet in our model the direction is clear: own the metric tree, orchestrate the agents, and control the enterprise operating rhythm. The next test arrives this coming week at Snowflake Summit, followed by Databricks Data + AI Summit, where each vendor will undoubtedly show – not just tell – how its green-layer ambitions translate into tangible roadmap milestones.

We’ll be on the ground with theCUBE at both events to separate aspiration from execution and will report back both live and after the dust settles.