This theCUBE Research Whitepaper examines how VMware Private AI Foundation with NVIDIA can unlock Gen AI and unleash productivity. Today, organizations require a new type of Private AI tuned and fine-tuned on domain-specific data. This requires organizations to take steps to protect this information, keep it private and secure, and apply governance. At the same time, organizations also look to have a performant application built on models and software they trust. Organizations must choose the correct use cases and supporting infrastructure to meet ROI targets for the project.

Introduction

Generative AI (Gen AI) has generated a lot of hype, and for good reasons. However, as organizations look to deploy Gen AI, they face a number of challenges. These range from privacy, choice, security, control, and compliance concerns to choosing the right initial use case, one that will deliver a true ROI. In getting started on the AI journey, organizations must also decide whether to build their Gen AI applications themselves or adopt a Gen AI platform or solution, and where those applications should run. Many factors go into that decision. Not the least of them are the use case the organization is trying to solve and where the data needed to solve it lives. Although a great amount of data lives in the public cloud, many organizations want to use more sensitive data held in on-premises or cloud-adjacent private clouds. Most organizations will not build foundation large language models (LLMs) to service very general needs and will instead pick use cases with tangible ROI for their organization. This leads us to theCUBE Research Power Law Distribution of Gen AI.

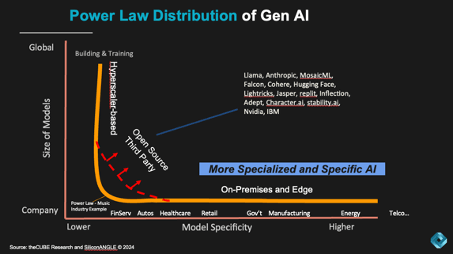

Figure 1 – theCUBE Research Power Law Distribution of Gen AI 2023 – 2024

Let’s start by reviewing the idea of a power law. A power law distribution has the property that large values are rare, while small values are common. For the Power Law of Gen AI, we are more interested in using the shape of the curve to describe the adoption trends and patterns in Gen AI and what they might mean for organizations and industries.

Our analyst team has developed the power law of Gen AI, which describes the long tail of Gen AI adoption. The Y-axis represents the size of the LLM, and the X-axis shows the industry specificity of the models. We see a similar pattern to the historic music industry, where only a few labels dominated the scene. In Gen AI, the size of the model is inversely proportional to the specificity of the model. Hence, the large public cloud LLMs will be few in number, but they will train giant LLMs. However, most applied models will be much smaller, creating a long tail.
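To make the shape of the curve concrete, the following is a minimal Python sketch of a long tail. It assumes, purely for illustration, that model size falls off as a power of a model's rank; the function name `model_sizes`, the exponent `alpha`, the largest size, and the thresholds are hypothetical values and are not taken from theCUBE Research data.

```python
def model_sizes(num_models, largest_size_b=1000.0, alpha=1.2):
    """Return an illustrative size (billions of parameters) for each model rank.

    Assumption: size is proportional to rank ** -alpha, a power law in rank.
    The default values are made up for illustration only.
    """
    return [largest_size_b * rank ** -alpha for rank in range(1, num_models + 1)]


sizes = model_sizes(1000)

# Head of the curve: the few very large, general-purpose models.
giant = [s for s in sizes if s >= 100.0]

# Long tail: the many small, more specific models.
small = [s for s in sizes if s < 10.0]

print(f"giant models: {len(giant)}, small models: {len(small)}")
```

Under these assumed parameters, only a handful of models land in the head of the curve while the vast majority fall into the tail, which is the qualitative pattern the power law of Gen AI describes.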

This long tail will occur within industries and result in on-premises deployments that stretch to the edge and the far edge. From a volume perspective, private Gen AI and AI inference at the edge are expected to be the most dominant use cases. The red lines in Figure 1 represent the numerous third-party and open-source models that are emerging and will have a market impact as customers make choices about model size, model accuracy, and cost.

To pursue Gen AI effectively, organizations need to ensure diversity and use existing tools to get up and running quickly. This includes having processes in place for Day 0 operations as well as Day 2 operations for ongoing management and maintenance of the solution.

Use Case Consideration for Gen AI

Broadcom and NVIDIA aim to unlock the power of Gen AI and unleash productivity with a joint Gen AI platform: VMware Private AI Foundation with NVIDIA. This integrated Gen AI platform enables enterprises to run RAG workflows, fine-tune and customize LLMs, and run inference workloads in their data centers, addressing privacy, choice, cost, performance, and compliance concerns. The platform is designed to cater to several Gen AI use cases, including code generation, customer support, IT operations automation, advanced information retrieval, and text summarization for private content creation. One of the key factors for organizations to consider when selecting a use case for Gen AI is the ROI they can clearly expect from their investment. As funding for Gen AI is often diverted from other projects, achieving a successful outcome becomes crucial.

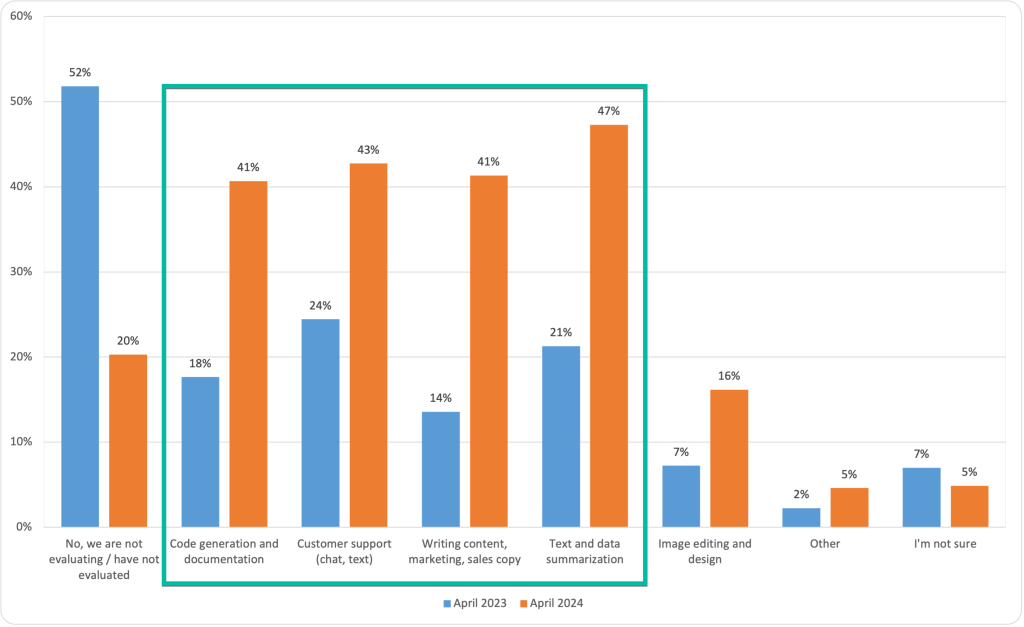

This central decision point is highlighted in data from Enterprise Technology Research (ETR), a partner research firm of theCUBE Research that surveys over 1,700 organizations quarterly. The use case evaluation data in Figure 2 shows that many use cases are being evaluated before a move to production. The number of enterprises evaluating the different use cases increased rapidly between April 2023 and April 2024. It is evident that organizations are adopting a comprehensive approach when it comes to Gen AI.

Figure 2 – Use Cases Evaluated – Source: ETR.ai Generative AI Drill Down Report (N = 1884) – April 2024

Only 20 percent of the enterprises indicated that they have not yet evaluated or are not evaluating a Gen AI use case.

Note: The percentages in the charts will not add up to 100 percent, as organizations could be evaluating, using, or blocked on more than one Gen AI use case or variable.

The top four use cases evaluated in the ETR data are aligned with the direction and templates being laid out in VMware Private AI Foundation with NVIDIA.

Contact Center (Customer Support above): Customer support augmentation and assistance are expected to be among the most successful use cases. Organizations are either fully automating certain functions, such as IT operations remediation or self-service help desks, or keeping a person in the loop for customer support augmentation. In the latter case, the customer support agent is the user of Gen AI and relies on it to provide faster and more precise support.

Code Generation: Code generation and documentation are important use cases for private AI implementation because source code is regarded as intellectual property and must be kept secure. There have been instances where software was loaded into public cloud-based LLMs, resulting in IP leakage. Using LLMs for code generation directly impacts the productivity of an organization’s developers. One impact can be faster innovation through LLM-provided code that supplies base infrastructure code, such as environment parameters for deployment scenarios. Another direct and meaningful impact is giving time back to developers by helping to audit code quality, which sometimes reduces the number of lines of code, or by helping to interpret code written by a developer who has long since left the organization. When implemented correctly, using Gen AI for these code development use cases and others can have positive implications for governance and security. Therefore, it is crucial to protect and keep private the Gen AI systems and underlying data within an organization.

Content Creation (Writing Content and Text Summarization above): The final set of significant use cases for Gen AI involves content creation, text summarization, and data summarization. These use cases can greatly benefit from secure data that meets an organization’s compliance and governance regulations. Although the resulting content may be published publicly, the source data and documents used to generate it may contain additional information or intellectual property (IP). Therefore, the ability to extract and summarize source documents that contain IP is essential to producing the most compelling content. This is particularly important when considering Gen AI’s potential for data summarization, which is often used in business intelligence (BI) to generate reports or dashboards.

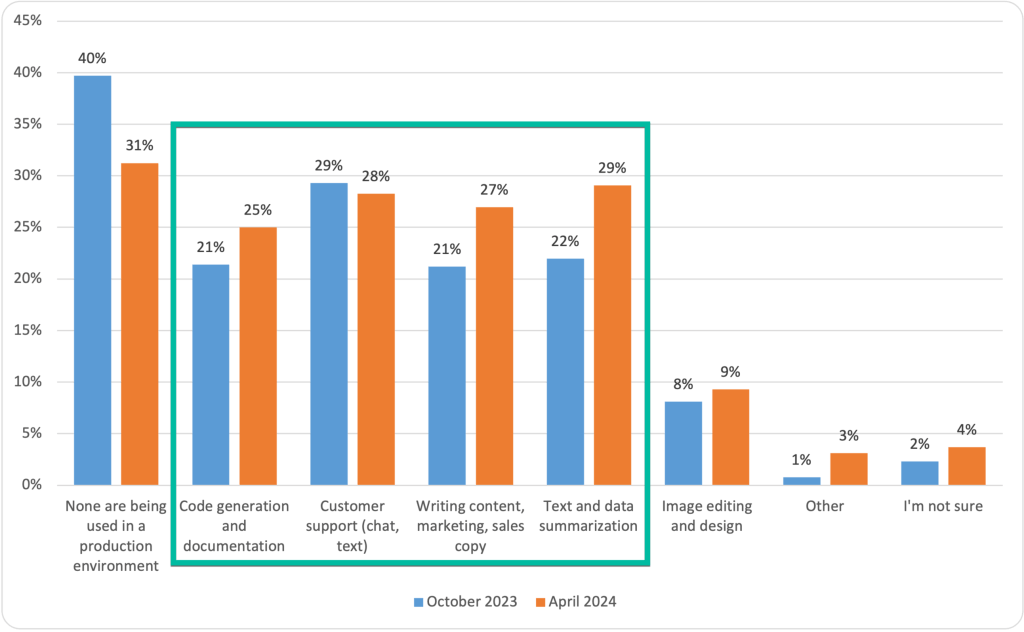

Figure 3 – Use Cases in Production – Source: ETR.ai Generative AI Drill Down Report (N = 1380) – April 2024

The production survey responses in the ETR data presented in Figure 3 show that customer support augmentation is the most commonly implemented use case. Following closely behind is the code generation and documentation use case, which is rapidly gaining popularity. These two use cases offer significant benefits to organizations through the Private AI approach and have clear returns on investment. Prioritizing use cases that enhance productivity and quality can provide good results, observable as increased customer satisfaction, reduced churn, fewer customer service calls due to bugs, and more.

Five Key Considerations for Gen AI

- Most organizations will not build foundation large language models (LLMs) to service very general needs.

- In Gen AI, the size of the model is inversely proportional to the specificity of the model.

- Organizations will use and fine-tune open-source models with their own data, keeping that intellectual property safe.

- Top use cases for building with Gen AI are code generation, contact center support, and content creation.

- Figuring out governance and legal compliance is the biggest challenge.

Core Challenges

Organizations are forging ahead on Gen AI adoption but are also concerned about the ensuing challenges. These challenges can result in high costs, not only in budget but also in employee morale and talent retention. Rushing into production without thoroughly understanding the architecture and platform engineering requirements can lead to disappointing outcomes. A major challenge has been the massive use of public cloud-based Gen AI LLMs via API. Organizations that rely on them often cannot be assured of how their data is governed once it is used for training or, worse, may suffer IP leakage via the API. This can also lead to other security issues, such as prompt injection, that could otherwise be minimized in a smaller private model that is trained or fine-tuned on an organization’s data and has the proper governance and guardrails.

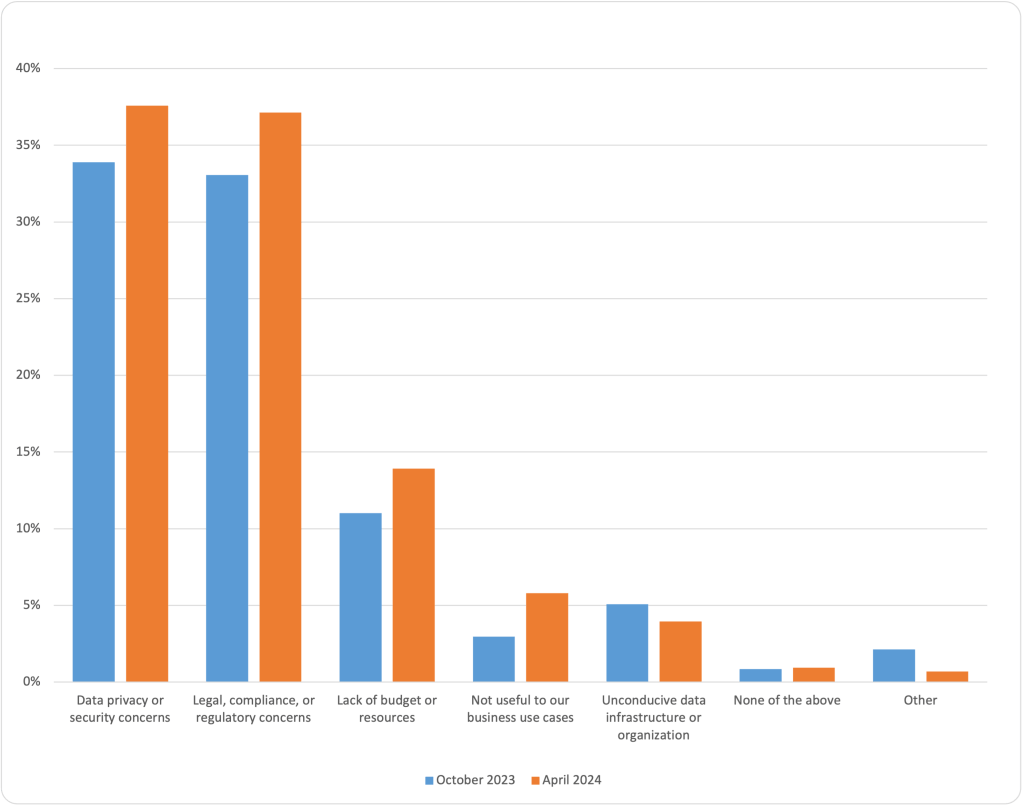

Figure 4 – Barriers to Production – Source: ETR.ai Generative AI Drill Down Report (N = 431) – April 2024

According to the ETR data shown in Figure 4, organizations are facing significant challenges related to data privacy and security as well as legal, compliance, and regulatory concerns. While much of an organization’s data is stored in public clouds, the data used to fine-tune Gen AI models is typically housed on-premises and contains valuable intellectual property that cannot be moved to a public cloud due to legal restrictions. In such cases, it is more practical to bring AI to the data rather than the other way around.

What is VMware Private AI Foundation with NVIDIA?

Broadcom and NVIDIA have co-engineered a path-breaking Gen AI platform: VMware Private AI Foundation with NVIDIA. This platform aims to unlock Gen AI and unleash productivity for all enterprises.

VMware Private AI Foundation with NVIDIA is built and run on the industry-leading private cloud platform, VMware Cloud Foundation (VCF). It comprises the newly announced NVIDIA NIM, enterprise AI models from NVIDIA and others in the community (such as Hugging Face), and NVIDIA AI tools and frameworks, which are available with NVIDIA AI Enterprise licenses. The platform is an add-on SKU on top of VMware Cloud Foundation. Note that NVIDIA AI Enterprise licenses must be purchased separately from NVIDIA.

This platform ensures that only authorized users and applications can access enterprise AI models and data sets. Applications are protected at a network level with micro-segmentation and advanced threat prevention via software-dedicated firewalls for applications and their associated AI models and data sets. NSX provides full-stack networking and security to VMware Cloud Foundation.

VMware Private AI Foundation with NVIDIA has specially architected capabilities that help simplify deployments and optimize costs for enterprise AI models.

VMware Enabled Capabilities

- Private AI Deployment Guide – This capability provides a comprehensive list of steps and documents for deploying Gen AI workloads on VCF, simplifying deployments and reducing costs.

- Vector Databases – Vector databases have become an essential part of Retrieval-Augmented Generation (RAG) workflows. They allow for rapid data querying and real-time updates, which can enhance the outputs of LLMs without the need for costly and time-consuming retraining. Vector databases are now the industry standard for Gen AI and RAG workloads. VMware by Broadcom has enabled vector databases by leveraging pgvector on PostgreSQL. This capability is managed through Data Services Manager and enables quick deployment of vector databases to support RAG AI applications.

- Deep Learning VM Templates – Creating a deep-learning virtual machine (VM) can be a complicated and lengthy task. Manually building a VM can lead to inconsistencies and missed optimization opportunities across various development environments. VMware Private AI Foundation with NVIDIA provides deep learning VMs that come pre-configured with required software frameworks like NVIDIA NGC, libraries, and drivers. This saves users from the complex and time-consuming task of setting up each component individually.

- Catalog Setup Wizard – This capability enables the creation of complex catalog objects, including the selection and deployment of the right VM classes, K8s clusters, vGPUs, and AI/ML software, such as the containers in the NGC catalog. LOB admins usually carry out this task. In many enterprises, Data Scientists and DevOps spend a lot of time assembling the infrastructure they need for AI/ML model development and production. The resulting infrastructure may not be compliant and scalable across teams and projects. Even in enterprises where AI/ML infrastructure deployments are more streamlined, Developers and DevOps may waste a lot of time waiting for LOB Admins to design, curate, and offer the complex AI/ML infrastructure catalog objects.

- GPU Monitoring – GPUs are an essential part of the AI infrastructure but can be cost-prohibitive. Enterprises are looking for ways to enhance efficiencies and regulate GPU spending. With the unveiling of VMware Private AI Foundation with NVIDIA, enterprises can now get visibility of GPU resource allocation across clusters and hosts alongside the existing host memory and capacity monitoring consoles. This capability enables admins to optimize GPU utilization and, hence, streamline performance and cost.
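The role a vector database plays in a RAG workflow, as described in the capabilities above, can be sketched in a few lines of Python. This is a minimal, in-memory illustration under stated assumptions, not the platform's implementation: the document names, the three-dimensional embeddings, and the helpers `cosine_distance`, `retrieve`, and `build_prompt` are all hypothetical. A real deployment would store embeddings produced by an embedding model in PostgreSQL with pgvector, where ordering rows by the `<=>` (cosine distance) operator plays the role of the sort below.

```python
import math


def cosine_distance(a, b):
    """Cosine distance between two vectors (0 means same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return 1.0 - dot / (norm_a * norm_b)


# Hypothetical private documents with made-up toy embeddings.
DOCS = {
    "vpn-reset-runbook": [0.9, 0.1, 0.0],
    "expense-policy": [0.0, 0.2, 0.9],
    "gpu-cluster-guide": [0.7, 0.6, 0.1],
}


def retrieve(query_embedding, k=2):
    """Return the names of the k documents closest to the query embedding."""
    ranked = sorted(DOCS, key=lambda name: cosine_distance(DOCS[name], query_embedding))
    return ranked[:k]


def build_prompt(question, query_embedding):
    """Augment the user's question with the retrieved document names."""
    context = ", ".join(retrieve(query_embedding))
    return f"Answer using only these documents: {context}\nQuestion: {question}"


print(build_prompt("How do I reset my VPN access?", [1.0, 0.2, 0.0]))
```

Adding a new entry to `DOCS` makes it retrievable on the next query, which illustrates why a RAG workflow can reflect updated data without retraining the LLM.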

NVIDIA AI Enterprise Capabilities

The solution includes NVIDIA’s AI Enterprise software, which helps enterprises in building production-ready AI solutions. NVIDIA AI Enterprise is a cloud-native software platform that simplifies the development and deployment of AI applications, including Gen AI, by speeding up data science pipelines. Enterprises that depend on AI for their business operations rely on NVIDIA AI Enterprise for its security, support, and stability to ensure a seamless transition from pilot to production.

- NVIDIA NIM – NIM provides easy-to-use microservices that speed up the deployment of Gen AI across enterprises while letting them retain full ownership and control of their intellectual property. This versatile microservice supports NVIDIA foundation models and provides pre-built containers powered by NVIDIA inference software, including NVIDIA Triton Inference Server, NVIDIA TensorRT, TensorRT-LLM, and PyTorch. NVIDIA NIM is engineered to facilitate seamless AI inferencing at scale, helping developers deploy AI in production with agility and assurance.

- NVIDIA NeMo Retriever – NVIDIA NeMo Retriever, part of the NVIDIA NeMo platform, is a collection of NVIDIA CUDA-X Gen AI microservices enabling organizations to seamlessly connect custom models to diverse business data and deliver highly accurate responses. NeMo Retriever provides world-class information retrieval with the lowest latency, highest throughput, and maximum data privacy, enabling organizations to make better use of their data and generate business insights in real time. NeMo Retriever enhances Gen AI applications with improved RAG capabilities, which can be connected to business data wherever it resides.

- NVIDIA RAG LLM Operator – The NVIDIA RAG LLM Operator makes it easy to deploy RAG applications into production. The operator streamlines the deployment of RAG pipelines developed using NVIDIA AI workflow examples without rewriting code.

- NVIDIA GPU Operator – The NVIDIA GPU Operator automates the lifecycle management of the software required to use GPUs with Kubernetes. It enables advanced functionality, including better GPU performance, utilization, and telemetry. GPU Operator allows organizations to focus on building applications rather than managing Kubernetes.

- Choice of LLMs – An important aspect of the framework is the choice of LLMs. Organizations can use NVIDIA proprietary LLMs like Nemotron-3B, NVIDIA fine-tuned models like NV-Llama2-70B, Meta’s Llama2, or open-source LLMs from Hugging Face.

The turn-key VMware Private AI Foundation with NVIDIA is delivered by major server OEMs such as Dell, Hewlett Packard Enterprise (HPE), and Lenovo. It provides a rapid path to Gen AI deployment, expertise in platform engineering simplification, and, importantly, supportability of the platform.

Benefits of utilizing Private AI

The promise of Private AI based on a foundation of VMware and NVIDIA is broken down into three core capabilities. The vision of VMware Private AI Foundation with NVIDIA can be summed up in the following goals.

One major goal is to enable the deployment of enterprise AI Models in a Private, Secure, and Compliant manner. The partnership between Broadcom and NVIDIA enables an architectural approach for AI services that allows enterprises to build and deploy private and secure AI models with integrated security capabilities in VCF and its components. This ensures privacy and control of corporate data, as well as compliance with various industry-specific regulations and global standards. With increasing data privacy and security scrutiny, compliance is critical for enterprises in today’s data-driven landscape. The private on-premises deployments enabled by VMware Private AI Foundation with NVIDIA provide enterprises with the controls to address these regulatory challenges easily without requiring a major re-architecture of their existing environment.

VMware by Broadcom and NVIDIA have collaborated to engineer software and hardware capabilities that enable maximum performance for enterprise Gen AI models regardless of the LLM. These integrated capabilities are built into the VCF platform and include features such as GPU monitoring, live migration, load balancing, Instant Cloning (which allows you to deploy multi-node clusters with pre-loaded models within seconds), virtualization and pooling of GPUs, and scaling of GPU input/output with NVIDIA NVLink/NVIDIA NVSwitch.

The latest benchmark study by VMware compared AI workloads on the VMware + NVIDIA AI-Ready Enterprise Platform against bare metal. The results show that performance is similar to, and up to 5% better than, bare metal. By putting AI workloads on virtualized solutions, you can preserve performance and enjoy the benefits of virtualization, such as ease of management and enterprise-grade security.

A key differentiator in the VMware Private AI Foundation approach is that organizations have the flexibility to run a range of LLMs in their environment, including NVIDIA proprietary LLMs, third-party LLMs like Llama2, or open-source LLMs from Hugging Face. This allows them to achieve the best fit for their application and use case.

The VMware Private AI Foundation with NVIDIA is a robust platform that allows enterprises to simplify deployment and optimize costs for enterprise Gen AI models. By leveraging the extensive expertise of VMware and NVIDIA, capabilities such as the vector database for enabling RAG workflows, deep learning VMs, and quick start automation wizard are enabled. With this platform, enterprises can rest assured that they will have a simplified deployment experience and unified management tools and processes, enabling significant cost reductions.

This approach enables the virtualization and sharing of infrastructure resources such as GPUs, CPUs, memory, and networks, leading to significant cost reductions. With the VMware Private AI Foundation, enterprises can achieve an optimal and cost-effective solution for enterprise Gen AI models.

Our Perspective

Organizations should use the Power Law Distribution of Gen AI to guide them in considering use cases for implementing Gen AI. It’s important to note that not all instances and use cases are the same. We believe that organizations will have many more on-premises and edge-deployed Gen AI applications tied to applications with specific outcomes and a clear ROI. Therefore, Private AI is becoming increasingly important as fine-tuning Gen AI applications on an organization’s intellectual property data will require them to build their own private and secure AI models rather than relying on public cloud-based LLMs.

Building a Gen AI model is a significant undertaking for even the most sophisticated organizations, as the skill sets required to put all the pieces together are not readily available in most organizations. Furthermore, organizations must take steps to protect their information, keeping it private and secure, to comply with potential future regulations.

Lastly, we caution organizations to ensure that they have a performant Gen AI application built on models and software they trust.

This theCUBE Research Whitepaper was commissioned by VMware by Broadcom and is distributed under license from theCUBE Research.