Make no mistake. We are entering a technology cycle that is completely new. Massively parallel computing and the Gen AI awakening are creating an entirely different industry focus that has altered customer spending patterns. Moreover, this new computing paradigm has changed competitive dynamics almost overnight. Decisions about whether to spend tens of billions or hundreds of billions on CAPEX are being challenged by novel approaches to deploying AI. Geopolitical tensions are higher than at any time in the history of tech. While the pace of change appears to be accelerating, causing consternation and confusion, the reality is that broad technology adoption evolves over long periods of time, creating opportunities, risks, and tectonic shifts in industry structures.

In this special breaking analysis, we’re pleased to introduce a new predictions episode featuring some of the top analysts at theCUBE Research.

Meet the Prognosticators

With us today are six analysts from theCUBE Research.

- Bob Laliberte, who covers networking

- Scott Hebner on AI

- Savannah Peterson, who will be discussing the impact of consumer tech on AI and the enterprise

- Jackie McGuire, our newest cybersecurity analyst

- Christophe Bertrand, who will be discussing his predictions on cyber resiliency

- Paul Nashawaty, who leads our App/Dev practice

How the Gen AI Awakening has Changed Customer Spending Patterns

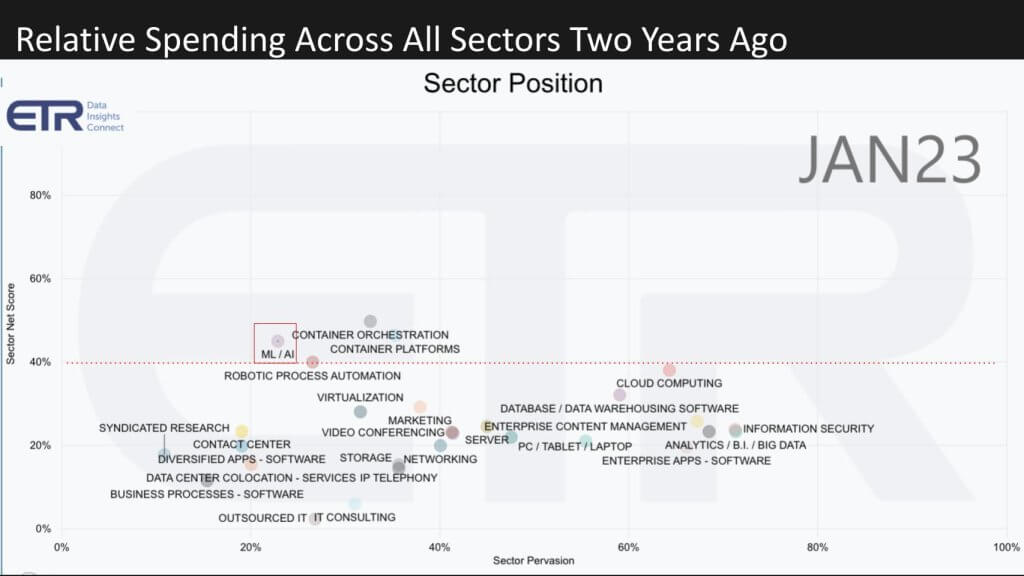

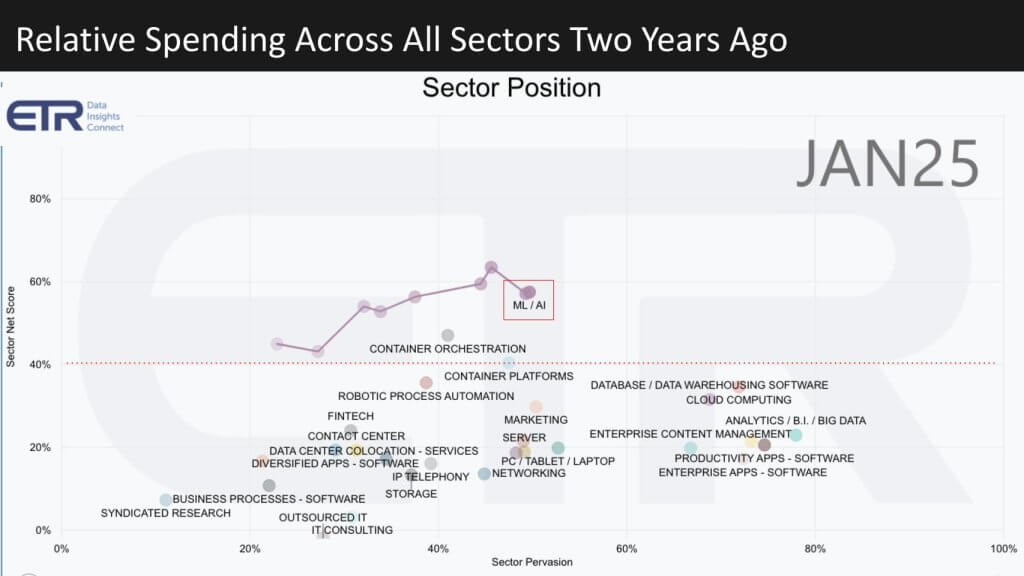

Before we get into it, below we’re showing some survey data from ETR to demonstrate how much the industry has changed. ETR performs quarterly spending intentions surveys of more than 1,800 IT decision makers. And we want to show you just how much of an impact the AI wave has had on spending intentions.

The graphic below shows spending by sector. The vertical axis is Net Score, or spending momentum within a sector, and the horizontal axis is Pervasion, a measure of account penetration for each sector in the data set. Note that this data reflects account penetration, not the amount of revenue spent. Here we go back to January 2023.

Note the red line at 40% on the vertical axis. It indicates highly elevated spending velocity, and you can see that ML/AI (which we’ve boxed), containers, cloud, and RPA were all on or above that red dotted line two years ago.
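To make the two axes concrete, here is a minimal sketch of how they could be computed. It assumes Net Score is the percentage of respondents spending more (new adoptions plus increases) minus the percentage spending less (decreases plus replacements), and that Pervasion is a sector's respondents divided by all survey respondents. This article does not define either formula, so treat both as assumptions; the class, function names, and response counts are invented for illustration and are not ETR data.

```python
from dataclasses import dataclass


@dataclass
class SectorResponses:
    """Hypothetical survey counts for one sector (illustrative, not ETR data)."""
    adopting: int    # new adoptions
    increasing: int  # spending more
    flat: int        # spending about the same
    decreasing: int  # spending less
    replacing: int   # retiring the technology


def net_score(r: SectorResponses) -> float:
    """Assumed formula: percent spending more minus percent spending less."""
    total = r.adopting + r.increasing + r.flat + r.decreasing + r.replacing
    if total == 0:
        raise ValueError("sector has no responses")
    spending_more = r.adopting + r.increasing
    spending_less = r.decreasing + r.replacing
    return 100 * (spending_more - spending_less) / total


def pervasion(sector_respondents: int, survey_respondents: int) -> float:
    """Assumed formula: share of all survey respondents citing the sector."""
    if survey_respondents <= 0:
        raise ValueError("survey must have respondents")
    return 100 * sector_respondents / survey_respondents


# The red line at 40% described in the text.
ELEVATED_THRESHOLD = 40.0

# Made-up counts for a single sector.
ml_ai = SectorResponses(adopting=100, increasing=400, flat=350,
                        decreasing=100, replacing=50)

score = net_score(ml_ai)
print(f"Net Score: {score:.1f}%, elevated: {score >= ELEVATED_THRESHOLD}")
print(f"Pervasion: {pervasion(1000, 1800):.1f}%")
```

Under these assumptions, a sector sits on or above the red line only when respondents spending more outnumber those spending less by at least 40 points, regardless of how much money is involved.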

Now let’s take a look at how that’s changed over the last 24 months.

It won’t shock you, but look at both the trajectory of ML/AI over that time period and what happens to the other sectors. ML/AI shot to the top. Other sectors are somewhat compressed. This data underscores the transformation of the tech industry and specifically its spending priorities. ETR data tells us that roughly 44% of customers have been stealing from other budgets to fund their Gen AI initiatives, and that the ROI received is, let’s say, tepid.

Predictions at a Glance – DeepSeek Impact, Networking, LLM Momentum, Consumer Tech’s Impact, Cyber Threats, Data Protection, Developer Trends

Let’s get to the core of our episode today and turn our attention to the 2025 predictions. Below is a quick glance at all our predictions.

We’ll lead things off with some thoughts on DeepSeek. Then Bob Laliberte will follow with his predictions on networking. Scott will talk about the future of LLMs, Savannah will share her predictions about the impact of consumer tech on the broader industry, and Jackie will get to the heart of the security risks we’re facing and highlight some of the issues posed by recent policy changes from the Trump administration. Christophe will follow with some predictions on data protection and AI, and Paul will bring us home with a prediction about coding and developer impacts.

#1 DeepSeek is a Net Positive for the Tech Industry

[Watch a clip of the DeepSeek Prediction from Dave Vellante and Savannah Peterson].

Let’s start things off with the recent impact of DeepSeek.

The DeepSeek innovations, to the extent the information provided is accurate (and we think it largely is), will only serve to expand the market for AI. Value and volume are the two most important metrics here. In other words, the denominator of doing AI (i.e., cost) was just lowered, so value goes up. This will drive further adoption and will result in volume increases. Winners include NVIDIA, Broadcom, AMD, infrastructure players (e.g., Dell and HPE), hyperscalers (because they get more return for their CAPEX spend), and, perhaps the biggest beneficiaries, software companies. We think the DeepSeek trend is neutral for energy because they can flex capacity up or down as needed. And we see this as negative for closed source LLMs, especially Anthropic. IBM Granite gets a small benefit from DeepSeek in our view, and OpenAI, while potentially negatively impacted, is still a wait and see (TBD) in our view. OpenAI is still the leader in AI innovation and its volume is massive. As such, it may benefit from the heightened competition as a forcing function.
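The “denominator” argument can be illustrated with a toy calculation. This is only a sketch: it assumes value is modeled as benefit divided by cost, which the text implies but does not formally define, and the function name and numbers are made up.

```python
def value_ratio(benefit: float, cost: float) -> float:
    """Assumed model: value is the benefit delivered per unit of cost."""
    if cost <= 0:
        raise ValueError("cost must be positive")
    return benefit / cost


# Same hypothetical benefit, before and after the cost of doing AI falls.
before = value_ratio(benefit=100.0, cost=50.0)
after = value_ratio(benefit=100.0, cost=10.0)

print(f"value before: {before:.1f}, value after: {after:.1f}")
```

Holding the benefit constant, lowering the cost raises the ratio, which is the mechanism the prediction relies on to drive adoption and, in turn, volume.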

How will we measure the accuracy of this prediction in 2026? Watch NVIDIA – the prediction is it will continue to thrive. Edge computing revenue takes off. AI projects get less expensive. Enterprise ROI becomes more attainable. Hyperscalers get more bang for the buck from their CAPEX spend.

The following additional analysis from Savannah Peterson is relevant.

Competition in the AI market is intensifying as new players enter the fray with specialized inference solutions and innovative hardware designs. While much of the early narrative focused on NVIDIA’s dominance in training large language models (LLMs), it’s clear that real-time, edge-based inference will be critical to making AI “real” for businesses and consumers alike. DeepSeek’s emergence—despite concerns around privacy, energy consumption, and total cost—highlights that multiple companies and silicon architectures will compete to deliver efficient, scalable inference for generative AI.

- Inference at the Forefront: After years of attention on massive training workloads, many in the industry are recognizing that the practical value of AI depends on speedy, low-latency inference at or near the data source.

- Multiple Silicon Paths: While GPUs have been the standard for model training, new language processing units (LPUs) and tensor-focused chips are expanding hardware options. This shift will catalyze innovation as organizations seek performance gains, cost efficiencies, and edge deployments.

- Evolving ROI Metrics: Vendors tout surprisingly low training costs, but closer inspection often reveals substantial hidden investments in hardware and infrastructure. Real-world ROI remains elusive for many organizations—particularly for generative AI—due to energy consumption, privacy concerns, and uneven end-user adoption.

- Beyond Single-Brand Solutions: As more competitors challenge incumbent providers, conversations around AI are becoming less about brand loyalty and more about specific use cases, vertical solutions, and tangible business outcomes.

Bottom Line:

We believe the spotlight on inference marks an important inflection point in AI adoption, pushing vendors and enterprises to explore diverse hardware and software stacks. While NVIDIA remains a key player, data suggests that the market will broaden, creating new opportunities for emerging chip designs and AI platforms. Ultimately, we expect customers to benefit from increased competition, with the focus shifting from raw model training power to holistic solutions that balance cost, performance, and responsible data usage.

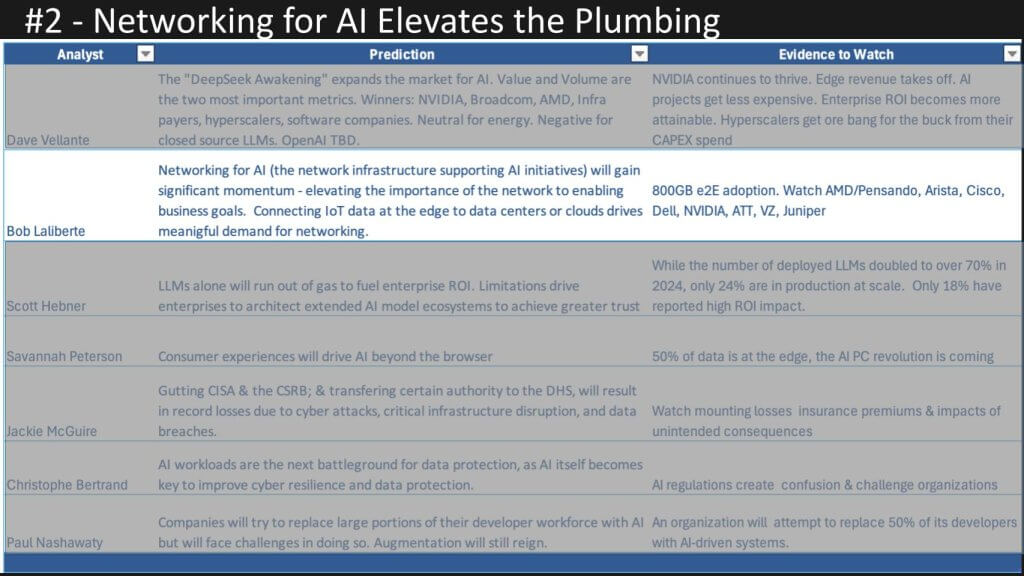

#2 Networking for AI Elevates the Importance of Ultra Low Latency Connectivity

[Watch a Clip of the Networking Prediction from Bob Laliberte and Jackie McGuire].

Bob Laliberte’s prediction is shown below. Networking for AI gets a big boost in 2025.

The following analysis from Bob Laliberte summarizes the prediction with additional analysis contributed by Jackie McGuire.

We predict that networking will evolve from being viewed as basic “plumbing” to a strategic enabler of AI-driven initiatives in 2025. As organizations build AI data centers, move large volumes of data across WANs, and deploy edge computing solutions, networking vendors and telecom providers will play a more critical—and more visible—role than ever before.

- New AI Data Center Architectures:

  - Ethernet vs. InfiniBand: We expect Ethernet to gain ground in AI data centers due to its closing performance gap, broader skills availability, and greater familiarity among enterprise teams, particularly those not already running HPC environments.

  - Diverse Vendor Opportunities: Arista, NVIDIA, Cisco, Juniper, and Dell (among others) are reshaping data center networking to meet high-performance GPU cluster demands.

- WAN Reimagined for AI:

  - Accelerated Data Movement: Large enterprises will need ultra-reliable, high-bandwidth connections to transport massive training and inference datasets.

  - Telcos & Routing Providers: Carriers such as AT&T, Verizon, and T-Mobile, alongside router vendors (e.g., Juniper, Cisco), are poised to offer specialized “AI Connect” services and advanced private WAN solutions.

- Edge & Access Convergence:

  - Wi-Fi & Private 5G: As data collection at the edge expands (e.g., AI-driven retail, industrial automation), organizations want integrated solutions that unify Wi-Fi and 5G in a single management plane.

  - Emerging Innovators: Smaller players like Meter, Ericsson, HPE Athonet, Celona, Federated Wireless, and Highway9 are leading the charge on converged access points, driving simplicity and flexibility in edge deployments.

- Skills & Security Considerations:

  - Hardware Renaissance: With data throughput requirements skyrocketing, businesses must invest in hardware expertise, including skilled trades for cabling, data center builds, and advanced connectivity.

  - Air-Gapped & Closed Networks: Heightened security concerns, particularly with sensitive AI workloads, will prompt some organizations to adopt more isolated network designs.

Bottom Line:

We believe that AI’s rise will elevate networking to a mission-critical function, reshaping everything from the data center core to the edge. Ethernet is poised to gain traction in AI environments, and WAN solutions will become more specialized to handle the surge in data traffic. Meanwhile, the convergence of Wi-Fi and private 5G will enable more flexible, scalable edge deployments. Ultimately, success in AI will hinge on robust, high-performing networks that can seamlessly connect and protect all stages of the data lifecycle.

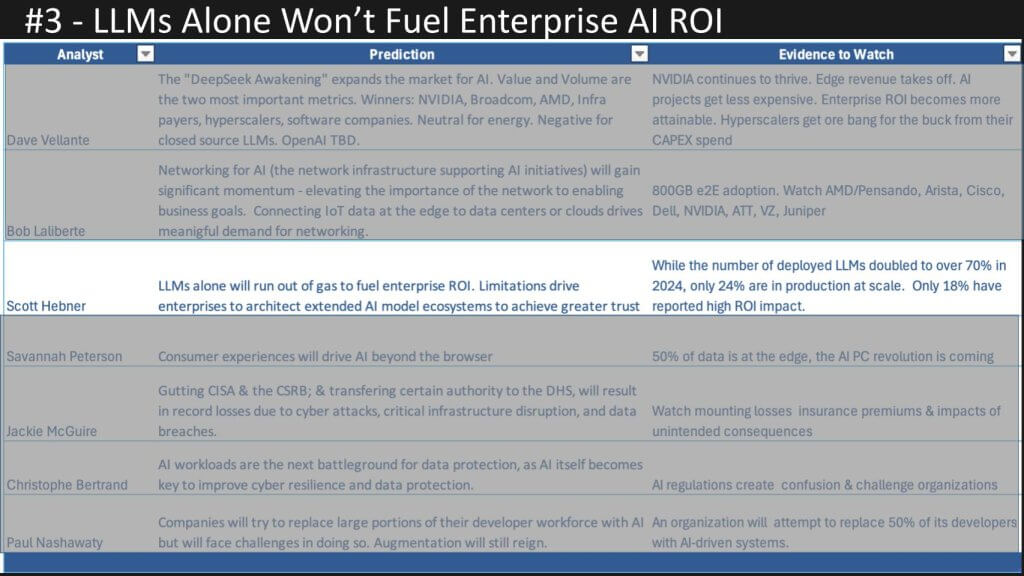

#3 LLMs Alone Won’t Drive Enterprise ROI in 2025…They Need Help

[Watch a Clip of the AI and LLM Prediction from Scott Hebner and Savannah Peterson].

Scott Hebner is up next with a prediction around LLMs. They alone won’t get the job done in 2025 – they need help.

The following provides context from Scott’s prediction with additional analysis from Savannah Peterson.

We predict that large language models (LLMs) alone will not be sufficient to generate meaningful enterprise ROI in 2025. Instead, organizations will incorporate a mix of AI techniques—beyond basic language models—to address critical issues such as accuracy, explainability, and trust. Early experimentation with LLMs has revealed limitations in correlation-based models, driving enterprises to explore causal and symbolic methods to achieve higher-value outcomes.

- LLM Adoption Gaps: While 70% of enterprises report using LLMs as part of their AI strategies, only 24% have successfully deployed them at scale. Many pilot projects remain stalled, and ROI remains elusive—Harvard Business School data suggests only 18% of firms see a high-impact return.

- Correlation vs. Causation: LLMs often confuse correlation with causation, undermining confidence in their predictions. This shortfall becomes acute when making critical decisions that require interpretability and clear reasoning.

- Explainability & Trust: Without transparency into how AI arrives at a conclusion, businesses hesitate to place mission-critical decisions under AI control. This “black box” factor is particularly concerning as organizations consider using AI for autonomous actions.

- Beyond LLMs (An Ecosystem of Models):

  - Predictive AI & Domain-Specific Models: Use of both “traditional” predictive analytics and specialized LLMs is on the rise, but results suggest a need for more tailored, context-aware approaches.

  - Reasoning & Causal AI: Techniques like chain-of-thought prompting, knowledge graphs, neuro-symbolic AI, and causal models are gaining traction, with usage predicted to double in 2025.

  - Swarm Intelligence: Multi-agent reasoning, currently at just 5% adoption, may see fast growth among organizations seeking advanced collaborative decision-making.

Bottom Line:

We believe enterprises will move beyond reliance on a single, large-scale LLM, integrating multiple AI methods to improve explainability, trust, and real-world ROI. As the technology matures, the ability to combine generative, predictive, and causal models within unified architectures will prove essential for unlocking agentic AI’s true business value.

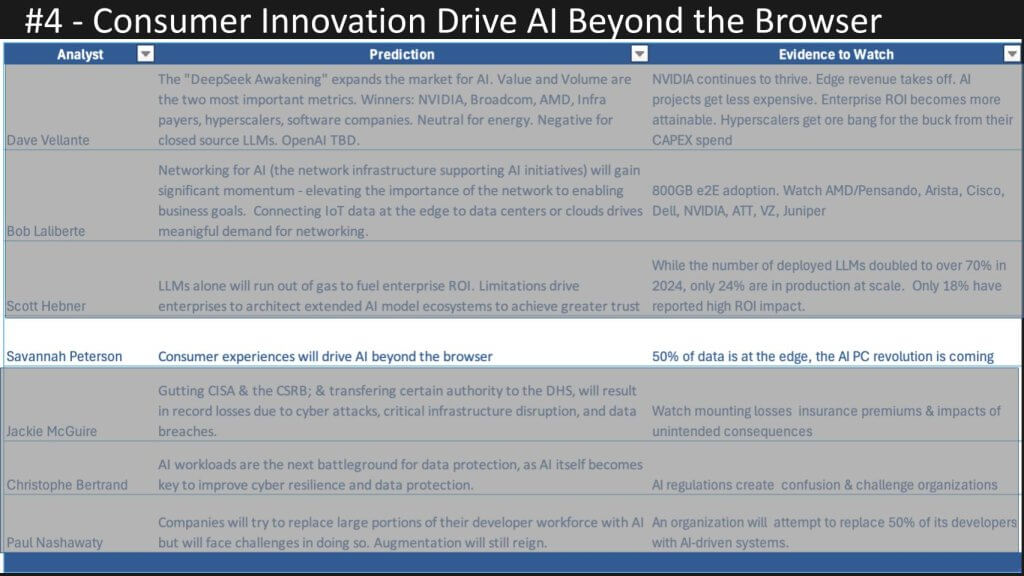

#4 Consumer Innovation Drives User Experiences, Ending Thirty Years of Browser Dominance

[Watch a Clip of the Consumer Tech Prediction from Savannah Peterson and Jackie McGuire].

Next up is Savannah Peterson, who is fresh off CES. Below we show Savannah’s intriguing prediction that we will finally move past the browser as our path to knowledge, replaced by new experiences driven by consumer innovations that will seep into enterprise computing.

The following summarizes Savannah’s prediction with additional analysis from Jackie McGuire.

We anticipate 2025 will mark a pivotal year in bringing AI directly to consumers through hardware—particularly in personal computing devices. While current AI interactions are largely confined to browser-based chatbots, emerging “AI PCs” and mobile devices equipped with on-device inference capabilities will shift the center of gravity from centralized data centers to the edge. This democratization of AI opens new possibilities for real-time decision-making and creation, enhancing privacy and expanding AI’s impact beyond traditional use cases.

- AI-PC Momentum:

  - Hardware Refresh Cycle: Approximately 1.5 billion laptops are due for replacement over the next five years, presenting a massive opportunity for vendors to embed AI-optimized processors and accelerators.

  - Edge Data Processing: With 50% of data now generated or consumed at the edge, on-device AI will enable real-time analytics and faster, more secure interactions without round-trip latency to the cloud.

- Innovation & Human-Centric Design:

  - New Horizons in Creation: As end users gain access to advanced AI locally, unexpected applications, from disease research to extreme-weather forecasting, will emerge.

  - Better User Experiences: The ability to run sophisticated AI models on personal devices empowers developers to redesign interfaces, automate tasks, and personalize interactions in ways not feasible with purely cloud-based solutions.

- Security & Privacy Gains:

  - Localized Data Processing: On-device AI allows sensitive information to remain at the edge, potentially reducing the need to transmit private data over networks.

  - Evolving Advertising & Algorithms: Instead of centralizing massive datasets, advertisers and social platforms could shift to “push” models where data analysis happens locally, mitigating privacy concerns.

Bottom Line:

We believe 2025 will witness a breakthrough in “AI at the edge,” driven by AI-optimized PCs, phones, and other consumer devices. This shift from cloud-dominated AI to local processing promises more immediate, human-centric experiences while opening up new avenues for privacy and innovation. As consumers embrace AI hardware, enterprises and developers will be forced to rethink user experience, data protection, and the very definition of “real-time” insights.

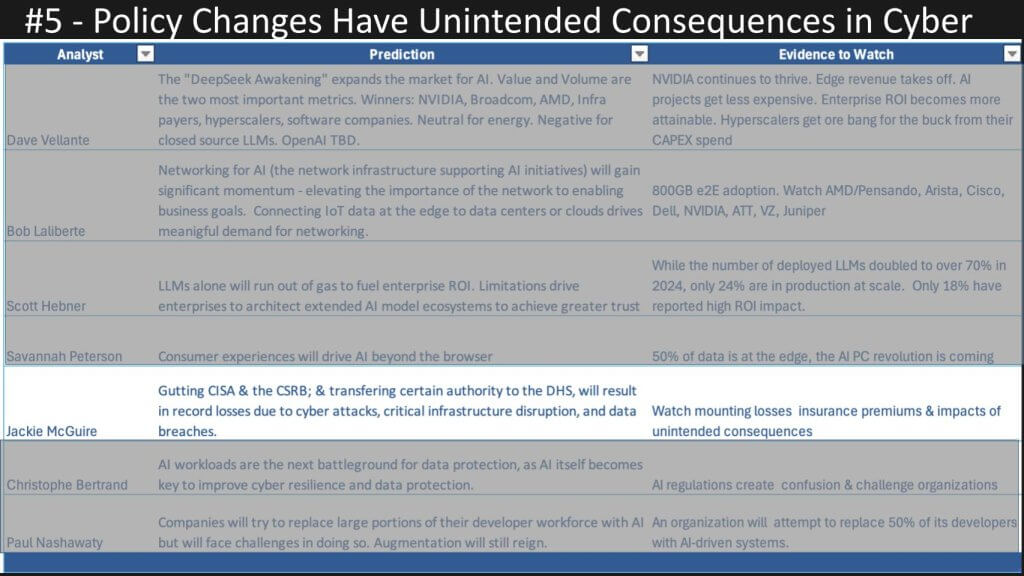

#5 Policy Changes Create Cyber Risk and Will Fuel Record Losses

[Watch a Clip of the Cyber Risk Prediction from Jackie McGuire and Christophe Bertrand].

Jackie McGuire’s prediction zeroes right in on recent Trump administration policy changes and points out some potential unintended consequences of recent moves. The quest for efficiency could spell trouble. Jackie predicts that gutting some agencies and shifting certain authority to others is ill-advised, puts critical infrastructure at risk, and exposes other vulnerabilities.

The following analysis summarizes Jackie’s prediction with additional insight from Christophe Bertrand.

We predict that the U.S. government’s recent moves to restructure or eliminate certain cybersecurity agencies—such as the Cyber Safety Review Board (CSRB)—will create unintended consequences for national security and the private sector, particularly with regard to insurance and financial stability. By weakening public oversight and collaboration mechanisms, these policy shifts exacerbate the systemic risk of a large-scale cyberattack on critical infrastructure and financial institutions, potentially burdening insurers and prompting bailout scenarios similar to the 2008 financial crisis.

- Increased Risk of Catastrophic Attacks:

  - Critical Infrastructure Vulnerabilities: Reduced federal oversight opens the door to more frequent and costly nation-state or ransomware attacks on utilities, healthcare, and transportation.

  - Financial Fallout: With many insurers and reinsurers tied to common market players, a cascading failure could occur if a large-scale attack strains the entire insurance sector.

- Insurance Sector Under Pressure:

  - Rising Attack Costs: While the volume of certain attacks (e.g., ransomware) may have declined, overall costs have surged by over 40%, leading to increased premiums and potential gaps in coverage.

  - Systemic Exposure: Similar to the mortgage-backed securities crisis, systemic cyber risk could require federal intervention to prevent insurer insolvency, raising questions about fiscal conservatism and moral hazard.

- Private Sector Self-Regulation:

  - Collaboration Vacuum: With fewer public advisory committees, the onus shifts to private-sector alliances to share threat intelligence and mitigate risk.

  - Mixed Preparedness: Recent summits highlight how enterprises often lack robust cyber-resilience plans and still rely on government-provided safety nets.

Bottom Line:

We believe dismantling established cybersecurity oversight structures introduces real systemic risks, especially if insurers cannot absorb the shock of major, coordinated attacks. Without robust public–private collaboration, the U.S. could face a scenario where a cyber event cripples both critical infrastructure and the insurance market, forcing emergency government interventions. For 2025, organizations should intensify their own cyber-preparedness strategies and closely monitor federal policy shifts that may affect national and economic security.

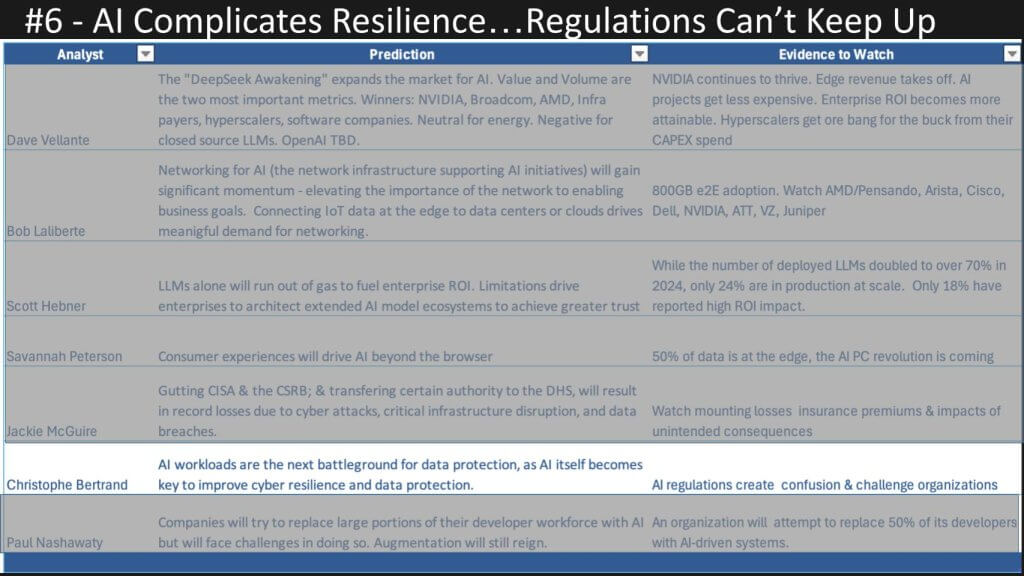

#6 AI Complicates Cyber Resilience and Regulation Creep Exacerbates the Problem

Christophe Bertrand is fresh off theCUBE Research’s Cyber Resiliency Summit held in our Palo Alto offices. Christophe learned a lot from the summit and below we show his prediction that AI will be the next battleground in data protection. Moreover, regulations will create more havoc for enterprises trying to keep up and that in itself creates challenges and risks.

The following summarizes Christophe’s prediction with additional analysis contributed by Paul Nashawaty.

We predict that AI workloads will become a major battleground for data protection in 2025, sparking a new wave of solutions from backup, storage, and cyber-resiliency vendors. As organizations ramp up AI initiatives, they face two critical, interlinked challenges: first, how to secure and recover fast-growing AI infrastructures; and second, how to comply with an expanding patchwork of data sovereignty and privacy regulations.

- AI Workloads Require New Protections:

  - Backup & Recovery Gap: Historically, new infrastructure and applications (e.g., SaaS, containers) went unprotected at first. AI is no exception. Vendors are racing to add AI-specific backup and resilience features, anticipating fast adoption and strict reliability needs.

  - Real-Time & Complex Environments: AI setups often involve distributed clusters, GPU-accelerated servers, and large language model repositories, raising unique complexities for data protection and disaster recovery.

- AI-Driven Automation:

  - Security & Resilience at Scale: The same AI techniques driving operational efficiency will increasingly be applied to proactive threat detection, automated failover, and intelligent remediation.

  - Vendor Proliferation: Dozens of vendors in backup, storage, and security will tout “AI-driven” offerings, making it challenging for customers to separate genuine innovation from marketing hype.

- Mounting Regulatory Pressures:

  - Global Patchwork of Laws: As AI applications spread, businesses must navigate GDPR, CPRA, CCPA, and other regulations that may impose strict rules on how data is stored, moved, and processed.

  - Governance Gaps: Many enterprises still struggle with basic data classification and lineage. AI only amplifies these gaps, increasing risk of non-compliance and potential legal exposure.

  - Value of Proactive Compliance: Organizations that have already integrated governance into their workflows will be better equipped to meet new regulatory demands, accelerating AI-driven initiatives with fewer roadblocks.

Bottom Line:

We believe the surge in AI deployments will force the data protection ecosystem to evolve rapidly, prioritizing comprehensive backup and governance across increasingly complex infrastructure. While AI-driven automation promises new levels of resilience, the overlapping web of regulations will demand proactive compliance strategies. For enterprises, success will hinge on adopting data protection solutions that can scale with AI’s growth—while navigating a regulatory landscape that shows no signs of simplification.

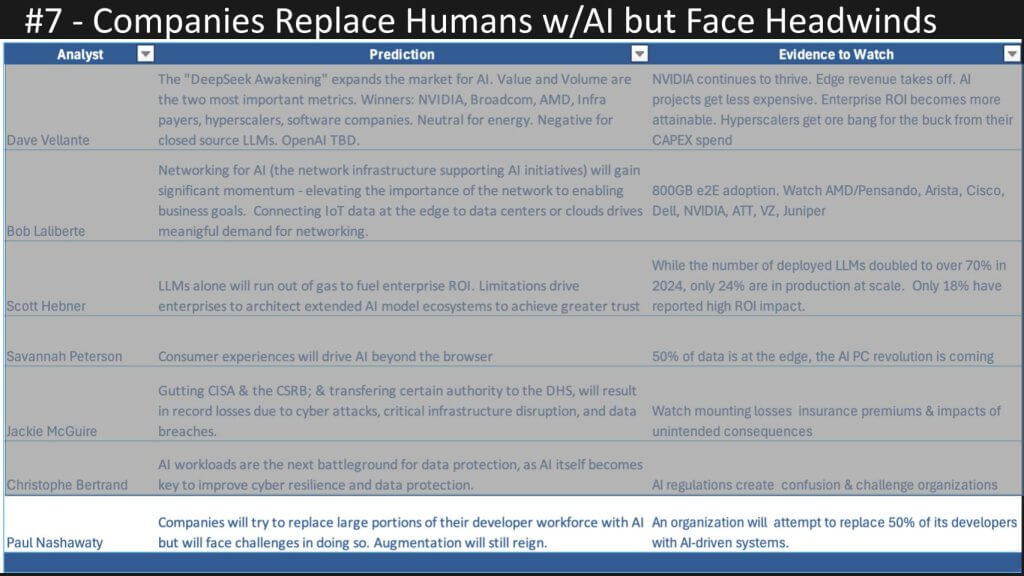

#7 Companies Try to Replace Human Coders with AI but Face Challenges

[Watch a Clip of the App/Dev and Coder Predictions from Paul Nashawaty and Savannah Peterson].

The last prediction comes from Paul Nashawaty. As we show below, Paul is predicting that companies will try to replace portions of their developer workforce with AI, but it might not be so straightforward. He further predicts that at least one organization will try to cut half its developers and replace them with AI systems, but may not succeed.

The following summarizes Paul’s prediction with additional analysis from Savannah Peterson.

We predict that despite growing interest in substituting AI for human developers, attempts to dramatically reduce developer headcount—by as much as half—will fall short in 2025. While AI-driven tooling can streamline repetitive or mundane coding tasks, organizations still need skilled developers for complex problem-solving, innovation, and collaboration. Simultaneously, the development ecosystem is shifting toward integrated platforms that reduce operational overhead and improve developer experience, with low-code/no-code solutions empowering “citizen developers”—all while governance, security, and compliance requirements mount.

- AI Won’t Replace Devs at Scale:

  - Automation & Efficiency: AI-based code generators, testers, and debuggers promise to accelerate development cycles, but they lack the nuanced problem-solving and creative thinking capabilities of human developers.

  - High Failure Risk for Radical Cuts: Some organizations may attempt to shrink dev teams by half, but we predict these efforts will fail to achieve desired results, as AI tools can’t yet handle complex, end-to-end software creation.

- Rise of Integrated Platforms Over Best-of-Breed Tools:

  - Streamlining Developer Workflows: As the pressure to release code more frequently grows, we see 50% of organizations pivoting from siloed “best-of-breed” solutions to unified platforms that reduce complexity and speed up release cycles.

  - Focus on Developer Productivity: Today, developers spend only about a quarter of their time actively coding. Integrated toolchains and AI-based automation free them to focus on higher-value tasks.

- Low-Code/No-Code Expansion & Governance Challenges:

  - Citizen Developers Emerge: A projected 30% growth in low-code/no-code adoption empowers business stakeholders to build routine applications, further accelerating digital initiatives.

  - Compliance & Security Risks: As more non-technical users build software, organizations must ensure robust guardrails around data protection and regulatory requirements, heightening the need for clear governance.

Bottom Line:

We believe that while AI will play a growing role in accelerating and enhancing development processes, it is unlikely to replace large segments of the developer workforce in the near term. Instead, AI-driven tools will free developers to innovate and solve bigger problems, while low-code/no-code solutions expand the pool of participants in software creation. Organizations that consolidate tooling, invest in platform-based approaches, and maintain strict compliance controls will be better positioned to deliver software faster and more securely in 2025.

Thanks to the six analysts from theCUBE Research who crafted these predictions. We’d love to hear your thoughts. As always, reach out any time and share your data and opinions.