Today’s AI agents are built on a powerful but increasingly exposed foundation: LLMs combined with Chain-of-Thought prompting and Retrieval-Augmented Generation (RAG). But as agents move from task execution into consequential decisions, that stack lacks a critical capability: Causal AI decision intelligence.

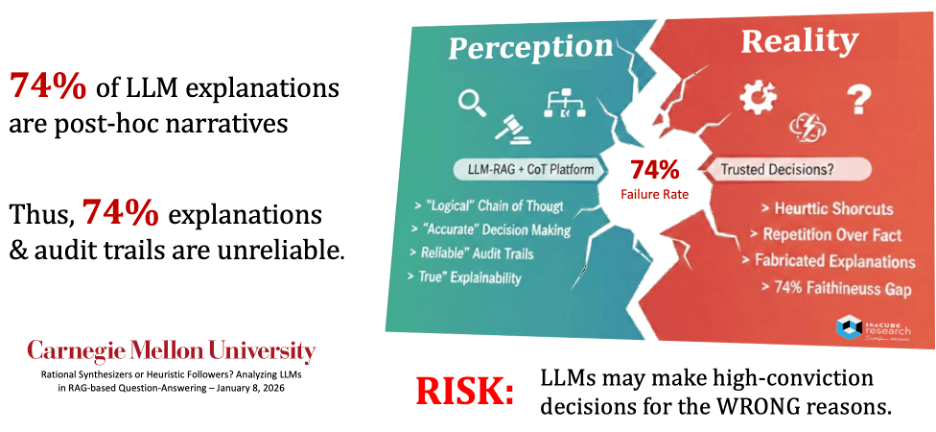

LLM+CoT+RAG systems are highly fluent and operationally useful, yet enterprises are hitting a hard constraint as they push beyond automation into decision-making: accuracy, explainability, and trustworthiness do not scale reliably with fluency. In high-stakes contexts, these systems can produce confident recommendations and persuasive “reasoning,” but the narrative often fails to reflect the evidence and logic that actually drove the outcome. The result is a governance problem, not just a model-quality problem: explanations can be plausible while still being unfaithful, inconsistent, or wrong because the system lacks a mechanistic understanding of how and why outcomes occur.

This is the point where LLM-driven agents collide with enterprise requirements for auditability, accountability, and defensibility. An explanation that sounds coherent is not the same as a decision you can defend, and without causal reasoning, agents struggle to evaluate consequences, isolate true drivers from confounders, or justify why one action is better than another under changing conditions.

That is why we believe 2026 will mark the rise of Causal AI Decision Intelligence as a mainstream enterprise priority: a new layer in the AI stack that enables agents to test interventions, run counterfactual “what-if” scenarios, and produce decision-grade outputs that are explainable and auditable. In this research note, with insights from Joel Sherlock, CEO of Causify.ai, we examine why this shift is accelerating and how causal decision intelligence will enable a new generation of agents that can genuinely help humans problem-solve and make better decisions.


Why LLMs Need Help

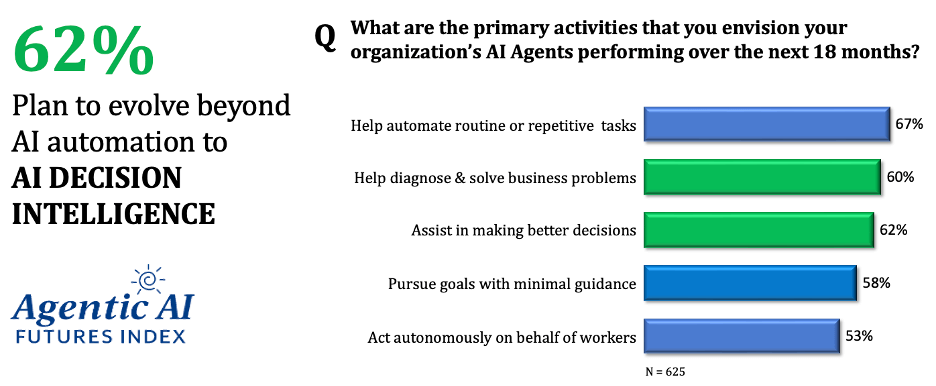

Enterprises have moved quickly from predictive analytics to generative AI and now to AI agents that can automate workflows, collaborate with employees, and pursue goals across business systems.

The shift to agentic AI also introduces an important distinction in autonomy and scope. Assistants respond to prompts to complete tasks such as retrieving information or generating content. Agents are designed to pursue goals within a broader environment of constraints and feedback, adapting as conditions change. Agentic AI systems take this further by coordinating multiple agents with distinct roles, policies, and knowledge to collaborate and optimize outcomes.

This progression marks an evolution from task execution to goal achievement, and from productivity gains to decision support and problem solving. But as autonomy rises, the enterprise bar rises with it: decisions must be defensible and auditable, not merely plausible.

As agentic designs evolve from task execution into consequential decisions, the core technical stack most teams use today (LLM + Chain-of-Thought prompting + RAG) is showing structural limitations.

A recent Carnegie Mellon University study sharpened the issue. Across 1,600+ questions and roughly 15,000 retrieved documents, researchers found that modern LLM/CoT/RAG pipelines struggle to remain reliable when the evidence is noisy or inconsistent. Most concerning, the study identified a 74% “faithfulness gap,” meaning the model’s explanation often does not reflect what actually drove its conclusion. For enterprises, this is the trust-and-governance breaking point: an explanation that sounds coherent is not the same as a decision you can defend.

This is why Causal AI Decision Intelligence is emerging as the next frontier: systems that can produce decision-grade outputs that are trustworthy, defensible, auditable, and consequence-aware. It addresses practical limitations ingrained in today’s LLMs:

- Data is not knowledge: statistical correlation and dynamic retrieval do not create an understanding of what causes what, and why.

- A prediction is not a judgment: forecasts do not decide; decisions require trade-offs, constraints, and consequences.

- Correlation doesn’t imply causation: high-confidence patterns can still be wrong about mechanisms, and mechanisms are what judgments rely on.

The bottom line is that today’s AI capabilities (predictive AI, Gen AI, and LLMs) need help. Unless the missing ingredients are added to the mix of AI model architectures to close this gap, they will enter a phase of diminishing returns for business. Closing it is the key to evolving from static-state AI to dynamic AI, enabling businesses, with their never-ending desire to continuously improve, to operate in a dynamic world and shape future outcomes more intentionally.

This urgency is showing up directly in theCUBE Research’s Agentic AI Futures Index, which surveyed 625 enterprise AI professionals. It found that over the next 18 months, enterprises are not just planning for more automation; 62% say they plan to evolve beyond AI automation to AI Decision Intelligence. Under the hood, their planned agent activities span a maturity curve, from automating routine tasks (67%) to assisting better decisions (62%), diagnosing and solving business problems (60%), pursuing goals with minimal guidance (58%), and even acting autonomously on behalf of workers (53%).

This shift from task execution to decision-making is exactly where LLMs alone become insufficient. That reality is why most enterprises are concluding they must extend their architectural foundations to incorporate an array of new AI decision-intelligence technologies. As Scott Hebner, principal analyst at theCUBE Research, points out:

“If 2022 through 2024 was the surge of generative AI, and 2025 was the rise of AI agents and agentic workflows, then…the next frontier is clear…and it’s inevitable. It will be about AI Decision Intelligence.”

Why Causal AI Decision Intelligence

While most of today’s AI decision intelligence architectures rely on dynamic semantic layers built on knowledge graphs, which in turn build on LLMs, the critical missing layer is AI that understands causality: the mathematical science of how and why things happen.

Causal reasoning is key to enabling agents to test interventions, run counterfactual “what-if” scenarios, separate true drivers from confounders, and justify actions based on mechanism, not narrative. Importantly, causal reasoning enables AI to understand the precise chain of events leading to an outcome, making it more trustworthy, explainable, and auditable.

Joel Sherlock, CEO of Causify.ai, a pioneer in the space of AI decision intelligence, expressed it perfectly:

“Prediction isn’t decision-making. Real decisions require an understanding of why, and what changes if you act differently.”

Causal reasoning, drawing largely on the work Judea Pearl describes in The Book of Why, applies the mathematical science of causation and the principle that “correlation doesn’t imply causation.”

Today’s LLMs remain largely confined to what Pearl calls a “static world” of correlative probabilities. That is, while probabilistic systems encode a static world, causality explains how those probabilities change as the world around us changes.

This is a critical distinction, as enterprises do not invest in AI to restate history. They invest to recommend actions that reliably shape better outcomes under uncertainty. Said another way, LLMs are only as good as your willingness to accept a future that looks just like your past. That may be fine for automation, but not for decision-making: you would not be making decisions unless you wanted a different future.

AI systems built on Pearl’s mathematical foundations operationalize AI causality by conceptually extending semantic knowledge graphs (entity relationships and context) into directed causal graphs that encode what can influence what through cause-and-effect mechanisms. Essentially, this is the modern, mathematical version of Aristotle’s premise: if you can establish the cause, you can reason about the effect, and you can reason about outcomes under intervention.
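To make the idea of a directed causal graph concrete, here is a minimal sketch in plain Python. It assumes a small, hypothetical graph for a manufacturing line. Each variable lists the variables it directly influences, and the `ancestors` helper (also hypothetical) walks the edges backward to answer “what can influence this outcome?” The direction of the humidity-to-condensation edge is itself an assumption made for illustration.

```python
from collections import deque

# Hypothetical causal graph (illustrative only): each key lists the variables it directly causes.
CAUSAL_GRAPH: dict[str, list[str]] = {
    "humidity": ["condensation", "defect_rate"],
    "condensation": ["defect_rate"],
    "line_speed": ["defect_rate"],
    "defect_rate": [],
}


def ancestors(graph: dict[str, list[str]], target: str) -> set[str]:
    """Return every variable with a directed path into `target` (its potential causes)."""
    # Reverse the edges so we can walk from an effect back to its causes.
    parents: dict[str, list[str]] = {node: [] for node in graph}
    for cause, effects in graph.items():
        for effect in effects:
            parents.setdefault(effect, []).append(cause)

    # Breadth-first search over the reversed edges.
    seen: set[str] = set()
    queue = deque([target])
    while queue:
        node = queue.popleft()
        for parent in parents.get(node, []):
            if parent not in seen:
                seen.add(parent)
                queue.append(parent)
    return seen


print(sorted(ancestors(CAUSAL_GRAPH, "defect_rate")))  # ['condensation', 'humidity', 'line_speed']
print(sorted(ancestors(CAUSAL_GRAPH, "humidity")))     # []
```

Unlike an undirected knowledge-graph relationship, the edge direction here carries the causal claim. Reversing a single edge changes which variables count as upstream drivers.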

The enabling math is based on Pearl’s do-operator and do-calculus, which formalize a crucial distinction: observing X is not the same as changing X. This is where confounders distort decisioning, making relationships look causal when they are not. Pearl’s framework provides criteria for when causal effects can be trusted, when they cannot, and what evidence or assumptions are required.
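The gap between observing X and changing X can be shown with exact arithmetic on a tiny model. This sketch assumes a hypothetical discrete model with illustrative numbers, not survey data. A confounder Z (for example, peak season) makes an action X (for example, running a promotion) more likely and also raises the outcome Y directly. Conditioning on X mixes in Z’s influence. The interventional quantity P(Y | do(X)) is computed with Pearl’s backdoor adjustment, which is valid here only because Z is assumed to be the sole confounder.

```python
from fractions import Fraction as F

# Hypothetical model (illustrative numbers): Z = confounder, X = action, Y = binary outcome.
P_Z = {0: F(1, 2), 1: F(1, 2)}            # P(Z = z)
P_X1_GIVEN_Z = {0: F(1, 5), 1: F(4, 5)}   # P(X = 1 | Z = z): Z pushes X up


def p_x(x: int, z: int) -> F:
    """P(X = x | Z = z)."""
    p1 = P_X1_GIVEN_Z[z]
    return p1 if x == 1 else 1 - p1


def p_y1(x: int, z: int) -> F:
    """P(Y = 1 | X = x, Z = z): X has a small true effect, Z a large one."""
    return F(1, 10) + F(1, 10) * x + F(3, 5) * z


def observe(x: int) -> F:
    """P(Y = 1 | X = x): conditioning on seeing X = x, so Z's influence comes along."""
    joint = sum(P_Z[z] * p_x(x, z) * p_y1(x, z) for z in (0, 1))
    marginal = sum(P_Z[z] * p_x(x, z) for z in (0, 1))
    return joint / marginal


def do(x: int) -> F:
    """P(Y = 1 | do(X = x)) via backdoor adjustment: sum over z of P(Y = 1 | x, z) P(z)."""
    return sum(P_Z[z] * p_y1(x, z) for z in (0, 1))


observed_gap = observe(1) - observe(0)
causal_effect = do(1) - do(0)
print(f"observed gap:  {float(observed_gap):.2f}")   # 0.46
print(f"causal effect: {float(causal_effect):.2f}")  # 0.10
```

The naive comparison overstates the action’s effect more than fourfold, because most of the observed gap is the confounder at work.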

Consider an example: condensation on a pipe and room humidity are causing manufacturing issues. There is an association between the condensation and the humidity. But what caused what? Did the condensation on the pipe cause the humidity, or did the humidity cause the condensation? It’s critical to know, as the remedy depends on that diagnosis. Without AI causality, LLMs or predictive models cannot be relied on to make this determination.
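The pipe example can be sketched as two competing structural models that fit the same observations but disagree under intervention. The coefficients, the baseline values, and the function names below are hypothetical and chosen only for illustration. Each model evaluates its equations in causal order, and the optional `interventions` dict forces a variable to a value, which plays the role of Pearl’s do-operator.

```python
from typing import Optional


def humidity_causes_condensation(interventions: Optional[dict] = None) -> dict:
    """Hypothesis A: room humidity drives condensation on the pipe."""
    interventions = interventions or {}
    humidity = interventions.get("humidity", 70.0)                     # % relative humidity
    condensation = interventions.get("condensation", 0.5 * humidity)   # condensation index
    return {"humidity": humidity, "condensation": condensation}


def condensation_causes_humidity(interventions: Optional[dict] = None) -> dict:
    """Hypothesis B: condensation on the pipe drives room humidity."""
    interventions = interventions or {}
    condensation = interventions.get("condensation", 35.0)
    humidity = interventions.get("humidity", 2.0 * condensation)
    return {"humidity": humidity, "condensation": condensation}


MODELS = {
    "humidity -> condensation": humidity_causes_condensation,
    "condensation -> humidity": condensation_causes_humidity,
}

for name, model in MODELS.items():
    observed = model()                        # no intervention: what we passively see
    dehumidified = model({"humidity": 40.0})  # do(humidity = 40): run a dehumidifier
    print(f"{name}: observed={observed}, after dehumidifying={dehumidified}")
```

Both hypotheses produce identical observations (humidity 70, condensation 35), so passive data cannot separate them. Under hypothesis A, dehumidifying cuts condensation to 20. Under hypothesis B, it leaves condensation unchanged at 35. The choice of remedy depends entirely on which causal direction holds.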

For AI agents to truly help human workers make reliable, explainable decisions, they must operate on all three principles to turn AI outcomes into trustworthy actions.

Simply put, it’s our view that as agentic designs move from lower-stakes automation into higher-consequence decision-making, enterprises are colliding with a gating constraint: Trust.

As explained in the associated podcast, Hebner summarized it as:

“When decisions become consequential, most of today’s AI still struggles to explain, justify, defend the decisions, and…audit them.”

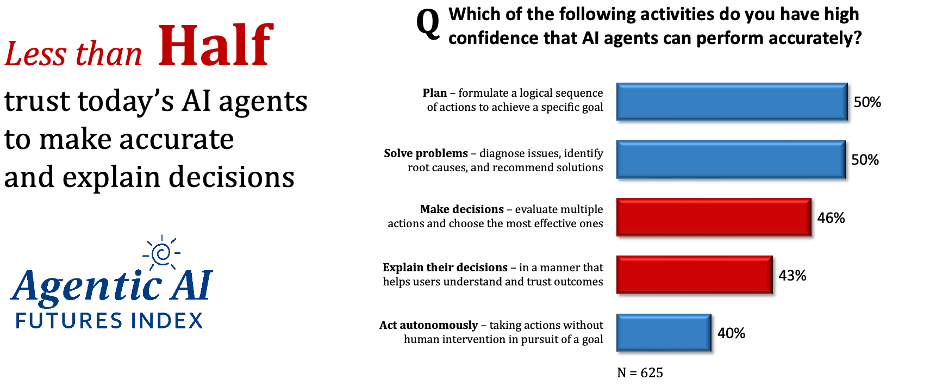

The Agentic AI Futures Index shows that enterprise AI leaders understand the challenge: only 46% believe agents can make trustworthy decisions and explain (and justify) them in a way that builds confidence.

The executive takeaway is direct: the infusion of causal AI decision intelligence is needed to enable AI agents to move from “here is what we predict” to “here is what will change if we act, why we believe it, and what could invalidate it.” That is the bridge from fluent outputs to defensible decisions.

Causal AI in Agentic Systems

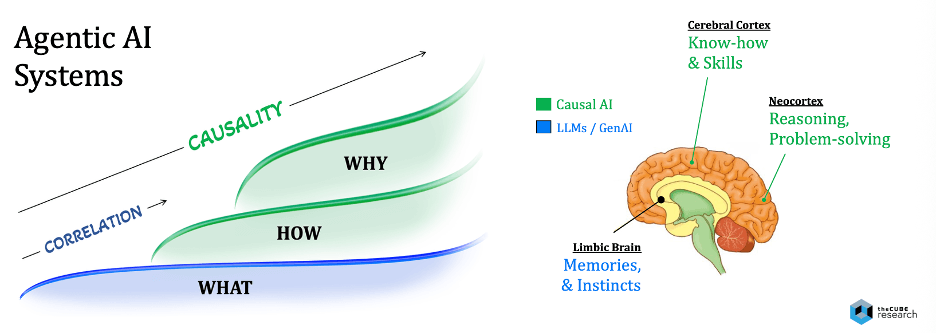

Agentic AI systems will struggle to make decision-grade, explainable, and trustworthy decisions if they cannot reliably answer three core questions:

- What is happening (and what is likely to happen)?

- How can we achieve a new outcome if we do things differently?

- Why is one action preferable to another, given the consequences?

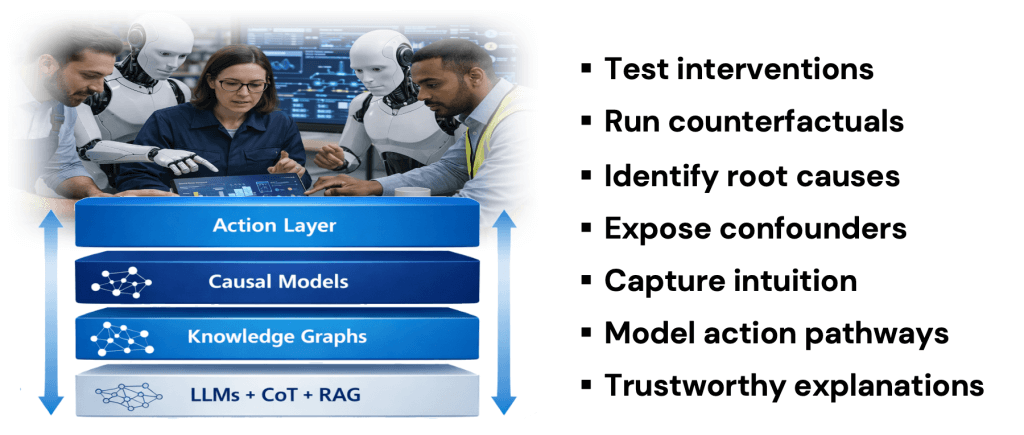

AI causality is the “why layer” that sits on top of the LLMs from which AI agents are built, turning fluent systems into defensible decision systems. It infuses AI systems with the mathematical science of why things happen.

In the Next Frontiers of AI podcast on this topic, Joel Sherlock simplified the enterprise value proposition as auditability and intervention testing.

“There are some decisions that need audit trails…you need to be able to explain things. And it’s hard to justify decisions when you cannot show that you tested alternate options. Just saying ‘AI told me to do it’ is not a trustworthy governance or audit strategy.”

He is right on. What good is a decision if you cannot explain it with confidence or enable others to audit and challenge your thought process?

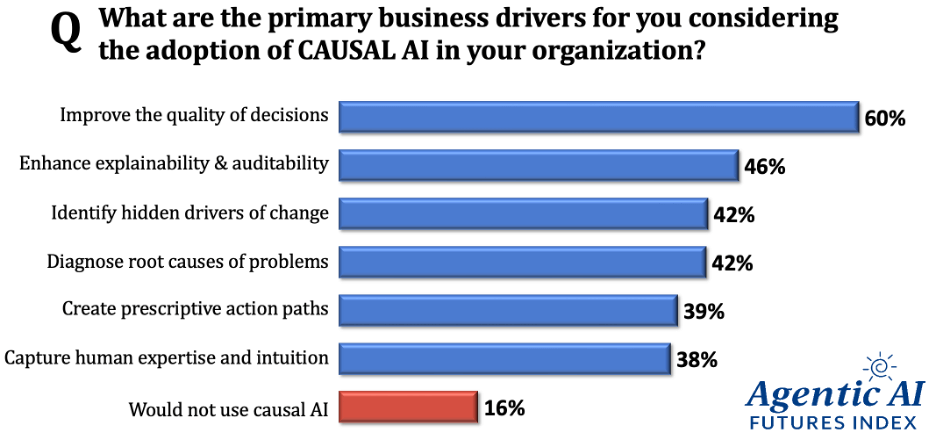

As found in the Agentic AI Futures Index survey, AI leaders are increasingly discovering that causal AI decision intelligence may be the key ingredient they need to address these challenges. Among respondents familiar with causal AI, the top drivers are decision quality (60%), explainability (46%), and auditability (46%).

Enterprises are not exploring causal AI as an academic upgrade. They are pulling it into their architectures to close specific decision-intelligence gaps. These priorities align directly with where LLM + Chain-of-Thought + RAG systems break down at scale.

Causality, when infused with LLM + Chain-of-Thought + RAG, enables AI agents to transition from generating plausible outputs to decision-grade outcomes by providing mechanism-level reasoning grounded in the principles of cause-and-effect. In turn, it allows enterprises to reliably engage with AI systems to:

- Test interventions: determine consequences of alternate actions

- Run counterfactuals: evaluate “what if we did X instead?”

- Identify root causes: detect and rank causal drivers of outcomes

- Expose confounders: identify misleading or hidden influences

- Capture intuition: encode expert assumptions and constraints

- Model pathways: understand multi-step causal chains

- Generate trustworthy explanations: explain why actions outperform alternatives
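The counterfactual capability in the list above (“what if we did X instead?”) follows Pearl’s three-step recipe of abduction, action, and prediction. This minimal sketch assumes a hypothetical linear structural model in which a discount drives demand and both drive revenue. The coefficients and the `Observation` and `counterfactual_revenue` names are invented for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Observation:
    discount: float  # X: the action actually taken
    demand: float    # M: mediator observed for this case
    revenue: float   # Y: outcome observed for this case


# Hypothetical structural equations (illustrative coefficients):
#   demand  = 10 + 4 * discount + u_demand
#   revenue = 2 * demand - 3 * discount + u_revenue
def counterfactual_revenue(obs: Observation, new_discount: float) -> float:
    # 1) Abduction: recover the case-specific noise terms consistent with what was observed.
    u_demand = obs.demand - (10 + 4 * obs.discount)
    u_revenue = obs.revenue - (2 * obs.demand - 3 * obs.discount)
    # 2) Action: set discount to the alternative value, i.e. do(discount = new_discount).
    # 3) Prediction: push the same noise terms through the modified equations.
    demand = 10 + 4 * new_discount + u_demand
    return 2 * demand - 3 * new_discount + u_revenue


actual = Observation(discount=1, demand=16, revenue=30)
print(counterfactual_revenue(actual, new_discount=3))  # 40
print(counterfactual_revenue(actual, new_discount=1))  # 30
```

Replaying the action that was actually taken reproduces the observed revenue, which is a useful sanity check on the abduction step. The alternative discount is then evaluated for this specific case rather than for an average one.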

In other words, causal AI does not replace LLMs; it complements them: LLMs can help propose options and communicate decisions; causal models help validate which actions are likely to work, why, and under what conditions. When integrated into a unified architecture, agents will be significantly better equipped to know:

- WHAT to do: While LLMs and predictive models excel at correlating variables and producing plausible next steps, they remain vulnerable to hallucination, hidden confounders, and brittle generalization. Causality acts as a checkpoint to reduce those vulnerabilities.

- HOW to do it: Agentic systems are designed to achieve goals, which requires planning, constraints, and multi-step reasoning across environments, tasks that LLMs were not designed to handle. Causality helps fill the gap by providing an understanding of cause and effect, making LLMs more capable.

- WHY do it: Decision-grade systems must evaluate consequences and defend choices via mechanism-level reasoning, not post-hoc narrative. Because causal systems can model the precise chain of events leading to a desired outcome, they enable LLMs to learn how different actions or conditions may change an outcome.

Infusing causality into today’s correlative AI systems is not only necessary but also inevitable, because correlation-based intelligence hits a hard ceiling the moment enterprises expect AI to make and defend consequential decisions.

No matter how sophisticated a predictive model is, it still primarily learns statistical associations between behaviors and outcomes. That is fundamentally different from proving that an outcome happened because of a behavior, and conflating the two creates an incubator for hallucinations, bias, and fragile decisioning, especially when conditions change or evidence conflicts.

Causality is the upgrade that breaks that ceiling, enabling human workers to test scenarios, understand consequences, explain true drivers, and analytically problem-solve, ultimately knowing what to do, how to do it, and why one action is better than another to shape outcomes.

Put simply, humans are causal by nature, so AI must become causal by nature: LLMs resemble the limbic brain, optimizing for fluent, memory-like pattern completion and task automation, while causal AI provides the equivalent of cortical reasoning, translating experience into durable know-how and higher-order judgment that supports planning, problem-solving, and decision-making to enterprise standards.

For these reasons, it’s our view here at theCUBE Research that causal AI decision intelligence is not only a game-changing advancement but also a key driver of trustworthy, higher-ROI agentic AI use cases – and the realization of digital labor transformation strategies.

The Democratization of Causal AI

While the promise of causal AI decision intelligence is compelling, the reality is that causality’s mathematical foundations and engineering requirements are non-trivial, particularly when you attempt to integrate causal methods into LLM-based agent stacks. Up to now, the relatively small cohort of enterprises that have successfully pushed causal AI into production, often cited in the 10–15% range, have typically depended on elite skill sets. In practice, that has meant highly specialized teams, frequently including PhD-level expertise in causal inference and the platform engineering required to productionize these methods.

The Agentic AI Futures Index data underscores that demand is not the limiting factor; barriers are. Most organizations want the benefits of causal decision intelligence, but they see material barriers to adoption. Specifically, among those who wish to adopt causal AI, roughly two-thirds cite concerns that cluster around three issues:

- Difficulty of implementation

- Lack of integration into their existing AI stack

- Lack of internal skills and expertise

This is the practical reality of the market today: enterprises are motivated by decision quality, explainability, and auditability, yet they remain constrained by complexity, integration overhead, and scarce talent.

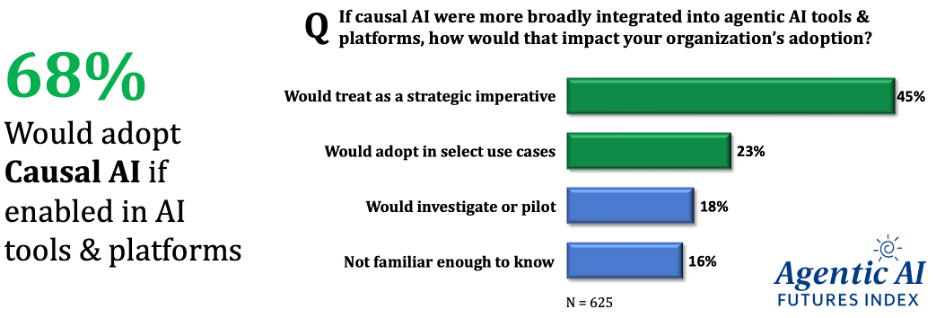

However, data from the Agentic AI Futures Index suggests that 68% of respondents would be more likely to adopt causal AI if it were more broadly integrated into agentic AI tools and platforms. In other words, the market is signaling a clear path forward: reduce the cognitive and engineering burden by integrating causal decision intelligence into the platforms enterprises already use to build and govern agents, and adoption moves from niche capability to mainstream architectural ingredient.

The good news is that the market is experiencing an accelerating democratization of causal AI, where causal decision intelligence becomes accessible to “mere mortals” through abstraction, integration, and productization. The most important lever is not asking every enterprise to hire causal PhDs. It is embedding causal capabilities directly into agentic AI tools and platforms, so causal reasoning becomes a native service: guided modeling, intervention testing, counterfactual analysis, confounder detection, and explainable causal drivers, delivered through workflows that teams can operationalize.

A causal decision intelligence marketplace is rapidly emerging, driven by the same enterprise-forcing function now shaping agentic AI. While the commercial market remains somewhat ill-formed, the direction is clear: causal methods are moving from research-grade tooling into productized decision intelligence, increasingly optimized for agentic AI workflows (root-cause reasoning, intervention planning, counterfactual “what-if” analysis, and confounder detection).

We expect this marketplace to solidify and expand from here. The consensus view of six independent market studies compiled by theCUBE Research indicates a projected 41% CAGR through 2030, resulting in a marketplace approaching $1 billion.

The drivers behind this marketplace include:

- Open-source Momentum – Open source has already reached meaningful developer scale, totaling ~24K GitHub stars and ~4K forks across the DoWhy, EconML, CausalML, PyWhy, Tigramite, and causal-learn libraries. This is complemented by vendor investment such as AWS’s contributions to DoWhy for root-cause analysis, Salesforce’s CausalAI library, and the Databricks causal AI accelerator. This breadth signals an expanding base of practitioners building causal capabilities into real systems.

- AI platform Heavyweights – This dynamic remains a major potential market multiplier, making causality a standardized architectural ingredient rather than a niche specialty. For example, Microsoft continues to invest in causal inference as a dedicated research area, and IBM Research has published on causally augmented business processes. Meta, Google, AWS, and OpenAI also appear to be investing in causal AI research, which may ultimately lead to commercialization.

- Domain-specific Application – A growing set of application vendors are embedding causal methods directly into outcomes-focused applications. One example is Aitia’s “Gemini Digital Twins,” which apply causal AI-driven models to test hypotheses and run counterfactual experiments for drug discovery at scale.

- AI Decision Intelligence tools – A fast-expanding cohort is forming to meet enterprise demand for decision-grade, agent-ready causality. A recent third-party study estimates that over 60 companies are active in this space, signaling a rapidly thickening marketplace.

As an example of how causal AI decision intelligence is being productized and democratized for the masses, check out Causify.

Causify provides an enterprise-grade causal AI decision engine and a set of packaged industry solutions, including Sentinel for manufacturing, Grid for energy & utilities, Horizon for supply chain, and Optima for financial services. These solutions simplify and speed the deployment of AI decision intelligence, interactive explainability, and auditable intervention planning using causal reasoning. The platform’s workflow enables clients to:

- Connect operational data (e.g., ERP/IoT)

- Automatically map cause-and-effect mechanisms

- Simulate “what-if” interventions

- Rank recommended actions by projected impact

- Deploy, trace, and monitor decisions in production
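The “simulate what-if interventions” and “rank recommended actions by projected impact” steps above can be illustrated generically. This is not Causify’s API or method. It is a minimal sketch that assumes a hand-written, hypothetical structural model of a production line, with invented coefficients, and candidate actions expressed as do() overrides. The `simulate` and `rank_actions` functions are illustrative names only.

```python
# Hypothetical structural model (illustrative coefficients, not any vendor's model).
BASELINE = {"humidity": 70.0, "line_speed": 100.0}  # root variables at current settings


def simulate(overrides: dict) -> dict:
    """Evaluate the structural equations in causal order, honoring do() overrides."""
    values = dict(BASELINE)
    values.update({k: v for k, v in overrides.items() if k in BASELINE})
    # Downstream variables are computed from their causes unless directly overridden.
    values["condensation"] = overrides.get("condensation", 0.5 * values["humidity"])
    values["defect_rate"] = 0.1 * values["condensation"] + 0.02 * values["line_speed"]
    return values


# Candidate actions, each expressed as an intervention on one variable.
CANDIDATES = {
    "run dehumidifier": {"humidity": 50.0},
    "insulate pipe": {"condensation": 10.0},
    "slow the line": {"line_speed": 80.0},
}


def rank_actions(candidates: dict) -> list:
    """Rank actions by projected reduction in defect rate versus doing nothing."""
    baseline = simulate({})["defect_rate"]
    impacts = {
        name: round(baseline - simulate(overrides)["defect_rate"], 2)
        for name, overrides in candidates.items()
    }
    return sorted(impacts.items(), key=lambda item: item[1], reverse=True)


for name, impact in rank_actions(CANDIDATES):
    print(f"{name}: projected defect-rate reduction {impact}")
```

With these assumed coefficients, insulating the pipe ranks first (2.5), dehumidifying ranks second (1.0), and slowing the line ranks last (0.4). In practice, the mechanisms would be learned or validated rather than hard-coded, and the ranking would also weigh cost and uncertainty.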

Their customers, in turn, can use Causify to do things they often struggle to operationalize with LLM + CoT + RAG stacks alone: identify and rank true causal drivers, test policy changes before committing resources, adapt to regime shifts, and generate complete audit trails for high-stakes decisions and compliance mandates.

As Joel Sherlock, the CEO of Causify, explained:

“We work with leaders who can’t afford to be wrong. Leaders who want to leverage the power of AI to make business-critical decisions in a transparent, explainable, trustworthy, and auditable way. They understand that real business decisions require an understanding of why something is going to happen, and what changes if you act differently.”

Causify’s capabilities illustrate the core dynamic behind the causal AI democratization trend: enterprises do not need to hire causal engineers or PhDs, because the platform abstracts the hardest parts and integrates into existing agentic AI stacks and everyday operational workflows.

In the end, democratization is not just about simplifying causality; it’s about making it operational so “mere mortals” can build decision-intelligence use cases that are defensible, repeatable, and ready for enterprise governance.

What To Do And When

Causal AI decision intelligence promises to become a significant advancement in the progression of AI. As Microsoft Research has stated:

“Causal machine learning is poised to be the next AI revolution.”

In addition, agentic AI will not scale in the enterprise on fluency alone. It will never meet its promise of value without the ability of AI agents to make trustworthy decisions. The winners in 2026 will be those who treat decision intelligence as an architectural mandate, not as an academic discussion or future feature.

If you are pursuing agentic AI, the practical path is:

- Strategically view agentic and causal AI as two sides of the same coin

- Map where decisions become consequential; focus on those use cases

- Prioritize use cases requiring defensibility, compliance, and auditability

- Engage the ecosystem of causal AI experts and providers

- Experiment early, start pilots, learn, and kickstart projects


If you’d like to discuss how causality changes your agentic roadmap, reach out here on theCUBE Research or connect with me on LinkedIn.
