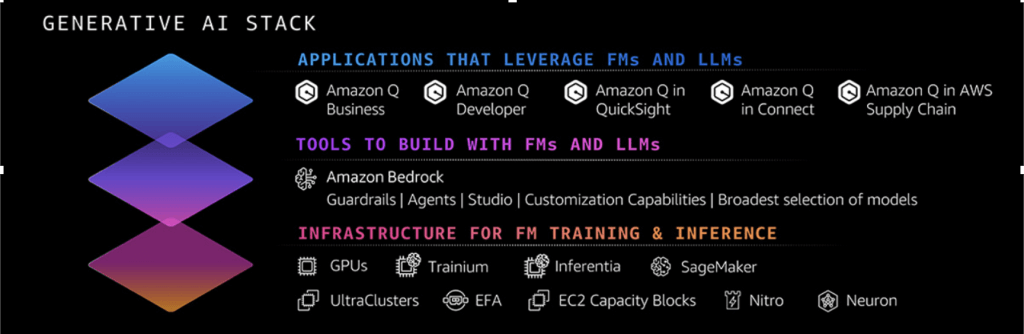

Amazon Web Services (AWS) recently announced several significant enhancements to its generative AI offerings at AWS Summit New York, further solidifying its position in the rapidly evolving AI landscape. These updates span the entire AI stack, from infrastructure to applications, and demonstrate AWS’s commitment to providing enterprises with robust, scalable, and increasingly accessible AI tools. Here’s a peek at AWS’s generative AI stack: the infrastructure layer sits at the bottom, the tools for building sit in the middle layer, and the applications sit at the top.

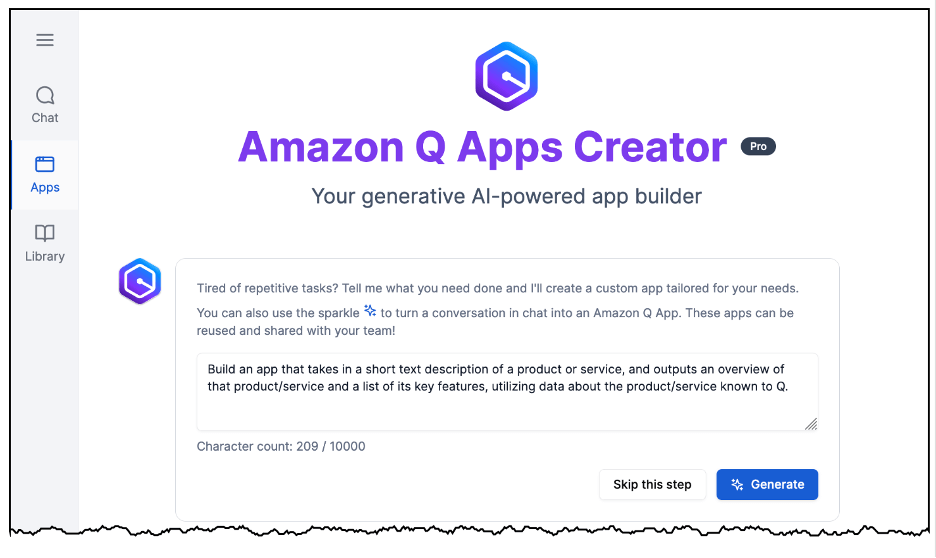

Amazon Q Apps

Amazon Q, AWS’s generative AI-powered assistant that can be tailored to specific business use cases, was first introduced at AWS re:Invent late last year and rolled out initially to developers and IT pros. Amazon Q was trained on more than 17 years of AWS knowledge and, in my opinion, is a solid competitor to Microsoft Copilot for Microsoft 365, Google Duet AI for Workspace, and OpenAI’s ChatGPT Enterprise. I find Amazon Q’s conversational capabilities particularly appealing and can easily imagine them being put to work across the workplace, whether at the developer, IT pro, or general corporate user level.

Amazon is wasting no time here. AWS Summit NY placed considerable focus on Amazon’s Q suite of offerings, which continues to grow at a fairly rapid pace. At the application layer, the recently announced general availability of Amazon Q Apps Creator stands out as a notable development. This feature, part of Amazon Q Business, allows users to create applications from natural language prompts or even from existing conversations. While the concept of low-code/no-code development isn’t new, integrating generative AI and letting users build with natural language or other conversational prompts takes it to another level. While I will admit to growing somewhat weary of the term “democratization,” the reality is that this capability can democratize app creation within organizations and empower anyone, even individuals with no coding ability, to conceptualize and create apps. However, the true test will be the quality and complexity of the apps created, as well as how easily they can be integrated into existing workflows.

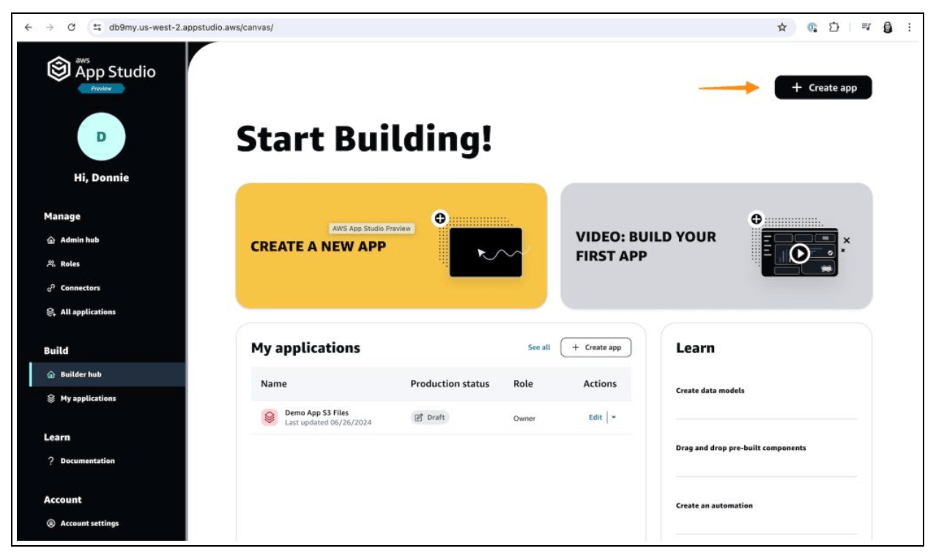

AWS App Studio Further Democratizes App Development

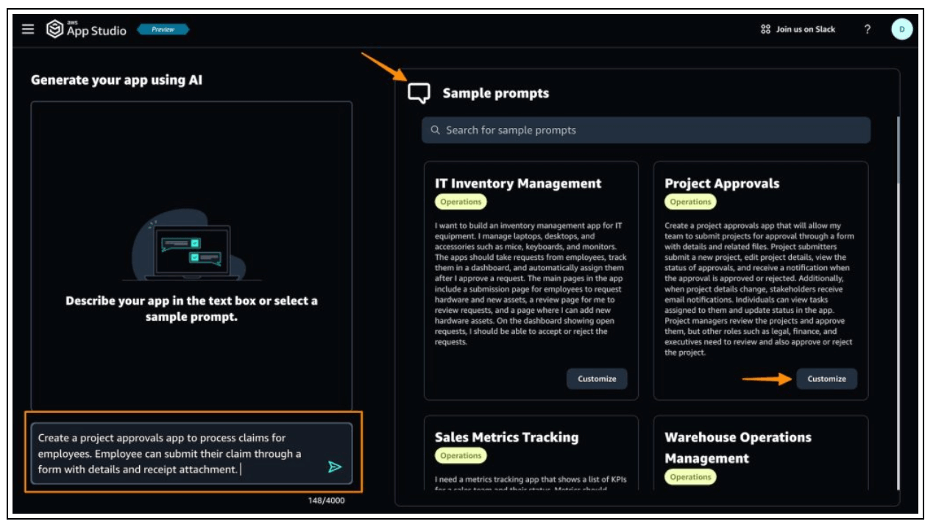

Other news out of the AWS Summit NY event included the public preview release of AWS App Studio, which pushes this concept of democratization even further. By enabling users — think data engineers and IT project managers — to create multi-page applications with data models and business logic from simple prompts, AWS is clearly targeting the growing demand for rapid application development.

In today’s fast-paced business world, speed is king, queen, and everything in between. I’m particularly excited about the ability to connect these generated apps to AWS services (think Aurora, S3, and DynamoDB), as well as to third-party platforms like Salesforce, Zendesk, Twilio, and HubSpot. This could be an absolute game-changer for organizations looking to quickly prototype and deploy custom solutions, and it will hopefully inspire individuals throughout their organizations to dive in and begin experimenting. Once that happens, the real fun begins.

AWS has made getting started on this front incredibly easy: with AWS App Studio, users can either generate an app with AI from simple prompts or build one from scratch.

I should also mention that App Studio is designed to be affordable, which is a smart move on the part of AWS. Building with AWS App Studio is free; customers pay only for the time employees spend using the published apps. AWS claims this could save as much as 80% compared to other low-code solutions on the market. App Studio is now available in preview in the US West (Oregon) Region.

Amazon Bedrock News

On the infrastructure and model development front, AWS shared enhancements to Amazon Bedrock at AWS Summit NY that I find particularly noteworthy. The addition of fine-tuning capabilities, which create a copy of a model adapted for specific tasks, addresses a crucial need for enterprises looking to adapt foundation models to their specific use cases. By allowing customers to use their own labeled data to customize models, AWS is opening up possibilities for more accurate and context-aware AI applications. Based on the buzz I’ve seen, customers are particularly excited about these fine-tuning capabilities.

The expansion of data sources for Retrieval-Augmented Generation (RAG) is another significant step. By supporting connections to popular platforms like Salesforce, Confluence, and SharePoint, AWS is clearly focused on making it easier for organizations to leverage their existing data repositories to enhance AI model outputs, which is something every customer wants, and quickly! This move recognizes the reality that most enterprises have valuable data spread across multiple systems and aims to make that data more accessible and useful in AI applications.

The improvements to AI agents within Bedrock, particularly the ability to retain memory across interactions and to interpret code, showcase AWS’s focus on creating more intelligent and capable AI assistants, as well as more personalized user experiences. In practice, this means that if you get distracted or interrupted while working on something, the AI agent remembers where you left off and is ready for you when you pick the work back up. These enhancements could lead to more natural and context-aware interactions in areas like customer service and data analysis.

Agents for Amazon Bedrock can also generate and execute code in a secure environment, automating analytical queries that model reasoning alone might not be able to answer.

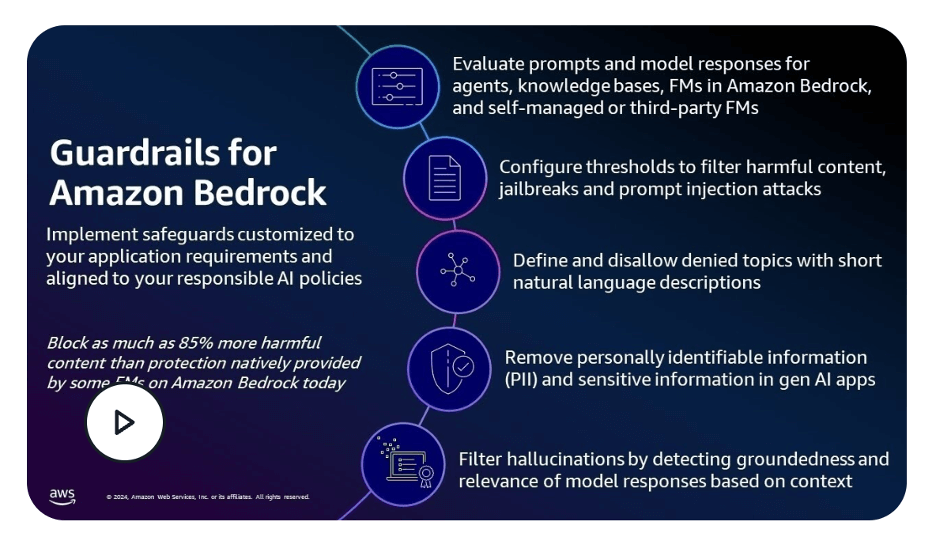

Guardrails for Amazon Bedrock

Announcements from AWS Summit NY on the Guardrails for Amazon Bedrock front included the addition of contextual grounding checks, which can detect hallucinations and add customizable safeguards on top of the native protections of foundation models (FMs). AWS claims Guardrails can block as much as 85% more harmful content and filter over 75% of hallucinated responses for RAG and summarization workloads. The beauty of Guardrails for Amazon Bedrock is that users can apply customized safety, privacy, and truthfulness (responsible AI) protections based on corporate policies within a single solution, providing consistency across all AI applications. Also worth mentioning is that users can create multiple guardrails tailored to different use cases and applications.

Wrapping Up and What’s Ahead

From a competitive standpoint, while the updates announced at AWS Summit NY may not be groundbreaking in isolation, collectively they represent a comprehensive approach to generative AI that leverages AWS’s strengths in cloud infrastructure and enterprise services. The focus on “de-risking” AI adoption for enterprises through features like contextual grounding checks in Bedrock Guardrails demonstrates an understanding of the concerns many organizations have about AI hallucinations and accuracy. I see this as the right solution set at the right time.

However, AWS faces stiff competition in the generative AI space from other tech giants like Google, Microsoft, and IBM, as well as from any number of AI-focused startups. While AWS benefits from its extensive cloud customer base and reputation for reliability, it will need to continue innovating and potentially develop more specialized AI solutions for different industries to maintain its edge.

I see that as one of the key parts of the value proposition of the collaboration between AWS and Deloitte, also announced at AWS Summit NY. Customized AI solutions for different verticals and industries will no doubt speed enterprise deployment and scale enterprise adoption, and Deloitte has the customer base, industry knowledge, and expertise to help move this along. The collaboration is sure to reap benefits for both AWS and Deloitte at a time when most organizations are still in the early stages of AI exploration and experimentation. Speeding adoption, scaling throughout the organization, and targeting specific verticals and industry use cases together make for an incredibly appealing proposition.

Looking ahead, the key challenges for AWS will be in helping customers effectively implement these new AI capabilities at scale, ensuring model performance and accuracy, and addressing ongoing concerns around AI ethics and governance. As enterprises move from experimentation to production with generative AI, AWS’s ability to provide end-to-end solutions that are both powerful and responsible will be crucial.

In conclusion, these updates to AWS’s generative AI stack announced at AWS Summit NY represent a significant step forward in making generative AI more accessible and practical for enterprises. While there’s still much work to be done in realizing the full potential of these technologies, AWS is clearly positioning itself as a key player in shaping the future of AI in the enterprise.
