A Big Data Manifesto from the Wikibon Community

Providing effective business analytics tools and technologies to the enterprise is a top priority of CIOs and for good reason. Effective business analytics – from basic reporting to advanced data mining and predictive analytics — allows data analysts and business users alike to extract insights from corporate data that, when translated into action, deliver higher levels of efficiency and profitability to the enterprise.

Underlying every business analytics practice is data. Traditionally, this meant structured data created and stored by enterprises themselves, such as customer data housed in CRM applications, operational data stored in ERP systems or financial data tallied in accounting databases. But the volume and type of data now available to enterprises — and the need to analyze it in near-real time for maximum business value — is growing rapidly thanks to the popularity of social media and networking services like Facebook and Twitter, data-generating sensor equipped and networked devices, both machine- and human-generated online transactions, and other sources of unstructured and semi-structured data. We call this Big Data.

Traditional data management and business analytics tools and technologies are straining under the added weight of Big Data and new approaches are emerging to help enterprises gain actionable insights from Big Data. These new approaches – namely an open source framework called Hadoop, NoSQL databases such as Cassandra and Accumulo, and massively parallel analytic databases from vendors like EMC Greenplum, HP Vertica, and Teradata Aster Data – take a radically different approach to data processing, analytics and applications than traditional tools and technologies. This means enterprises likewise need to radically rethink the way they approach business analytics both from a technological and cultural perspective.

This will not be an easy transition for most enterprises, but those that undertake the task and embrace Big Data as the foundation of their business analytics practices stand to gain a significant competitive advantage over their more timid rivals. Big Data combined with sophisticated business analytics has the potential to give enterprises unprecedented insights into customer behavior and volatile market conditions, allowing them to make data-driven business decisions faster and more effectively than the competition.

From storage and server technology that support Big Data processing to front-end data visualization tools that bring new insights alive for end-users, the emergence of Big Data also provides significant opportunities for hardware, software and services vendors. Those vendors that aid the enterprise in its transition to Big Data practitioner, both in the form of identifying Big Data use cases that add business value and developing the technology and services to make Big Data a practical reality, will be the ones that thrive.

Make no mistake: Big Data is the new definitive source of competitive advantage across all industries. Enterprises and technology vendors that dismiss Big Data as a passing fad do so at their peril and, in our opinion, will soon find themselves struggling to keep up with more forward-thinking rivals. For those organizations that understand and embrace the new reality of Big Data, the possibilities for innovation, improved agility, and increased profitability are nearly endless.

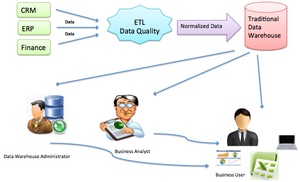

Data Processing and Analytics: The Old Way

Traditionally, data processing for analytic purposes followed a fairly static blueprint. Through the regular course of business, enterprises create modest amounts of structured data with stable data models via such enterprise applications as CRM, ERP and financial systems. Data integration tools are used to extract, transform and load the data from enterprise applications and transactional databases to a staging area where data quality and data normalization (hopefully) occur and the data is modeled into neat rows and columns. The modeled, cleansed data is then loaded into an enterprise data warehouse. This routine usually occurs on a scheduled basis – daily or weekly, sometimes more frequently.

From there, data warehouse administrators create and schedule regular reports to run against normalized data stored in the warehouse, which are distributed to the business. They also create dashboards and other limited visualization tools for executives and management.

Business analysts, meanwhile, use data analytics tools/engines to run advanced analytics against the warehouse, or more often against sample data migrated to a local data mart due to size limitations. Non-expert business users perform basic data visualization and limited analytics against the data warehouse via front-end business intelligence tools from vendors like SAP BusinessObjects and IBM Cognos. Data volumes in traditional data warehouses rarely exceeded multiple terabytes (and even that much is rare) as large volumes of data strain warehouse resources and degrade performance.

The Changing Nature of Big Data

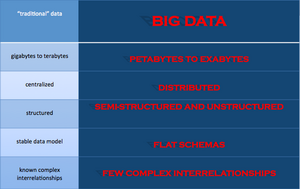

The advent of the Web, mobile devices and other technologies has caused a fundamental change to the nature of data. Big Data has important, distinct qualities that differentiate it from “traditional” corporate data. No longer centralized, highly structured and easily manageable, now more than ever data is highly distributed, loosely structured (if structured at all), and increasingly large in volume.

Specifically:

- Volume – The amount of data created both inside corporations and outside the firewall via the Web, mobile devices, IT infrastructure and other sources is increasing exponentially each year.

- Type – The variety of data types is increasing, and includes unstructured text-based data and semi-structured data like social media data, location-based data, and log-file data.

- Speed – The speed at which new data is being created – and the need for real-time analytics to derive business value from it — is increasing thanks to digitization of transactions, mobile computing and the sheer number of Internet and mobile device users.

Broadly speaking, Big Data is generated by a number of sources, including:

- Social Networking and Media: There are currently more than 700 million Facebook users, 250 million Twitter users and 156 million public blogs. Each Facebook update, Tweet, blog post and comment creates multiple new data points – structured, semi-structured and unstructured – sometimes called “data exhaust”.

- Mobile Devices: More than 5 billion mobile phones are in use worldwide. Each call, text and instant message is logged as data. Mobile devices, particularly smart phones and tablets, also make it easier to use social media and other data-generating applications. Mobile devices also collect and transmit location data.

- Internet Transactions: Billions of online purchases, stock trades and other transactions happen every day, including countless automated transactions. Each creates a number of data points collected by retailers, banks, credit cards, credit agencies and others.

- Networked Devices and Sensors: Electronic devices of all sorts – including servers and other IT hardware, smart energy meters and temperature sensors — all create semi-structured log data that record every action.

Traditional data warehouses and other data management tools are not up to the job of processing and analyzing Big Data in a time- or cost-efficient manner. Namely, data must be organized into relational tables — neat rows and columns — before a traditional enterprise data warehouse can ingest it.

Due to the time and manpower needed, applying such structure to vast amounts of unstructured data is impractical. Further, scaling up a traditional enterprise data warehouse to accommodate potentially petabytes of data would require unrealistic financial investments in new, often (depending on the vendor) proprietary hardware. Data warehouse performance would also suffer due to a single choke point for loading data.

Therefore new ways of processing and analyzing Big Data are required.

New Approaches to Data Management and Analytics

There are a number of approaches to processing and analyzing Big Data, but most share some common characteristics. Namely, they take advantage of commodity hardware to enable scale-out, parallel processing techniques; employ non-relational data storage capabilities to process unstructured and semi-structured data; and apply advanced analytics and data visualization technology to Big Data to convey insights to end-users.

Wikibon has identified three Big Data approaches that it believes will transform the business analytics and data management markets.

Hadoop

Hadoop is an open source framework for processing, storing and analyzing massive amounts of distributed, unstructured data. Originally created by Doug Cutting at Yahoo!, Hadoop was inspired by MapReduce, a programming model developed by Google in the early 2000s for indexing the Web. It was designed to handle petabytes and exabytes of data distributed over multiple nodes in parallel.

Hadoop clusters run on inexpensive commodity hardware so projects can scale-out without breaking the bank. Hadoop is now a project of the Apache Software Foundation, where hundreds of contributors continuously improve the core technology. Fundamental concept: Rather than banging away at one, huge block of data with a single machine, Hadoop breaks up Big Data into multiple parts so each part can be processed and analyzed at the same time.

How Hadoop Works

A client accesses unstructured and semi-structured data from sources including log files, social media feeds and internal data stores. It breaks the data up into “parts,” which are then loaded into a file system made up of multiple nodes running on commodity hardware. The default file store in Hadoop is the Hadoop Distributed File System, or HDFS. File systems such as HDFS are adept at storing large volumes of unstructured and semi-structured data, as they do not require data to be organized into relational rows and columns.

Each “part” is replicated multiple times and loaded into the file system so that if a node fails, another node has a copy of the data contained on the failed node. A Name Node acts as facilitator, communicating information such as which nodes are available, where in the cluster certain data resides, and which nodes have failed back to the client.
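The splitting and replication described above can be sketched in a few lines of Python. This is a toy model, not HDFS code: the function names, the node names and the round-robin placement are all assumptions made for illustration (real HDFS placement is rack-aware), and the replication factor of 3 is used only because it is the usual HDFS default.

```python
def split_into_parts(data: bytes, part_size: int) -> list[bytes]:
    """Cut the input into fixed-size parts; the last part may be shorter."""
    return [data[i:i + part_size] for i in range(0, len(data), part_size)]


def place_replicas(num_parts: int, nodes: list[str], replication: int = 3) -> dict[int, list[str]]:
    """Assign each part to `replication` distinct nodes, round-robin.

    Hypothetical placement policy: real HDFS also considers racks and load.
    """
    if replication > len(nodes):
        raise ValueError("need at least as many nodes as replicas")
    return {
        part_id: [nodes[(part_id + r) % len(nodes)] for r in range(replication)]
        for part_id in range(num_parts)
    }


def surviving_copies(placement: dict[int, list[str]], failed_node: str) -> dict[int, list[str]]:
    """Return, for each part, the nodes that still hold a copy after one node fails."""
    return {
        part_id: [node for node in holders if node != failed_node]
        for part_id, holders in placement.items()
    }


parts = split_into_parts(b"x" * 1000, part_size=256)
nodes = ["node-a", "node-b", "node-c", "node-d"]  # hypothetical cluster
placement = place_replicas(len(parts), nodes)
after_failure = surviving_copies(placement, "node-b")

# Every part is still readable from at least one other node.
print(all(len(holders) >= 1 for holders in after_failure.values()))  # True
```

The `placement` dictionary plays roughly the role of the Name Node's bookkeeping: it records which nodes hold which part, so a client can be pointed to a surviving copy when a node fails.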

Once the data is loaded into the cluster, it is ready to be analyzed via the MapReduce framework. The client submits a “Map” job — usually a query written in Java – to one of the nodes in the cluster known as the Job Tracker. The Job Tracker refers to the Name Node to determine which data it needs to access to complete the job and where in the cluster that data is located. Once determined, the Job Tracker submits the query to the relevant nodes. Rather than bringing all the data back into a central location, processing then occurs at each node simultaneously, in parallel. This is an essential characteristic of Hadoop.

When each node has finished processing its given job, it stores the results. The client initiates a “Reduce” job through the Job Tracker in which results of the map phase stored locally on individual nodes are aggregated to determine the “answer” to the original query, then loaded on to another node in the cluster. The client accesses these results, which can then be loaded into one of several analytic environments for analysis. The MapReduce job has now been completed.
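The map and reduce phases described above can be illustrated with a single-process Python sketch that counts words. It does not use the Hadoop API, which is Java-based: each string in `parts` stands in for the data held on one node, and the function names are hypothetical. The intermediate grouping step (commonly called the shuffle) is not named in the text above and is included here as an assumption about how map output reaches the reducer.

```python
from collections import defaultdict


def map_phase(part: str) -> list[tuple[str, int]]:
    """Run on each node against its local part: emit a (word, 1) pair per word."""
    return [(word.lower(), 1) for word in part.split()]


def shuffle(mapped_outputs: list[list[tuple[str, int]]]) -> dict[str, list[int]]:
    """Group the intermediate pairs from all nodes by key."""
    grouped = defaultdict(list)
    for output in mapped_outputs:
        for key, value in output:
            grouped[key].append(value)
    return grouped


def reduce_phase(grouped: dict[str, list[int]]) -> dict[str, int]:
    """Aggregate the values for each key into the final answer."""
    return {key: sum(values) for key, values in grouped.items()}


# Each string stands in for the part stored on one node.
parts = ["big data big insights", "data beats opinion", "big clusters"]

mapped = [map_phase(part) for part in parts]  # would run in parallel on a cluster
counts = reduce_phase(shuffle(mapped))

print(counts["big"], counts["data"])  # 3 2
```

The point of the pattern is that `map_phase` only ever sees one part, so it can run wherever that part is stored; only the much smaller intermediate results need to move across the network.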

Once the MapReduce phase is complete, the processed data is ready for further analysis by data scientists and others with advanced data analytics skills. Data scientists can manipulate and analyze the data using any of a number of tools for any number of uses, including to search for hidden insights and patterns or to create the foundation to build user-facing analytic applications. The data can also be modeled and transferred from Hadoop clusters into existing relational databases, data warehouses and other traditional IT systems for further analysis and/or to support transactional processing.

Hadoop Technical Components

A Hadoop “stack” is made up of a number of components. They include:

- Hadoop Distributed File System (HDFS): The default storage layer in any given Hadoop cluster;

- Name Node: The node in a Hadoop cluster that provides the client information on where in the cluster particular data is stored and if any nodes fail;

- Secondary Node: A backup to the Name Node, it periodically replicates and stores data from the Name Node should it fail;

- Job Tracker: The node in a Hadoop cluster that initiates and coordinates MapReduce jobs, or the processing of the data.

- Slave Nodes: The grunts of any Hadoop cluster, slave nodes store data and take direction to process it from the Job Tracker.

In addition to the above, the Hadoop ecosystem is made up of a number of complementary sub-projects. NoSQL data stores like Cassandra and HBase are also used to store the results of MapReduce jobs in Hadoop. In addition to Java, some MapReduce jobs and other Hadoop functions are written in Pig, an open source language designed specifically for Hadoop. Hive is an open source data warehouse originally developed by Facebook that allows for analytic modeling within Hadoop.

Please see HBase, Sqoop, Flume and More: Apache Hadoop Defined for a guide to Hadoop’s components and sub-projects.

Hadoop: The Pros and Cons

The main benefit of Hadoop is that it allows enterprises to process and analyze large volumes of unstructured and semi-structured data, heretofore inaccessible to them, in a cost- and time-effective manner. Because Hadoop clusters can scale to petabytes and even exabytes of data, enterprises no longer must rely on sample data sets but can process and analyze ALL relevant data. Data scientists can apply an iterative approach to analysis, continually refining and testing queries to uncover previously unknown insights. It is also inexpensive to get started with Hadoop. Developers can download the Apache Hadoop distribution for free and begin experimenting with Hadoop in less than a day.

The downside to Hadoop and its myriad components is that they are immature and still developing. As with any young, raw technology, implementing and managing Hadoop clusters and performing advanced analytics on large volumes of unstructured data requires significant expertise, skill and training. Unfortunately, there is currently a dearth of Hadoop developers and data scientists available, making it impractical for many enterprises to maintain and take advantage of complex Hadoop clusters. Further, as Hadoop’s myriad components are improved upon by the community and new components are created, there is, as with any immature open source technology/approach, a risk of forking. Finally, Hadoop is a batch-oriented framework, meaning it does not support real-time data processing and analysis.

The good news is that some of the brightest minds in IT are contributing to the Apache Hadoop project, and a new generation of Hadoop developers and data scientists is coming of age. As a result, the technology is advancing rapidly, becoming both more powerful and easier to implement and manage. An ecosystem of vendors, both Hadoop-focused start-ups like Cloudera and Hortonworks and well-worn IT stalwarts like IBM and Microsoft, is working to offer commercial, enterprise-ready Hadoop distributions, tools and services to make deploying and managing the technology a practical reality for the traditional enterprise. Other bleeding-edge start-ups are working to perfect NoSQL (Not Only SQL) data stores capable of delivering near real-time insights in conjunction with Hadoop.

NoSQL

A related new style of database called NoSQL (Not Only SQL) has emerged that, like Hadoop, processes large volumes of multi-structured data. However, whereas Hadoop is adept at supporting large-scale, batch-style historical analysis, NoSQL databases are aimed, for the most part (though there are some important exceptions), at serving up discrete data stored among large volumes of multi-structured data to end-user and automated Big Data applications. This capability is sorely lacking from relational database technology, which simply can’t maintain needed application performance levels at Big Data scale.

In some cases, NoSQL and Hadoop work in conjunction. HBase, for example, is a popular NoSQL database modeled after Google BigTable that is often deployed on top of HDFS, the Hadoop Distributed File System, to provide low-latency, quick lookups in Hadoop.

NoSQL databases currently available include:

- HBase

- Cassandra

- MarkLogic

- Aerospike

- MongoDB

- Accumulo

- Riak

- CouchDB

- DynamoDB

The downside of most NoSQL databases today is that they traded ACID (atomicity, consistency, isolation, durability) compliance for performance and scalability. Many also lack mature management and monitoring tools. Both these shortcomings are in the process of being overcome by both the open source NoSQL communities and a handful of vendors — such as DataStax, Sqrrl, 10gen, Aerospike and Couchbase — that are attempting to commercialize the various NoSQL databases.

Massively Parallel Analytic Databases

Unlike traditional data warehouses, massively parallel analytic databases are capable of quickly ingesting large amounts of mainly structured data with minimal data modeling required and can scale-out to accommodate multiple terabytes and sometimes petabytes of data.

Most importantly for end-users, massively parallel analytic databases support near real-time results to complex SQL queries – also called interactive query capabilities – a notable missing capability in Hadoop, and in some cases the ability to support near real-time Big Data applications. The fundamental characteristics of a massively parallel analytic database include:

Massively parallel processing, or MPP, capabilities: As the name states, massively parallel analytic databases employ massively parallel processing, which supports the ingest, processing and querying of data on multiple machines simultaneously. The result is significantly faster performance than traditional data warehouses that run on a single, large box and are constrained by a single choke point for data ingest.

Shared-nothing architectures: A shared-nothing architecture ensures there is no single point of failure in some analytic database environments. In these cases, each node operates independently of the others so if one machine fails, the others keep running. This is particularly important in MPP environments in which, with sometimes hundreds of machines processing data in parallel, the occasional failure of one or more machines is inevitable.

Columnar architectures: Rather than storing and processing data in rows, as is typical with most relational databases, most massively parallel analytic databases employ columnar architectures. In columnar environments, only columns that contain the necessary data to determine the “answer” to a given query are processed, rather than entire rows of data, resulting in split-second query results. This also means data does not need to be structured into neat tables as with traditional relational databases.

Advanced data compression capabilities: These allow analytic databases to ingest and store larger volumes of data than otherwise possible and to do so with significantly fewer hardware resources than traditional databases. A database with 10-to-1 compression capabilities, for example, can compress 10 terabytes of data down to 1 terabyte. Data compression, and a related technique called data encoding, are critical to scaling to massive volumes of data efficiently.

Commodity hardware: Like Hadoop clusters, most – but certainly not all – massively parallel analytic databases run on off-the-shelf commodity hardware from Dell, IBM and others, so they can scale-out in a cost-effective manner.

In-memory data processing: Some, but certainly not all, massively parallel analytic databases use Dynamic RAM and/or Flash for some real-time data processing. Some, such as SAP HANA and Aerospike, are fully in-memory, while others use a hybrid approach that blends less expensive but lower performing disk-based storage for “colder” data with DRAM and/or flash for “hotter” data.
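To make the columnar idea from the list above concrete, here is a minimal Python sketch that stores the same three records both ways and counts how many values each layout has to read to total one column. The table, column names and functions are hypothetical, and the "values read" counter is a simplification standing in for disk I/O; no real analytic database is being modeled.

```python
# Hypothetical order records in a row-oriented layout: one dict per row.
rows = [
    {"order_id": 1, "region": "east", "amount": 120.0},
    {"order_id": 2, "region": "west", "amount": 75.5},
    {"order_id": 3, "region": "east", "amount": 30.0},
]


def to_columns(rows: list[dict]) -> dict[str, list]:
    """Pivot the rows into a column-oriented layout: one list per column."""
    columns: dict[str, list] = {}
    for row in rows:
        for name, value in row.items():
            columns.setdefault(name, []).append(value)
    return columns


def sum_from_rows(rows: list[dict], column: str) -> tuple[float, int]:
    """Total one column from a row store, counting every value in each row as read."""
    total, values_read = 0.0, 0
    for row in rows:
        values_read += len(row)  # the whole row is fetched to reach one field
        total += row[column]
    return total, values_read


def sum_from_columns(columns: dict[str, list], column: str) -> tuple[float, int]:
    """Total one column from a column store, reading only that column."""
    values = columns[column]
    return sum(values), len(values)


columns = to_columns(rows)
print(sum_from_rows(rows, "amount"))        # (225.5, 9)
print(sum_from_columns(columns, "amount"))  # (225.5, 3)
```

Both layouts give the same answer, but the column layout touches a third of the values here; the gap widens as tables gain more columns. Storing each column contiguously also tends to help compression, since neighboring values share a type and often repeat.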

Massively parallel analytic databases do have some blind spots, however. Most notably, they are not designed to ingest, process and analyze the semi-structured and unstructured data that are largely responsible for the explosion of data volumes in the Big Data Era.

Complementary Big Data Approaches

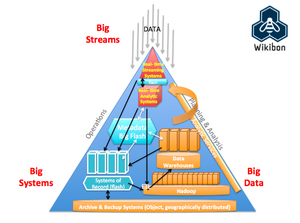

Hadoop, NoSQL and massively parallel analytic databases are not mutually exclusive. Far from it: Wikibon believes the three approaches are complementary to each other and can and should co-exist in many enterprises. Hadoop excels at processing and analyzing large volumes of distributed, unstructured data in batch fashion for historical analysis. NoSQL databases are adept at storing and serving up multi-structured data in near-real time for Web-based Big Data applications. And massively parallel analytic databases are best at providing near real-time analysis of large volumes of mainly structured data.

Historical analysis done in Hadoop can be ported into analytic databases for further analysis and/or integrated with structured data in traditional enterprise data warehouses, for example. Insights gleaned from Big Data analytics can (and should) be productionized via Big Data applications. Enterprises should aim for flexible Big Data architectures to enable these three technologies/approaches to share data and insights as seamlessly as possible.

There are a number of pre-built connectors to help Hadoop developers and administrators perform such data integration, while a handful of vendors – among them Pivotal Initiative (formerly EMC Greenplum, Cetas, et al.) and Teradata Aster – offer Big Data appliances that bundle Hadoop and analytic databases with preconfigured hardware for quick deployment with minimal tuning required. Others, namely a start-up called Hadapt, offer a single platform that provides both SQL and Hadoop/MapReduce processing on the same cluster. Cloudera is also pursuing this strategy with its Impala project, as is Hortonworks via the open source Stinger Initiative.

In order to fully take advantage of Big Data, however, enterprises must take further steps. Namely, they must employ advanced analytics techniques on the processed data to reveal meaningful insights. Data scientists perform this sophisticated work in one of a handful of languages or approaches, including SAS and R. The results of this analysis can then be visualized with tools like Tableau or operationalized via Big Data applications, either homegrown or off-the-shelf. Other vendors, including Platfora and Datameer, are developing business intelligence-style applications to allow non-power users to interact with Big Data directly.

The bottom line is that Big Data approaches such as Hadoop, NoSQL and massively parallel analytic databases are complementary not just to each other but to most existing data management technology deployed in large enterprises. Wikibon does not suggest enterprise CIOs undertake wholesale “rip-and-replacement” of existing enterprise data warehouses, data integration and other data management technologies for Big Data approaches.

Rather, Wikibon believes CIOs must think like portfolio managers, re-weighing priorities and laying the groundwork toward innovation and growth while taking necessary steps to mitigate risk factors. Replace existing data management technology with Big Data approaches only where it makes business sense and develop plans to integrate Big Data with remaining legacy data management infrastructure as seamlessly as possible. The end goal should be the transformation to a modern data architecture (see figure 3 and Flash and Hyperscale Changing Database and System Design Forever.)

Big Data Vendor Landscape

The Big Data vendor landscape is developing rapidly. For a complete breakdown of the Big Data market, including market size (current and five-year forecast through 2017) and vendor-by-vendor Big Data revenue figures, see Big Data Vendor Revenue and Market Forecast 2012-2017.

Big Data: Real-World Use Cases

Part of what makes Hadoop and other Big Data technologies and approaches so compelling is that they allow enterprises to find answers to questions they didn’t even know to ask. This can result in insights that lead to new product ideas or help identify ways to improve operational efficiencies. Still, there are a number of already identified use-cases for Big Data, both for Web giants like Google, Facebook and LinkedIn and for the more traditional enterprise. They include:

Recommendation Engine: Web properties and online retailers use Hadoop to match and recommend users to one another or to products and services based on analysis of user profile and behavioral data. LinkedIn uses this approach to power its “People You May Know” feature, while Amazon uses it to suggest related products for purchase to online consumers.

Sentiment Analysis: Used in conjunction with Hadoop, advanced text analytics tools analyze the unstructured text of social media and social networking posts, including Tweets and Facebook posts, to determine the user sentiment related to particular companies, brands or products. Analysis can focus on macro-level sentiment down to individual user sentiment.

Risk Modeling: Financial firms, banks and others use Hadoop and Next Generation Data Warehouses to analyze large volumes of transactional data to determine risk and exposure of financial assets, to prepare for potential “what-if” scenarios based on simulated market behavior, and to score potential clients for risk.

Fraud Detection: Financial companies, retailers and others use Big Data techniques to combine customer behavior, historical and transactional data to detect fraudulent activity. Credit card companies, for example, use Big Data technologies to identify transactional behavior that indicates a high likelihood of a stolen card.

Marketing Campaign Analysis: Marketing departments across industries have long used technology to monitor and determine the effectiveness of marketing campaigns. Big Data allows marketing teams to incorporate higher volumes of increasingly granular data, like click-stream data and call detail records, to increase the accuracy of analysis.

Customer Churn Analysis: Enterprises use Hadoop and Big Data technologies to analyze customer behavior data to identify patterns that indicate which customers are most likely to leave for a competing vendor or service. Action can then be taken to save the most profitable of these customers.

Social Graph Analysis: In conjunction with Hadoop and often Next Generation Data Warehousing, social networking data is mined to determine which customers pose the most influence over others inside social networks. This helps enterprises determine their “most important” customers, who are not always those that buy the most products or spend the most but those that tend to influence the buying behavior of others the most.

Customer Experience Analytics: Consumer-facing enterprises use Hadoop and related Big Data technologies to integrate data from previously siloed customer interaction channels such as call centers, online chat, Twitter, etc., to gain a complete view of the customer experience. This enables enterprises to understand the impact one customer interaction channel has on another in order to optimize the entire customer lifecycle experience.

Network Monitoring: Hadoop and other Big Data technologies are used to ingest, analyze and display data collected from servers, storage devices and other IT hardware to allow administrators to monitor network activity and diagnose bottlenecks and other issues. This type of analysis can also be applied to transportation networks to improve fuel efficiency and to other kinds of networks.

Research And Development: Enterprises, such as pharmaceutical manufacturers, use Hadoop to comb through enormous volumes of text-based research and other historical data to assist in the development of new products.

These are, of course, just a sampling of Big Data use cases. In fact, the most compelling use case at any given enterprise may be as yet undiscovered. Such is the promise of Big Data.

The Big Data Skills Gap

One of the most pressing barriers to adoption for Big Data in the enterprise is the lack of skills in Hadoop administration and in Big Data analytics, or Data Science. In order for Big Data to truly gain mainstream adoption and achieve its full potential, it is critical that this skills gap be overcome. Doing so requires a two-front attack on the problem:

First, the open source community and commercial Big Data vendors must develop easy-to-use Big Data administration and analytic tools and technologies to lower the barrier to entry for traditional IT and business intelligence professionals. These tools and technologies must abstract away as much complexity from underlying data processing frameworks as possible via a combination of graphical user interfaces, wizard-like installation capabilities and automation of routine tasks.

Second, the community must develop more educational resources to train both existing IT & business intelligence professionals and high school & college students to become the Big Data practitioners of the future that we need. This is particularly important on the analytics side of the equation.

According to McKinsey & Company, the United States alone is likely to “face a shortage of 140,000 to 190,000 people with deep analytical skills as well as 1.5 million managers and analysts with the know-how to use the analysis of big data to make effective decisions” by 2018. This shortage is in part because of the discipline of data science itself, which encompasses a blend of functional areas.

As mentioned earlier in this report, a number of Big Data vendors have begun offering training courses in Big Data. IT practitioners have an excellent opportunity to take advantage of these training and education initiatives to hone their data skills and identify new career paths within their organizations. Likewise, a handful of university-level programs in Big Data and advanced analytics have emerged, with programs sprouting up at USC, N.C. State, NYU and elsewhere. But more programs are needed.

Only by fighting the Hadoop skills gap war on two fronts – better tools & technologies and better education & training – will the Big Data skills gap be overcome.

Watch this video of Wikibon analysts discussing the skills gap and how some organizations are providing services to address the issue.

Big Data: Next Steps for Enterprises and Vendors

Big Data holds tremendous potential for both enterprises and the vendors that service them, but action must be taken first. Wikibon recommends the following.

Action Item: Enterprises across all industries should evaluate current and potential Big Data use cases and engage the Big Data community to understand the latest technological developments. Work with the community, like-minded organizations and vendors to identify areas Big Data can provide business value. Next, consider the level of Big Data skills within your enterprise to determine if you are in a position to begin experimenting with Big Data approaches like Hadoop. If so, engage both IT and the business to develop a plan to integrate Big Data tools, technology and approaches into your existing IT infrastructure. Most importantly, begin to cultivate a data-driven culture among workers at all levels and encourage data experimentation. When this foundation has been laid, apply Big Data technologies and techniques today where they deliver the most business value and continuously re-evaluate new areas ripe for Big Data approaches.

IT vendors should help enterprises identify the most profitable and practical Big Data use cases and develop products and services that make Big Data technologies easier to deploy, manage and use. Embrace an open, rather than proprietary, approach to give customers the flexibility needed to experiment with new Big Data technologies and tools. Likewise, begin to build out Big Data services practices from within and partner with external service providers to help enterprises develop the skills needed to deploy and manage Big Data approaches like Hadoop. Most importantly, listen and respond to customer feedback as Big Data deployments mature and grow.