We attended both NVIDIA GTC and Broadcom’s investor day this week, where the AI platform shift was on full display. In our view, GTC24 was the most important event in the history of the technology industry, surpassing Steve Jobs’ iPod and iPhone launches. The event was not the largest but, in our opinion, it was the most significant in terms of its reach, vision, ecosystem impact and broad-based recognition that the AI era will permanently change the world. Meanwhile, Broadcom’s first investor day underscored both the importance of the AI era and the highly differentiated strategies and paths that NVIDIA and Broadcom are each taking. We believe NVIDIA and Broadcom are currently the two best-positioned companies to capitalize on the AI wave and will each dominate their respective markets for the better part of a decade. But importantly, we see each of them as an enabler of a broader ecosystem that collectively will create more value than either firm will on its own.

In this Breaking Analysis, we share our perspectives on the state of AI and how NVIDIA and Broadcom are each leading the way with dramatically different but overlapping strategies that may eventually put them on a collision course.

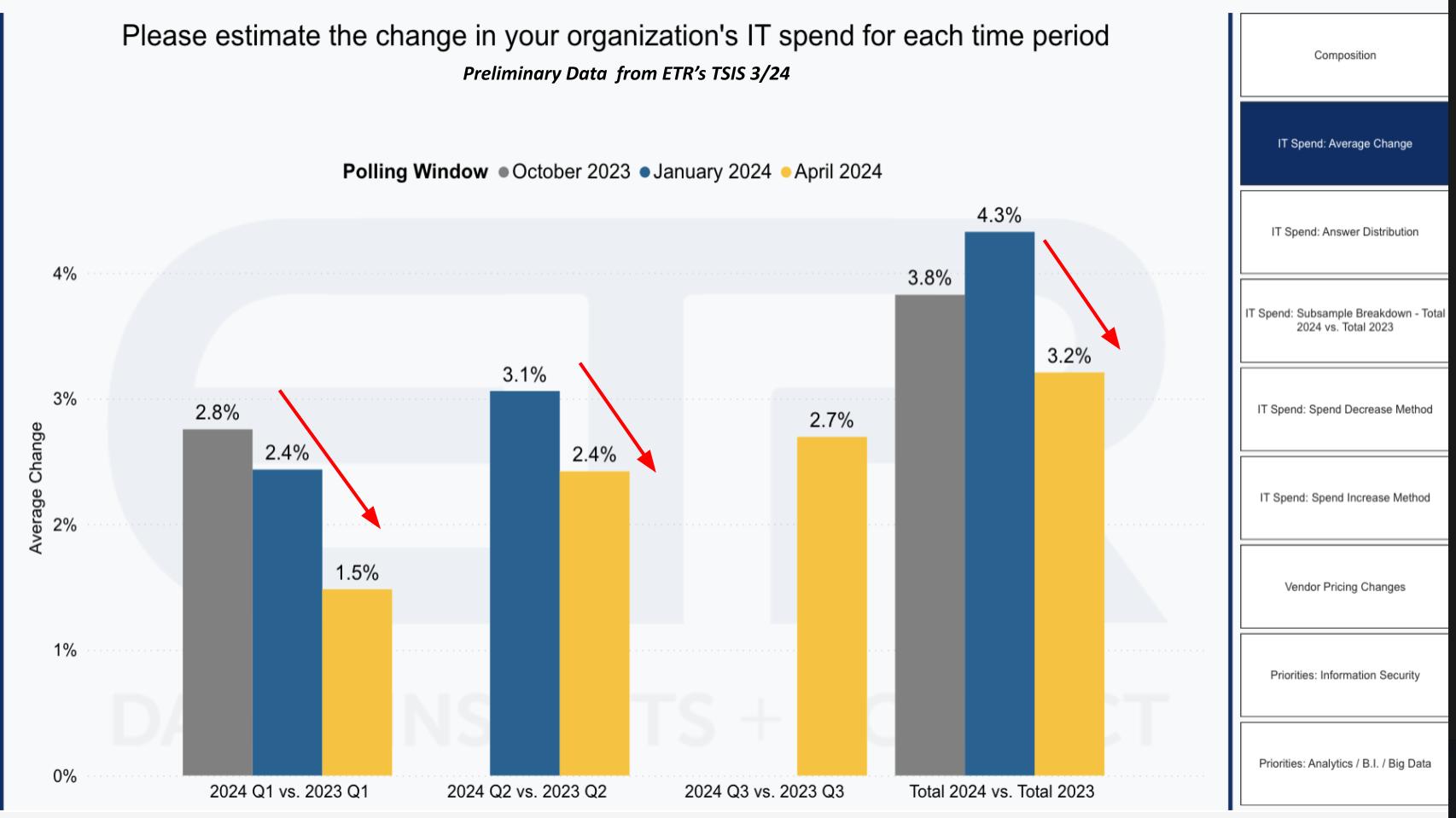

Despite AI Hype, the Macro is Softening

You’d never know it from all the AI froth, but the macro appears to be getting worse.

ETR shared the chart above earlier in the week with its private clients. It shows the expected IT spending growth rates from more than 1,500 IT decision makers (ITDMs) for three time periods: October 2023, January 2024 and the most recent survey in the field. Note the material drop in Q1 from 2.8% to 1.5% and a drop of more than 100 basis points in expectations for full-year 2024. As we reported earlier this year, the tech spending outlook is back-loaded toward the second half, and the latest data show it deteriorating further.

But the AI Trade is Broadening

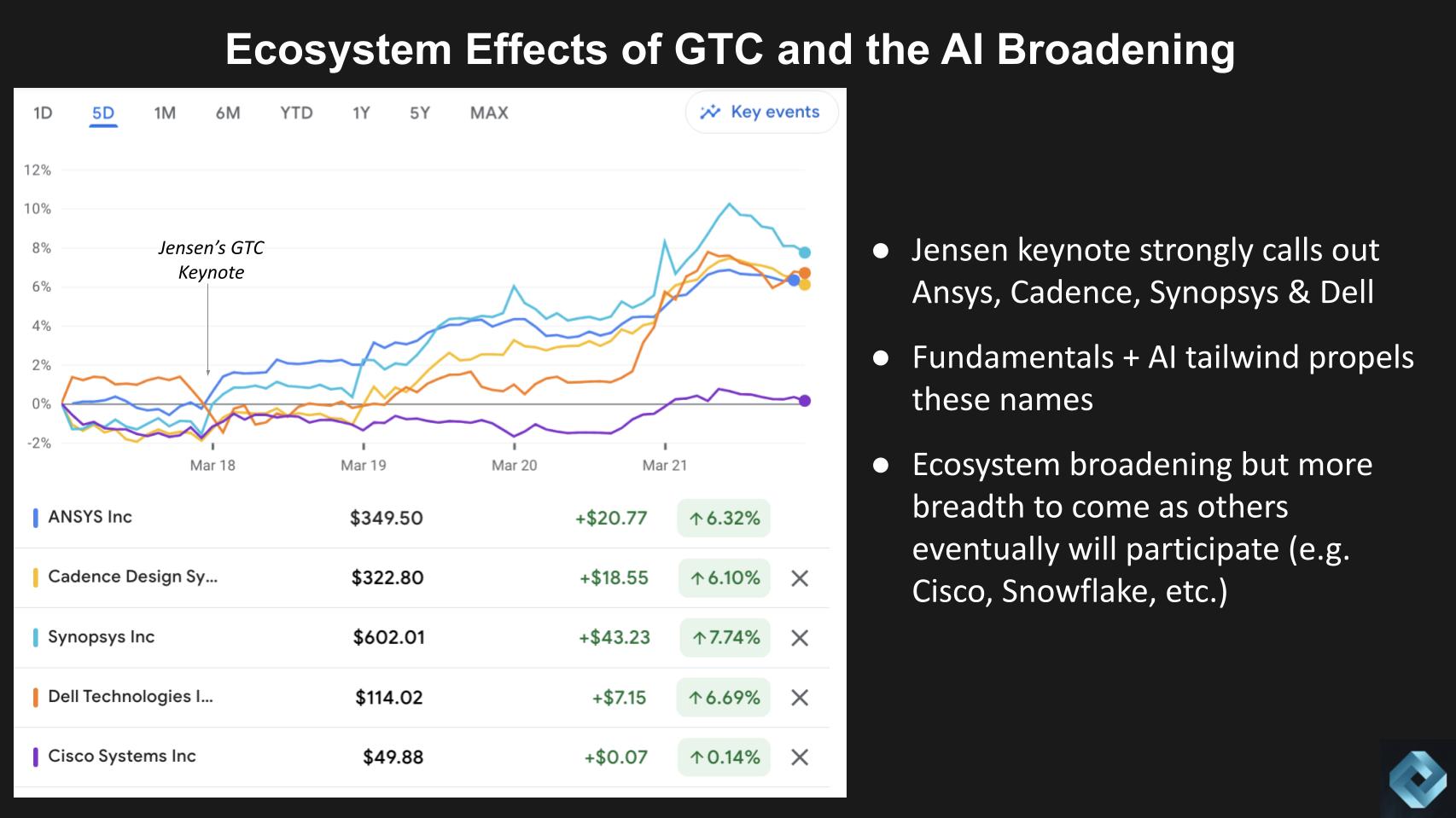

Despite the softer macro environment, we’re seeing a countervailing effect as investors move toward clear AI beneficiaries beyond the giants. Specifically, the strong performance of the market in 2023 was narrow, with just a few names powering the tech rally. While both NVIDIA and Broadcom were part of that momentum, along with Microsoft and some other names, 2024 is shaping up to have much broader participation in the AI tailwind. Firms like Ansys, Cadence, Synopsys, Dell, Micron, IBM, Supermicro, Pure Storage, VAST Data, ServiceNow, CrowdStrike, Arm, UiPath, Lam Research and many others are benefiting from the froth around GTC24.

Above, we show the recent stock movement following Jensen’s keynote at GTC24 for Ansys, Cadence, Synopsys and Dell, each of which got major shoutouts from Jensen Huang in his presentation. You can see they all moved up nicely, and we believe it’s from the combination of 1) the GTC momentum; and 2) good fundamentals, including demand outstripping supply for these firms. Ansys, Cadence and Synopsys are critical design software providers for silicon chips. In the case of Dell, the company’s supply chain leverage is putting it in a good position to secure GPUs. Its execution is throwing off cash that it’s returning to investors, and it is also benefiting from the AI tailwind. And there’s likely more to come from a new PC cycle that will take shape later this year.

We also show Cisco, a company that is not considered an AI leader despite a robust portfolio that includes many AI innovations. Cisco has silicon to power AI networking, AI in its own networking, AI in its collaboration software, data from its recently completed Splunk acquisition, and ThousandEyes to provide network intelligence data that can feed Splunk. But the macro is hurting Cisco right now, and the company has disappointed investors recently.

Snowflake (not shown above) is another company that in theory should be benefiting from AI because it houses so much critical analytic data. It has partnerships with the likes of NVIDIA, and it has acquired AI expertise in the form of Neeva. But investors were shaken by Frank Slootman stepping down as CEO, and the Street is waiting for Snowflake’s new leader, Sridhar Ramaswamy, to prove that Snowflake is still on track.

AI is everywhere, but it is still in the experimental phase within the enterprise. Our premise is that while the macro is still challenging, AI will be infused into all sectors and eventually be a rising tide for all areas of tech and beyond. At the moment, however, while the ROI of AI is clear for consumer Internet companies like Google and Meta, the business impact of enterprise AI has not been as evident. Until it shows up in the quarterly numbers, we expect CFOs to remain conservative with their budget allocations.

The point is that while the AI rally is broadening, it’s still not a tide that lifts all ships. At the same time, a broadening rally signals investor concern about valuations at the leading companies. Investors are making the case for putting money to work with other AI beneficiaries that could give them better returns in the near term. In our opinion, others, like the Cisco and Snowflake examples we shared, will eventually benefit as well. In our view, these are indicators that there’s still plenty of upside in this AI run. We understand it’s just a matter of time before the other AI winners become highly valued and the market gets toppy. But we’re not there yet, in our view.

NVIDIA GTC Marks an Industry Milestone

GTC was a remarkable moment for our industry. The event was held at the San Jose Convention Center, a venue that was filled to the max in 2019, the last time GTC was held as an in-person event. GTC24 was far too large for this venue. In fact, the keynote took place at the SAP Center sports arena, about a mile from the main event. Rumors circulated that Jensen wanted GTC to be held at the Sphere in Las Vegas, but it was booked. Perhaps next year, as GTC has clearly outgrown the coziness of San Jose.

The main venue was packed for days, with Monday seeing the largest attendance. Perhaps as many as 30,000 people attended over the course of the week, but what made GTC so important was its reach, not only within the technology community but beyond it, with many presenters from automotive, healthcare, financial services and virtually every other industry.

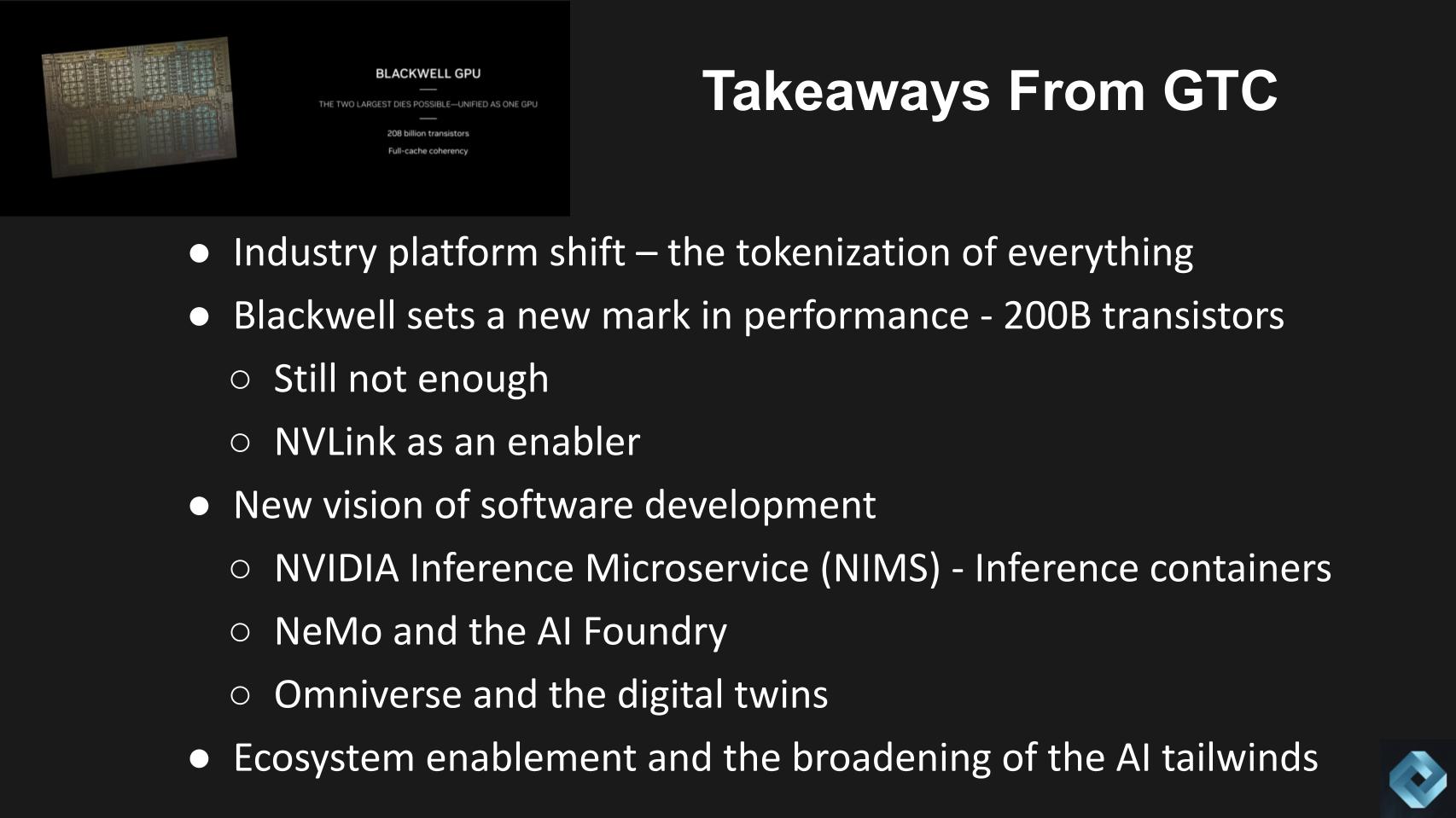

A New Industry Platform – The Tokenization of Everything

The big takeaways (and there are many we won’t touch on today) started with Jensen’s keynote and his vision for the industry: what he calls accelerated computing and the building of AI factories. The vision he put forth during the week was that a world of generated content is here. All of the images in his keynote, he said, were simulation, not animation, and the simulations were impressive.

He also put forth a vision of a token economy where the computer sees tokens and these tokens can be generalized. If you can generalize, he said, then you can talk to the AI and generate images, perform gene analysis, control robotic arms and more.

The World’s Biggest GPU

Jensen said that Blackwell, NVIDIA’s new chip named after mathematician David Blackwell, was built for this Gen AI moment. He described a reinforcement learning loop where training, learning and generation become part of the same process, and if it is done well and enough times, it becomes reality. He implied that this workflow would perform both inference and training. A critical enabler of Blackwell is NVLink, a high-speed interconnect that is proprietary to NVIDIA. He said Blackwell took three years, 25,000 people and $10B to create. The system is incredibly complex, with 600,000 parts and lots of software to handle switching, links, cables and all the plumbing. He also explained that NVIDIA is not a chip company. Rather, it builds entire systems and then disaggregates them to sell to customers. This no doubt caused some stress among systems vendors.

A New Software Development Paradigm

GTC24 is a developer conference first, even though that point sometimes gets lost in all the noise from the ecosystem. Jensen laid out a vision for developers, showing off NIMs (NVIDIA Inference Microservices), which are inference containers. He also highlighted NeMo (Neural Modules), which provides models for conversational AI and a platform for developing end-to-end generative AI using automated speech recognition, natural language processing (NLP) and retrieval augmented generation (RAG) at very high performance.

And he discussed the AI Foundry, which packages all this technology into systems and essentially changes the way data centers are built.

He also spent a lot of time showing off Omniverse, a platform of APIs, services and SDKs that allows developers to build digital representations of a business and its workflows using generative AI-enabled tools. Jensen talked about digital twins at length and had robots on stage with him. In a private meeting, Jensen told us that within 8 to 10 years, robots will be generally fully functional. And he said this will be done with “just a bunch of tokens.” Think tokenizing of movement. He said we have lots of data, and there’s a finite vocabulary describing what human movements like walking, sprinting, skipping, throwing and hugging look like. These movements can be simulated with AI, and very accurately, by tokenizing them and making them generative.

A key, he said, is being able to ground Omniverse in the laws of physics, and he described a cycle of imitate > learn > adapt > generalize > reinforce, all grounded in the laws of physics.

Ecosystem Enablement

The ecosystem was on full display at GTC24. Virtually every company with a play in AI was basking in the AI glow, hence the broadening of the AI tailwind we’re seeing post-GTC.

You left the event truly believing we saw proof of a new era emerging and coming into full swing. The days of Moore’s Law driving industry innovation are over. A new performance curve has emerged, and new styles of computing will dominate virtually all sectors for the next decade.

That was the strong feeling we came away with when assessing the impact of GTC 2024.

Meanwhile Broadcom Continues to Execute on a Differentiated AI Strategy

A different but compelling AI vision was put forth by Broadcom at its investor event.

Broadcom held its first-ever investor day at its facilities in San Jose on Wednesday morning, perfectly timed to capitalize on the GTC24 momentum and position the company for investors. It was attended by both buy-side and sell-side analysts, along with only a few industry analysts like ourselves. This was Charlie Kawwas’ show, designed to educate the investment community on Broadcom’s unique approach to the market. We learned a lot about the company’s strategy, philosophy, technology, execution ethos and engineering talent, and we saw demos of some of the most leading-edge silicon technology on the planet.

Broadcom has twenty-six divisions, or P&Ls, and seventeen of them are in the semiconductor group. The semiconductor group is a roughly $30B revenue business growing in the double digits. The group spends $3B annually on R&D.

Kawwas and his team took us through the history of how Broadcom came to be, with roots in Bell Labs, HP, LSI Logic, Avago, Broadcom and Brocade, and more recently software assets like CA, Symantec and, of course, VMware.

Broadcom’s Unconventional Strategy

Charlie Kawwas explained the company’s unique three-pronged strategy shown below.

Broadcom doesn’t chase hockey-stick markets to try to ride the steep part of an S-curve. Rather, it looks for durable markets with sustainable franchises that have a runway of a decade or more. In those markets, it goes for technology leadership and executes to a firm plan. With AI, Broadcom just happened to catch a big wave and is exceedingly well positioned.

So this is a nice PowerPoint, but what impressed us is that the five presenters who followed Charlie Kawwas each demonstrated: 1) a deep understanding of their markets and the history of their businesses; 2) technology leadership within their division, showing us when they entered the market, how they were first with innovations and their technology roadmaps; and 3) evidence of execution.

The proof points were many: AI networks presented by Ram Velaga, server interconnects by Jas Tremblay, optical interconnects by Near Margalit, foundational technologies like SerDes that are shared across the P&Ls by Vijay Janapaty, and custom AI accelerators by Frank Ostojic.

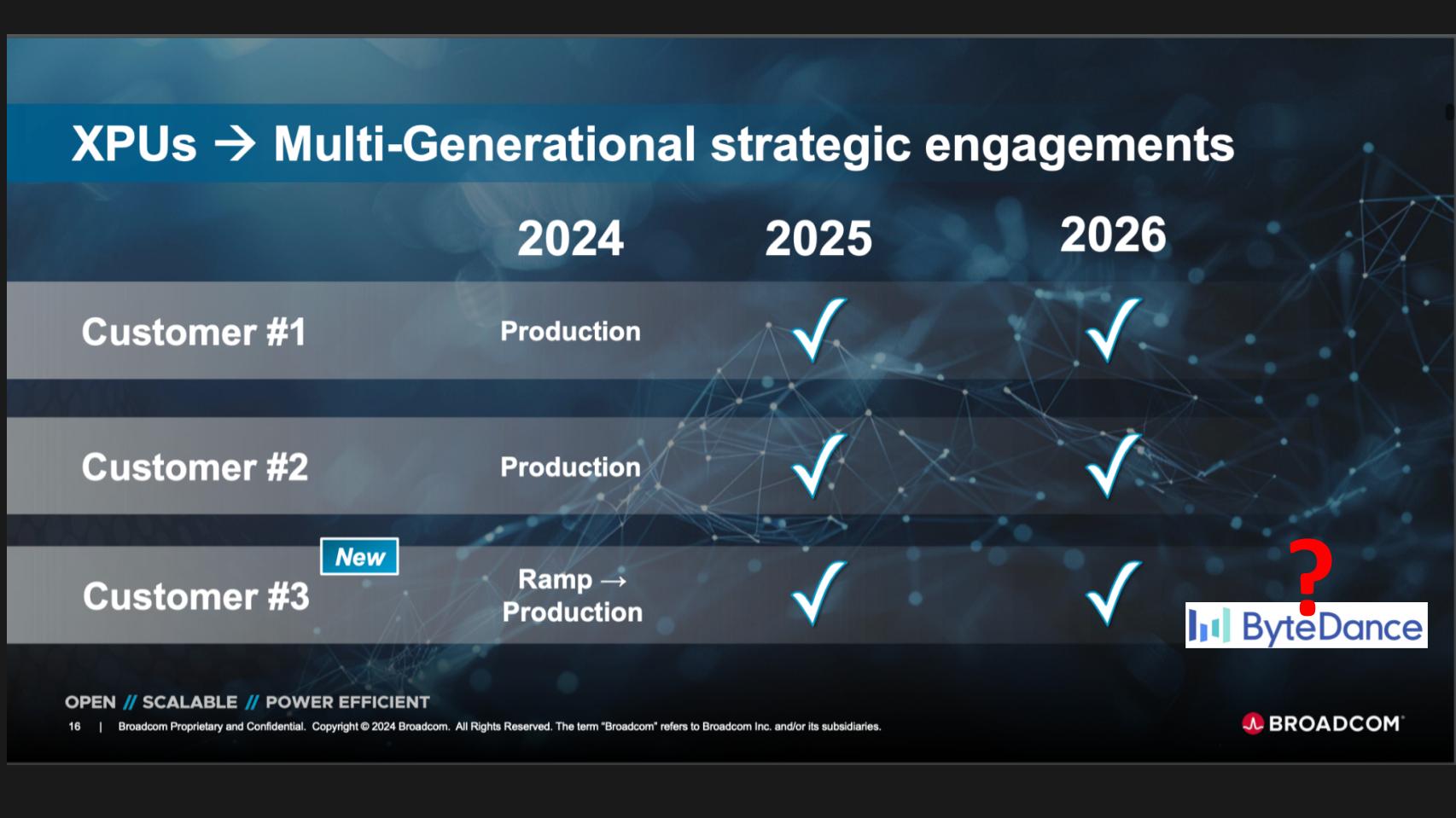

A New Custom Silicon Customer – Is #3 ByteDance?

The biggest news from the event, and part of the reason the stock popped after Investor Day (in addition to the credibility of the presentations and the GTC24 momentum), was the announcement of a third custom silicon customer.

Customer #1 is Google, in our opinion. Google has been a custom silicon customer of Broadcom for ten years. Custom customer #2, we believe, is Meta, a customer for the past four years and one where Hock Tan sits on the board. We were all speculating about which customer is #3. We think it’s ByteDance, but this wasn’t disclosed. Charlie Kawwas was asked in the Q&A whether Broadcom’s technologies are restricted from sale to China, and his answer was that there are currently no restrictions on selling its technology. So ByteDance, the owner of TikTok, can’t be ruled out. We also know that ByteDance is an advanced Broadcom customer. It has a large network and has embraced Broadcom’s on-chip neural-network inference engine, giving it programmability and flexibility. It is also a large consumer-oriented social network that can get fast ROI from using custom rather than merchant silicon.

Two other key takeaways from this discussion further strengthened our belief that customer #3 is ByteDance, and they further explain the value of custom silicon.

Consumer Markets Drive Innovation Ahead of Enterprise

- First, the business case for consumer AI at companies like Google and Meta is very strong. The bigger the AI clusters they can build, the better their AI, the better they can serve content to customers and the more ad revenue they generate. ByteDance is a leader in AI with perhaps the best algorithm, and it would make sense that custom silicon would further support its mission.

- Second, the consumer Internet giants have very specific workloads, and if you can customize silicon for those workloads, you can significantly cut costs and power. Power is the #1 problem at scale, and these Internet giants are scaling. So if custom silicon can save 80 watts per chip and these Internet companies use hundreds of thousands or millions of chips…well, you do the math.
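The “do the math” invitation can be made concrete with a short Python sketch. It takes the 80-watt-per-chip savings from the text and assumes, for illustration only, that every chip would otherwise draw that extra power around the clock, all year. It ignores cooling and other facility overhead, which would make the savings at the meter larger still. The fleet sizes of 100,000 and 1,000,000 chips simply bracket the range mentioned above; the function name `fleet_savings` is ours.

```python
# Back-of-the-envelope power savings from custom silicon at fleet scale.
# Assumption (illustrative): chips run 24/7 and the 80 W saving applies continuously;
# cooling/facility overhead (PUE) is deliberately left out.
WATTS_SAVED_PER_CHIP = 80      # per-chip saving cited in the text
HOURS_PER_YEAR = 24 * 365      # 8,760 hours, ignoring leap years


def fleet_savings(chips: int, watts_per_chip: float = WATTS_SAVED_PER_CHIP) -> tuple[float, float]:
    """Return (megawatts saved continuously, megawatt-hours saved per year)."""
    megawatts = chips * watts_per_chip / 1_000_000
    mwh_per_year = megawatts * HOURS_PER_YEAR
    return megawatts, mwh_per_year


if __name__ == "__main__":
    for chips in (100_000, 1_000_000):
        mw, mwh = fleet_savings(chips)
        print(f"{chips:>9,} chips: {mw:,.0f} MW, {mwh:,.0f} MWh/year")
```

Under these assumptions, 100,000 chips save about 8 MW of continuous load (roughly 70,000 MWh per year), and a million chips save about 80 MW (roughly 700,000 MWh per year).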

For these reasons, we think custom customer #3 is ByteDance. Could it be Amazon, Apple or Tesla? Maybe…but we think ByteDance makes the most sense. This matters because these are durable customers with long life cycles, and the business is highly differentiated and very difficult to displace.

These custom customers are AI-driven and will contribute to Broadcom’s AI revenue. The company raised its AI contribution forecast, saying that 35% of its semiconductor revenue will come from AI in 2024. That’s 10 percentage points higher than its previous forecast and up from less than 5% in 2021.
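As a rough, back-of-the-envelope check, the figures in this piece can be combined. Reading the “10%” increase as ten percentage points implies a prior forecast of about 25%. Applying the 35% share to the roughly $30B semiconductor revenue figure cited earlier, under our own assumption that this base holds for 2024 (Broadcom did not present the numbers this way), implies AI revenue on the order of $10B:

```latex
\begin{aligned}
\text{prior AI share} &\approx 35\% - 10\ \text{pp} = 25\% \\
\text{implied 2024 AI revenue} &\approx 0.35 \times \$30\text{B} \approx \$10.5\text{B}
\end{aligned}
```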

In 2024, 35% of Broadcom’s silicon revenue will come from AI workloads.

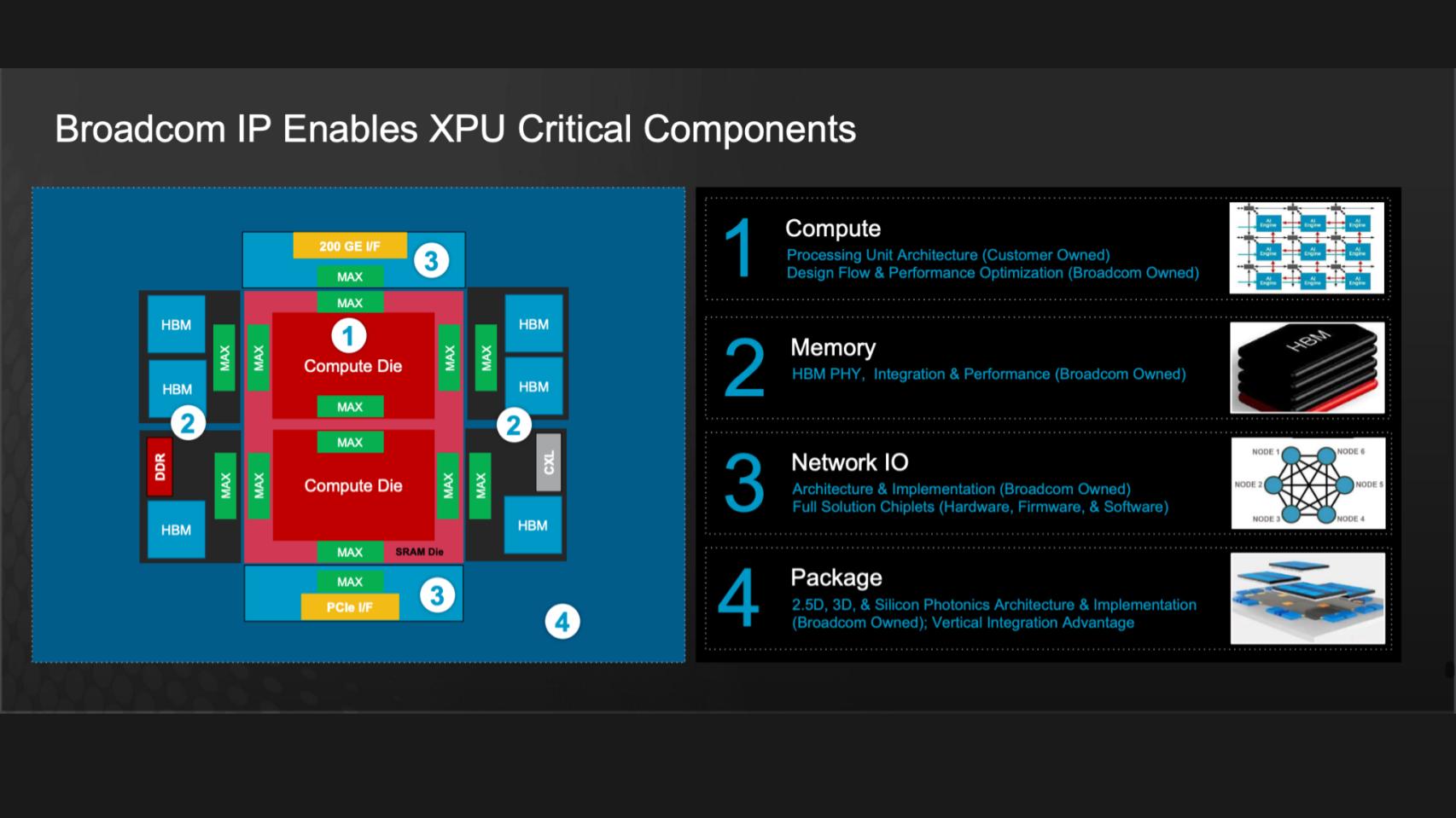

We Don’t Make GPUs…We Make the Stuff That Makes XPUs & HBMs Work

There’s so much to unpack from the Broadcom meeting. We have almost 90 slides of content and multiple pages of notes from the event. But one other key point we want to leave you with is shown here.

Broadcom’s premise is that we’re moving from a world that is CPU-centric to one that is connectivity-centric. The emergence of alternative processors beyond the CPU, such as the GPU, NPU and LPU (the XPUs, if you will), requires high-speed connections between them, and that is Broadcom’s specialty. The power of its business model is shown above. This is an example of the type of system needed to support AI workloads. Broadcom technologies are shown on the right, and the numbers show where they fit in the packaging. The company is essentially surrounding the AI infrastructure.

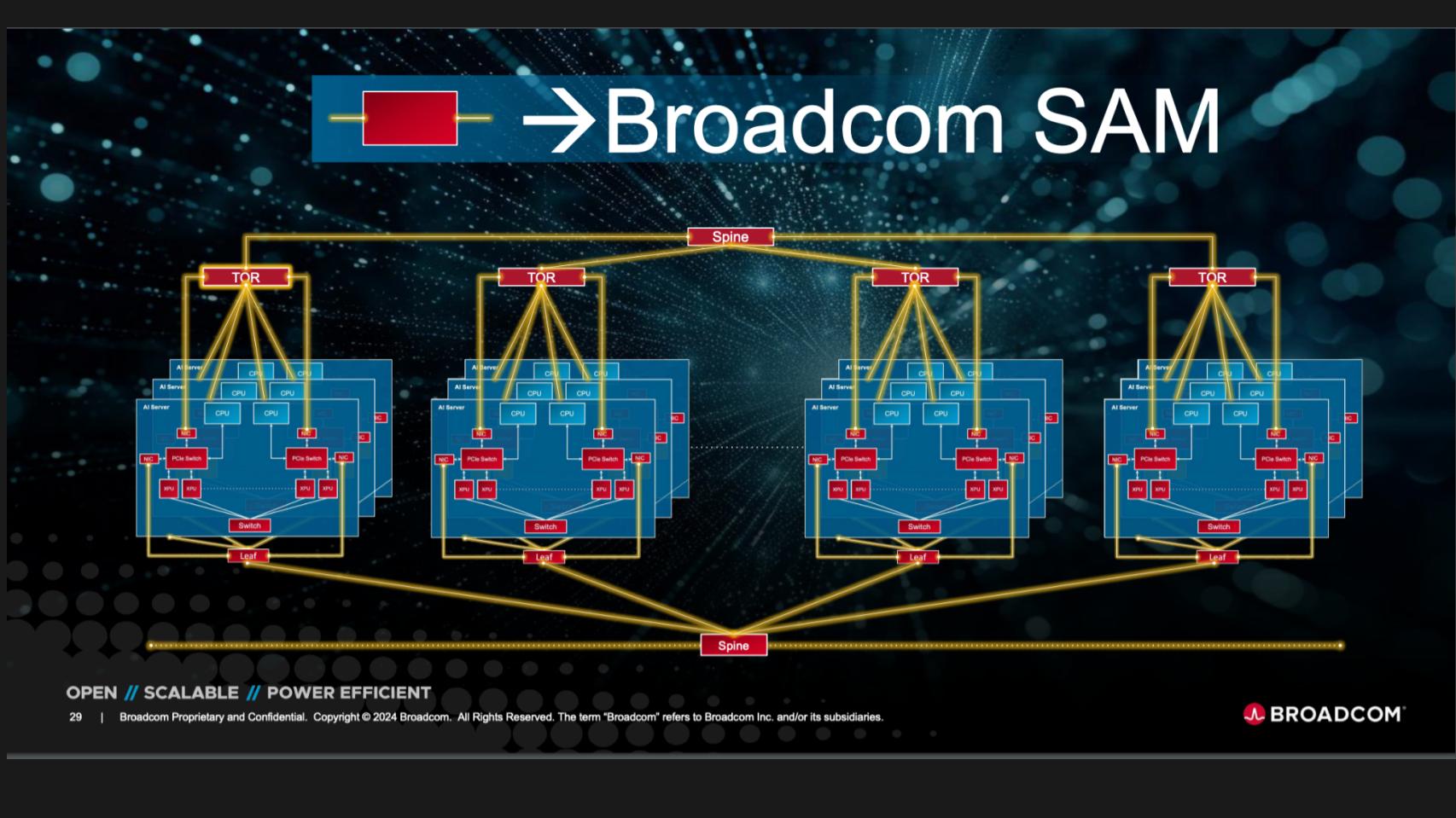

Imagine Broadcom Tech at Scale

Above is a different picture of this dynamic, with a configuration that scales up and out to many clusters. Broadcom’s technology is shown in red, along with the yellow optics that connect the various components of the system: spine and top-of-rack switching, NICs, PCIe innovation and more. Broadcom has a massive TAM beyond the GPU and CPU, in a market that is very difficult for others to enter.
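To give a feel for why connectivity grows so quickly as clusters scale out, here is a minimal Python sketch of textbook sizing for a non-blocking two-tier leaf-spine (folded Clos) fabric built from identical switches. This is a generic topology exercise, not Broadcom’s reference design: the switch port count (`radix`) is a hypothetical input, and the function name `leaf_spine` is ours. It counts the switches and leaf-to-spine links, each of which needs optics or cabling, for the endpoints (for example, NICs) the fabric can attach.

```python
# Textbook sizing of a non-blocking two-tier leaf-spine fabric (illustrative only).
# radix: hypothetical number of ports per switch, chosen for illustration.


def leaf_spine(radix: int) -> dict[str, int]:
    """Size a two-tier leaf-spine fabric built from identical radix-port switches.

    Each leaf splits its ports evenly: half face endpoints, half face spines.
    """
    if radix < 2 or radix % 2 != 0:
        raise ValueError("radix must be an even integer >= 2")
    half = radix // 2
    spines = half                    # each leaf sends one uplink to every spine
    leaves = radix                   # every spine port connects to a distinct leaf
    endpoints = leaves * half        # downlinks per leaf times number of leaves
    fabric_links = leaves * spines   # leaf-to-spine links, each needing optics or cables
    return {
        "leaves": leaves,
        "spines": spines,
        "switches": leaves + spines,
        "endpoints": endpoints,
        "fabric_links": fabric_links,
    }


if __name__ == "__main__":
    for radix in (32, 64):
        print(radix, leaf_spine(radix))
```

With 64-port switches, for example, this sketch needs 96 switches and 2,048 leaf-to-spine links to attach 2,048 endpoints, before a third tier is added to scale further out.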

Another key takeaway appeared on all of Broadcom’s slides: Charlie Kawwas’ mantra, “Open, Scalable, Power Efficient.”

Broadcom is focused on open technology standards. Two examples are PCIe and Ethernet. Jas Tremblay shared a nuanced but interesting tidbit about Blackwell, NVIDIA’s giant GPU: its southbound connectivity is proprietary NVLink, but its northbound connection is PCIe. NVIDIA’s highly proprietary architecture is built around InfiniBand from the Mellanox acquisition. But even NVIDIA must play in the open standards world.

The southbound connectivity of Blackwell is proprietary NVLink but the northbound connection is PCIe. -Jas Tremblay, GM Data Center Solutions Group, Broadcom

NVIDIA is Broadcom’s Fastest Growing Customer

Despite the tension between NVIDIA’s proprietary approach to network connectivity and Broadcom’s embrace of Ethernet, Kawwas highlighted that Broadcom has a great relationship with NVIDIA and that NVIDIA is its fastest-growing customer.

So you can begin to see how compelling Broadcom’s business model is: it sells to consumer Internet companies, device manufacturers like Apple, hyperscalers, enterprise players like Dell and HPE, and the most important company in AI right now, NVIDIA. In some ways, that makes Broadcom as important to the growth of the industry as NVIDIA.

Another clear takeaway is that Broadcom does the really hard silicon work that others can’t do. The analogy given was skiing: many folks can ski the green trails, but the double blacks and the extreme terrain marked with warning signs and skeletons are where Broadcom does really well, and that creates a huge barrier to entry.

There’s so much more we could talk about, and we will share more over the coming weeks and months as we digest this material, especially on the InfiniBand vs. Ethernet debate, which was fascinating. Jensen said Ethernet is essentially useless for AI, while Broadcom countered with many proof points of AI leaders like Meta, Google and others adopting Ethernet.

The AI Outlook

We’ll close with the following key points:

- The AI tailwind is broadening and is especially evident for those firms seeing unprecedented AI demand.

- Other firms are more susceptible to the macro right now and will have to prove their AI innovations and execution to participate in the updraft.

- NVIDIA and Broadcom have radically different strategies but both have sustainable moats with long runways.

- There’s a lot of discussion about training vs. inference and how NVIDIA will fare as costs come down. Broadcom will 100% participate in both.

- Consumer AI ROI is clear. Consumer markets always lead and eventually spill into the enterprise. Enterprise AI ROI must become tangible for the macro headwinds to subside.

Will 2024 be the year of AI ROI in the enterprise, the way it has been for years in consumer Internet markets like search and social? We think enterprises may start to see ROI on their AI investments in the second half of this year, but 80% of organizations are still firmly in the experimentation phase. It’s more likely that AI ROI is a 2025 story, when investments throw off enough value to be reinvested in new AI projects that will ideally drive productivity growth and fulfill the promise of AI.

For now, keep experimenting, find those high-value use cases and stay ahead of the competition in your industry.