Here’s an overview of our conversation about data loss prevention tools and the role they play in protecting against AI data exfiltration.

Data Loss Prevention Tools and the Role They Play

As we know, generative AI is a fast-moving, disruptive trend. While generative AI is without question driving productivity gains, it’s also creating new security risks and is being used for bad as much as for good. The integration of AI in cybersecurity presents both opportunities and challenges, making it crucial for organizations to adapt and protect themselves, including their corporate networks, mobile apps, and beyond. That’s where our focus on data loss prevention tools today comes in.

Data loss prevention is a set of tools and procedures that forms part of an organization’s overall security strategy and operations. It detects and prevents the loss, leakage, or misuse of data through unauthorized use, data breaches, and exfiltration. Let’s drill down a little further: data loss prevention solutions can be appliances, software, cloud services, or hybrid solutions, all designed to provide the data management and data supervision that help organizations prevent non-compliant data sharing.

Categories of Data Loss Prevention Solutions

Data loss prevention tools are designed to protect data at rest, data in use, and data in motion. Many solutions can also understand the content they are protecting rather than relying simply on keywords, a capability we call “context awareness.” There are several categories of data loss prevention (DLP) solutions, which include:

- Full Data Loss Prevention: Full DLP solutions are designed to protect data at rest, in use, and in motion. These solutions are context-aware: they look at keyword matches, metadata, the role of the employee in the organization, who is requesting access to the data, and other information to decide how sensitive the content is and whether to grant or deny an access request.

- Channel Data Loss Prevention: Channel data loss prevention solutions are generally specific to one kind of data, most often data in motion and over a particular channel, for instance, email. There might be some content awareness here, but in most cases, these solutions rely on keyword blocking.

- “Lite” Data Loss Prevention: Some solutions are called “DLP Lite” and are essentially add-ons to other enterprise solutions. These are sometimes context-aware, but that is not always the case.
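
To make the difference between keyword blocking and context awareness concrete, here is a minimal sketch in Python. It is an illustration only, not how any particular product works: the keyword list, the role table, and the names `AccessRequest`, `keyword_only_decision`, and `context_aware_decision` are all hypothetical.

```python
from dataclasses import dataclass, field

# Hypothetical keyword list and role-to-department table, for illustration only.
BLOCKED_KEYWORDS = {"confidential", "salary"}
ROLE_CLEARANCE = {"hr_manager": {"hr"}, "engineer": {"engineering"}}


@dataclass
class AccessRequest:
    user_role: str  # role of the employee requesting access
    text: str  # content of the document being requested
    metadata: dict = field(default_factory=dict)  # e.g. {"department": "hr"}


def keyword_only_decision(request: AccessRequest) -> bool:
    """Keyword blocking: deny whenever a blocked keyword appears."""
    text = request.text.lower()
    return not any(keyword in text for keyword in BLOCKED_KEYWORDS)


def context_aware_decision(request: AccessRequest) -> bool:
    """Context-aware check: weigh keywords together with metadata and role."""
    text = request.text.lower()
    looks_sensitive = any(keyword in text for keyword in BLOCKED_KEYWORDS)
    if not looks_sensitive:
        return True
    # Sensitive content is released only to roles cleared for its department.
    department = request.metadata.get("department")
    allowed_departments = ROLE_CLEARANCE.get(request.user_role, set())
    return department in allowed_departments


hr_request = AccessRequest("hr_manager", "Confidential salary review", {"department": "hr"})
eng_request = AccessRequest("engineer", "Confidential salary review", {"department": "hr"})
```

In this sketch the keyword-only check denies both requests, while the context-aware check grants the HR manager access and still denies the engineer, because it also considers who is asking and where the document came from.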

With the Rise of AI, Data Loss Prevention Finds a New Purpose: Protecting Against AI Data Exfiltration

One of the cybersecurity challenges associated with generative AI is the potential for attackers to use it for multivector social engineering attacks and for creating clean malware code. Attackers are using generative AI to build language models that can launch targeted voice, email, and text attacks, which are difficult to protect against because they appear legitimate. Generative AI is also being used to create clean malware code, which makes it challenging to identify the actor behind an attack. Together, these trends pose a significant challenge for cybersecurity professionals who must defend against sophisticated attacks and identify potential threats.

The good news is that we are seeing security vendors step up with solutions designed to protect against AI data exfiltration, which is, quite simply, data theft: the intentional, unauthorized, covert transfer of data from a computer or other device. This theft can be carried out manually or automated through malware. Businesses of all kinds are targets for cyber attackers, and data exfiltration attacks can be incredibly damaging to a business or government entity. Protecting against data exfiltration safeguards an organization’s intellectual property and helps companies adhere to industry regulatory compliance standards.

The Rapid Global Adoption of AI Brings New Challenges for Organizations to Consider

Common data exfiltration techniques and attack vectors include many of the things we’ve covered here before: social engineering attacks (phishing, smishing, vishing) and the exploitation of vulnerabilities (like unpatched endpoints) or other security flaws or openings.

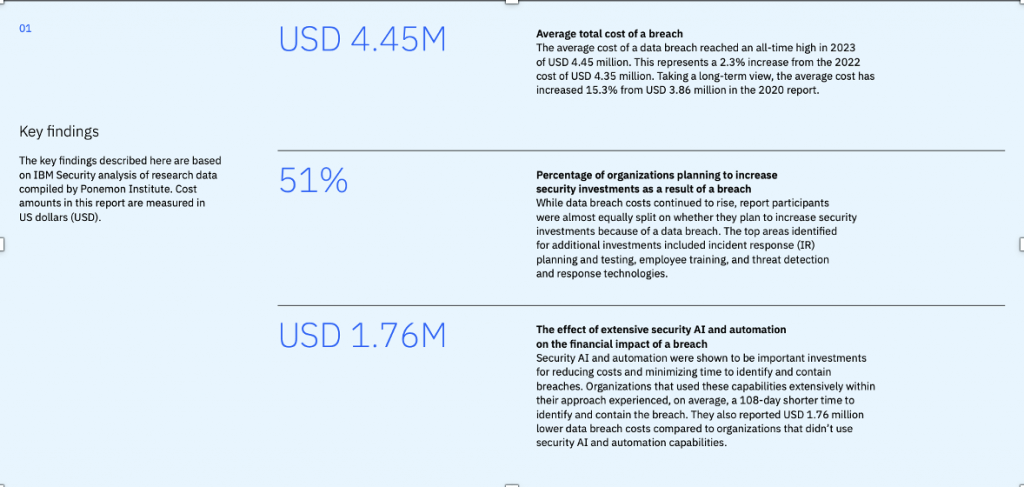

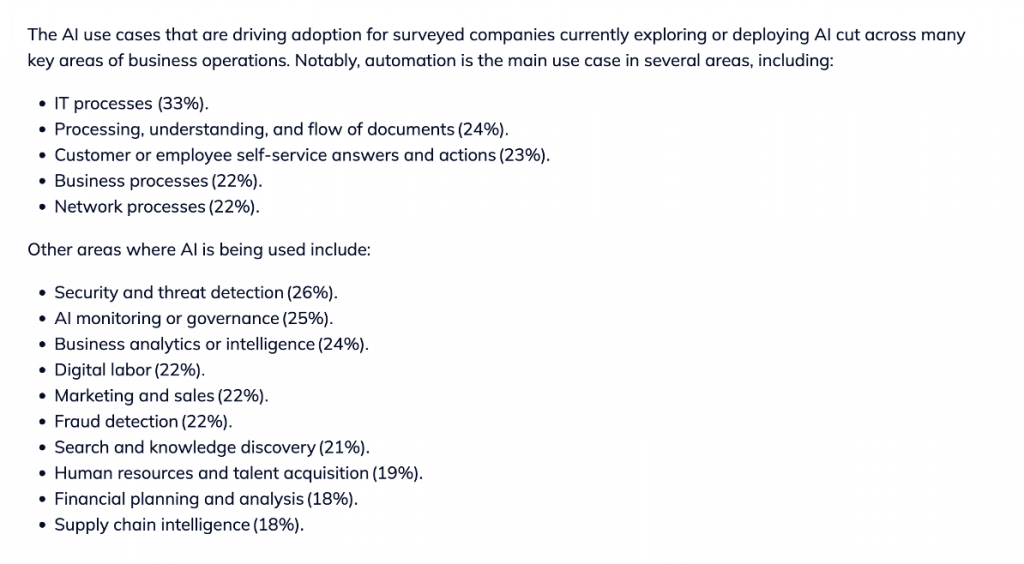

As we think about data exfiltration and the rise in the global adoption of AI, organizations have some new challenges to consider. In fact, one of the highlights from the IBM Global AI Adoption Index 2023 was that in addition to transparency concerns, data privacy and trust were some of the biggest inhibitors of generative AI growth in the enterprise. Here are some data points from the IBM study that indicate how important security AI and automation are to protecting against a data breach.

Image source: IBM Global AI Adoption Index 2023

A few other stats from the IBM study include good news on the security and threat detection front, as organizations using AI for threat detection, monitoring, and governance are at a solid 25% and growing.

Image source: IBM Global AI Adoption Index 2023

O’Reilly’s 2023 report, Generative AI in the Enterprise, showed that two-thirds of enterprises surveyed report that they are using generative AI, and many use five or more apps. It’s believed that at least one in five of those apps has extensive data permissions. Corporate IT teams are understandably concerned about proprietary corporate data and intellectual property leaking into the LLMs that are part of generative AI platforms, and with good reason.

Speaking of LLMs, public AI platforms like ChatGPT are only part of the problem. An equally pressing, if not bigger, concern is corporate-owned or corporate-developed LLMs, which contain treasure troves of sensitive data that certainly shouldn’t leave the organization and that also shouldn’t be widely accessible throughout the organization. That is why segmenting that data, restricting it, and permissioning it are key components of data security.

There are many ways to protect sensitive data, and data loss prevention is one of the tools in that arsenal. The goal is to align corporate policy around AI utilization with corporate governance around AI data exfiltration. Data loss prevention solutions can help clients classify and filter documents and can also help restrict outbound data from leaving SaaS applications.
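
As a rough illustration of what “classify and filter” can mean for outbound data, the sketch below labels a piece of text and redacts matches before it would be sent to an AI tool. It is a simplified example under stated assumptions: the two regular expressions and the function names `classify` and `redact` are hypothetical, and real DLP products rely on far richer detection than a pair of patterns.

```python
import re

# Illustrative patterns only; these are assumptions, not any vendor's rules.
PATTERNS = {
    "email": re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}"),
    "us_ssn": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
}


def classify(text: str) -> set[str]:
    """Return the labels of every sensitive pattern found in the text."""
    return {label for label, pattern in PATTERNS.items() if pattern.search(text)}


def redact(text: str) -> str:
    """Replace each sensitive match with a placeholder before the text leaves."""
    for label, pattern in PATTERNS.items():
        text = pattern.sub(f"[{label.upper()} REDACTED]", text)
    return text


prompt = "Contact jane@example.com, SSN 123-45-6789"
labels = classify(prompt)
safe_prompt = redact(prompt)
```

Here `labels` would contain both `email` and `us_ssn`, and `safe_prompt` carries placeholders in place of the original values. A policy could then decide whether to send the redacted text, warn the user, or block the request entirely.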

The good news: with the speedy rise in interest in and adoption of AI in the enterprise, security vendors have been listening and are moving quickly to retrofit their existing tool sets or create new ones designed to protect sensitive data from AI.

Security Vendors Are Rising to the Occasion

Security vendors are coming to the table with solutions designed to address these challenges. Here’s a look at just a few we are watching:

Skyhigh Security added data loss prevention tools to its CASB solution.

Cyberhaven automatically logs data moving to AI tools so that organizations can understand how data is flowing, and it gives them the ability to create guidelines and policy around the patterns they observe in that data.

Google’s Sensitive Data Protection services include cloud data loss prevention.

Cloudflare One’s platform began including data loss prevention for generative AI in 2023.

Code42 created the Insider Risk Management Program Launchpad, which gives clients visibility into the use of ChatGPT and detects copy-and-paste activity, which it can then block.

Fortra’s Digital Guardian data loss prevention tool allows IT teams to manage generative AI data protection along a spectrum that ranges from simple monitoring, to blocking only specific content like customer information or source code, to complete access blocking.

DoControl’s SaaS Data Loss Prevention takes things a step further than simply blocking apps and permissioning by monitoring sensitive SaaS application data security, performing end-user behavioral analytics to prevent insider threats, automatically initiating secure workflows and evaluating the risk of an AI tool in use, and then taking steps to educate the user and offer alternative suggestions as appropriate.

Zscaler’s AI Apps category blocks access and/or offers warnings to users visiting AI sites.

Palo Alto’s data security solution is a solid choice for safeguarding sensitive data from exfiltration and unintended exposure via AI applications.

Symantec recently added generative AI support to its data loss prevention solution, which allows for the classification and management of AI platforms. Symantec’s platform also uses optical character recognition to analyze images, which lets it catch content in nonstandard image formats that most data loss prevention tools can’t recognize.

Next DLP is bringing policy templates to the table, designed to make complex policies easier. Next DLP began offering organizations preconfigured policies to help create out-of-the-box guardrails in the spring of 2023. The templates are available for Hugging Face, Bard, Claude, Dall-E, Copy.ai, Rytr, Tome, and Lumen5. Equally interesting, Next DLP’s Policy Testing Tool allows organizations to assess the performance of their DLP solutions, ensuring the accuracy of their policies.
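
Several of the tools above describe a range of responses, from monitoring, through user warnings, to blocking specific content or blocking access outright. The following is a vendor-neutral sketch of how such a spectrum might be expressed as policy. It does not reflect any product’s actual API: the destination names, the `POLICY` table, the label names, and the `decide` function are all hypothetical.

```python
from enum import Enum


class Action(Enum):
    ALLOW = "allow"
    MONITOR = "monitor"  # log the activity, let it through
    WARN = "warn"  # show the user a warning first
    BLOCK_CONTENT = "block_content"  # stop only the sensitive content
    BLOCK_ACCESS = "block_access"  # stop all use of the destination


# Hypothetical per-destination policy table, for illustration only.
POLICY = {
    "approved-ai.example": Action.MONITOR,
    "unknown-ai.example": Action.WARN,
    "banned-ai.example": Action.BLOCK_ACCESS,
}

# Hypothetical content labels that must never leave the organization.
SENSITIVE_LABELS = {"customer_info", "source_code"}


def decide(destination: str, labels: set[str]) -> Action:
    """Pick an action from the destination's policy and the content labels."""
    site_action = POLICY.get(destination, Action.ALLOW)
    if site_action is Action.BLOCK_ACCESS:
        return Action.BLOCK_ACCESS
    if labels & SENSITIVE_LABELS:
        # Sensitive content is blocked even on otherwise permitted sites.
        return Action.BLOCK_CONTENT
    return site_action
```

In this sketch, a destination missing from the table is allowed unless sensitive content is detected. Whether unknown destinations should default to allow, warn, or block is a design choice each organization would need to make for itself.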

Reducing the Risk of Data Exfiltration Moving Forward

Data exfiltration can have significant business ramifications. To give just one example, consider the Lapsus$ gang’s 2022 exfiltration of 1 terabyte of sensitive information from chipmaker NVIDIA, an attack that also leaked the source code for the company’s deep learning technology. And we wonder why CISOs don’t sleep at night.

In sum, while the risk of data exfiltration isn’t new, the rise of generative AI and its rapid adoption in organizations of all sizes adds complexity to what was already no small challenge for security professionals. Organizations continue to deal with a lack of internal resources and highly skilled employees, which is also not a new industry challenge. The IBM study showed that one in five organizations surveyed don’t have employees with the skills to use AI or automation tools, and 16% reported they can’t find new hires with those skill sets.

So, what to do and think about moving forward? To reduce the risk of data exfiltration, especially in the AI age, organizations must integrate security awareness and best practices into their culture. They need to actively recruit the best and brightest tech talent and also upskill and reskill their existing IT teams to ensure they have the internal capabilities they need.

Beyond those basics, they also need to consistently evaluate the risks of EVERY interaction with computer networks, devices, applications, data, and other users AND keep discussions about generative AI and the risks it presents center stage. Last, but certainly not least, they should seek out trusted vendor partners and solutions that can best help protect against data loss in the AI age.

About the SecurityANGLE: In this series, you can expect interesting, insightful, and timely discussions, including cybersecurity news, security management strategies, security technology, and coverage of what major vendors in the space are doing on the cybersecurity solutions front. Be sure to hit that “subscribe” button so that you’ll never miss an episode. As always, let us know if you’ve got something you’d like us to cover.

Disclosure: theCUBE Research is a research and advisory services firm that engages or has engaged in research, analysis, and advisory services with many technology companies, which can include those mentioned in this article. The author holds no equity positions with any company mentioned in this article. Analysis and opinions expressed herein are specific to the analyst individually and to data and other information that might have been provided for validation, and are not those of theCUBE Research or SiliconANGLE Media as a whole.
