Introduction

Hadoop for Big Data is moving from development and test, to small-scale production, to large-scale production. Hadoop is well tested in development/test and small-scale projects but is new to the more exacting requirements of large-scale production workloads, including meeting deadlines and SLAs. Automation, SLA measurement, production high availability (recovering from node failure), and problem-determination tools suitable for large-scale Hadoop are still works in progress.

In this paper Wikibon looks at large-scale production Hadoop from four vendors offering different types of high-density, large-scale rack solutions and compares the integration levels, the density of the offerings, environmentals, automation and the HA approach. The paper also discusses what is generally required to achieve large-scale production and what high-performance computing can bring to the Big Data table.

High Performance System Characteristics

High performance computing (HPC) is a well-established market segment. Key imperatives are:

- High processor density – placing the processors as close together as possible to minimize interconnect latency;

- High-speed IO organized to keep the processors fed;

- Providing and managing the power density to keep the infrastructure running;

- Providing high-density cooling mechanisms to ensure reliability and continuity;

- Providing automated high-availability solutions that will keep production going when a node fails.

The measure of success for production HPC systems is time to value, usually measured as overall job elapsed time. The key to this is designing a balanced system that keeps the processors as busy as possible over extended periods of time, keeps system overheads to a minimum, and provides low-latency interconnection and just-in-time, highly parallel data movement to and from the processors. Automated high-availability solutions that avoid data corruption and job restarts when a single node fails are essential. Quality of data movement is also important: one retry on a block of data can delay work getting to hundreds of processors. The movers and shakers in this space are Dell, HP and IBM, as well as traditional HPC vendors such as Cray, Fujitsu, and SGI.

Comparing Production HPC & Big Data Systems

Production HPC and production Big Data systems have a great deal in common. The key metric is the same: time to value. The key technical problem is also very similar in principle: keeping processors fed with data and avoiding stoppages.

Big data is heavy and not moved around easily, and certainly not over long distances. These factors usually mean that virtualization is not common because of the impact on processor and IO throughput (and subsequently on job elapsed time). Good job schedulers are more important for large-scale production than virtualization. In addition, data consolidation is important, and infrastructure tends to be dedicated around specific “pools” of data.

Big Data is often built around Hadoop clusters. These environments have no single deployment option; Big Data systems in development are often deployed straight to cloud environments such as Amazon AWS or OpenStack Savanna (under development). For production, practical issues around the cost and elapsed time of moving large amounts of data to the cloud have often dictated the use of in-house solutions. For large-scale in-house deployment, a high-growth category of servers dubbed “density optimized servers” is often used. In IDC’s August 2013 report, density optimized servers grew 26.6% year over year ($735M, which represented 6.2% of all revenue and 10% of volume), while IDC showed a 6.2% year-over-year decline in blade servers ($2B revenue for the quarter). As expected, in response to the high growth, most of the server vendors are pushing hard to deliver solutions that meet the specialized requirements of this market segment, including high availability.

Comparing Density Optimized Servers For Hadoop

Wikibon focused on four density optimized server racks for Hadoop from four vendors. One of them deploys standard racks with a Hadoop reference architecture, one with deployment guides, one with integrated hardware and software mainly from the vendor, and one integrated solution from an HPC base with specific hardware and mainly open-source integrated software. The vendors include:

- Dell has the early lead in this market segment (60.5% in 2Q13 according to the same IDC report) with its C-line “cloud servers”. Dell has a long history of creating custom solutions in large volumes at low margins, and this led to many design wins in cloud providers. Dell has a Cloudera Solution Deployment Guide to help configure and deploy solutions.

- HP is #1 in both the overall server and blade server markets and is a solid #2 in the density optimized market, with a number of offerings including the ProLiant SL-series, launched in 2012 for Big Data and cloud deployments. HP has a reference architecture for Hadoop based on Hortonworks.

- Oracle offers the Big Data Appliance X3-2, a pre-integrated full-rack configuration with 18 12-core x86 servers that include InfiniBand and Ethernet connectivity. The Cloudera distribution of Apache Hadoop is included to acquire and organize data, together with Oracle NoSQL Database Community Edition. Additional integrated system software includes Oracle Linux, Oracle Java HotSpot VM, and an open source distribution of R.

- SGI has specialized in high performance computing (HPC) and in providing converged infrastructure for that market. HPC is driven by highly parallel architectures with very large numbers of processors. SGI InfiniteData Cluster is a cluster-computing platform with high server and storage density. InfiniteData Cluster offers up to 1,920 cores and up to 1.9PB of data capacity per rack. The cluster is centrally managed using SGI Management Center. InfiniteData Cluster solutions for Apache Hadoop® are pre-racked, pre-configured and pre-tested with all compute/storage and network hardware, Red Hat® Enterprise Linux®, and Cloudera® software.

The balance of the design (specifically the core:spindle ratio) and the environmentals (space, power, cooling) are very important in this segment of the market. Each family of servers offers a wide variety of options since different workloads have different requirements:

- Hadoop environments are usually optimized at a 1:1 core/spindle ratio;

- NoSQL and MPP databases at about a 1:2 ratio;

- Object storage at 1:10.

Within this professional alert, all comparison tables and charts assume a 1:1 core/spindle ratio.
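As a rough illustration of how these ratios translate into drive counts, the sketch below computes the number of spindles needed to balance a given number of cores. The ratios are the ones listed above; the function name, the workload keys, and the 16-core node in the usage note are hypothetical and chosen only for illustration.

```python
import math
from fractions import Fraction

# Core:spindle ratios as listed above, expressed as cores per spindle.
# The dictionary keys are illustrative labels, not vendor terminology.
CORES_PER_SPINDLE = {
    "hadoop": Fraction(1, 1),
    "nosql_mpp": Fraction(1, 2),
    "object_storage": Fraction(1, 10),
}


def spindles_needed(cores: int, workload: str) -> int:
    """Return the drive count that balances `cores` for the given workload."""
    ratio = CORES_PER_SPINDLE[workload]
    # Dividing cores by (cores per spindle) gives spindles; round up to whole drives.
    return math.ceil(cores / ratio)


if __name__ == "__main__":
    # Hypothetical 16-core node, used only to show the arithmetic.
    for workload in CORES_PER_SPINDLE:
        print(workload, spindles_needed(16, workload))
```

Under these assumptions, a hypothetical 16-core node would be balanced with 16 drives for Hadoop, 32 for NoSQL or MPP databases, and 160 for object storage, which is why the same server family is offered in such different configurations.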

As deployments are often multiple racks of gear at a time, the scalability, power and cooling requirements of large server clusters are very similar to those of the high-performance computing (HPC) marketplace. While rack-level architectures are not new, most hyperscale deployments have spun their own designs. Converged infrastructure vendors such as VCE have been geared more toward virtualization environments than toward scale-out applications.

Big Data Density Optimized Solution Comparison

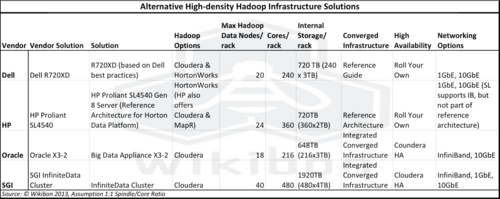

For production Big Data, there is a growing need for high-density standard offerings built out at rack scale. As guidance to users, Table 1 looks at Hadoop environments at rack scale (standard 42U racks). Where pre-racked configurations were not available, best efforts were made to depict a comparable solution using the densest storage while maintaining a 1:1 core/spindle ratio. Table 1 shows the data on four high-density Hadoop solutions:

- Dell R720XD,

- HP ProLiant SL4540,

- Oracle X3-2,

- SGI InfiniteData Cluster.

The Dell and HP solutions are not fully integrated and would need to be integrated in-house or through an SI. This would include any high-availability solution, to ensure that data is not lost in the event of one or more nodes failing and that recovery is automatic and rapid. The Oracle and SGI solutions are fully integrated system hardware and software, both with Hadoop high availability.

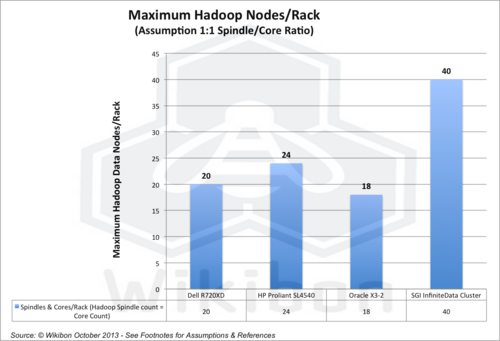

Figure 1 shows the maximum number of Hadoop nodes per rack. SGI has 40 nodes, about twice as many as any competitor.

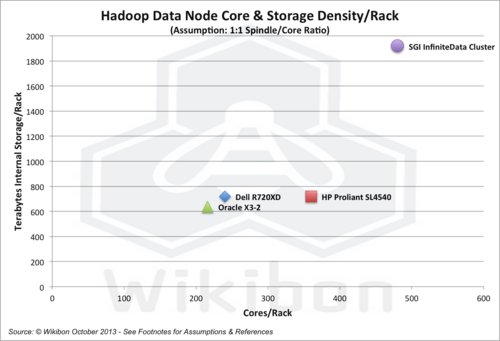

Figure 2 shows the number of cores per rack plotted against the amount of storage per rack. Again, SGI has outstanding high-density characteristics, achieved through a combination of tray design and the use of 4 Terabyte drives.

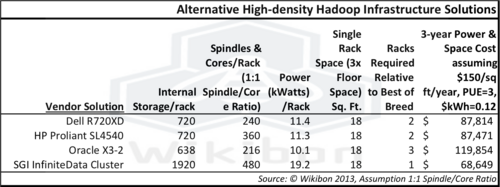

Table 2 shows environmental characteristics of the four solutions. All of the solutions are high-density and efficient compared with traditional server deployments. Because of the rack density, SGI has the best three-year environmental costs. The differences are relatively small compared with acquisition and integration costs.
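For readers who want to run a similar comparison on their own configurations, the sketch below shows one common way to estimate a rack's multi-year energy cost. It is not necessarily the method behind Table 2: the function name, the cooling overhead factor, and every input value in the usage note are assumptions made for illustration only.

```python
HOURS_PER_YEAR = 24 * 365


def rack_energy_cost(rack_kw: float, cooling_overhead: float,
                     usd_per_kwh: float, years: int = 3) -> float:
    """Estimate the energy cost of one rack over `years`.

    rack_kw          -- average IT power draw of the rack in kilowatts (assumed input)
    cooling_overhead -- multiplier covering cooling and power distribution,
                        e.g. 2.0 means one extra watt of overhead per IT watt
    usd_per_kwh      -- electricity price in dollars per kilowatt-hour
    """
    total_kwh = rack_kw * cooling_overhead * HOURS_PER_YEAR * years
    return total_kwh * usd_per_kwh


if __name__ == "__main__":
    # Hypothetical inputs: 10 kW rack, 2.0 overhead factor, $0.10 per kWh.
    print(round(rack_energy_cost(10, 2.0, 0.10), 2))
```

Because the cost scales with the number of racks needed to host a given number of nodes, a denser rack can reduce the total even when its per-rack power draw is higher.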

Conclusions

High performance computing and large-scale Hadoop clusters have similar requirements for availability and high-density deployment. All the vendors in the comparison are well established with excellent products and services. Because large-scale production Big Data deployments are few and the technologies are not yet fully ready for prime time, Wikibon would recommend a converged infrastructure solution with Hadoop node HA built in. Wikibon has found that the greater the level of integration, the lower the operational costs over time. Operational costs are likely to be higher at this stage of platform maturity, and one throat to choke, together with integrated upgrades, is a significant benefit. Both Oracle and SGI have good InfiniBand connectivity, with SGI getting the nod on HPC deployment experience.

Action Item: As companies look at large-scale production Hadoop infrastructure, strong consideration should be given to solutions that are pre-integrated and optimize data center resources. As a strong player in high availability for HPC and with experience of large-scale converged infrastructure, SGI should be included in Hadoop production architecture evaluations.

Footnotes:

HP Reference Architecture for Hortonworks Data Platform on HP ProLiant SL4540 Gen8 Server

Dell Optimized for Hadoop and Dell Cloudera Solution Deployment Guide

Oracle Big Data Appliance