Executive Summary

Wikibon believes that for most organizations, even the largest enterprises in the world, the traditional data center as we know it will not exist within ten years. What will remain are enterprise IT control centers; data processing will be executed in mega-datacenters, and the data (all the data) will be stored in these mega-datacenters. Alongside it will be data from cloud service providers and data aggregators, as well as multiple communication vendors offering state-of-the-art long-distance communication to users, partners, suppliers and other mega-datacenters.

The underpinning of mega-datacenters will be a software-led (software-defined) infrastructure, in which compute, storage and network functionality is provided by layers of control software running on commodity hardware. For example, storage will be located close to the servers in a Server SAN model, rather than in the traditional networked storage model, where function is delivered by proprietary code locked within storage arrays. The former will support applications horizontally across the application portfolio, whereas the latter requires purpose-built stacks on an application-by-application basis.

The benefits of choosing a software-led, industry-relevant and properly set-up mega-datacenter are much lower IT management costs than today, as well as the ability to access vast amounts of Internet and industrial Internet data at local datacenter speed and bandwidth. This capability will likely spur IT spending globally, as early adopters will have strong opportunities to invest in new IT techniques that decrease overall business costs and increase revenues.

Wikibon believes that successful enterprises will aggressively adopt a mega-datacenter strategy that fits their business objectives within their industry. Effective enterprise IT leaders will increasingly exit the business of assembling IT infrastructure to run applications, and the business of trouble-shooting ISV software issues. Successful CIOs will move to position their enterprises to exploit the data avalanche from the Internet and industrial Internet and enable real-time data analytics.

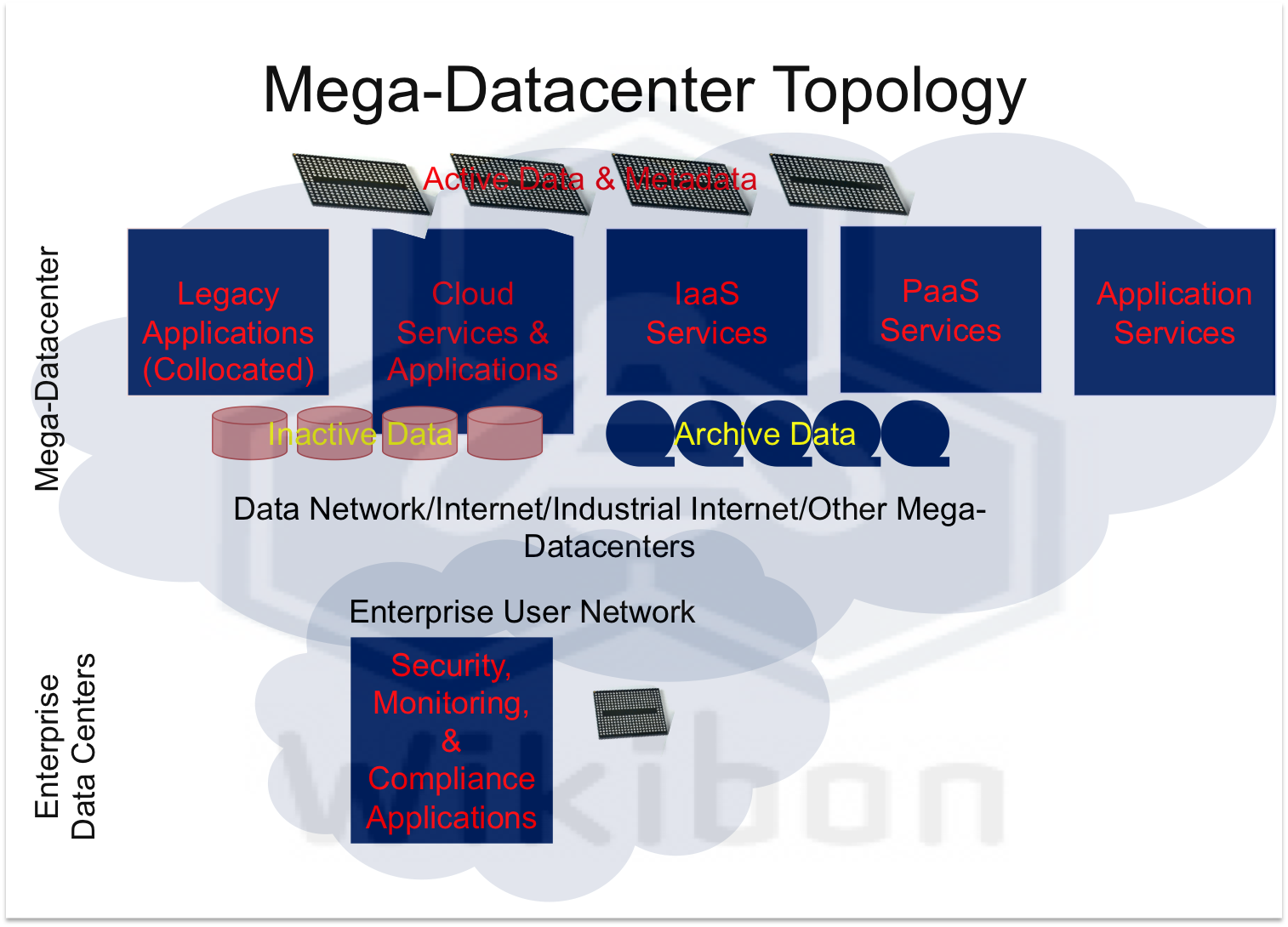

Figure 1 shows the likely key components and topology of a future enterprise datacenter.

Source: Wikibon, 2014

The top layer in Figure 1 shows the active data and metadata, distributed and held mainly in flash storage positioned close to the servers. The technology used is very likely to be based on a Server SAN model deployed across the servers using high-speed interconnects. Legacy applications that are working well will run on their original configurations under a colocation model within the mega-datacenter, allowing data to be back-hauled to and from other cloud, IaaS or PaaS applications within the mega-datacenter.

Key Challenges Of Today’s Datacenter Topology

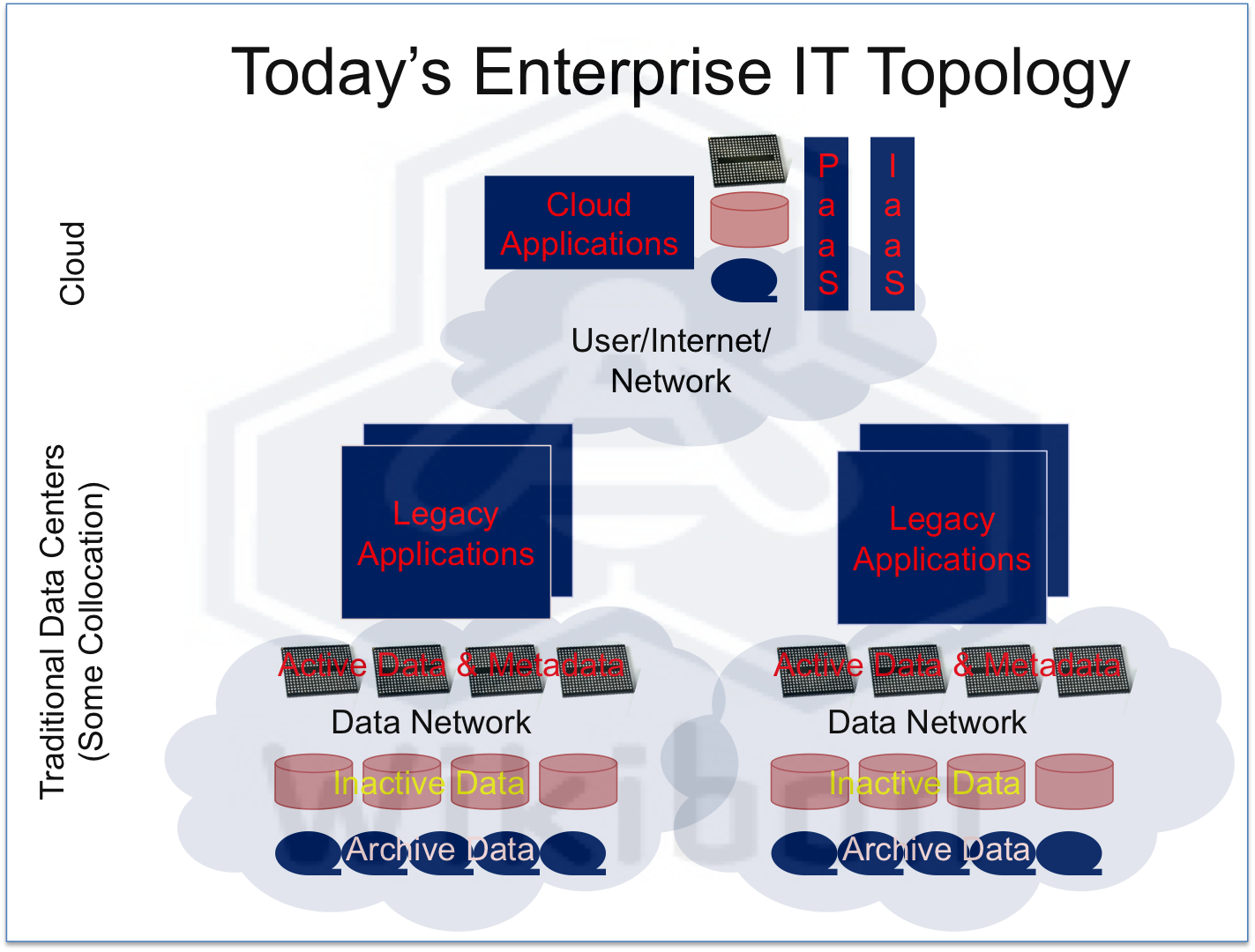

The key challenges to today’s IT datacenters include:

- Stove-piped islands of application infrastructure.

- Stove-piped IT organizations with separate groups of:

  - Application development,

  - Application maintenance,

  - Database administrators (DBAs),

  - Server infrastructure operations,

  - Storage infrastructure operations,

  - Network infrastructure operations.

- Slow connection to public cloud services (which are mainly used for development and edge applications).

- Poor integration between cloud & legacy applications.

- High-cost operations, slow to change.

- Great difficulty optimizing to meet application SLAs.

- Very expensive and difficult to enable real-time analytics.

Overall, IT is perceived by most business executives as inefficient, high-cost and very slow to change. Line-of-business executives are exerting pressure to use external IT services to achieve their business goals.

Figure 2 shows the general topology of today’s datacenters.

Source: Wikibon, 2014

Why the Software-Defined Datacenter

The software-defined datacenter is likely to be the key ingredient of future datacenter infrastructure. Likely components are open hardware stacks (e.g., the Open Compute Project, OCP) and open software stacks (e.g., OpenStack). For example, OCP and OpenStack combined with a datacenter integration and orchestration layer that optimizes a facility’s power and cooling infrastructure would provide a powerful base for next-generation cloud infrastructure. This set of technologies would deliver a low-cost, automated and integrated software-defined infrastructure, making mega-datacenters an ideal platform for Infrastructure-as-a-Service (IaaS) and Platform-as-a-Service (PaaS) offerings to enterprises, cloud service providers and government organizations. Mega-datacenter services could then be formidable competitors to cloud service providers such as AWS, and to traditional system vendors offering cloud services, such as HP, IBM and Oracle.

Importantly, while many vendors talk about the software-defined data center, many ignore the data center itself. The true “Cloud OS” of the future will control not only server, storage and network resources but also the facilities infrastructure: power, cooling/chillers, lighting, routing of connectivity infrastructure, etc.

The next section looks at why the Mega-datacenter is the future.

Why Mega-Datacenters

The reasons for this coming sea change are:

- The cost of IT infrastructure is at least 50% lower:

  - Space – MegaDs (mega-datacenters) can be located in low-cost areas, on the wrong side of any tracks.

  - Power & Cooling – MegaDs can be positioned to take advantage of low-cost green power and can achieve a true PUE (see Note 1) of 1.5 or less, compared with a true PUE of 3 or more in traditional data centers.

  - Long-distance Communication Costs – Effective MegaDs are set up with multiple carriers that compete for long-distance communication. There is no “last-mile” tax from the ex-Bell companies.

  - Communication costs to cloud services – The cost of high-speed communication lines to multiple service providers that are also resident in the MegaD can be cut by a factor of 100, from $10,000s per month to $100s per month per line.

  - The cost of IT equipment from standardization & just-in-time deployment – By providing standardized racks, servers, networking and storage equipment with a standardized layout across a large number of customers, MegaDs can use economies of scale and bulk purchasing to reduce equipment and set-up costs. The ease of deploying additional equipment avoids the cost of buying equipment early and reduces “just in case” over-purchasing.

- The cost of implementing & managing IT infrastructure is up to 80% lower:

  - Infrastructure-as-a-Service (IaaS) – MegaDs (or cloud-clients of MegaDs, if the MegaDs don’t want to compete with their customers) can provision and maintain a complete IT stack (servers, storage, hypervisor and operating system), sometimes including orchestration and automation layers. The technology controls pools of processing, storage and networking resources throughout a datacenter, managed or provisioned from dashboards, CLIs or, most importantly, RESTful APIs. These APIs call IaaS services logically and remove the need to understand the physical layer. Overall, IaaS can reduce the cost of management for enterprise IT by 30%-40%. Examples of IaaS include AWS IaaS services and EMC’s VCE platform and services. Open source IaaS infrastructure stacks include CloudStack and OpenStack.

  - Platform-as-a-Service (PaaS) – The potential value of platforms such as Pivotal’s Cloud Foundry and IBM’s BlueMix is getting a great deal of attention. BlueMix is turning Rational tools, WebSphere middleware and IBM applications into services on SoftLayer. The stated objective of some PaaS vendors is to develop future cloud-based applications quickly and run them on any IaaS platform. That raises the questions of what future technologies cloud-based applications will use, what business problems they will solve, and what new tools will be required to develop and run them. This platform approach also raises the question of how vendors can integrate all the components into a single managed entity (SME), a prerequisite for lowering the cost of maintaining the platform. The requirements for an SME are integrated delivery of the solution as a single component, regular globally pretested updates to the solution as a whole, and a single business partner for sales, deployment, maintenance and upgrades: a single hand to shake, a single throat to choke. PaaS without an SME significantly erodes the cost reductions from PaaS. In contrast to the above PaaS approach, the current, more prosaic PaaS offerings that work today aim to simplify the provisioning and maintenance of development and operations by providing a converged-infrastructure SME. Wikibon research shows that an SME can cut the cost of delivery by 50% or more. AWS offers a Database-as-a-Service offering that uses the Oracle database with AWS IaaS as part of the Equinix MegaDs. There are also box solutions such as Oracle’s Exadata with Oracle Platinum Service, or EMC’s VCE Database-as-a-Service.

  - Application-as-a-Service (AaaS) – Major software ISVs will provide or define complete stacks, including IaaS, middleware and the application, and provide SME maintenance of the complete stack. The ISVs can provide this as a service running on enterprise equipment or as a cloud service. Examples of AaaS include ISVs that integrate their solutions on Oracle’s Database Appliance, and Oracle’s SPARC-based SuperCluster running Oracle’s own E-Business Suite with Platinum Service. Running AaaS as an SME significantly reduces the enterprise’s costs compared with traditional IT (by 75% or more). The ISV’s cost of maintenance is also very significantly reduced (by 75% or more).

  - Backup-as-a-Service – Common backup services can be tailored to meet application SLAs for recovery point objective (RPO) and recovery time objective (RTO), and can provide application consistency or crash consistency as required. The metadata describing what data has been backed up and where the backup files are stored must itself be secured across different sites. Backup services should include the ability to use native backup tools provided by the ISV (e.g., Oracle’s RMAN through Oracle Enterprise Manager), while integrating their metadata into an integrated backup catalog.

  - Disaster Recovery Services – MegaDs can provide much more cost-effective disaster recovery infrastructure between MegaD sites, and cloud service providers can use this infrastructure to provide fail-over and fail-back services at much lower cost than the traditional two-datacenter approach.

  - Security Services – Perhaps the biggest constraint to adopting an enterprise MegaD strategy is security. The NSA and the IT vendors complicit with it have done immense harm to the IT industry, resulting in a world-wide lack of trust. However, when coldly analyzed, greater security can be provided at lower cost within MegaDs than within traditional enterprise data centers. Wikibon has discussed this issue in depth with a very large US federal IT provider. This provider examined all its security requirements with AWS over a six-month period and concluded that AWS and the Equinix MegaD could provide at least equal, and probably better, overall security than its in-house operations. Most MegaDs, and many cloud service providers operating in MegaDs, now offer complete security services. One of the critical roles of the IT control centers will be monitoring these services and operating critical security services such as end-to-end encryption.

- Big Data allows the value of enterprise applications to be vastly increased:

  - Accessing Big Data – The most important reason for adopting an enterprise MegaD strategy is the potential to take advantage of huge amounts of Big Data quickly and cost-effectively. Data is “heavy”, and moving it is expensive and time-consuming. As the industrial Internet grows rapidly and the traditional Internet continues to grow, the amount of data available to analyze and combine with internal enterprise data will grow exponentially. The amount of Internet data, provided mainly by data aggregators and cloud service providers, will be many times the size of enterprise data. A traditional data center approach would require increasingly massive data movement to or from the enterprise data center, and in practice the cost and elapsed time of this movement would make most potential combinations of enterprise and Internet data impractical. Centralizing and consolidating data, and access to data, is a business imperative.

  - Accessing the Right Data & Cloud Services – The right data for an enterprise is likely to be related to the vertical industry it operates in. For example, the film industry uses many cloud services such as rendering, stock film and special effects. The Switch SuperNAP MegaD in Las Vegas provides a complete set of film-industry IT services for the LA-based film industry, where film data is shipped in by truck (trucks are still the highest-bandwidth, lowest-cost option). The SuperNAP then provides very cost-effective multi-provider communication services for world-wide communication.
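The IaaS item above says provisioning is driven most importantly through RESTful APIs that hide the physical layer. As a minimal sketch, assuming an OpenStack cloud, the Python below builds (but does not send) a request for the OpenStack Compute (Nova) “create server” call, `POST /servers`, using only the standard library. The endpoint URL, token, image ID and flavor ID are placeholders, and the helper name `build_create_server_request` is hypothetical. A real deployment would obtain the token from the Keystone identity service, and newer compute API microversions also require a `networks` field.

```python
import json
import urllib.request


def build_create_server_request(compute_url: str, token: str, name: str,
                                image_ref: str, flavor_ref: str) -> urllib.request.Request:
    """Build (without sending) an OpenStack Compute 'create server' request.

    Hypothetical helper: compute_url, token, image_ref and flavor_ref are
    placeholders supplied by the caller.
    """
    body = {
        "server": {
            "name": name,             # logical name; no physical host is chosen here
            "imageRef": image_ref,    # which OS image to boot
            "flavorRef": flavor_ref,  # size of the virtual machine (CPU/RAM/disk)
        }
    }
    return urllib.request.Request(
        url=compute_url.rstrip("/") + "/servers",
        data=json.dumps(body).encode("utf-8"),
        headers={"X-Auth-Token": token, "Content-Type": "application/json"},
        method="POST",
    )


req = build_create_server_request(
    "http://controller.example:8774/v2.1", "PLACEHOLDER-TOKEN",
    "web-01", "IMAGE-UUID", "FLAVOR-ID",
)
print(req.get_method(), req.full_url)
```

Passing `req` to `urllib.request.urlopen` would submit it. The point of the sketch is that the caller names an image and a flavor, never a physical server, rack or storage array.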
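The Backup-as-a-Service item calls for metadata from native backup tools (such as RMAN) to be merged into an integrated catalog that can be checked against application SLAs. The Python below is a minimal, hypothetical illustration of that idea: `BackupRecord`, `BackupCatalog` and the tool labels are invented for the example, and the check covers only RPO (the age of the newest backup for each application), because meeting an RTO depends on restore testing that a catalog alone cannot show.

```python
from __future__ import annotations

from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass(frozen=True)
class BackupRecord:
    app: str               # application the backup protects
    tool: str              # hypothetical label, e.g. "rman" (native) or "snapshot"
    completed_at: datetime
    location: str          # site or store holding the backup files


class BackupCatalog:
    """Hypothetical integrated catalog merging metadata from several backup tools."""

    def __init__(self) -> None:
        self._records: list[BackupRecord] = []

    def add(self, record: BackupRecord) -> None:
        self._records.append(record)

    def latest(self, app: str) -> BackupRecord | None:
        """Newest backup for app across all tools, or None if there is none."""
        mine = [r for r in self._records if r.app == app]
        return max(mine, key=lambda r: r.completed_at, default=None)

    def rpo_violations(self, now: datetime, rpo: dict[str, timedelta]) -> list[str]:
        """Apps whose newest backup is older than their RPO, or that have no backup."""
        late = []
        for app, limit in sorted(rpo.items()):
            newest = self.latest(app)
            if newest is None or now - newest.completed_at > limit:
                late.append(app)
        return late


catalog = BackupCatalog()
catalog.add(BackupRecord("erp", "rman", datetime(2014, 6, 1, 8, 0), "site-a"))
catalog.add(BackupRecord("crm", "snapshot", datetime(2014, 5, 31, 0, 0), "site-b"))
rpo = {"erp": timedelta(hours=6), "crm": timedelta(hours=24), "hr": timedelta(hours=24)}
print(catalog.rpo_violations(datetime(2014, 6, 1, 12, 0), rpo))
```

In this illustrative run, “crm” is flagged because its newest backup is 36 hours old against a 24-hour RPO, and “hr” is flagged because the catalog holds no backup for it at all. In practice the catalog itself would be replicated across sites, as the item above requires.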
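The “data is heavy” argument and the film-industry trucking example both come down to bandwidth arithmetic: transfer time is size × 8 / (link rate × efficiency). The Python below is a rough sketch using illustrative figures that are assumptions, not Wikibon data: 1 PB moved over a fully utilized 10 Gbit/s link, versus the same 1 PB carried by truck on an assumed five-hour drive.

```python
SECONDS_PER_DAY = 86_400


def transfer_seconds(size_bytes: float, link_bps: float, efficiency: float = 1.0) -> float:
    """Time to push size_bytes through a link running at link_bps.

    efficiency in (0, 1] models protocol overhead and contention; 1.0 is ideal.
    """
    if not 0 < efficiency <= 1:
        raise ValueError("efficiency must be in (0, 1]")
    return size_bytes * 8 / (link_bps * efficiency)


def effective_bps(size_bytes: float, elapsed_seconds: float) -> float:
    """Effective bandwidth of any transport, including a truck full of disks."""
    return size_bytes * 8 / elapsed_seconds


PETABYTE = 10**15  # decimal petabyte, in bytes

network_s = transfer_seconds(PETABYTE, 10e9)   # 10 Gbit/s link, ideal utilization
truck_bps = effective_bps(PETABYTE, 5 * 3600)  # assumed five-hour drive

print(f"network: {network_s / SECONDS_PER_DAY:.1f} days")
print(f"truck:   {truck_bps / 1e9:.0f} Gbit/s effective")
```

Under these assumptions the link needs roughly 9.3 days even at perfect utilization, while the truck delivers an effective rate of about 444 Gbit/s. Colocating the data where it will be processed avoids paying either cost repeatedly.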

Selecting Mega-Datacenters

There are many US-based and international mega-datacenter providers, such as Equinix, IBM, IO, and Switch (SuperNAP). The selection criteria include the ability to:

- Provide a best-of-breed IT infrastructure environment, with best-of-breed power/cooling/space cost efficiency,

- Provide cost-effective colocation facilities for legacy applications,

- Provide cost-effective communication with multiple competing communication providers,

- Provide IaaS and PaaS services either from themselves or cloud service providers,

- Attract industry-relevant AaaS or cloud service providers,

- Attract data aggregators and cloud service providers relevant to the enterprise vertical industry(ies) it operates in,

- Provide backup-as-a-service and a full and testable disaster recovery infrastructure, and,

- Provide the security services, including compliance auditing, relevant to the enterprise’s industry.
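The criteria above are qualitative. One common way to compare candidate providers against them is a weighted scoring matrix, and the Python below is a minimal sketch of that technique. The criterion keys paraphrase the list; the weights, the 0-5 scale and the provider scores are hypothetical placeholders that an enterprise would set for its own industry.

```python
from __future__ import annotations

# Hypothetical weights (sum to 1.0); each enterprise sets its own.
WEIGHTS = {
    "infrastructure_efficiency": 0.15,
    "legacy_colocation": 0.15,
    "competing_carriers": 0.15,
    "iaas_paas": 0.10,
    "industry_aaas": 0.10,
    "industry_data_aggregators": 0.15,
    "backup_and_dr": 0.10,
    "security_and_compliance": 0.10,
}


def weighted_score(scores: dict[str, float]) -> float:
    """Combine per-criterion scores (0-5 scale) into one weighted score."""
    missing = WEIGHTS.keys() - scores.keys()
    if missing:
        raise ValueError(f"missing criteria: {sorted(missing)}")
    for criterion, value in scores.items():
        if not 0 <= value <= 5:
            raise ValueError(f"{criterion} score {value} outside 0-5")
    return sum(weight * scores[c] for c, weight in WEIGHTS.items())


def rank(candidates: dict[str, dict[str, float]]) -> list[tuple[str, float]]:
    """Return (provider, score) pairs, best first."""
    scored = ((name, weighted_score(s)) for name, s in candidates.items())
    return sorted(scored, key=lambda pair: pair[1], reverse=True)


candidates = {
    "Provider A": {c: 4 for c in WEIGHTS},
    "Provider B": {**{c: 3 for c in WEIGHTS}, "security_and_compliance": 5},
}
print(rank(candidates))
```

A weighted sum keeps the trade-offs explicit: raising the weight on industry-relevant data aggregators, for example, reflects the argument above that access to the right data matters more than raw infrastructure cost.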

Will All Computing Occur In Mega Data Centers?

The industrial Internet and the Internet of Things (IoT) make the answer clearly no. Many distributed facilities, e.g. utilities, factories and warehouses, will require local computing and data center infrastructure. There will be three main differences in the future with regard to this infrastructure:

- The prominence of so-called data center “PODS” will increase. These pre-packaged and fully integrated data center facilities (“Data Center on a Stick”) will drive efficiencies, increase standardization and lower costs for remote facilities.

- Control of these distributed resources will be exercised through software from mega data centers. Fewer people will be needed at remote sites, with centralized intelligence monitoring and orchestrating the distributed resources.

- Where possible, the data itself will flow to mega data centers for integration with other organizational resources – these PODS will not be islands.

This “end-to-end” view of data center resources, we believe, will increasingly become a fundamental staple of IT.

Conclusions

Wikibon strongly believes that the traditional data center for most organizations will radically change over the next decade from one that is stovepiped and hardware-centric to an environment that is software-led. The current topology and organization cannot support the potential opportunities for exploiting IT, even in the largest enterprises of the world. Unless a new topology is aggressively adopted, line-of-business executives will go their own way and adopt cloud-based services. For some very loosely structured organizations, this can be an effective strategy. However, most medium-to-large organizations have a strong core of shared IT services and data standards, which would be difficult or impossible to retrofit after each line of business has independently chosen its IT strategy.

Outsourcing every existing application to the cloud is a flawed strategy for all but the smallest organizations. So-called legacy applications usually work well, and converting them to run in the cloud is risky and usually unnecessary. Wikibon has personally reviewed near-fatal conversions of core applications at banks and insurance companies, where the conversion stalled and nearly bankrupted the companies. The easiest way to bring legacy applications forward is to colocate their data in the same mega-datacenter that houses the new home-grown and cloud applications, so that data can be moved efficiently between applications. Wikibon recommends that organizations keep what remains in the enterprise IT control centers to a minimum. There is likely to be strong pressure from uninformed heads of lines of business and uninformed CXOs to keep some core processing in house. This should not be accepted as a business requirement, and IT should educate senior business executives on the realities of risk and the cost of not embracing the mega-datacenter strategy.

The benefits of choosing an industry-relevant and properly set-up mega-datacenter are much lower IT management costs than today, as well as the ability to access vast amounts of Internet and industrial Internet data by back-hauling the data within the mega-datacenter at local datacenter speed and bandwidth.

Wikibon believes that successful enterprises will be aggressive, early adopters of a mega-datacenter strategy. The choice of mega-datacenter is important and should fit the business objectives within the organization’s industry. For companies with international data centers, the mega-datacenter provider should offer sufficient locations overseas, or partner with others to provide worldwide coverage, to ensure compliance with international data laws. IT management should set out a timescale for exiting the business of assembling IT infrastructure to run applications and the business of troubleshooting ISV software issues. Figures 1 and 2 show that there will usually be a requirement to reorganize IT along application lines and towards Dev/Ops and/or Dev/Cloud models.

Perhaps most important of all, CIOs should have a clear idea of how new technologies such as hyperscale, in-memory databases, flash and metadata models can change database and application system design forever. Successful CIOs will move to position their enterprises to exploit the data avalanche from the Internet and industrial Internet and enable real-time data analytics. These capabilities will probably increase the overall IT budget over the next decade by a few percentage points of revenue, as early adopters will have strong opportunities to invest in new IT techniques that decrease overall business costs and increase revenues.

Action Item: CIOs and CXOs should aggressively adopt a mega-datacenter strategy that fits their business objectives within their industry. Enterprise IT should exit the business of assembling IT infrastructure to run applications and the business of trouble-shooting ISV software issues. The focus of IT should move to positioning the enterprise to exploit the data avalanche from the Internet and industrial Internet, and enable real-time analytics.

Footnotes: Note 1

PUE (power usage effectiveness) is defined by The Green Grid as Total Facility Energy / IT Equipment Energy, where a perfect score of 1 (100% efficiency) is achieved if all the power goes to IT equipment and none is wasted. The problem with this definition is that IT equipment includes components such as fans and power supplies, which should not be counted in the denominator, as they do not provide IT compute power. As a result, PUEs of 1, or even lower than 1, are claimed. A true PUE would correct for this, as discussed by Wikibon in Fanning the DCiE Metric Controversy (DCiE is 1/PUE).