Executive Summary

The question addressed is how to provide 80% of applications with storage at 50% of the cost.

A key IT imperative is to reduce the total cost of internal computing (CAPEX and OPEX) and improve service times to the lines-of-business in order to be competitive with best-of-breed public cloud options. Enterprises are actively implementing private clouds to achieve this objective and to head off pressure from CEOs and lines-of-business to outsource everything. Similarly, smaller cloud providers are seeking to reduce their total cost of infrastructure (CAPEX and OPEX). Both enterprise private clouds and cloud service providers must reduce the time needed to spin up or tear down logical infrastructure deployments for specific business objectives from weeks to minutes, and must provide sufficient automation to let customers or LOBs select infrastructure for deployment easily and unambiguously.

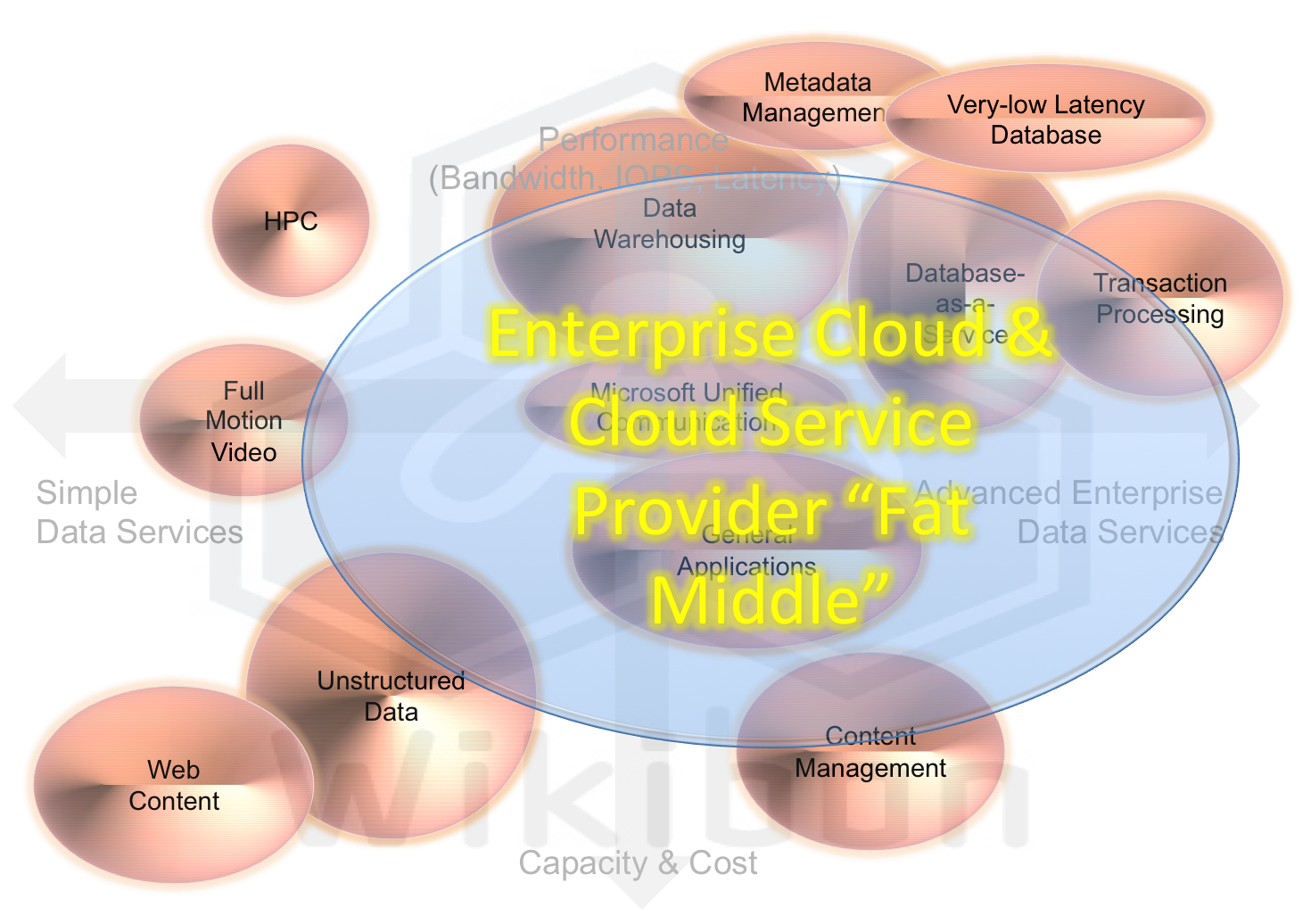

Wikibon regularly discusses infrastructure concerns with enterprise organizations and cloud service providers. Our conclusion is that the imperative for low-cost, consistent cloud storage is to keep it simple and easy to administer. The focus should be on delivering the “Fat Middle” of applications (see Figure 1) rather than trying to fit this approach to every application. Alternative, higher-cost bespoke services can provide more complex storage services for the applications that need and can afford them.

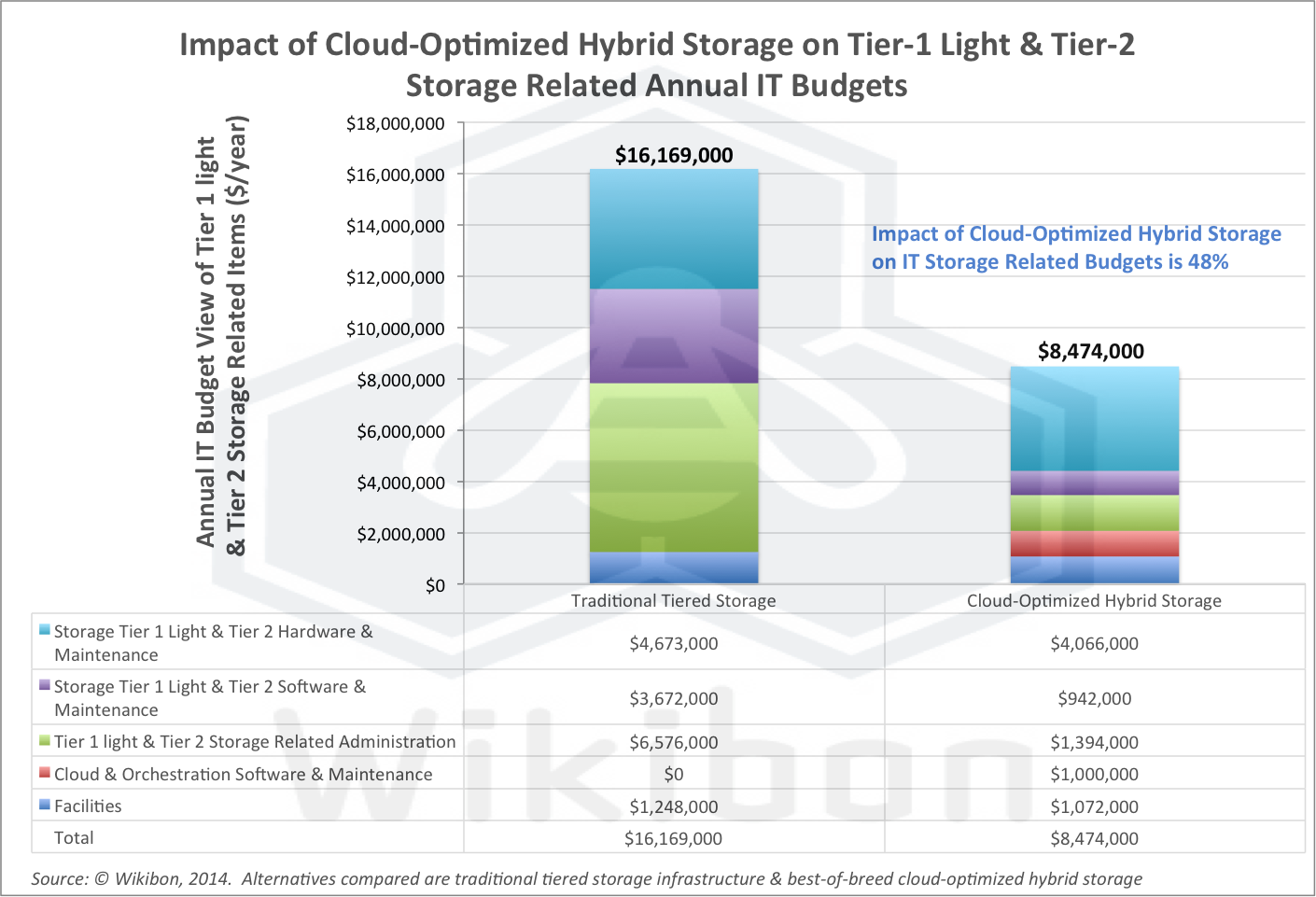

Hybrid storage arrays offer the best storage architecture for simple, low-cost cloud storage services for tier 2 and tier 1 light applications. To minimize storage administration, the architecture should provide consistent response times at all utilization levels and be very tolerant of noisy neighbors. The cost of storage software should be minimized, and the array should provide a quality-of-service (QoS) control that supports charging, chargeback or show-back. If this approach is taken, the cost of such a service can be 50% of that of traditional storage arrays (see Figure 2).

Cloud Storage Services

The major components of infrastructure are compute, networking and storage. Compute in cloud environments has been heavily virtualized, making compute services relatively easy to cloud-enable and automate. Networking for access to resources is cloud-enabled as part of the orchestration and security layer. The most difficult components to automate and cloud-enable have been storage and storage networking, mostly because of the performance characteristics of magnetic drives. Today’s hard disk drives (HDDs) hold up to 4 terabytes of data, but access to that data is limited both by bandwidth and by the number of requests for data (input/outputs per second, or IOPS) that the drive can perform. Imagine trying to empty a gallon of water through a drinking straw.
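To put the straw analogy in numbers, the sketch below computes access density (IOPS per terabyte) and the time to read a full drive. The 4-terabyte capacity comes from the text; the figures of about 80 random IOPS and 150 MB/s sequential bandwidth for a 7.2K RPM drive are illustrative assumptions, not vendor specifications.

```python
# Back-of-envelope "straw" arithmetic for a single hard disk drive.
# ASSUMPTIONS (illustrative, not vendor specifications):
#   - about 80 random IOPS for a 7.2K RPM nearline drive
#   - about 150 MB/s sustained sequential bandwidth
CAPACITY_TB = 4        # capacity per HDD, from the text
RANDOM_IOPS = 80       # assumed
SEQ_MB_PER_S = 150     # assumed


def access_density(iops: float, capacity_tb: float) -> float:
    """Random IOPS available per terabyte stored on the drive."""
    return iops / capacity_tb


def full_read_hours(capacity_tb: float, mb_per_s: float) -> float:
    """Hours needed to read the whole drive sequentially (decimal units)."""
    capacity_mb = capacity_tb * 1_000_000
    return capacity_mb / mb_per_s / 3600


if __name__ == "__main__":
    print(f"Access density: {access_density(RANDOM_IOPS, CAPACITY_TB):.0f} IOPS/TB")
    print(f"Full sequential read: {full_read_hours(CAPACITY_TB, SEQ_MB_PER_S):.1f} hours")
```

Under these assumptions each terabyte is served by only about 20 random IOPS, and reading the entire drive takes more than seven hours, which is why a single busy drive so easily becomes a hot-spot.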

The challenge for both enterprise clouds and cloud providers is balancing performance across large numbers of magnetic drives. Each HDD is a potential hot-spot, and hot-spots can move around. In a shared cloud environment this leads to four major problems:

- Noisy Neighbors:

- An application that shares access to the drives and makes heavy demands on resources can directly degrade performance for other users of the system.

- If this is a regular occurrence, “clashing” workloads can be separated, but this requires significant performance monitoring by storage administrators.

- In a large cloud environment, noisy neighbor problems show up as random but significant hot-spots of poor performance.

- Security Problems:

- Enterprise clouds, and especially cloud service providers, need to ensure that application administrators see only their own data and where it is stored, and have no access to any information about where other applications' data is stored.

- This capability is usually referred to as multi-tenancy.

- Tenants should have the ability to manage multiple storage pools within their tenancy agreement.

- Storage Management Problems: The major drivers of storage administration effort include:

- Resolving performance issues with specific applications. These are primarily caused by noisy neighbors or by the performance constraints of disk. Solutions can include moving workloads to different storage or using tiering software to promote applications to a higher tier (either higher-performance disk or flash drives). However, tiering software itself requires significantly more storage administration resources.

- Storage administration effort often rises as storage utilization increases, especially above 40%.

- Integrating additional capacity and balancing the workload across a federation of storage arrays add to the management burden.

- Feedback on Storage Resources Consumed: this is especially important to cloud service providers, who need to optimize revenues. This includes:

- Capacity, IOPS and bandwidth consumed,

- The ability to set upper limits on all storage resources used for tenants and storage pools, and guarantee minimum performance levels,

- Relating storage resources consumed to either a specific budget or to chargeback/show-back mechanisms.
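One common way to enforce the upper limits listed above is a token bucket per storage pool, which caps the sustained rate of IOs while allowing short bursts. The sketch below is a generic illustration of that technique; the `TokenBucket` class, the 100 IOPS ceiling and the burst size are hypothetical, and this is not how any particular array implements its QoS ceilings.

```python
from dataclasses import dataclass, field


@dataclass
class TokenBucket:
    """Per-pool ceiling on IOs (or MB) per second; a hypothetical sketch."""
    rate_per_s: float    # sustained ceiling, e.g. 100 IOPS
    burst: float         # largest burst allowed above the sustained rate
    start: float = 0.0   # clock value (seconds) when the bucket is created
    tokens: float = field(init=False)
    last: float = field(init=False)

    def __post_init__(self) -> None:
        self.tokens = self.burst  # start with a full bucket
        self.last = self.start

    def allow(self, now: float, cost: float = 1.0) -> bool:
        """Admit an IO of the given cost at time `now`, or reject it."""
        # Refill tokens for the time elapsed since the last call, up to the burst size.
        elapsed = max(0.0, now - self.last)
        self.tokens = min(self.burst, self.tokens + elapsed * self.rate_per_s)
        self.last = now
        if self.tokens >= cost:
            self.tokens -= cost
            return True
        return False  # over the ceiling: the caller would queue or delay this IO


if __name__ == "__main__":
    # A noisy tenant fires 1,000 IOs at once against a 100 IOPS ceiling.
    noisy_pool = TokenBucket(rate_per_s=100, burst=100)
    granted = sum(noisy_pool.allow(now=0.0) for _ in range(1000))
    print(f"Granted {granted} of 1000 IOs at t=0")  # prints: Granted 100 of 1000 IOs at t=0
```

Because each pool has its own bucket, one tenant's burst cannot consume the headroom of other pools. A ceiling alone does not guarantee minimum performance levels; that requires separate mechanisms.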

Any solution for cloud storage services must cost-effectively solve the problem of noisy neighbors, be secure and private, and minimize storage administration overhead. In addition, it must perform consistently at all utilizations. It should request only information that application developers or business owners can easily provide (e.g., capacity, not RAID level). Every parameter should be revisable up or down, with storage resources made available or released accordingly.
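The request rule above (ask only what owners can answer, and let every value move up or down) can be expressed as a small data model. The `VolumeRequest` class and its fields are hypothetical illustrations; what matters is what is deliberately absent, such as RAID level, drive type or tier.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class VolumeRequest:
    """What a business owner is asked for: no RAID level, drive type or tier."""
    capacity_gb: int
    iops_limit: int
    bandwidth_mb_s: int

    def __post_init__(self) -> None:
        # Reject values a user could not sensibly have meant.
        for name in ("capacity_gb", "iops_limit", "bandwidth_mb_s"):
            if getattr(self, name) <= 0:
                raise ValueError(f"{name} must be positive")

    def revise(self, **changes: int) -> "VolumeRequest":
        """Return a new request with any parameter moved up or down."""
        # dataclasses.replace re-runs __init__, so the same validation applies.
        return replace(self, **changes)


if __name__ == "__main__":
    request = VolumeRequest(capacity_gb=500, iops_limit=2000, bandwidth_mb_s=100)
    print(request.revise(capacity_gb=250, iops_limit=4000))
```

A real service would also check that a capacity reduction does not fall below the data already stored before releasing the space.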

A Blueprint For Low-Cost Enterprise Cloud & Cloud Storage Services

To achieve storage cost structures that compete with public cloud service offerings, cloud storage services should be as simple as possible and aim clearly at the “fat middle” of the workload map, as shown in Figure 1.

Source: Wikibon, 2014.


Enterprise cloud and cloud service providers should make the “fat middle” the primary target of their initial cloud-enabled storage services. Figure 1 plots different types of workload on two axes. The x-axis represents data services, with simple, lower-cost data services on the left and advanced enterprise data services on the right. The y-axis has performance-oriented workloads at the top, requiring high bandwidth, higher IOPS and lower access times to data (latency), and capacity/cost-oriented workloads at the bottom. The fat middle covers most workload types, but not those requiring very high performance or very low cost.

Simplicity comes from:

- Ensuring clear performance guidelines for selecting applications that can use “Fat Middle” storage services. Very-high-performance workloads, which require high levels of storage and DBA effort to manage, should have separate high-touch services.

- Avoiding including applications requiring large amounts of very-low cost storage (e.g., archives) in the “Fat Middle” storage services (applications with small amounts of low-activity data can be included).

- Ensuring all questions asked of users can be easily answered and easily changed (e.g., no questions on RAID type). When resources are deployed, the direct cost and the chargeback/show-back dollars should be available.

- Ensuring all storage services offered do not require any individual high-touch availability services. Applications requiring aggressive RPO/RTO guarantees should not be included in the initial Fat Middle cloud services. If complex storage services are required (e.g., three-datacenter replication, Oracle RAC or GoldenGate implementations), these should not be included as cloud services, at least initially.

- Ensuring the storage platform has very even performance whether there are many users or few users, many small volumes or a few large volumes, and/or there are many or few applications.

- Eliminating any noisy-neighbor effects that require storage management intervention.

- Eliminating tiering software and administration processes where possible. A single tier is much simpler to manage. Higher performance for “edge” applications (e.g., very-low-latency database applications) should be provided by a separate storage service, for instance one based on all-flash arrays.

- Enabling a federation of storage arrays that would permit migration to new technologies without application interruptions and with seamless expansion of capacity.

- Providing a standard backup service for all applications.
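The selection guidelines above can be turned into a simple screening function. This is a hypothetical sketch: the `Workload` fields, the service names and the 10 TB archive cutoff are assumptions chosen for illustration; the 2-millisecond latency boundary echoes the response-time limit the article gives for hybrid arrays. Each organization would set its own thresholds.

```python
from dataclasses import dataclass


@dataclass
class Workload:
    name: str
    required_latency_ms: float        # IO response time the application needs
    archive_capacity_tb: float        # very-low-cost, low-activity data
    needs_aggressive_rpo_rto: bool
    needs_complex_replication: bool   # e.g. three-datacenter replication, Oracle RAC


# ASSUMED cutoffs, for illustration only.
MIN_LATENCY_MS = 2.0    # sub-2 ms workloads go to an all-flash service
MAX_ARCHIVE_TB = 10.0   # large archives go to a low-cost capacity service


def placement(w: Workload) -> str:
    """Return the (hypothetical) service a workload should be placed on."""
    if w.required_latency_ms < MIN_LATENCY_MS:
        return "bespoke high-performance service"
    if w.archive_capacity_tb > MAX_ARCHIVE_TB:
        return "low-cost archive service"
    if w.needs_aggressive_rpo_rto or w.needs_complex_replication:
        return "bespoke high-availability service"
    return "fat middle cloud storage"


if __name__ == "__main__":
    for w in [
        Workload("erp", 5.0, 0.5, False, False),
        Workload("trading", 0.5, 0.0, False, False),
        Workload("rac-cluster", 5.0, 0.0, False, True),
    ]:
        print(f"{w.name}: {placement(w)}")
```

Keeping the screen this blunt is deliberate: anything that fails it goes to a separate, higher-cost service instead of adding options to the Fat Middle pool.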

Selecting Technologies For Low-Cost Enterprise Cloud & Cloud Storage Services

The three main storage hardware options are:

- All-Flash Arrays (AFAs):

- AFAs can provide all of the low-cost management advantages. Many AFAs provide inline de-duplication and compression, which lowers the cost of storage. However, as of May 2014, the price point for AFAs is $3/GB or higher for CAPEX. Over time, all-flash arrays will decline in cost relative to other solutions, but at the moment other solutions such as hybrid arrays can provide significantly lower costs.

- Hybrid Storage Arrays:

- The CAPEX cost for hybrid storage arrays should be significantly lower than $1/GB.

- The hybrid array is a combination of storage controller with DRAM, flash and spinning disk. The flash drives or cards are integrated into the array (e.g., as cache) rather than used as SSDs for main storage.

- Write IOs in hybrid storage arrays are written to protected DRAM or flash and acknowledged very quickly. There should be sufficient controller and bandwidth resources to ensure that workloads with sustained high write IOs do not suddenly revert to HDD IO performance.

- Large-scale caching services in flash and/or DRAM are available for read IOs.

- Many traditional storage arrays are now designed or marketed as hybrid arrays. Many new vendors have introduced specialized storage arrays with innovative architectures.

- Server SAN:

- Server SAN is a new technology that allows the aggregation of storage on servers. This will become an important model for storage services in the future, focusing initially on high-performance and very low cost storage services. However, as of May 2014 Server SAN vendors have not yet reached the level of maturity and simplicity to provide Fat Middle cloud storage services in bulk. This will change rapidly in 2015 and beyond, as new Server SAN software is introduced and battle-tested.

Hybrid storage arrays are currently the best fit for low-cost Fat Middle cloud storage services for enterprises and cloud service providers.
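The price points above can be compared on an effective-cost basis, dividing list price by the data-reduction ratio. The $3/GB AFA figure and the sub-$1/GB hybrid range come from the text; the $0.80/GB hybrid price and the 3:1 reduction ratio are illustrative assumptions, and the sketch ignores any data reduction a hybrid array may also offer.

```python
# Effective CAPEX per GB of logical data, before and after data reduction.
# From the text (May 2014): AFAs at $3/GB or higher, hybrid arrays below $1/GB.
# ASSUMPTIONS: $0.80/GB hybrid price and a 3:1 AFA reduction ratio (illustrative).


def effective_cost_per_gb(list_price_per_gb: float, reduction_ratio: float = 1.0) -> float:
    """Cost per GB of logical data stored after de-duplication/compression."""
    if reduction_ratio <= 0:
        raise ValueError("reduction_ratio must be positive")
    return list_price_per_gb / reduction_ratio


def capex_for(logical_tb: float, cost_per_gb: float) -> float:
    """CAPEX in dollars to store logical_tb terabytes (decimal units)."""
    return logical_tb * 1000 * cost_per_gb


if __name__ == "__main__":
    for label, price, ratio in [
        ("All-flash, no reduction", 3.00, 1.0),
        ("All-flash, assumed 3:1 reduction", 3.00, 3.0),
        ("Hybrid, assumed $0.80/GB", 0.80, 1.0),
    ]:
        per_gb = effective_cost_per_gb(price, ratio)
        print(f"{label}: ${per_gb:.2f}/GB, ${capex_for(100, per_gb):,.0f} for 100 TB")
```

Under these assumptions, even a 3:1 reduction ratio only brings the all-flash array to about $1/GB, which is consistent with hybrid arrays being the lower-cost option for bulk Fat Middle capacity at 2014 prices.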

Hybrid Storage Arrays

It is important to establish whether a simple storage service providing low-cost, consistent response times at all storage utilizations can be achieved for 80% of applications. Examples of hybrid storage arrays (a combination of storage controllers, flash services, and HDDs/SSDs) that are potential candidates include:

- Dell Compellent hybrid storage array,

- EMC VNX storage arrays,

- Fusion-io ioControl hybrid,

- Hitachi VSP,

- HP 3PAR storage arrays,

- NetApp FAS storage arrays (sold by NetApp and IBM),

- Nimble hybrid storage arrays,

- Tegile hybrid storage arrays,

- Tintri hybrid storage arrays,

- ZFS hybrid storage from Oracle.

All of these arrays and many others have excellent installed bases and work well within their strategic fit and price point. Some have superior services in federation (in particular, NetApp and HP 3PAR). Some have excellent tiered storage software, such as the EMC VNX. However, Wikibon selected the IBM XIV as the reference architecture for five reasons:

- The XIV architecture met the characteristics for simplicity (see footnotes for description). In particular, the architecture protects against noisy neighbors and provides consistent performance for multiple simultaneous applications at high utilization. Write performance in particular is fast and consistent, which is important for database applications.

- The XIV fundamental architecture automatically spreads data and load, eliminating the need for optimization software such as tiering. The simplicity of the design reduces storage management and storage administration to a minimum.

- The architecture allows very high utilization without impacting performance, recovers from disk failures with little impact on performance, and allows easy deployment of additional storage arrays and simple workload migration.

- In our discussions with users, XIV was a clear leader in enabling a low staff count to manage this class of storage when the workload was within the strategic fit of XIV. Multiple petabytes can be managed with fractions of a person when the storage operation is simple.

- XIV integrates well into different cloud environments, including VMware vCloud Director, and directly into OpenStack.
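The claim that spreading data across all drives softens the impact of disk failures can be illustrated with simple arithmetic. This is a generic declustered-rebuild model, not a description of XIV internals: it assumes the failed 4-terabyte drive was full and that each participating drive can devote about 50 MB/s to rebuild work alongside normal IO. In a traditional RAID group a single spare drive is the bottleneck; when copies are spread over all drives, the surviving drives share the work.

```python
# ASSUMPTIONS (illustrative): a full 4 TB drive fails, and each participating
# drive can devote about 50 MB/s to rebuild work alongside normal IO.
FAILED_DRIVE_TB = 4
REBUILD_MB_S_PER_DRIVE = 50
TOTAL_DRIVES = 180


def rebuild_hours(data_tb: float, participating_drives: int, mb_s_per_drive: float) -> float:
    """Hours to re-create data_tb of lost copies when the work is shared evenly."""
    data_mb = data_tb * 1_000_000
    return data_mb / (participating_drives * mb_s_per_drive) / 3600


if __name__ == "__main__":
    single_spare = rebuild_hours(FAILED_DRIVE_TB, 1, REBUILD_MB_S_PER_DRIVE)
    distributed = rebuild_hours(FAILED_DRIVE_TB, TOTAL_DRIVES - 1, REBUILD_MB_S_PER_DRIVE)
    print(f"Single spare drive: {single_spare:.1f} hours")
    print(f"Spread over {TOTAL_DRIVES - 1} drives: {distributed * 60:.1f} minutes")
```

The exact times depend entirely on the assumed rates; the point is the shape of the result: each surviving drive carries only a small share of the rebuild, so foreground performance is barely affected.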

These advantages overcame some of the limitations of XIV today, which include:

- Performance is consistent but not fast. The master copy of the data is on disk and access is slow. IO writes are more consistent than reads. Large applications with significant IO requiring low latency and low latency variance may not behave well on XIV and should be supported by an all-flash array.

- For private and public service providers, it is very important that performance is managed and fully monetized while performance guarantees are consistently supported. XIV does an excellent job of providing maximum limits on storage pools for capacity, IOPS and bandwidth. The tools for guaranteeing minimum performance levels are indirect and need improvement to allow automatic management and monitoring.

- Multi-tenancy is not currently available (it is promised in a statement of direction, with delivery expected in 2014).

- The recently introduced RESTful API for automation and orchestration of custom storage operations is not yet battle-tested.

- The recently introduced IBM Hyper-Scale Manager for storage federation is not yet battle-tested.

- The recent introduction of Hyper-Scale consistency groups (probably should not be included in a Fat Middle storage service, at least initially) is not yet battle-tested.

The fundamental architecture of XIV enables good, consistent performance across the board, but it is difficult to give better performance to a specific workload. The XIV flash cache feature can be pointed at specific volumes within applications. However, this is a slippery slope to increasing complexity and storage administration costs. Wikibon recommends keeping the cloud storage simple (e.g., sharing flash cache across all applications) and not using any feature that allows specific workloads to benefit. This will ensure that the cloud storage is as low-cost as possible.

- If a workload does not fall within the strategic fit of the cloud service model as is, it should be placed in an alternative bespoke storage pool with a much higher cost structure. A specially configured XIV or flash-only array might be used to fulfill that requirement.

- Like most other hybrid storage arrays, standard XIV does not deal well with very heavy, high-bandwidth applications or applications requiring very low (<2 millisecond) IO response times. Figure 1 shows the strategic fit of hybrid storage arrays, including IBM XIV. Although in theory these workloads could be accommodated on hybrid arrays, Wikibon recommends keeping the cloud services as simple and low-cost as possible and providing bespoke storage services for the applications that need and can afford them.

The Wikibon analysis is based on extensive knowledge of storage arrays and numerous interviews with practitioners. Wikibon has not run specific benchmarks or bake-offs. Wikibon acknowledges that other hybrid storage arrays could do the job, especially as storage software costs are easily discounted. Wikibon believes that what matters in achieving overall simplicity and low cost is not a plethora of features but what is left out and does not need to be managed.

Conclusions

Figure 2 shows the Wikibon analysis of the potential cost reduction. The enterprise is assumed to have $10 billion in revenue, with a total IT budget of $150 million. The costs are represented as yearly IT budget costs, including either lease or depreciation costs for CAPEX items. The left-hand column represents the typical cost profile of traditional storage arrays, using a tiered storage approach. The right-hand column represents the cost of a best-of-breed hybrid array. The major differences are the cost of storage software ($3.7 million vs. $1.9 million), and support costs ($6.6 million vs. $1.4 million). The support costs are fully loaded, and include server, network and DBA storage work, as well as the work of provisioning storage and implementing new storage.
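The two line items quoted above account for much of the difference between the columns. The arithmetic below uses only figures stated in the text; the column totals are not given here, so the sketch computes only the savings in these two items and their share of the $150 million IT budget.

```python
IT_BUDGET_M = 150.0  # total annual IT budget from the text, $ millions

# Annual cost line items quoted from Figure 2, $ millions.
TRADITIONAL = {"storage software": 3.7, "support": 6.6}
HYBRID = {"storage software": 1.9, "support": 1.4}


def line_item_savings(before: dict[str, float], after: dict[str, float]) -> dict[str, float]:
    """Absolute savings per line item, rounded to $0.1 million."""
    return {item: round(before[item] - after[item], 1) for item in before}


def percent_reduction(before: float, after: float) -> float:
    """Percentage by which a cost falls from `before` to `after`."""
    return 100.0 * (before - after) / before


if __name__ == "__main__":
    savings = line_item_savings(TRADITIONAL, HYBRID)
    for item, saved in savings.items():
        pct = percent_reduction(TRADITIONAL[item], HYBRID[item])
        print(f"{item}: ${saved:.1f}M saved ({pct:.0f}% lower)")
    total = sum(savings.values())
    print(f"Total: ${total:.1f}M, {100 * total / IT_BUDGET_M:.1f}% of the IT budget")
```

Support, at $5.2 million (about 79% lower), is by far the larger saving, which matches the article's emphasis on what does not need to be managed rather than on software price.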

The model predicts that the total cost of tier 1 light storage and tier 2 storage within the strategic fit shown in Figure 1 should be reduced to about 50%, which is in line with public cloud storage offerings with similar performance characteristics. With this approach, Wikibon believes most enterprise IT and cloud service providers can achieve the objective of giving 80% of applications storage at 50% of normal cost. Cloud service providers will specifically need the ability to control and charge on both storage capacity and storage access.

Action Item: Implementing a low-cost enterprise cloud storage service competitive with public cloud offerings is an imperative for enterprises and cloud service providers. Wikibon recommends that practitioners implement a very simple service that covers the fat middle with extremely consistent performance and needs almost no storage administration. These storage services should apply to a high percentage of tier 1 light and tier 2 applications. The scope of the applications supported should be limited, and exceptions should be accommodated on other storage services or platforms rather than diluting the low-cost characteristics of the cloud storage pool. Wikibon recommends hybrid storage arrays with a simple architecture, simple software, and the ability to control and charge independently for capacity and access. Wikibon recommends that IBM XIV be included in any RFP.

Footnotes: Reference Architecture XIV Gen3: Technology Details

XIV is a software-led distributed architecture, using 15 storage processor nodes, each with its own processing and up to 720 gigabytes of DRAM memory. There are 180 7.2K RPM nearline SAS HDDs with 4 terabytes per HDD, giving about 260 terabytes of usable storage. There are up to 12 terabytes of flash cache drives, and a high-speed InfiniBand interconnect. The data is spread automatically and evenly across all the disk drives, with a second copy of data spread over the disks in the same way.
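A rough way to picture the even spreading described above is to map each small data partition to a drive with a hash and to place its second copy on a drive in a different node. The sketch below is a generic illustration of hash-based distribution with cross-node mirroring, assuming 12 drives per node (180 drives across 15 nodes); it is not IBM's actual distribution algorithm, and the partition names are hypothetical.

```python
import hashlib
from collections import Counter

MODULES = 15                 # storage processor nodes, from the text
DRIVES_PER_MODULE = 12       # assumed: 180 drives / 15 nodes
TOTAL_DRIVES = MODULES * DRIVES_PER_MODULE


def _hash(key: str) -> int:
    """Stable 64-bit integer hash of a partition key."""
    return int.from_bytes(hashlib.sha256(key.encode()).digest()[:8], "big")


def place(partition: str) -> tuple[int, int]:
    """Return (primary_drive, mirror_drive); the mirror always sits on another node."""
    h = _hash(partition)
    primary = h % TOTAL_DRIVES
    primary_module = primary // DRIVES_PER_MODULE
    # Choose the mirror node from the other 14 nodes, then a drive within it.
    offset = 1 + (h // TOTAL_DRIVES) % (MODULES - 1)
    mirror_module = (primary_module + offset) % MODULES
    mirror_slot = (h // (TOTAL_DRIVES * (MODULES - 1))) % DRIVES_PER_MODULE
    return primary, mirror_module * DRIVES_PER_MODULE + mirror_slot


if __name__ == "__main__":
    load = Counter()
    for i in range(180_000):
        primary, mirror = place(f"volume-1/partition-{i}")
        load[primary] += 1
        load[mirror] += 1
    print(f"drives used: {len(load)}, min {min(load.values())}, max {max(load.values())}")
```

Because placement depends only on the hash, a volume of any size lands on every drive in roughly equal shares, which is why no single drive becomes a hot-spot and no rebalancing tool is needed.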

This software-led distributed architecture ensures that data is spread evenly across all resources, so performance is very even with many users or few users, with many small volumes or a few large volumes, and with many applications or few applications. It deals superbly with noisy neighbors. It uses low-cost, high-capacity drives, and the functionality is part of the software. The GUI is best of breed in the industry, but the ease of use is more a function of what the user does not need to do than of the GUI itself. One person can manage multiple petabytes of storage.

Write IOs are dealt with very effectively within the architecture. Multiple copies of each write are made to multiple nodes and kept in DRAM until they are written down to the hard disk drives, which hold the master copy of all data. Read IOs are not tiered; they rely on caching algorithms that hold data in DRAM and flash. The DRAM on the nodes forms a shared caching pool connected by high-speed InfiniBand. When a read is not found in cache, the data is fetched from hard disk. These IOs are spread across all disks and do not introduce hot spots. As with all storage arrays, applications with small working sets and cache-friendly behavior work best.
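The point that small working sets and cache-friendly behavior work best can be illustrated with a toy least-recently-used (LRU) read cache. LRU is used here only as a familiar stand-in; the actual XIV caching algorithms are not described in this document, and the cache size and access patterns below are assumptions.

```python
import random
from collections import OrderedDict


def lru_hit_rate(accesses, cache_blocks: int) -> float:
    """Fraction of reads served from an LRU cache holding cache_blocks blocks."""
    cache = OrderedDict()
    hits = 0
    total = 0
    for block in accesses:
        total += 1
        if block in cache:
            hits += 1
            cache.move_to_end(block)        # mark as most recently used
        else:
            cache[block] = None             # miss: read from disk, then cache it
            if len(cache) > cache_blocks:
                cache.popitem(last=False)   # evict the least recently used block
    return hits / total if total else 0.0


if __name__ == "__main__":
    rng = random.Random(42)
    cache_blocks = 1_000
    small = [rng.randrange(800) for _ in range(50_000)]     # working set fits in cache
    large = [rng.randrange(10_000) for _ in range(50_000)]  # working set 10x the cache
    print(f"small working set hit rate: {lru_hit_rate(small, cache_blocks):.2f}")
    print(f"large working set hit rate: {lru_hit_rate(large, cache_blocks):.2f}")
```

With uniformly random reads the hit rate is roughly the cache size divided by the working set, so the second workload sends about nine reads in ten to disk, where response times revert to HDD speeds.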

XIV gives cloud service providers the ability to allocate and charge for storage along three independent vectors: usable capacity, IOPS and bandwidth. Ceilings can be applied to all three vectors within logical storage pools, and tenants can define multiple storage pools. This enables cloud providers to charge for what applications use and to optimize revenues.
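Charging on three independent vectors can be sketched as a bill computed from each pool's metered usage, capped at the pool's ceilings. The rates, the `Pool` fields and the monthly averaging are hypothetical; a provider would substitute its own price list and metering data.

```python
from dataclasses import dataclass

# HYPOTHETICAL monthly rates, for illustration only.
RATE_PER_GB = 0.05      # $ per GB of usable capacity
RATE_PER_IOPS = 0.02    # $ per IOPS of average load
RATE_PER_MB_S = 0.50    # $ per MB/s of average bandwidth


@dataclass
class Pool:
    """A tenant storage pool with ceilings on the three vectors."""
    name: str
    capacity_gb_limit: float
    iops_limit: float
    bandwidth_mb_s_limit: float


def monthly_charge(pool: Pool, used_gb: float, avg_iops: float, avg_mb_s: float) -> float:
    """Charge each vector independently; demand above a ceiling is not billable
    because the array would have throttled it."""
    gb = min(used_gb, pool.capacity_gb_limit)
    iops = min(avg_iops, pool.iops_limit)
    mb_s = min(avg_mb_s, pool.bandwidth_mb_s_limit)
    return round(gb * RATE_PER_GB + iops * RATE_PER_IOPS + mb_s * RATE_PER_MB_S, 2)


if __name__ == "__main__":
    db_pool = Pool("tenant-a/db", capacity_gb_limit=1000, iops_limit=5000,
                   bandwidth_mb_s_limit=200)
    print(f"{db_pool.name}: ${monthly_charge(db_pool, 800, 3000, 100):.2f}")
```

Because the vectors are priced separately, a capacity-heavy, low-activity pool and a small but IOPS-heavy database pool each pay for the resource they actually consume.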

The result is low cost storage with very even, reasonable performance for everybody, extremely easy to use and low-cost storage management, with excellent space, power and cooling costs compared with arrays using high-speed disks.

All storage array software is included in the base price of the storage array.