Premise

The premise of this research is that enterprises can extract the greatest potential value from emerging technologies by deploying them to automate decision support systems, and by implementing this automation as an extension of the existing operational systems. The role of big data and data lakes is to support this automation process. The objective is to change decision support from delivering information to individuals to delivering information to systems. This means moving from analysis that takes days to near real-time analysis that takes milliseconds or seconds.

This change allows organizations to face forward and make most decisions in real-time, instead of facing backwards and steering the organization by its wake.

Revolution?

One view of the future IT world is a new platform, with a complete panoply of features and characteristics. IDC calls this nirvana the 3rd platform, a convergence of disruptive trends in the IT industry “built on mobile devices and apps, cloud services, mobile broadband networks, big data analytics, and social technologies.” Gartner calls it “The Nexus of Forces: Social, Mobile, Cloud and Information.” Cisco and Intel would add the Internet of Things and put more emphasis on the network.

The implication of this thinking is that this 3rd platform nirvana is a necessity for most enterprises for most applications, and that organizational IT should measure progress towards this nirvana as a sole beacon of success. However, it also implies that all platforms should have all the features, and that all these features are valuable to every organization. It implies the necessity of conversion to this new platform, and that old enterprise applications and new enterprise applications will live side-by-side (Gartner’s bi-modal IT) as the old applications are converted or rewritten for the new platform. It implies that an evolutionary “jump” from today’s platforms to this new one is required for “true enablement” of IT.

Evolution

Nobody would disagree with the premise that today is awash with innovation, unlike any other time. Nobody would disagree that mobile, social, cloud and big data are disruptive technologies. Disruptive business models and disruptive technologies abound. However, Richard Dawkins taught us in “The Blind Watchmaker” that evolution is constrained by where you start from, and by the necessity that each successful change is self-sufficient, survivable and competitive. Evolution does NOT try to make something, like an eye. Rather, each successful change is a new starting point for incremental change. The environmental pressures at that time will determine the next successful change or changes.

This evolutionary analysis would suggest there is no point in defining a third platform. A better model is that IT will evolve, sometimes faster, sometimes slower, as organizations and people apply new IT technologies to create sustainable value. Long necks appear in giraffes when there is a consistent benefit of greater access to food that is greater than the cost to the animal of specialization. Each evolutionary step is independent of the previous steps. Not all animals evolve with long necks.

Evolutionary Forces

What should replace this 3rd platform model? MIT’s Erik Brynjolfsson and Andrew McAfee talk about a second machine age, driven by technology becoming more and more capable at taking over human decision making, in the same way that the first machine age replaced human muscle-power with machine muscle-power. In this second age, self-driving cars can replace human drivers, and are safer and more efficient. Smarter algorithms allow systems like IBM’s Watson to play Jeopardy! better than the best human contestants, and allow diagnosis and treatment planning better than the best human doctors. The key aspect of these systems is that very large amounts of information are collected and analyzed from the environment in real time, sufficiently fast to be able to automatically adapt to any change. The data from the car’s sensors have to be detected, analyzed and reacted to within 250 milliseconds, the response time of humans, and fed back into the operational driving system. The response time for winning at Jeopardy! is similar.

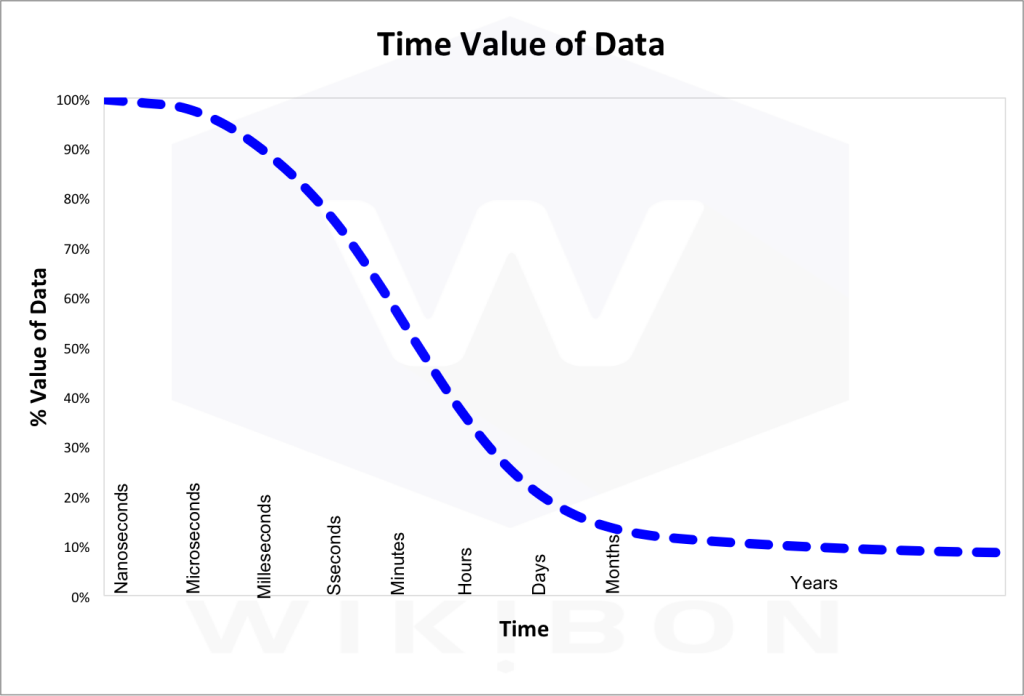

Figure 1 shows how rapidly the value of data declines over time. The key to analyzing the data is to ingest and process it as efficiently as possible using all the resources available. The more data that can be analyzed and pre-analyzed, the better the decision. The fundamental model is that data is analyzed by machines, the decision made by machines, and the resultant action implemented by machines.

Application Evolution

Applications are the largest IT source of business value in every organization. Internal users of an organization and their external customer and partner users create value by using applications. Infrastructure exists to assist development and execute applications. Applications and the application requirements dictate the infrastructure deployed. Equally, advances in infrastructure can improve the quality of applications.

Technologies that have enormous impact on the functionality of applications include cluster and parallel technologies that break requests into parallel streams and reduce time to completion and time to value. They include NAND flash devices that can improve application speed and, more importantly, increase the amount of data that can be processed by operational applications. New MEMS technologies have reduced the cost and size of sensors by orders of magnitude, and have paved the way for the data available to applications from the Internet of Things. New processor technologies such as CAPI and the OpenPOWER Foundation can process 10 times more data in the same time, and new interconnect technologies such as PCIe switches from EMC DSSD and 100-gigabit RoCE (RDMA over Converged Ethernet) from Mellanox can reduce interconnect delays to single-digit microseconds.

Equally impressive are the software building blocks that are exploiting the new technologies. Automated systems such as the Google self-driving car have progressed dramatically over the last few years, and pieces of this technology are already entering the volume car market. Systems of intelligence such as IBM’s Watson and many other new entrants are allowing automated decision support applications to be designed and deployed in days rather than years. New databases have proliferated with new ways of dealing with data, and are manipulating array data rather than table data.

Designing all new application systems so that they can be used on mobile devices goes without saying. Using cloud platforms for deploying SaaS applications, deploying IaaS where it is economical, and looking longer term at PaaS all make sense. Using converged infrastructure to reduce costs and move to a DevOps IT organization makes sense. All of these technologies help IT reduce costs and deploy systems faster. All of these technologies have the potential to save money, especially in the IT budget.

None of these technologies changes the fundamental fact that companies are still sailing blind. The operational systems are working well, and can be made to work better. The decision support systems are not working well. I have yet to meet a CIO who is happy with the enterprise data warehouse implementation; they are unhappy with cost, but even more unhappy with functionality. The objective of today’s decision support infrastructure is to help executives guide the organization by getting a better look at the wake of the ship. These systems make a few people in the organization smart, and those few people rarely use the smartness smartly. Creating more reports quicker and better interactive graphics can help a bit, but does not fundamentally reduce cost. Creating a data lake to do the same job is just a cheaper way of creating a bigger mess.

As stated at the beginning, the premise of this research is that enterprises can extract the greatest potential value from emerging technologies by deploying them to automate decision support systems, and by implementing this automation as an extension of the existing operational systems. This means moving from analysis that takes days to near real-time analysis that takes milliseconds or seconds.

The evolutionary way to implement this type of automation is one small step at a time. This means taking the application where it is, whether it is on a mainframe, a Linux Power system or a Windows Intel system, building models of how data streams can assist the application, and implementing real-time analytics to automate the response back to the application. The area of value will depend on the business and the vertical. For some it will be identifying potential fraud, as in health care insurance. For others it will be optimizing price, or more targeted marketing of products. For many, identifying customer dissatisfaction and reducing churn will be most important. Even agriculture and fishing can benefit from knowing what to grow and when and where to sell. Identifying the areas to automate will be serial and cumulative, and will significantly improve the productivity of the line of business. Early Wikibon studies show that there is a potential to improve productivity by 30% or more. Companies such as Uber and DoorDash have taken this IT approach right from inception.
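As a rough illustration of one such small step, the sketch below scores each incoming claim against recent history and hands a decision straight back to the operational flow. It is a minimal sketch under stated assumptions, not a fraud model: the `Claim` type, the `RollingAnomalyScorer` class, the z-score rule, the window size and the threshold are all hypothetical and chosen only to show the shape of the loop.

```python
from collections import deque
from dataclasses import dataclass
from statistics import mean, pstdev


@dataclass
class Claim:
    """Hypothetical operational record arriving on a data stream."""
    claim_id: str
    amount: float


class RollingAnomalyScorer:
    """Scores an amount by its distance from the recent rolling mean.

    The z-score rule and the default parameters are illustrative assumptions.
    """

    def __init__(self, window: int = 50, threshold: float = 3.0, min_history: int = 5):
        self.history = deque(maxlen=window)
        self.threshold = threshold
        self.min_history = min_history

    def score(self, amount: float) -> float:
        # Until enough history exists, treat every amount as unremarkable.
        if len(self.history) < self.min_history:
            z = 0.0
        else:
            mu = mean(self.history)
            sigma = pstdev(self.history)
            z = abs(amount - mu) / sigma if sigma > 0 else 0.0
        self.history.append(amount)
        return z


def decide(claim: Claim, scorer: RollingAnomalyScorer) -> str:
    """Return the action fed back to the operational application."""
    if scorer.score(claim.amount) > scorer.threshold:
        return "hold_for_review"
    return "approve"


scorer = RollingAnomalyScorer()
amounts = [100, 102, 98, 101, 99, 100, 5000]
decisions = [decide(Claim(f"c{i}", a), scorer) for i, a in enumerate(amounts)]
print(decisions)
```

The point of the design is that the decision is returned to the application while the transaction is still in flight, rather than appearing in a report later; the scoring rule itself could be replaced by any model without changing the loop.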

Thinking in evolutionary terms, the key questions become where and how these principles and capabilities can be applied to applications, especially in organizations and organizational IT. A high-level assessment of current IT concludes:

- Most operational systems work well enough. Orders get placed, invoices are created, bills are paid. Most of them work in real-time or near real-time to some extent; for example an order will “wait” until the item is in stock before it completes the process; an Amazon user can see in real-time if the item wanted is available in blue or red.

- Most decision support systems don’t work well. The current IT processes (e.g., Extract, Transform & Load (ETL or ELT)) mean that most reports are available weeks later. These reports smarten up only a few people about what might have been done better in the past. These few people have little incentive to share this data except to further their own interest. Wikibon research showed an average return of 50¢ for every dollar spent on big data applications. The best evolutionary analogy is that organizations change course by looking backwards at where they have gone, and take days or weeks to correct course. Imagine a shark looking backwards at its wake and watching the fish it might have caught pass by.

- Mobile hardware and applications are working well, and will continue to evolve. Most PCs will become mobile, and the applications on most desktop PCs are being absorbed into the server layer. Social, like human voices, will continue to travel with mobile devices, and communicate via clouds.

- Many application workloads will migrate to public clouds, and many will stay in private clouds. Many private clouds and the applications they support will migrate to mega-datacenters, to be close to the Internet, the Internet of Things and Cloud services. Private and public clouds will form individual and organizational hybrid clouds.

- Data is heavy, and big data is very heavy. Bulk transfer will be avoided for both cost and elapsed time reasons. Edge systems will hold some data, and more centralized clouds will hold other data. An equilibrium will evolve.

The greatest evolutionary force is from the second item in the above list, the improvement of decision support systems. The move towards systems of engagement, and towards systems of intelligence, will generate enormous value for organizations that adopt early, and for the companies and individuals that enable and support them. The data sources are varied, both internal and external. Internal data can come from other internal systems and call-home functionality in products. External data can come from partners, customers, mobile, social and Internet sources, and the nascent Internet of Things. Organizations can use these data sources to help derive algorithms, but sustainable value comes from implementing these algorithms as automated incremental changes to the current operational systems.

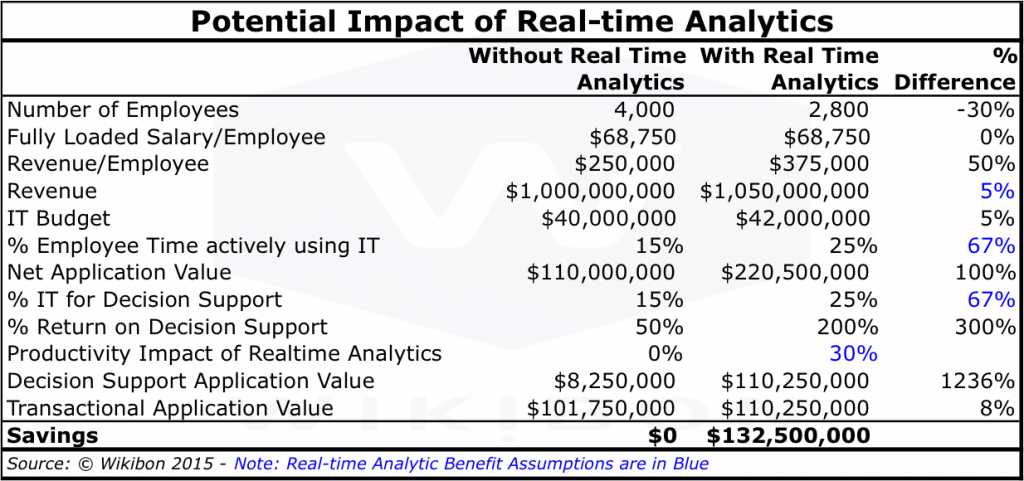

Table 1 is an illustration of the impact of real-time or near real-time analytics on an organization as a whole. The column without real-time analytics reflects the standard Wikibon company, and the business metrics are good approximations to those found in many companies. The revenue is assumed to be $1 billion, the number of employees 4,000, and the revenue/employee about $250K. The assumption is that the total IT budget is about 4% of revenue, $40 million. The application value assumes that employees are as productive using IT as they are at any other part of their job, and that they spend about 15% of their time actively using IT. For knowledge workers that percentage will be higher; for shop-floor workers it may be lower. The application value generated by IT is therefore revenue/employee x 15% x number of employees ($150 million) less the cost of IT ($40 million), which is $110 million. The model assumes that 15% of IT is spent on decision support, and that the percentage return on decision support is half that of operational systems. This calculates out to decision support systems being worth $8 million, and operational systems being worth $102 million. These represent typical results from many IT interviews conducted by Wikibon.
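The baseline arithmetic in the first column can be checked with a few lines of Python. This is a sketch using only the inputs quoted in the text; the function and variable names are illustrative, and percentages are held as whole numbers to keep the arithmetic exact. It stops at the spend split, because the text does not define "percentage return" precisely enough to reproduce the split of value between decision support and operational systems.

```python
def baseline_application_value(revenue, employees, it_budget_pct,
                               time_using_it_pct, decision_support_pct):
    """Recompute the baseline figures from the stated assumptions.

    All *_pct arguments are whole-number percentages.
    """
    revenue_per_employee = revenue // employees
    it_budget = revenue * it_budget_pct // 100
    # Value created while employees are actively using IT.
    gross_value = revenue_per_employee * employees * time_using_it_pct // 100
    # Application value is that gross value less the cost of IT.
    application_value = gross_value - it_budget
    decision_support_spend = it_budget * decision_support_pct // 100
    return {
        "revenue_per_employee": revenue_per_employee,
        "it_budget": it_budget,
        "gross_value": gross_value,
        "application_value": application_value,
        "decision_support_spend": decision_support_spend,
        "operational_spend": it_budget - decision_support_spend,
    }


baseline = baseline_application_value(
    revenue=1_000_000_000,
    employees=4_000,
    it_budget_pct=4,
    time_using_it_pct=15,
    decision_support_pct=15,
)
for name, value in baseline.items():
    print(f"{name}: ${value:,}")
```

With these inputs, decision support accounts for $6 million of the $40 million IT budget and operational systems for the remaining $34 million.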

The column reflecting the impact of real-time analytics starts from the first column and uses the assumptions in blue to calculate the impact on the organization. The application value from IT is doubled, to $220 million, with almost all of that benefit coming from the real-time decision support systems. The bottom line is that by focusing on real-time or near real-time analytics, and using conservative assumptions of 30% improvement in productivity and 5% additional revenue, the value of this evolution is about 13% of revenue, going straight to the bottom line.

Evolution not Conversion

The conclusion from this analysis is that applications should and will evolve. The greatest financial opportunity is to continuously adapt today’s operational applications by adding real-time or near real-time analytics applied directly to the current organizational processes and the applications that support these processes. This is likely to deliver the greatest value to most organizations and, where possible, avoid the risks of converting systems. The benefits of this evolution will be significant market share gain for some, and for others survival in an increasingly competitive marketplace. Studies of organizations that have applied real-time analytics to their current operational systems have shown marked improvements in cost and adaptability, and the assumptions in Table 1 reflect these studies. Wikibon will be sharing the results of this research shortly. The evolution will not stop with the addition of just one or two real-time analytic functions, but will continue to increase the data addressed and evolve greater functionality. The automated evolution of better algorithms is likely to be an important component of future iterations of application systems of intelligence.

Many IT vendors who initially supported the 3rd platform have rethought their strategies, and are more focused on adding value in the short term. An example of a company that has backed away from the 3rd platform is EMC, which originally “over-rotated”; EMC is now focusing on what it calls the 2.5 platform.

Action Item:

Business and IT executives should understand the enormous potential for adding decision automation through near real-time analytics to current operational applications in their organizations. New technologies should be judged by their ability to support near real-time analytics applied to operational systems, and to support incremental improvement over time.