Executive Summary

Server SAN is software-led storage built on commodity servers with directly attached storage (DAS). Wikibon believes Server SAN is poised to disrupt traditional storage architectures over the next decade. As enterprise organizations begin to replicate the infrastructure of hyperscale giants, software will lead a transition where storage function migrates closer to the host. This will occur over a decade while stacks are made more robust and mature. Fundamental to this shift will be the return of direct attached storage (DAS) devices with high speed interconnects and intelligent software to manage coherency and performance. DAS pools will begin to replicate SAN and NAS functionality at much lower cost and provide compelling economic advantages to enterprise customers. In many important application segments (e.g., high performance databases), Server SAN will offer superior performance, functionality and availability over traditional SAN & NAS.

Server SAN has disrupted the hyperscale service provider marketplace, where cost is king. Hyperscale service providers have developed their own solutions to provide enterprise storage services. Amazon, Facebook, Google, Microsoft, Yahoo and others have all developed their own Server SAN solutions, based on commodity servers and disks. As a representative example, Amazon initially developed its S3 (Simple Storage Service) object storage service, and has subsequently introduced Elastic Block Store (EBS) volumes for EC2, Glacier archive storage, and many other storage services. All the Amazon storage services are based on software services on a Server SAN architecture and are used by enterprises to support many types of applications.

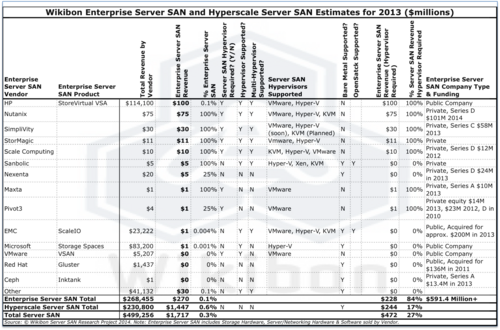

Server SAN is in the very early stages of penetrating the enterprise storage market, with a dozen vendors offering Server SAN solutions (see Table 1 in footnotes).

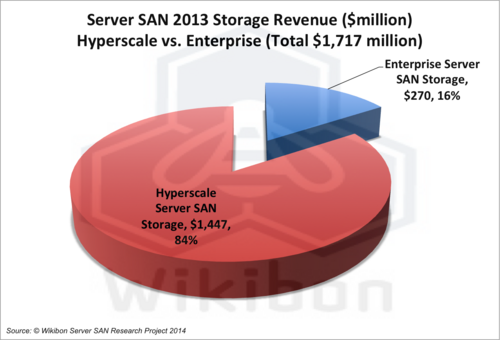

In Figure 1 below, Wikibon assesses the total Server SAN marketplace at the end of 2013 to be about $1.7 billion. Of that, $1.44 billion (84%) comes from hyperscale Server SAN, and $0.27 billion (16%) from enterprise Server SAN.

Source: Wikibon Server SAN Research Project, 2014

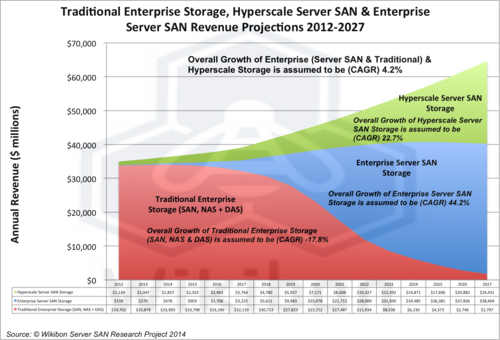

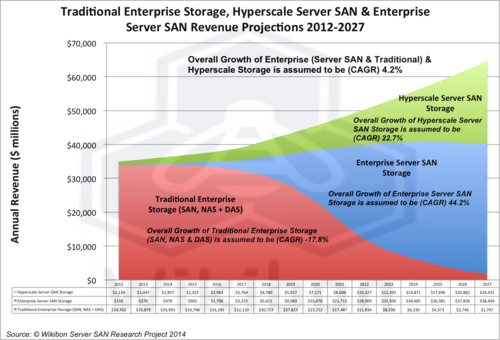

In Figure 2, Wikibon assesses the overall growth of the enterprise storage market to be about 3.9% per year, as represented by the sum of three components (the top line in Figure 2): traditional enterprise storage, enterprise Server SAN and hyperscale Server SAN. Traditional enterprise storage (colored red in Figure 2) is the dominant component with 95% of the market in 2013. This share will decline over the next decade. Enterprise Server SAN (blue in Figure 2) essentially replaces traditional storage, starting in areas where it has unique performance and cost advantages. The growth in the market is projected to come from hyperscale Server SAN (green in Figure 2), as enterprises migrate to mega-datacenters and adopt more hyperscale storage services.

Source: Wikibon Server SAN Research Project, 2014. See Table 1 in Footnotes.

By bringing data closer to the server, Server SAN will be one of the key foundation stones of lower cost and higher function computing.

The Wisdom Of Crowds

Wikibon members are very experienced technologists. Wikibon is invoking the Wisdom of Crowds to help project the adoption rate and market size of Server SAN storage. Earlier this year, Wikibon introduced the concept and definition of Server SAN and discussed some of the reasons why the Server SAN model would be lower cost, higher performance and easier to integrate into a software-led infrastructure. Like all major changes in technology architecture and topology, the change from traditional SAN/NAS storage is likely to take place slowly to begin with. If adoption is successful, the Server SAN market will grow rapidly before slowing again. This Wikibon professional alert presents a first cut at a long-term projection of Server SAN adoption, gives the reasons both for rapid adoption and for adoption friction, and asks for the support of the Wikibon community in improving our projections.

Enterprise & Hyperscale Server SAN 2013

Figure 2 in the Executive Summary shows the 2013 Server SAN market of $1,699 million has two major components:

- Enterprise Server SAN:

- Revenues of $253 million in 2013;

- Addresses the needs of enterprises with support for multiple mixed workloads, enterprise documentation and training, enterprise security and enterprise compliance.

- Hyperscale Server SAN:

- Revenues of $1,447 million in 2013;

- Developed by each of the large-scale hyperscale service providers to meet the needs of their own applications.

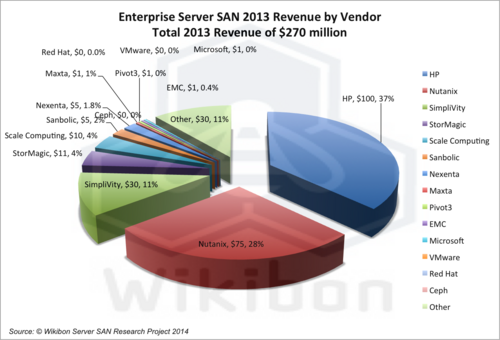

Figure 3 below shows the 2013 enterprise Server SAN market, based on the data in Table 1 in the footnotes, which was collected through Wikibon vendor interviews and other research. The research shows a typical early marketplace, with HP as an established player with 40% market share, Nutanix as an early new-entrant leader with 24%, 11 other early entrants and about $600 million in early funding. Among the 11 are three “whales”, well-funded, well-established technology companies:

- EMC with a recent acquisition of ScaleIO;

- Microsoft with the recent introduction of Storage Spaces in Windows Server 2012, a fast growing Azure hyperscale cloud service and new leadership from Satya Nadella;

- VMware with the recent introduction of VSAN.

Source: Wikibon Server SAN Research Project, 2014. See Table 1 in Footnotes.

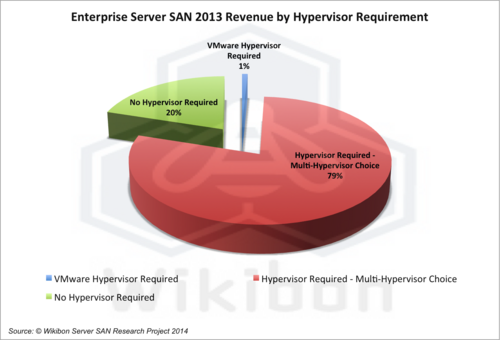

Figure 4 below looks at hypervisors associated with current enterprise Server SAN solutions. It is interesting to note that 80% of the revenue was driven by solutions that depend on hypervisors. VMware’s VSAN supports only VMware, but all the other established hypervisor-dependent enterprise Server SAN solutions support multiple hypervisors. VMware and Hyper-V support dominated, with KVM support increasing.

Source: Wikibon Server SAN Research Project, 2014. See Table 1 in Footnotes.

Figure 5 below shows the Wikibon estimate of 2013 hyperscale Server SAN revenue ($1,447 million) split by vendor. Revenue for each vendor is estimated by taking the total 2013 technology investment estimate, calculating the revenue supported by that investment, and multiplying that revenue by the percentage of spend that is storage related (typically 15%).

Source: Wikibon Server SAN Research Project, 2014
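
The estimation method described for Figure 5 is a chain of multiplications, which can be sketched as follows. This is a minimal illustration rather than Wikibon's actual model: the function name, the `revenue_per_investment_dollar` conversion factor and the input figures are hypothetical, and only the 15% storage share comes from the text.

```python
def estimate_storage_revenue(tech_investment_musd: float,
                             revenue_per_investment_dollar: float,
                             storage_share: float = 0.15) -> float:
    """Estimate storage-related revenue (in $ millions) for one provider.

    tech_investment_musd: estimated annual technology investment, $ millions.
    revenue_per_investment_dollar: hypothetical factor converting investment
        into the revenue that investment supports.
    storage_share: fraction of spend that is storage related (15% in the text).
    """
    supported_revenue = tech_investment_musd * revenue_per_investment_dollar
    return supported_revenue * storage_share


if __name__ == "__main__":
    # Hypothetical inputs, chosen only to show the arithmetic.
    estimate = estimate_storage_revenue(2_000.0, 1.0)
    print(f"Estimated storage-related revenue: ${estimate:,.0f} million")
```

Keeping the conversion factor and the storage share as explicit parameters makes it easy to see how sensitive each vendor estimate is to those two assumptions.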

The data estimates indicate that Microsoft has gained significant traction from its continued investments in cloud services, with Google also well placed. Facebook and AWS are growing fast. There is a likely long tail, with 40% in “other”. Most of these proprietary implementations are not dependent on hypervisors, mainly for performance reasons.

Wisdom of Crowds Action Request: Please put forward estimates of the 2013 Hyperscale Server SAN marketplace.

The Business & Technology Drivers Behind Server SAN

The current Networked Storage SAN/NAS model was itself a reaction to the inefficiencies of the direct attached (DAS) model of the late 20th century. Centralizing storage onto storage arrays attached to multiple servers created significant improvements in storage utilization. Moving applications to different servers is much easier – seconds/minutes vs. hours or days. Over time, functionality previously in the server migrated out to the storage array, e.g., synchronous and asynchronous replication. Switched network capabilities improved, and a complete ecosystem was born around the storage array. The arrays adapted to new technologies such as flash, embraced automatic tiering technologies, and helped ameliorate the overheads of virtualization with complex APIs between the hypervisor and the storage arrays.

The performance of arrays has always centered on caching IO in the storage array controllers. Reads are cached in storage controller DRAM, and writes are cached in small amounts of battery or capacitance protected DRAM. Storage arrays work best with applications that have small working set sizes, have good locality of reference and are “well behaved” in terms of IO request rates. Application design and operational processes and procedures have adapted to the storage array technologies and topologies.

From a business perspective, the choice of storage vendor brought with it a strong incentive to buy the storage management software from the same vendor. Operational procedures are baked into the specific array software. The cost of migration from one vendor to another is very high (see The Cost of Storage Array Migration in 2014), and upgrade prices reflect the vendor lock-in reality.

Virtualization and flash technologies have also highlighted the disadvantages of the fibre networked storage model. When IO latency is measured in tens of milliseconds, and the number of IOs to a disk drive is limited to 100 IOs/second, the overheads of a fibre network are not important. As flash allows orders of magnitude more IO, and potential IO latencies are reduced by a factor of 1,000, the protocol overheads of IO and fibre networks become a bottleneck for new applications. The management of storage in storage arrays is complex to set up and monitor, and even the language of storage (LUNs, ports, RAID, etc.) has resisted simplification.
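
The point about network overhead can be seen with rough arithmetic. The sketch below assumes a fixed, purely illustrative overhead of 100 microseconds per IO for the protocol stack and network (a figure not given in this report) and compares it with a 10-millisecond disk IO and a flash IO that is 1,000 times faster.

```python
def overhead_fraction(device_latency_us: float, network_overhead_us: float) -> float:
    """Share of total IO time spent in the network and protocol stack."""
    return network_overhead_us / (device_latency_us + network_overhead_us)


ASSUMED_OVERHEAD_US = 100.0                  # hypothetical per-IO overhead
DISK_LATENCY_US = 10_000.0                   # 10 ms disk IO
FLASH_LATENCY_US = DISK_LATENCY_US / 1_000   # 1,000 times lower latency

for name, latency_us in (("disk", DISK_LATENCY_US), ("flash", FLASH_LATENCY_US)):
    share = overhead_fraction(latency_us, ASSUMED_OVERHEAD_US)
    print(f"{name}: overhead is {share:.0%} of each IO")
```

Under these assumptions the same overhead that accounts for about 1% of a disk IO accounts for about 91% of a flash IO, which is why it becomes the bottleneck.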

Server SAN has a large number of potential benefits to application design, application operation, application performance and infrastructure cost. These come from an increased flexibility in how storage is mapped to the applications.

- Server SAN allows a variety of network types underneath an overall storage network umbrella. Some examples of sub-networks that would be difficult or impossible to reproduce in a traditional array:

- High Performance Subnet – e.g., a high performance database network could utilize RDMA over an InfiniBand point-to-point network, using PCIe flash cards with atomic writes and NVM compression over multiple servers and software replication services. As discussed in MySQL receives 3X Boost in Performance from PCIe Flash, a high performance database needs lower processor overhead and greater throughput from atomic writes, non-SCSI high performance protocols and writing half the compressed data. It has lower costs because flash storage has four times the longevity with atomic writes and NVM compression, and lower processor costs from fewer IO waits. For the majority of high performance databases the most important aspect is low latency and low latency variance from holding all the active data and metadata in flash, with far fewer IOs, quicker IO reads and writes and more consistent operation times. The resultant database environment allows faster processing times for end-users or contingent processes, and the ability to process two orders of magnitude (100 times+) more data in the same time. This means that modern applications can deliver greater productivity to consumers and enterprise users from faster, more consistent response times and vastly improved applications.

- Big Data Subnet – e.g., use knowledge of where data is stored and the data retrieval latencies to optimize big data applications and help optimizers decide when data should be brought to the application on the server and when the application should be moved to the server next to the data.

- Metadata Subnet – e.g., Process and hold metadata on high fidelity security video (more than 500 million files/day/camera) on storage close to the servers, with the ability to search the historical metadata in seconds for new threats and pull up original tape archives in minutes if necessary.

- Low cost file storage – e.g., define use of low cost low performance disk with low performance Ethernet links and minimal storage services.

- Server SAN can scale out easily by adding commodity servers, storage and network components:

- The development of servers with large numbers of cores makes scaling out easy.

- Current and future x86 server designs have very high bandwidth capabilities and all but obviate the need for special ASICs.

- Making storage software and services independent of hardware will increase competition and lower costs.

- One fundamental architectural problem is that each SAN array has the storage management built into its own architecture. Software-led storage architectures such as Server SAN will address this issue by completely separating the storage itself from the storage management.

- Software-led storage will integrate into software-led infrastructure initiatives such as the orchestration layer of OpenStack. This will lead to storage management being independent from hardware, and allow greater choice and lower software costs.

- Open hardware stacks such as the Open Compute Project, especially together with software stacks like OpenStack, will allow enterprise data centers to be cost-competitive with hyperscale vendors and cloud service providers such as AWS.

- ISVs will have every incentive to write applications for a Server SAN environment, knowing that technology can be brought to bear to solve performance problems. They will be able to create more value by utilizing technology rather than constraining design to meet old storage architectures.

- The simplification of storage will lead to higher levels of convergence, fewer SMEs in the stack, and far lower operational costs. This in turn will allow much more productive and flexible organizational structures such as Dev/Ops and Cloud/Ops to deliver application value to the business.

- The convergence of hyperscale Server SAN and enterprise Server SAN will allow storage solutions to go in either direction, and increase the software choices available to infrastructure architects.

Wisdom of the Crowd Input: Are the above factors real or imaginary? What factors are important, and what factors not important?

The Friction To Adoption Of Server SAN

The current installed base of storage arrays is extremely high. The average length of time a storage array remains in a datacenter is more than 10 years! This gives current storage array vendors room to cut software prices, reduce maintenance costs and reduce upgrade costs. The current application software installed in most data centers will work well enough with the storage array technology. The lock-in of existing vendors on their installed base is real. Flash-only arrays will provide significant performance improvements for many workloads under performance pressure.

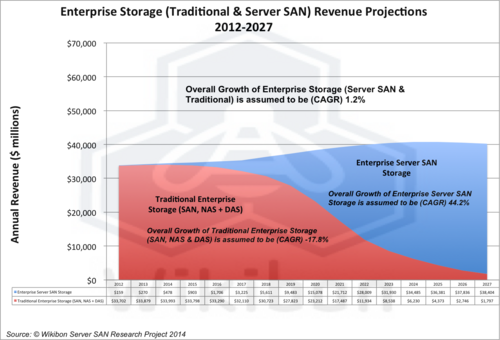

In Figure 6, Wikibon has taken a conservative view of the migration from traditional NAS & SAN to the new topology. The rapid migration to Server SAN is projected to start in 2018. The overall growth of enterprise storage is low (1.2%), because of the growth of hyperscale cloud services, which will be responsible for nearly all the growth in the storage marketplace (4.2%, see Figure 2 above).

Source: Wikibon Server SAN Research Project, 2014

Wisdom of Crowd input: Will Server SAN take more share from the traditional storage array market faster or slower?

Vendor Potential With Server SAN

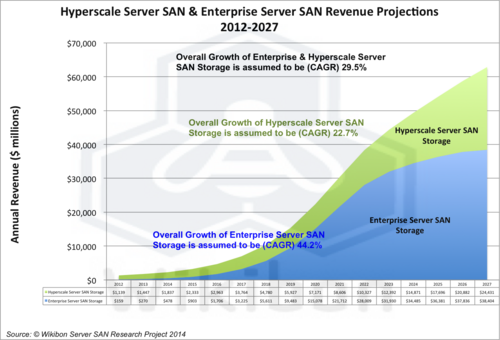

Figure 7 shows the projection for Server SAN revenue, both from hyperscale and enterprise Server SAN. Wikibon projects that Server SAN will become the predominant storage topology by the end of the decade, growing at a compound annual growth rate of 29.5% to over $20 billion and with a long-term potential to grow to over $50 billion. Over time, the software available to the hyperscale and enterprise Server SANs is very likely to merge and create a single market for hardware and software solutions.

Source: Wikibon Server SAN Research Project, 2014
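
As a rough cross-check on the growth projection, the compound growth formula can be applied to the 2013 base of $1,699 million quoted earlier. The sketch below assumes the 29.5% rate applies uniformly every year from that base, which is a simplification of the curve in Figure 7. The function names are illustrative.

```python
import math


def project(base: float, cagr: float, years: int) -> float:
    """Value after compounding `base` at rate `cagr` for `years` years."""
    return base * (1.0 + cagr) ** years


def years_to_reach(base: float, cagr: float, target: float) -> int:
    """Smallest whole number of years for `base` to reach `target`."""
    return math.ceil(math.log(target / base) / math.log(1.0 + cagr))


BASE_2013_MUSD = 1_699.0   # 2013 Server SAN market, $ millions (from the text)
CAGR = 0.295               # compound annual growth rate (from the text)
TARGET_MUSD = 20_000.0     # the "over $20 billion" threshold

years = years_to_reach(BASE_2013_MUSD, CAGR, TARGET_MUSD)
print(f"Years needed to pass ${TARGET_MUSD:,.0f}M: {years}")
print(f"Market after {years} years: ${project(BASE_2013_MUSD, CAGR, years):,.0f}M")
```

Under this uniform-growth assumption the market passes $20 billion in the tenth year, at roughly $22.5 billion.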

The major winners from this technology shift will be the component manufacturers, which will see an increase in component volumes because of the lower markups on storage software from the array vendors and the lower markups from Server SAN solutions. The component winners will be Intel with more x86 chips, Seagate and Western Digital because of short-term magnetic disk volume increases, and flash storage vendors such as Samsung, Toshiba, Micron and possibly Intel because of short- and long-term flash storage growth.

The major software winners will be harder to identify, and the list is longer:

- HP is a leading vendor at the moment with $100 million in revenue. HP will need to work very hard to grow a solution that will be seen as “modern”, and have the functionality required to compete.

- Nutanix and companies like it will need to decide in the long term if they are hardware or software companies.

- VMware has made a major investment in VSAN and has a strong base to sell into. VMware will have great difficulty making its software relevant to any but the smallest of hyperscale cloud providers. It will face some critical decisions on whether to grow its market by allowing VSAN to operate on other hypervisors and other platforms such as OpenStack.

- EMC has acquired a high function software package in ScaleIO. This package will need major resources to make it competitive in the marketplace and will compete directly with EMC’s main revenue providers (VMAX, VNX and XtremIO), which are all networked storage solutions. Joe Tucci will have to protect it strongly and give major resources to it to make it fly. EMC also has the ability to include ScaleIO in its VCE converged infrastructure packages.

- Fusion-io (acquired by SanDisk in July 2014) and others can potentially focus on the high-performance segments of hyperscale and enterprise marketplaces.

- Facebook and other hyperscale providers could release their software as an Open Source project to reduce their development and maintenance costs and make a major impact on the market.

- In Wikibon’s view, the most likely winners will provide solutions to both the hyperscale and enterprise markets. The new Microsoft, which has already shown under Satya Nadella’s leadership that it will not artificially support segments of Microsoft that are not competitive (e.g., Surface), can potentially be a major player in Server SAN software. With its Storage Spaces solution, released as part of Windows Server 2012, and a leading position in the hyperscale market, Microsoft is well positioned. If Microsoft released its Storage Spaces software to other hypervisors and as an open source project, it could position itself to own the storage software stack.

- Other companies such as IBM and Dell will need to focus and to acquire technologies to compete directly but may prefer to focus on the significant services business that will grow around Server SAN.

Wisdom of Crowds Action Request: Esteemed Storage Practitioners – what use have you made of Server SAN – what products/vendors have worked for you?

The Server SAN business is at the start of the first innings. There is time at the moment for all the companies to compete. The winners in Server SAN will probably have emerged in the next two years.

Conclusions & Recommendations

In any technology disruption, the focus of attention is often the technology itself. However, the key question for understanding the likely adoption of a new technology is the potential direct value delivered to an enterprise. Server SAN is an enabler of new application architectures. With today’s SAN architectures, the master copy of data is held in the SAN, at least one millisecond away from the server. Server-based caching technologies can help improve some IO, but at the end of the day the access time to the master copy constrains application throughput and application scope. The faster and more consistent the access time to the master copy(ies) of data, the greater the performance and functionality of the application. The greater the performance and functionality, the greater the contribution of application value to the enterprise.

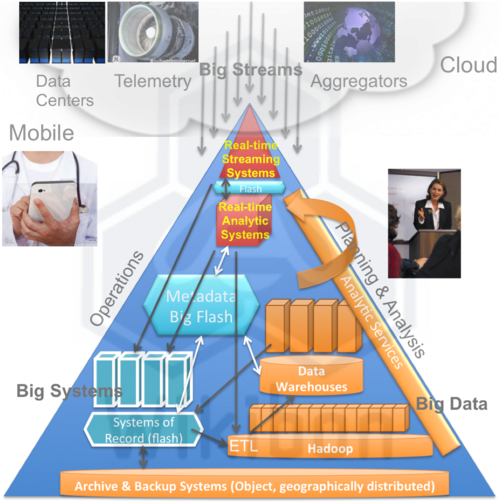

Figure 8 comes from previous Wikibon research on how flash and hyperscale are changing database and system design forever. By bringing data closer to the server and allowing access in microseconds or even nanoseconds rather than milliseconds, thousands of times more data can be processed in the same length of time. Non-volatile memory technologies such as NAND flash, Server SAN high performance subnets, modern x86 server architectures and new techniques such as atomic writes and NVM compression are all compressing the time taken to read and, most importantly, to write the master copy(ies) of data. Traditional SAN architectures cannot ever deliver the same application functionality as Server SAN. SAN architectures have done a good job of supporting applications designed for the hard disk drive but are not a sound foundation for the flash-enhanced applications of tomorrow.

Server SAN will be one of the key foundation stones of lower cost and higher function computing.

Source: Flash and Hyperscale Changing Database and System Design Forever, Wikibon 2013

Action Item: Server SAN is a proven architecture in the hyperscale service provider market. In order to compete with the economics of service providers, enterprise CIOs and CTOs should assume that they will be adopting the same architecture within the enterprise. Focus areas for initial implementations include low cost storage within integrated stacks (e.g., OpenStack on top of Open Compute) and high performance databases with flash.

Footnotes:

Appendix I

Source: Wikibon Server SAN Research Project, 2014