Cisco AI Summit 2026: From AI Possibility to AI Reality

I had the good fortune to attend the Cisco AI Summit 2026 in San Francisco this week. The event brought together policymakers, technologists, investors, and enterprise leaders to examine how artificial intelligence is moving from experimentation to large-scale operational impact. Unlike many AI-focused events centered on model performance or speculative futures, this summit emphasized execution, […]

Feature Management Becomes a Control Plane for Modern Software Delivery

Feature flags are evolving into a delivery control plane as observability connects release decisions to production behavior and business outcomes.

305 | Breaking Analysis | Cisco AI Summit 2026: Making agentic systems real, breaking physical barriers and operationalizing AI

The Cisco AI Summit 2026 was a gift to the industry. There was no registration page, no product announcements, only very subtle Cisco marketing and some really excellent and unscripted conversations. All open. All free. A huge shoutout to Jeetu Patel, Cisco’s President & Chief Product Officer, CEO Chuck Robbins and the Cisco team behind them. Jeetu in particular did an outstanding job moderating the AI Summit 2026 and grinding through the day. Jeetu and Chuck Robbins elevated the entire event with their preparation, sharp insights and ability to draw out candid perspectives from an all-star lineup.

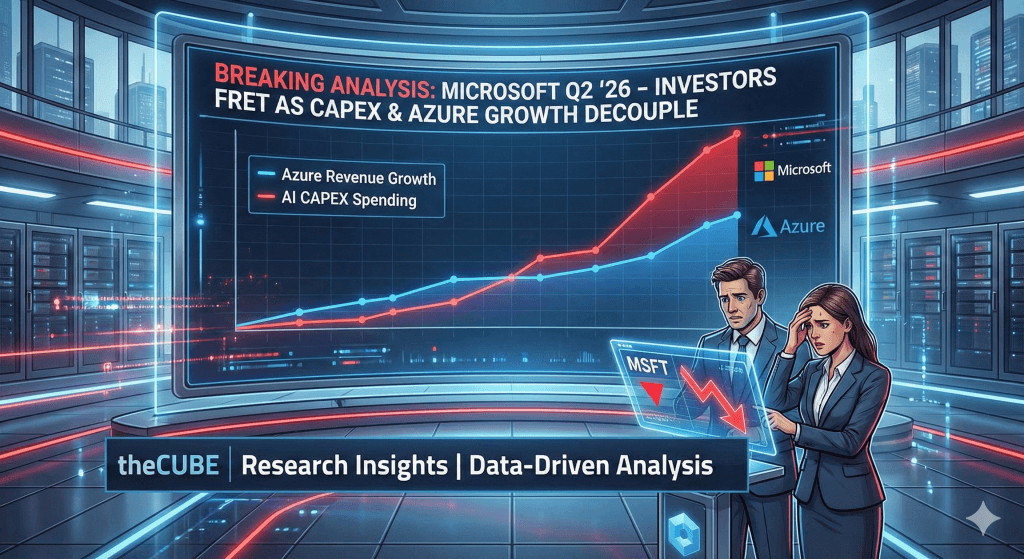

304 | Breaking Analysis | Microsoft Q2 ’26 – Investors Fret as CAPEX & Azure Growth Decouple

Microsoft just delivered what looks on paper like a great quarter, with beats of 1% and 5% on revenue and operating profit, respectively. But the two-day reaction from investors tells a different story, with the stock off double digits from its pre-earnings price. Last quarter, increased capital spending was interpreted as a signal of enthusiasm and confidence. But AI ambition has turned into AI skepticism. Specifically, Microsoft’s CapEx came in higher than expected but Azure growth didn’t. Without a clean bridge from capital spend to clear cloud ROI, Azure growth, despite an impressive performance, has become a sticking point.

How To Build Decision-grade AI Agents You Can Trust and Audit

Enterprises are pushing agentic AI beyond copilots into diagnosis, problem-solving, and decision-making—but trust is now the ROI limiter. In this episode of Next Frontiers of AI, Scott Hebner and George Gilbert explain why LLM-only architectures are reliability traps and outline a practical, three-layer blueprint—LLM+CoT, semantic layers (knowledge graphs), and causal reasoning—to deliver decisions you can verify, defend, and audit.

AI in DevOps Shifts From Faster Delivery to Smarter Decisions

How AI is reshaping DevOps from faster delivery to smarter decisions, improving flow, trust, and technical debt management.

303 | Breaking Analysis | Enterprise Technology Predictions 2026

At the beginning of each year, as is our tradition, we team up with ETR to dig through the latest data and craft ten predictions for the coming year. This year’s prognostication follows the publication where we grade our 2025 predictions. In this Breaking Analysis, we tap some of the most telling nuggets from ETR’s rich data set and put forth our top ten predictions for enterprise tech in 2026.

Twilio and AEG Signal the Platformization of Fan Engagement

Twilio and AEG show how real-time data, communications, and AI are turning fan engagement into a core application platform.

302 | Breaking Analysis | 2026 Data Predictions: Scaling Agents via Contextual Intelligence

More than eight years into the modern era of AI, the industry has moved past the awe of GenAI 1.0. The novelty is gone and in its place is scrutiny. Enterprises are less impressed by demos and more impatient about outcomes. Market watchers are increasingly skeptical of vague narratives, and the commentary has shifted from “look what AI can do” to “show me the money, give me visibility and control.”

This dynamic is backstopped by our central premise that the linchpin of AI is data, and specifically data in context. The modern data stack of the 2010s – i.e., cloud-centric, separation of compute and storage, pipelines, dashboards, etc. – now feels trivial. The target has moved to enabling agentic systems that can act, coordinate, and learn across enterprises with a mess of structured and unstructured data, complex workflows, conflicting policies, multiple identities, and the nuanced semantics that live inside business processes.

The industry remains excited and at the same time conflicted. To tap an oft-cited baseball analogy – the first inning was academic discovery in and around 2017 – papers explaining transformers and diffusion models. But most people weren’t paying attention. The second inning was the ChatGPT moment and the AI heard ‘round the world, which has brought excitement and plenty of hype.