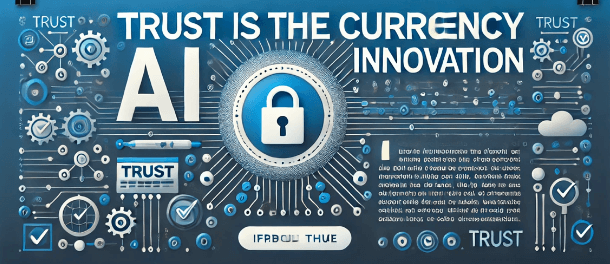

As artificial intelligence (AI) evolves from simple automation to more complex decision-making represented by agentic AI systems, a significant challenge remains: TRUST.

Businesses are becoming increasingly reluctant to depend on AI to make decisions. Many have already indicated that AI adoption will stall without clear, logical explanations of the consequences of suggested actions and outcomes, particularly in areas where compliance, accountability, and risk management are essential.

As the McKinsey & Company 2024 State of AI study reported, over 60% of respondents see inaccuracies, bias, and the concealment of influential factors in AI outcomes as a key inhibitor to using AI in business-critical use cases. Enterprise Technology Research also found that half of enterprises are slowing deployments due to regulatory reporting concerns.

Going forward, TRUST will become the currency of innovation. The more AI is trusted, the more businesses will be willing to “buy into” the innovation promised by Agentic AI.

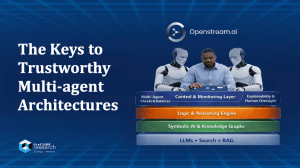

It’s our belief at theCUBE Research that the keys to achieving greater TRUST in AI will become a function of its ability to:

- Transparently explain itself in the language of the user

- Help users understand why one decision is better than others

- Enable users to intervene to test out alternate scenarios

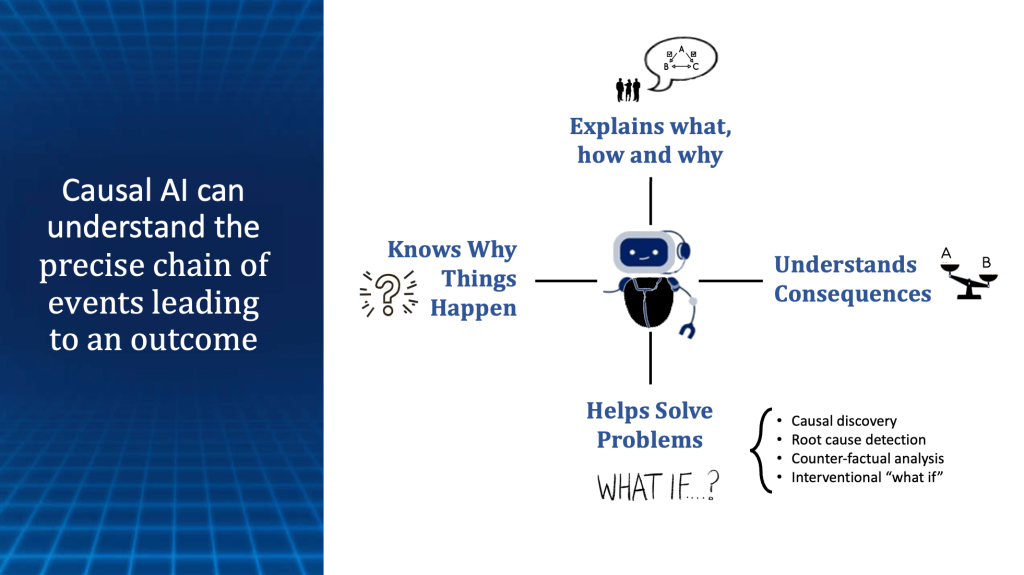

The good news is that several advanced technologies are emerging to support these capabilities. Most notably, causal AI, which can help businesses comprehend the reasoning behind decisions, addresses a major limitation of today’s AI models. This will enable users to understand the exact chain of events leading to an outcome, thereby fostering greater trust in AI outcomes.

In this research note and corresponding podcast, we’ll explore how one innovative company in the mobile marketing and programmatic advertising space, Scanbuy, has achieved 10x ROI by addressing the TRUST issue head-on with the help of causal AI.

Watch The Podcast

In this episode of the Next Frontiers of AI Podcast, Marc Le Maitre, the CTO of Scanbuy, discusses how his organization achieved a 10x ROI in digital advertising campaigns by creating fully explainable and transparent AI models through the emerging innovation of causal AI.

AI That Can Explain Why

While today’s generative AI and LLMs are incredibly impressive, one essential ingredient in decision-making is missing. That missing ingredient is causality and the understanding of why things happen.

Causal AI will enable businesses to do more than create predictions, generate content, identify patterns, and automate tasks. They’ll also be able to play out countless scenarios to understand the consequences of various actions, explain their business’s causal drivers, and interactively problem-solve.

That is, they’ll know WHAT to do, HOW to do it, and WHY certain actions are better than others to shape future outcomes. This is key because predicting something is less valuable than knowing why something happened and what can be done differently to improve an outcome.

Simply put, humans are causal by nature, so AI must also become causal by nature if the goal is to help humans problem-solve and make better decisions by mimicking how humans reason.

To emulate human reasoning, AI must advance beyond its correlational abilities to comprehend “cause and effect” relationships and evaluate the outcomes of potential actions.

Furthermore, with an understanding of cause and effect, AI can explain the precise pathways to an outcome and tell you how variables interacted, their values, and how much they influenced the outcome. This helps to open up the “black box” of today’s AI, providing greater trust and transparency in AI recommendations and outcomes.

Finally, with the help of causal AI techniques, models will become more adaptive to changing conditions and emergent data, enabling them to “learn” things not explicitly derived from their training data and “unlearn” things as a result of future discoveries. This is critical to enabling “digital twins” that adapt as the real world adapts.

For these reasons, AI model architectures will inevitably build upon the foundation of LLMs and generative AI to infuse progressive degrees of semantic and causal reasoning. With a growing list of vendors providing causal AI tools and platforms, the task of infusing causality into AI systems will only become easier as time passes.

Trust in Digital Advertising

Trust has become the currency of success in the evolving landscape of programmatic advertising. As AI-driven models increasingly dictate advertising strategies, the need for transparency and accountability has never been more critical.

The recent discourse among industry leaders highlights a pervasive trust deficit in AI systems, exacerbated by “black-box” algorithms and inconsistent data practices.

For example, as advertisers scale their target audiences, only about 10% come from deterministic datasets, while 90% are probabilistic. These probabilistic audiences are typically generated via “black box” predictive AI models, such as LLMs, which cannot explain themselves and are prone to biases and inaccuracies.

As a result, agency executives lack trust in probabilistic datasets due to a lack of understanding of how they were generated. They fear poor data inputs will lead to suboptimal outputs, increased fraud, and new regulatory compliance challenges. It’s not just agencies that are concerned; brands are increasingly aware of the trust gap, as are other actors in the ad tech value chain.

As Marc Le Maitre, Scanbuy’s Chief Technology Officer, stated,

“We operate in a data enrichment sector that is increasingly regulated, such as with the Digital Services Act in Europe, and we need to find new ways to bring AI transparency and explainability to the industry. If we don’t, using AI in advertising will deliver diminishing returns over the long haul.”

Applying Causality in Ad Tech

The call for transparency is loud and clear; however, the path to achieving it has been elusive. This is why Scanbuy partnered with Howso, a pioneer in understandable AI, to employ causal AI to help programmatic advertisers:

- Understand the causal drivers of effective advertising

- Generate lookalike audiences that are open and auditable

- Make real-time adjustments and model hypotheticals (what if)

- Provide meaningful information about ad creation and target audiences

- Simplify regulatory compliance reporting

Drawing inspiration from the trusted On-Board Diagnostics (OBD) systems in vehicles, which self-report faults and allow for deep inspection, Scanbuy has adopted a similar approach to employ causal AI as the “check engine light” in the ad tech space. By leveraging instance-based learning alongside LLMs that operate in partnership with causal AI models, Scanbuy has provided advertisers with a transparent, auditable system for monitoring trust metrics in AI-driven campaigns.

Their approach is based on a fundamental principle: understanding the causal relationships between variables rather than relying solely on statistical correlations. Unlike traditional AI models that identify patterns from vast datasets, causal AI can predict how changes in one variable will affect outcomes. This facilitates more robust decision-making, as advertisers can test hypothetical scenarios and model the consequences of their actions.

For example, in digital advertising, predictive models may identify a target audience based on correlation. However, these models often lack the ability to explain why a particular group was selected. Causal AI, on the other hand, can analyze the factors that contribute to user engagement and optimize strategies based on cause-and-effect relationships rather than mere correlation. The critical point here is that correlation doesn’t imply causation.
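To make the correlation-versus-causation point concrete, here is a minimal sketch in Python. It is a toy simulation, not Scanbuy's or Howso's method: the variable names, probabilities, and the single hidden "interest" confounder are all assumptions chosen for illustration. Interested users are both more likely to be shown the ad and more likely to engage anyway, so a naive comparison overstates what the ad itself causes. Stratifying on the confounder (a simple backdoor adjustment) recovers something close to the true effect.

```python
import random

def simulate(n=50_000, true_effect=0.05, seed=0):
    """Generate (interested, shown, engaged) rows from a hypothetical ad world."""
    rng = random.Random(seed)
    rows = []
    for _ in range(n):
        interested = rng.random() < 0.5  # confounder: prior interest in the product
        # Targeting shows the ad far more often to interested users.
        shown = rng.random() < (0.8 if interested else 0.2)
        # Engagement is driven mostly by interest, only slightly by the ad.
        p = 0.10 + (0.30 if interested else 0.0) + (true_effect if shown else 0.0)
        engaged = rng.random() < p
        rows.append((interested, shown, engaged))
    return rows

def rate(rows):
    """Share of rows where the user engaged."""
    return sum(engaged for _, _, engaged in rows) / len(rows)

def naive_effect(rows):
    """Correlational estimate: engagement of shown minus not shown."""
    shown = [r for r in rows if r[1]]
    not_shown = [r for r in rows if not r[1]]
    return rate(shown) - rate(not_shown)

def adjusted_effect(rows):
    """Causal estimate: compare within each interest stratum, then
    average the differences weighted by stratum size."""
    effect = 0.0
    for level in (True, False):
        stratum = [r for r in rows if r[0] == level]
        shown = [r for r in stratum if r[1]]
        not_shown = [r for r in stratum if not r[1]]
        effect += (rate(shown) - rate(not_shown)) * len(stratum) / len(rows)
    return effect

rows = simulate()
print(f"naive (correlational) lift: {naive_effect(rows):.3f}")
print(f"adjusted (causal) lift:     {adjusted_effect(rows):.3f}")
```

In this toy world the naive lift comes out several times larger than the true 0.05 effect built into the simulation, while the adjusted estimate lands near it. Real campaigns have many confounders, most of them unobserved, which is why dedicated causal tooling is needed rather than a two-bucket stratification.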

As a result, unlike the traditional black-box models behind today’s LLMs, Scanbuy’s models have achieved unparalleled transparency. The approach also enables real-time adjustments and hypotheticals to ensure that AI models remain aligned with evolving consent and regulatory requirements, tracing decision paths back to the source data. This fosters greater auditability and a culture of accountability and trust.

Furthermore, with new counterfactual analysis capabilities, advertisers can explore “what if” scenarios, gaining insights into decision-making paths and potential outcomes. They can also adapt advertising models in real time by fine-tuning them “on the fly” while ensuring compliance with the latest changes in regulatory guidelines and consumer consent. As a result, Scanbuy can probabilistically scale target audiences by up to 10x without degrading engagement rates, which typically experience up to 50% decay when using “black box” AI models.
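For readers who want to see what a “what if” query looks like mechanically, the sketch below walks through the standard three steps of a counterfactual on a structural model: abduction (infer the unexplained part of what was observed), action (change one input), and prediction (recompute the outcome). The linear equation, its weights, and the `Impression` fields are hypothetical and are not taken from Scanbuy's system.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Impression:
    relevance: float   # how well the ad matches the user, 0..1 (hypothetical)
    frequency: int     # how many times the ad was shown (hypothetical)
    engagement: float  # observed engagement score

# Assumed structural equation, for illustration only:
#   engagement = W_REL * relevance + W_FREQ * frequency + noise
W_REL, W_FREQ = 0.6, -0.05

def predict(relevance, frequency, noise=0.0):
    """Evaluate the assumed structural equation."""
    return W_REL * relevance + W_FREQ * frequency + noise

def counterfactual(obs, *, relevance=None, frequency=None):
    """Answer: what would this impression's engagement have been
    if relevance and/or frequency had been different?"""
    # 1. Abduction: recover the noise term consistent with the observation.
    noise = obs.engagement - predict(obs.relevance, obs.frequency)
    # 2. Action: override only the variables being intervened on.
    new_relevance = obs.relevance if relevance is None else relevance
    new_frequency = obs.frequency if frequency is None else frequency
    # 3. Prediction: re-run the equation with the same noise.
    return predict(new_relevance, new_frequency, noise)

observed = Impression(relevance=0.5, frequency=4, engagement=0.15)
what_if = counterfactual(observed, frequency=2)
print(f"observed: {observed.engagement:.2f}, with frequency=2: {what_if:.2f}")
```

Because the noise term is held fixed, the answer is specific to this impression rather than an average over all users, which is what distinguishes a counterfactual from an ordinary prediction.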

The net outcome is that causal AI techniques helped to drive a breakthrough 10x ROI by achieving the same deterministic audience campaign success rate with a 10x larger audience, with no incremental campaign spending. This resulted from creating a 900K lookalike dataset that performed similarly to the foundational 100K deterministic dataset, representing a significant improvement over the degradation in performance that traditional AI models had delivered. And importantly, they were able to understand and explain the causal drivers of this improved outcome.
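The arithmetic behind the 10x figure can be sketched in a few lines of Python. The audience sizes and the "up to 50%" decay come from the text above; the function name and the simplifying assumption that results scale linearly with the engaged audience at constant spend are ours.

```python
def engaged_audience_multiple(deterministic, lookalike, decay=0.0):
    """Effective engaged audience relative to the deterministic seed alone.

    decay is the fractional drop in engagement rate on the lookalike
    segment (0.0 means it performs like the seed, 0.5 means half as well).
    """
    if deterministic <= 0:
        raise ValueError("deterministic audience must be positive")
    effective = deterministic + lookalike * (1.0 - decay)
    return effective / deterministic

# Lookalikes that perform like the seed (the causal AI result described above).
print(engaged_audience_multiple(100_000, 900_000))             # 10.0
# Worst case cited for black-box models: 50% decay on the lookalike segment.
print(engaged_audience_multiple(100_000, 900_000, decay=0.5))  # 5.5
```

Under these assumptions, the same 1M-person audience yields 10x the engaged reach of the seed when engagement holds, versus 5.5x at the worst-case decay.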

Shaping the Future of Explainable AI

More strategically, Marc Le Maitre sees a broader industry-wide impact:

“We are using LLMs and Gen AI to explain outcomes based on causal models. So we’re actually using LLMs as the gateway, but the reasoning is built on causal models at a very atomic level, achieving almost no hallucination or bias.”

“Without causal AI, we are relying on statistical correlation rather than actual reasoning; there is no way to interrogate or intervene in the decision process to build trust.”

“So, the combination is fantastic. Causal AI built around LLMs is what we are flying our flag on. It’s exactly what the industry needs.”

“It can tell us what caused an ad to be effective or rejected by their target audiences and help us determine if the advertising model is transparent enough to create benchmarks for auditability.”

This makes a ton of practical sense. For example, the European Digital Services Act says that when serving ads, you must show what caused this ad to be served to its target audience, what meaningful information was used to create it, and that you must give the recipient the opportunity to make changes to that ad when appropriate. There is no framework today on how to do that, which is becoming a critical need for digital advertising players. Causal AI may represent the key to demonstrating how auditable programmatic advertising can be across the algorithmic and decision-making process.

Marc’s view:

“If you don’t do this, you end up with model collapse or dementia. Over time, models start losing information about the true distribution. Learned behaviors start converging to a point estimate with very small variance.”

“If you don’t have causality in your model, it’s like taking a cake and trying to un-bake the sugar from it. As the model becomes less accurate, there is simply no way to unlearn things, and thus, you would need to start over again.”

His experience is that Causal AI enables meaningful reinforcement learning with humans in the loop to improve ad effectiveness by understanding causal drivers of action and understanding the pathways to better outcomes. When a user sees an advertisement they don’t like, the causal model can understand why and rebuild itself to be better, or “unlearn” what it thought was a truth.

“This is transformational if applied correctly. Creating transparent and explainable open-lookalike advertising models will transform the industry and likely reduce regulatory friction. It will democratize the industry at its core.”

“The north star for AI should be reasoning. If the goal is to make AI systems work like human intelligence, then causality is not an optional feature—it’s an inevitable step in AI’s evolution.”

The innovation at Scanbuy, in partnership with Howso, promises to set new standards for transparency and trust in programmatic advertising. Just as On-Board Diagnostics (OBD) systems have become a trusted standard in automotive diagnostics, this emerging approach to AI offers advertisers a reliable, transparent framework for navigating the complexities of modern ad tech. As the industry moves forward, those who prioritize trust and transparency will lead the way in delivering meaningful, compliant advertising experiences.

Based on their success, Scanbuy is now working with the IAB Tech Lab to position its approach to create industry standards for open and auditable AI advertising. This initiative would ease the complex regulatory landscape and foster greater trust among consumers and advertisers.

Read the full Scanbuy use case and download the Howso Causal AI starter kit to learn more.

The Future of AI Decision-Making

As AI evolves into agentic AI systems, its ability to explain and justify decisions will become increasingly important. The emergence of Causal-RAG (Retrieval-Augmented Generation with Causal AI) is set to further enhance AI’s capabilities by combining large-scale knowledge retrieval with causal inference. This new approach ensures that AI-generated responses are not only relevant and connected to other pertinent information but also grounded in causal reasoning, thereby reducing the risk of bias and misinformation. Again, it not only ensures that correlation is not confused with causation but also ranks the influences on an outcome with greater transparency.

As regulatory pressure increases and consumer expectations for AI accountability rise, businesses will be compelled to create more trustworthy AI systems that improve AI-driven decision-making.

Our bet is that causal AI will emerge as the key ingredient in enabling AI decision-making agents. By moving beyond correlation-based AI, businesses will increasingly rely on more transparent, reliable, and effective AI-driven decision-making systems rooted in the principles of causality. As 2025 unfolds, trust will become the currency of AI innovation, and causal AI will be key to earning it.

This is why the marketplace is speaking louder and louder about causal AI.

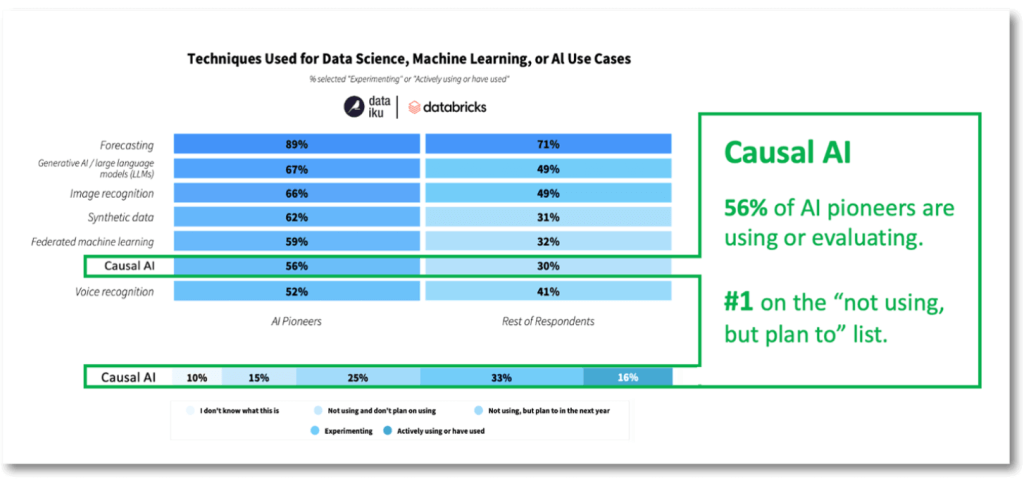

A survey of 400 senior AI professionals by Databricks showed that among AI pioneering companies, 56% were already using or experimenting with causal AI. In addition, among the total population of the survey, causal AI was ranked as the #1 AI technology “not using, but plan to next year.” The study reported that 16% are already actively using causal methods, 33% are in the experimental stage, and 25% plan to adopt. Overall, 7 in 10 will adopt Causal AI techniques by 2026.

The true competition will turn from applying Gen AI toward creating integrated AI model ecosystems that not only fix the limitations in today’s Gen AI but open up countless new possibilities by incorporating both semantic and causal reasoning technologies.

Companies that recognize this change early—by focusing on building value on these Gen AI platforms—will lead the way in AI innovation and ROI.

What to Do and When

The Scanbuy use case illustrates one of the countless ways causal AI will reshape how businesses solve complex problems. Stay tuned for a series of additional research notes that will further explore high-ROI use cases and the vendors democratizing causal reasoning for the masses by delivering new methods, tools, and platforms.

Perhaps the time is now to start preparing. We’d recommend you:

- Build competency in knowledge graphs and causal AI

- Evaluate the impact of causality on your use cases

- Engage with the array of vendors that provide causal AI solutions

- Experiment with the technology and build talent


Thanks for reading. Feedback is always appreciated.

As always, if we can help you on this journey, contact us by messaging me on LinkedIn.