In this episode of the SecurityANGLE, I’m joined by Zscaler’s Chief Security Officer, Deepen Desai, to discuss the findings in the Zscaler ThreatLabz 2024 AI Security Report. The report draws on more than 18 billion transactions processed by the company’s cloud security platform, the Zscaler Zero Trust Exchange, between April 2023 and January 2024.

In the Zscaler ThreatLabz 2024 AI Security Report, researchers examined how AI/ML tools are being used across the enterprise, then went deeper, mapping trends across sectors and geographies. They looked at how companies are thinking about AI, how they are integrating it into their business operations, how they approach security around the use of AI tools, and what risks generative AI brings and how organizations are addressing them. All of these are top of mind for many business leaders today.

Zscaler’s value proposition centers on accelerating digital transformation (DX) so that customers can be more agile, efficient, resilient, and secure. The Zscaler Zero Trust Exchange platform protects thousands of customers from cyberattacks and data loss by securely connecting users, devices, and applications in any location. Distributed across more than 150 data centers globally, the SSE-based Zero Trust Exchange™ is the world’s largest in-line cloud security platform.

As Chief Security Officer, Desai is responsible for global security research operations and works with Zscaler’s product teams to ensure security across the Zscaler platform.

Watch our conversation — Unpacking Zscaler ThreatLabz 2024 AI Security Report — here, or stream it wherever you stream your podcasts:

Thoughts on Leading Security Operations

Leading security operations is not for the faint of heart, and that’s even more true today with the advent of generative AI and the rapid transformation we’re seeing as organizations rush to embrace it. Desai shared his thoughts on the many opportunities AI presents and its role in meaningfully transforming security operations, including using AI to visualize and quantify top-down risk and prioritize remediation in ways that simply weren’t possible before. Enterprises are harnessing generative AI for cybersecurity by combining vast log data sets and streams into unified AI data fabrics and using them to transform security outcomes. Complexity is a given, but it’s an exciting time to be in security.

Challenges Facing Customers on the AI Security Front

As they work to secure operations, enterprises face some big decisions, and my conversation with Desai explored those challenges. Part of our conversation centered on whether to enable AI apps for productivity and which apps should initially be blocked for data protection purposes. Shadow AI is a very real threat, and keeping pace with the rapid proliferation of SaaS applications while tempering the excitement around going all in on generative AI is a daily challenge for security operations leaders. Developing an AI strategy and an AI security strategy, and putting the right guardrails in place to enable and accelerate transformation efforts while minimizing the security risks AI poses, is a delicate dance.

The Zscaler ThreatLabz 2024 AI Security Report

Some key findings from the Zscaler ThreatLabz 2024 AI Security Report confirm what we already know: AI is quickly being woven into the fabric of enterprise life, as well as across the small and midmarket sectors. As expected, AI security remains a primary concern. As we discuss often on this show, security was already a challenge, if not the most significant challenge, for CSOs and CISOs before the advent of gen AI, and today it’s even more pressing.

As we discussed the report, Desai shared thoughts on security and risk: “Security and risk with AI come in two flavors. The first is the risks that enterprises take in building and integrating AI tools within their own business, which involves the risk of leaking private or customer data, the security risks of AI applications themselves, the challenges in enabling secure user access to the right AI tools, among other concerns like data poisoning. That’s where we see the rise in blocked transactions; from that security officer mindset, enterprises are taking concrete steps to prevent their users from engaging with riskier or unapproved AI applications, limiting their risk where the odds of leaking proprietary data may be higher — think an engineer asking a gen AI tool to refactor source code or a finance employee leaking stock-impacting financial information.

“On the other hand, there are the external, outside-in threats posed by cybercriminals using AI tools. To speak to that part of your question, AI is enabling virtually every kind of cyberattack to be launched faster, in more sophisticated ways, and at a larger scale.”

Some other interesting data points from the Zscaler ThreatLabz 2024 AI Security Report include:

AI/ML Usage Skyrocketing. One of the first things that jumped out from the report was AI/ML usage skyrocketing by 594.82%, rising from 521 million monthly AI/ML-driven transactions in April 2023 to 3.1 billion by January 2024.
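As a quick arithmetic check, using the rounded figures quoted above (the report’s exact transaction counts may differ slightly), dividing January’s monthly volume by April’s gives:

```latex
\frac{3.1 \times 10^{9}}{521 \times 10^{6}} \approx 5.95
\qquad\Longrightarrow\qquad
\left( \frac{3.1 \times 10^{9}}{521 \times 10^{6}} - 1 \right) \times 100\% \approx 495\%
```

Read this way, January’s volume is roughly 595% of April’s, which lines up with the 594.82% figure; expressed as a percentage increase over April, the jump works out to roughly 495%.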

Most Widely Used AI Apps by Transaction Volume. Not surprisingly, the most widely used AI applications by transaction volume are ChatGPT, Drift, OpenAI, Writer, and LivePerson, while the three most frequently blocked applications by transaction volume are ChatGPT, OpenAI, and Fraud.net.

Blocking Applications Is a Common Enterprise Security Strategy. ChatGPT is the application enterprises block most often, yet its use continues to soar, growing a massive 634.1%.

Blocking remains a foundational part of AI security operations as organizations work to implement AI policies, guidelines, and guardrails. Enterprises blocked 18.5% of all AI/ML transactions over a nine-month period.

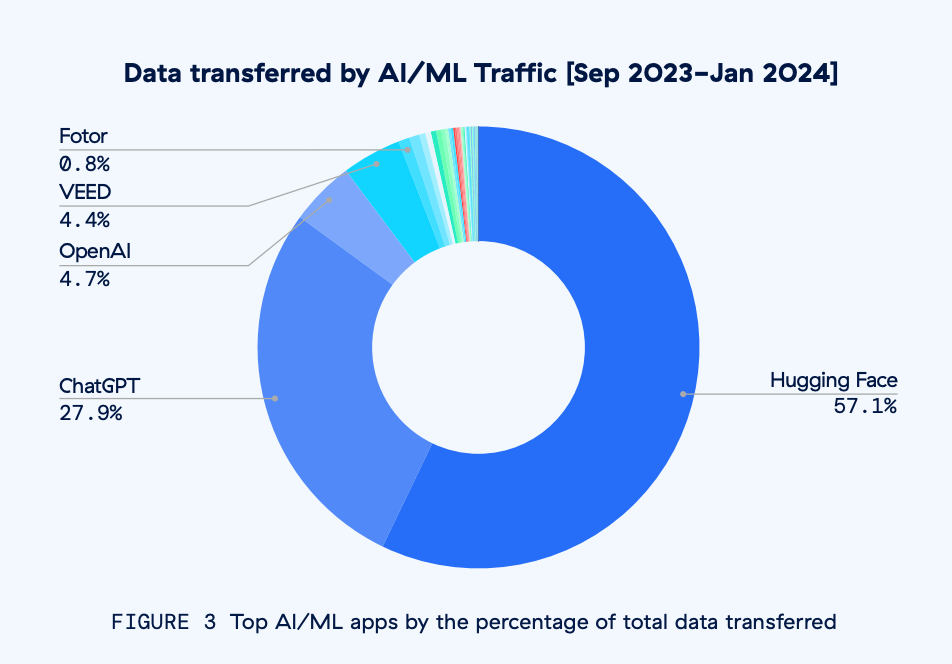

Data Volumes Are Exploding. The report showed that enterprises are sending large volumes of data to AI tools, with 569 terabytes of data exchanged with AI/ML applications between September 2023 and January 2024. Here’s a look at the AI/ML applications sending the most data:

Note that Hugging Face, an open source AI developer platform, accounts for almost 60% of enterprise data transferred by AI tools. This is to be expected, since Hugging Face is used to host and train AI models.

AI and Cyber Threats. As we’ve discussed in this series many times before, alongside the benefits organizations can gain from adopting generative AI, AI also hands cyber threat actors powerful new capabilities. Phishing, smishing, social engineering, deepfake video creation, ransomware, automated exploit generation, enterprise attack surface discovery: cybercriminals have much to gain by embracing generative AI.

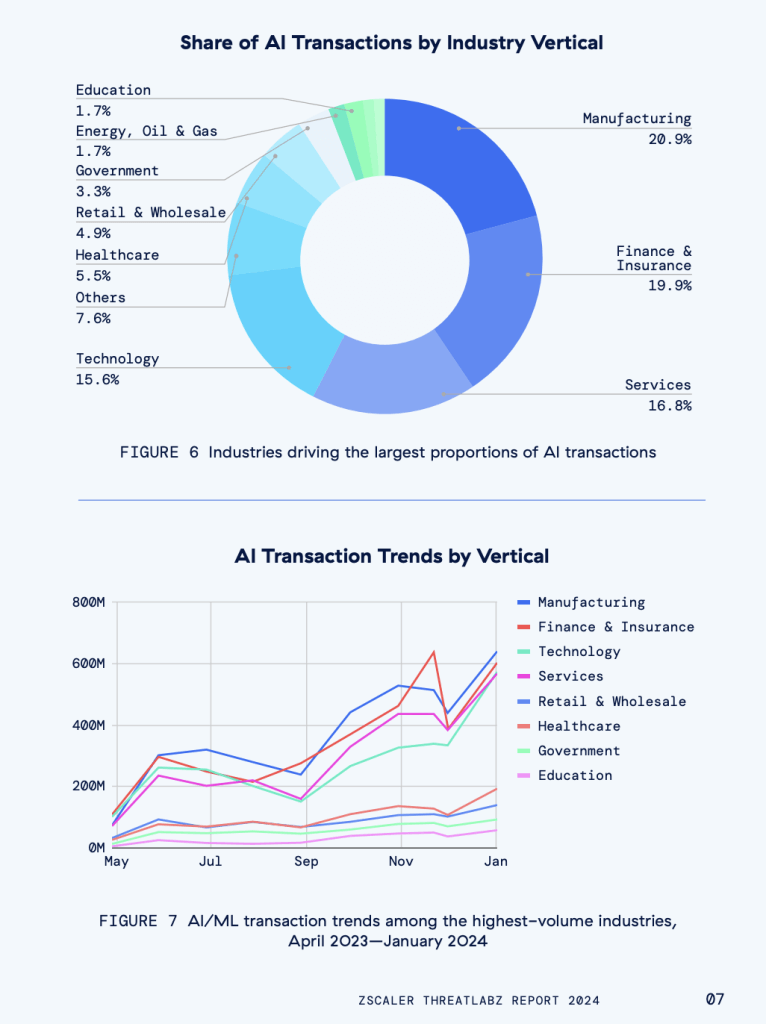

The Zscaler ThreatLabz 2024 AI Security Report also provided an industry-specific breakdown of AI usage that I found interesting. Manufacturing leads with the largest volume of AI/ML traffic, followed by Finance & Insurance, Technology, Services, Retail & Wholesale, Healthcare, Government, and Education. Take a look at that breakdown here:

Protecting Attack Surfaces and Data Management Remain Challenging

AI is being used at every stage of the attack chain, from sussing out vulnerabilities in an organization’s external attack surface to generating automated exploits for them. AI also takes phishing emails and smishing messages to a whole new level, making it easy to produce highly persuasive, grammatically correct messages. Desai shared that, for the report, ThreatLabz used ChatGPT to create a fake Microsoft login page in as few as seven prompts. We can also expect to see more sophisticated ransomware, as well as AI modules developed specifically for exfiltrating data.

AI is making it much easier to discover and exploit any public-facing vulnerability in an enterprise attack surface, which includes exposed applications, servers, VPNs, and more. As if all this isn’t enough to be concerned about, Desai mentioned that he is most concerned with the “unknown unknowns” — the AI threats that will inevitably evolve in the near future, which we’ve not even thought about yet, much less have any idea how to manage and mitigate.

Desai cautions that putting secure controls around data used with AI should be a top priority; failing to do so could expose data and put the organization at risk. He noted that researchers have recently disclosed security flaws in major AI-as-a-Service providers, one of which accounted for more than 50% of the data enterprises shared with AI tools, according to the Zscaler ThreatLabz 2024 AI Security Report. More broadly, Desai advised enterprise security leaders to think carefully about what data can leave their organizations, intentionally or not, when it is sent in a query to a gen AI tool. This is where data loss prevention (DLP) tools, which we’ve discussed often in this series, are more important than ever. DLP solutions are designed to help prevent proprietary data, customer data, PII, financial data, and more from being entered into AI tools.
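To make the DLP idea more concrete, here is a minimal Python sketch of a check that inspects a prompt before it is sent to an AI tool. It is an illustration under stated assumptions, not Zscaler’s DLP engine or any product’s API: the helper names (`scan_prompt`, `should_block`, `luhn_valid`) and the detection rules (email addresses, US Social Security numbers, Luhn-valid payment card numbers, and a "confidential" marker) are all hypothetical.

```python
import re
from typing import List

# Hypothetical detection rules for illustration only; production DLP engines
# use far richer dictionaries, classifiers, and exact-data matching.
PATTERNS = {
    "email": re.compile(r"\b[\w.+-]+@[\w-]+\.[\w.-]+\b"),
    "us_ssn": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
    "confidential_marker": re.compile(r"\b(confidential|internal only)\b", re.IGNORECASE),
}
# Runs of 13-19 digits, optionally separated by spaces or dashes.
CARD_CANDIDATE = re.compile(r"\b(?:\d[ -]?){13,19}\b")


def luhn_valid(number: str) -> bool:
    """Return True if the digit string passes the Luhn checksum."""
    total = 0
    # Walk from the rightmost digit, doubling every second digit.
    for i, ch in enumerate(reversed(number)):
        d = int(ch)
        if i % 2 == 1:
            d *= 2
            if d > 9:
                d -= 9
        total += d
    return total % 10 == 0


def scan_prompt(prompt: str) -> List[str]:
    """Return the sorted names of sensitive-data categories found in a prompt."""
    findings = {name for name, rx in PATTERNS.items() if rx.search(prompt)}
    for match in CARD_CANDIDATE.finditer(prompt):
        digits = re.sub(r"\D", "", match.group())
        # The checksum filters out digit runs that merely look like card numbers.
        if 13 <= len(digits) <= 19 and luhn_valid(digits):
            findings.add("payment_card")
    return sorted(findings)


def should_block(prompt: str) -> bool:
    """Block the outbound AI request if any sensitive category matched."""
    return bool(scan_prompt(prompt))
```

For example, `scan_prompt("Customer jane.doe@example.com, SSN 123-45-6789")` flags `email` and `us_ssn`, so `should_block` would stop that prompt before it reaches the AI tool, while an innocuous request such as "Refactor this function for me" passes through.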

Advice from a CSO to Other Security Leaders on Getting Their Arms Around AI

Desai left us with some advice for enterprises and his fellow security officers on how best to get their arms around AI, harness its massive capabilities, and keep the organization secure. As a data protection best practice, he suggested that CSOs might do well to start by blocking all AI applications across the organization and then selectively allowing vetted, approved applications for internal use.

The rest of the AI journey should focus on securely enabling AI and gaining complete visibility into the AI apps in use across the organization. Security teams need granular access controls that give the right users, teams, and departments access to the right AI tools. They also need secure controls to protect their internal LLMs and data protections in place for their third-party AI applications. Once these key elements are in place, enterprises will have a robust security foundation on which to spur innovation and transform their businesses with the power of AI.
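The block-by-default, allow-selectively approach Desai describes, combined with user-, team-, and department-level controls, can be sketched as a small default-deny allowlist. This is a hypothetical Python illustration of the logic only; the class names, app names, and rule structure are assumptions and do not reflect Zscaler’s policy configuration. An AI app is allowed only if it has been explicitly vetted and the requesting user matches a rule at the user, team, or department level.

```python
from dataclasses import dataclass, field
from typing import Dict, Set


@dataclass(frozen=True)
class User:
    name: str
    team: str
    department: str


@dataclass
class AppRule:
    """Who may use one vetted AI app; empty sets grant nothing at that level."""
    users: Set[str] = field(default_factory=set)
    teams: Set[str] = field(default_factory=set)
    departments: Set[str] = field(default_factory=set)

    def permits(self, user: User) -> bool:
        # A match at any granularity (user, team, or department) grants access.
        return (
            user.name in self.users
            or user.team in self.teams
            or user.department in self.departments
        )


class AIAccessPolicy:
    """Default-deny policy: every AI app is blocked unless explicitly vetted."""

    def __init__(self) -> None:
        self._allowlist: Dict[str, AppRule] = {}

    def approve(self, app: str, rule: AppRule) -> None:
        """Record a vetted app together with who may use it."""
        self._allowlist[app] = rule

    def is_allowed(self, user: User, app: str) -> bool:
        rule = self._allowlist.get(app)
        # Unvetted apps fall through to the block-by-default stance.
        return rule is not None and rule.permits(user)
```

As a usage example, approving a hypothetical `internal-code-assistant` with `AppRule(teams={"platform-eng"})` lets members of that team use it while everyone else, and every app never passed to `approve`, stays blocked. Keeping the deny decision as the fall-through case means a newly launched AI app is blocked until someone deliberately vets it, which mirrors the "block all, then allow" starting point.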

Five Key Questions to Ask to Inform and Drive Development of a Secure AI Strategy

Given the extremely rapid pace of innovation in AI, Desai urges enterprises to be proactive and take steps now to secure their AI tools. The more time that passes, the harder it will be to contain shadow AI sprawl, leaving you at a disadvantage and playing catch-up. Developing an AI strategy and clear AI policies, establishing and communicating expectations with employees, and maintaining secure, robust controls around critical data and AI tools are all table stakes for organizations today. Desai believes a crawl-walk-run approach is the way forward and that prioritizing goals and objectives is a key part of any AI strategy. He suggests enterprise leaders ask themselves these five key AI-related questions to identify gaps and inform a secure AI strategy:

- Do we have deep visibility into employee AI app usage?

- Can we create highly granular access controls to AI apps at the department, team, and user levels?

- Is DLP enabled to protect key data from being leaked?

- What security controls do we have in place to protect our internal LLMs?

- What data security measures do we have in place for third-party AI apps?

This information should provide a good foundation and solid insights into next steps for developing a secure AI strategy. And because of course you’ll want it, here’s a link to download the Zscaler ThreatLabz 2024 AI Security Report.

If we haven’t connected on LinkedIn yet, find and connect with Deepen Desai and me there. Be sure to send any comments or questions our way—we’d love to hear from you.