Breaking Analysis: Enterprise Software Download in the Summer of COVID

Enterprise software markets are dominated by mega-cap companies like SAP, Oracle, Salesforce and Microsoft. SaaS leaders like ServiceNow and Workday have solidified their market positions largely by replicating the Salesforce model in their respective domains. There is still room for innovative upstarts that are introducing new cloud-native services and value […]

Breaking Analysis: Tectonic Shifts Power Cloud, IAM & Endpoint Security

Cloud, IAM & Endpoint Security are rocking the markets. Over the past 150 days, everyone in the technology industry has become an expert on COVID in some way, shape or form. We have all lived the reality that COVID-19 has accelerated, by at least two years, many trends that were in motion well before the […]

Breaking Analysis: Cloud Remains Strong but not Immune to COVID

While cloud computing is generally seen as a bright spot in tech spending, the sector is not immune to the effects of COVID-19. It’s better to be cloud than not cloud, no question, but recent survey data shows that the V-shaped recovery in the stock market looks […]

Breaking Analysis: RPA Competitors Eye Deeper Business Integration Agenda

RPA competitors vie for a bigger prize… RPA leads all sectors in spending intentions. Robotic process automation (RPA) solutions remain one of the most attractive investments for business technology buyers. This is despite our overall 2020 tech spending forecasts, which remain at the depressed levels of -4% to -5% for the year. Relative to previous surveys, […]

Breaking Analysis: Five Questions Investors are Asking about Snowflake’s IPO

According to reports, Snowflake recently filed a confidential IPO document with the U.S. Securities and Exchange Commission. Sources suggest that Snowflake’s value could be pegged as high as $20B. In this week’s Breaking Analysis we address five questions that we’ve been getting from theCUBE, Wikibon and ETR communities. ETR’s Erik Bradley provides data and insights […]

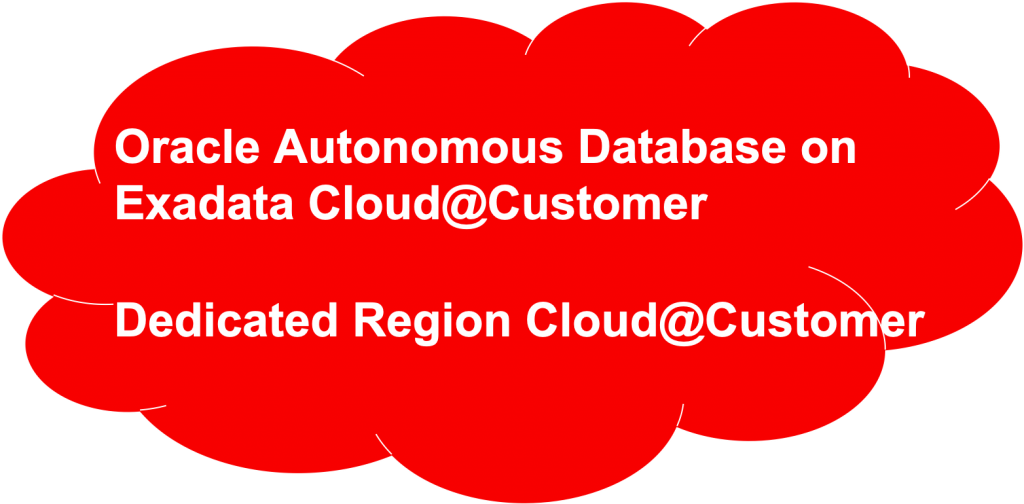

Oracle Cloud@Customer Brings Full Cloud Experience On-Premises

Oracle Autonomous Database on Exadata Cloud@Customer and Dedicated Region Cloud@Customer represent a radical approach to bringing cloud services on-premises. Our initial take is that substantial customers running mission-critical Oracle applications can cut costs by up to 40% with this offering. This strategy is the most ambitious attempt we’ve seen in the industry to bring a full public cloud experience to organizations’ premises.

Global 2000 customers running mission-critical, large-scale applications on Oracle Databases should assume that they will migrate to one of Oracle’s new Cloud@Customer offerings. Wikibon recommends that CxOs begin engaging with Oracle to conduct their TCO/ROI/IRR analyses now.

Breaking Analysis: Google Cloud Rides the Wave but Remains a Distant Third Place

Despite its faster growth in infrastructure-as-a-service relative to AWS and Azure, Google Cloud Platform remains a third wheel in the race for cloud dominance. Google’s Cloud Next online event begins July 14th and runs as a series of nine rolling sessions through early September. Ahead of that, we want to update you on our most […]